With great respect to one of my favorite television sitcoms, “Welcome to my semi-regular blog about ‘nothing’”! Or, depending on how you view it, perhaps it actually encompasses ‘everything’. Ultimately, as we progress through this tech journey, I think we’ll end up landing somewhere in between: a perpetual state of learning and head-scratching at the same time.

In my day job at Intel, as the Graphics Community Innovator Lead, I have the very cool responsibility of helping to seed, curate, and promote cutting-edge computer graphics experimentation from some of the smartest folks in the tech world today.

By night though, I get to dive into my passion for game and immersive story development. Ever since grade school, when I made it my youthful mission to conquer every Atari 2600 game ever released, I’ve been fascinated by what it takes to create alternate worlds.

At first, I fed my appetite for inventing alternate realities through filmmaking, producing and directing a handful of short films between 2005 and 2013. Still, the cinematic flatness and lack of immersion left me wanting more. So in 2014, I watched eagerly as Oculus and Google (with Cardboard) introduced their respective VR technologies. It was as if a shroud had lifted, and I realized this was the canvas on which I could weave the kind of interactive stories I had dreamed of in my youth.

So with my new creative canvas identified, I spent hundreds of hours plodding through Unity tutorials, exploring what it takes to build immersive VR experiences. In 2019, after several years of painstaking development on nights and weekends, I released my first VR title on Steam. No matter that by all metrics the game was a commercial flop; I felt sheer exhilaration that I had made it through the arduous process of creating and releasing an interactive dream world.

So with that background, it is my goal to provide readers with tips and code samples that can help them overcome many of the game development obstacles that tripped me up over the years. Additionally, I’ll venture into some of the interesting work I’m doing with Intel Graphics Innovators and provide my personal perspective on how I think it fits into the grand landscape of game development.

Right now, I’m heavily focused on AI and machine learning applications in gaming, so you may see a slight bias towards those areas in some of my initial blogs. Additionally, while I’m a reasonably skilled Unity developer, I’ve made it my goal this year to learn UE5, so I’ll devote some blogs to documenting that journey of trying to become proficient in multiple engines simultaneously.

Ok, since I’ve consumed much of this blog with a lot of ‘nothing’, let me wrap it up with some Unity code examples that demonstrate how to create editor menus and then populate them dynamically. One of my favorite features in Unity is the ability to customize editor menus, which enhances the overall development experience.

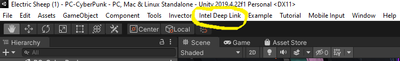

Below, I show an editor script which creates a new menu item (in this case ‘Intel Deep Link’).

When that ‘Intel Deep Link’ menu item is selected, a sub-menu option appears (in this case ‘OpenVINO Tools’) which, when selected, opens a separate control window within the Unity editor.
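As a rough illustration of the menu-plus-window pattern described above, here is a minimal sketch. The menu path uses the names from this post, but the class name `OpenVinoToolsWindow` and the placeholder window contents are my own assumptions for illustration, not the project's actual script.

```csharp
using UnityEditor;
using UnityEngine;

// Hypothetical class name. Place this file in a folder named "Editor"
// so Unity compiles it as editor-only code.
public class OpenVinoToolsWindow : EditorWindow
{
    // Adds a top-level "Intel Deep Link" menu with an "OpenVINO Tools" entry.
    [MenuItem("Intel Deep Link/OpenVINO Tools")]
    public static void ShowWindow()
    {
        // Opens the window, or focuses it if it is already open.
        GetWindow<OpenVinoToolsWindow>("OpenVINO Tools");
    }

    // Unity calls OnGUI whenever the window needs to be drawn.
    private void OnGUI()
    {
        // Placeholder content; the real controls would go here.
        GUILayout.Label("OpenVINO Tools", EditorStyles.boldLabel);
    }
}
```

The text before the slash in the `MenuItem` path becomes the top-level menu, and the text after it becomes the entry underneath, so one attribute is enough to create both.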

For this project, I wanted to provide the user the ability to target inference on the silicon of their choice, so I had to create a way to identify the compute units on the system, and then feed them into the Unity editor drop-down.

I show the entire script below (excuse the poor formatting). Since Unity doesn’t have an easy way to identify GPUs aside from the one it was launched on, I need to call a PowerShell command and return the values from the OS into a string array (‘targets’). Obviously, the return value of the PowerShell command needs to be parsed in order to populate the ‘targets’ array, which is taken care of within the ‘Split’ loop.
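To make the PowerShell step more concrete, here is a hedged sketch of one way to do it. The specific command (`Get-CimInstance Win32_VideoController`) is an assumption on my part and only lists display adapters; the class and method names are hypothetical, and the parsing is a simple split on line breaks rather than the exact loop from the script.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

// Hypothetical helper: queries the OS for device names via PowerShell.
public static class ComputeDeviceQuery
{
    // Assumed command; it prints one display adapter name per line.
    private const string Command =
        "Get-CimInstance Win32_VideoController | Select-Object -ExpandProperty Name";

    // Runs a PowerShell command and returns everything it wrote to stdout.
    public static string RunPowerShell(string command)
    {
        var startInfo = new ProcessStartInfo
        {
            FileName = "powershell.exe",
            Arguments = "-NoProfile -Command \"" + command + "\"",
            RedirectStandardOutput = true,
            UseShellExecute = false, // required to redirect output
            CreateNoWindow = true
        };
        using (var process = Process.Start(startInfo))
        {
            string output = process.StandardOutput.ReadToEnd();
            process.WaitForExit();
            return output;
        }
    }

    // Splits raw output into one trimmed entry per non-empty line.
    public static string[] ParseDeviceNames(string output)
    {
        var names = new List<string>();
        char[] lineBreaks = { '\r', '\n' };
        foreach (string line in output.Split(lineBreaks, StringSplitOptions.RemoveEmptyEntries))
        {
            string name = line.Trim();
            if (name.Length > 0)
            {
                names.Add(name);
            }
        }
        return names.ToArray();
    }

    // Convenience wrapper that produces a 'targets'-style array.
    public static string[] GetTargets()
    {
        return ParseDeviceNames(RunPowerShell(Command));
    }
}
```

Keeping the parsing separate from the process call makes it easy to check the split logic against a pasted sample of PowerShell output without launching a process each time.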

Since I allow the user to select the silicon that each inference network runs on, I store the index of their selection in the OpenVinoControl object (defined in a separate class), and use those values to launch the OpenVINO inference engine.
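Here is a small sketch of how a drop-down can feed a stored index. `InferenceTargetSelection` is a hypothetical stand-in for the OpenVinoControl object, whose real fields are not shown in this post, and `InferenceTargetGUI` is likewise an illustrative name.

```csharp
using UnityEditor;
using UnityEngine;

// Hypothetical stand-in for the OpenVinoControl object mentioned above.
public class InferenceTargetSelection
{
    // Index into the 'targets' array for the chosen device.
    public int deviceIndex;
}

// Editor-only helper; place in an "Editor" folder.
public static class InferenceTargetGUI
{
    // Call from an EditorWindow's OnGUI to draw the drop-down
    // and write the user's choice back into the selection object.
    public static void DrawDevicePopup(
        string label, InferenceTargetSelection selection, string[] targets)
    {
        if (targets == null || targets.Length == 0)
        {
            EditorGUILayout.HelpBox("No compute devices found.", MessageType.Warning);
            return;
        }

        // Guard against a stale index if the device list has shrunk.
        selection.deviceIndex = Mathf.Clamp(selection.deviceIndex, 0, targets.Length - 1);

        // Popup returns the index of the entry the user picked.
        selection.deviceIndex = EditorGUILayout.Popup(label, selection.deviceIndex, targets);
    }
}
```

Storing the index rather than the device name keeps the stored value tied to the ‘targets’ array, which is why the clamp is there in case the list changes between sessions.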

The code is definitely not optimized, but it does the job of creating menus, dynamically generating system parameters and displaying them, and storing user input preferences.

```csharp
using UnityEngine;
```

I hope this was useful, and I look forward to bringing you more rambling inferences in my next post.

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.

