Selecting SSDs plays a critical role in the long-term optimization of a multitiered distributed storage infrastructure. It is a task filled with nuance, as many factors warrant consideration, including cost, performance relative to workload, footprint, and the risk of data loss. A recently published study from Intel IT provides some much-needed clarity by comparing a low-cost option with a high-performance, high-endurance option in a VMware vSAN cache drive configuration. We specifically compared the following:

- 800 GB NAND Flash SSD with a SAS interface and an endurance of 3 drive writes per day (DWPD).

- 400 GB Intel® Optane™ SSD with a PCIe/NVMe interface and an endurance of 100 DWPD.

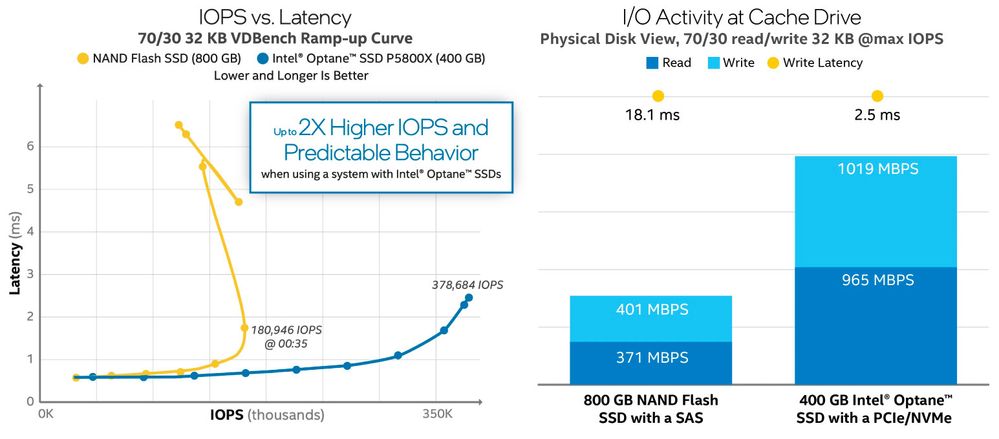

We used a common hardware configuration to test each cache SSD model under various I/O workloads, comparing throughput, execution times, and the predictability of I/O behavior during workload escalation. Figure 1 illustrates the comparative behavior of each cache SSD solution with a workload of 70 percent reads and 30 percent writes with 32 KB I/O sizes.

Under this test scenario, we observed the following:

- vSAN I/O performance for the 70/30 32 KB workload was 2X higher when using the 400 GB Intel Optane SSD as a cache drive.

- The vDisk preparation, which consisted of writing 9.6 TB to 64x 150 GB vDisks, completed 4X faster when using the 400 GB Intel Optane SSD (not shown in the charts).

- The performance behavior was predictable when using the Intel Optane SSD: the write latency increased linearly, reaching 2.5 ms as vSAN approached the max I/O rate. With the NAND Flash SSD, however, the write latency increased dramatically to 18 ms, causing erratic behavior.

- The de-stage rates, represented by the read activity in the physical disk view, scaled together with the VM I/O requests when using the Intel Optane SSD, whereas with the NAND Flash SSD the read throughput plateaued at ~370 MB/s, limiting vSAN I/O performance.

Intel Optane Media’s Write-In-Place Technology Adds Value

The almost 50/50 read and write activity indicates that vSAN’s write buffer is at a high utilization threshold, and the system is aggressively de-staging from cache to disks to avoid a cache-full condition.

Even though the Intel Optane SSD was half the size of the SAS drive, we observed significantly better vSAN I/O performance.

We can attribute this stark difference in performance and write I/O density to the Intel Optane media’s write-in-place technology. When data is written to a NAND Flash SSD, a program-erase (P/E) cycle is required. A P/E cycle involves writing a memory cell from the programmed state to the erased state, and back to the programmed state. P/E cycles are time consuming and directly affect drive performance. One of the main options to circumvent this NAND limitation is to increase the number of memory chips that are written in parallel. This implies that NAND Flash performance can increase when using a drive that can write concurrently to more chips.

Intel Optane media’s write-in-place technology does not require a P/E cycle when data is written to the media. This enables a significantly faster write I/O operation, and also increases the endurance of the media.

Risks Associated with Using Lower Endurance Cache Drives

Drive lifetime endurance is reported commercially as DWPD, the number of times the drive's full capacity can be written each day over a five-year period. It can also be presented as Terabytes Written (TBW) over the same time interval.

It is quite common to use endurance guidelines, such as VMware’s general SSD endurance guidelines for vSAN, to determine which SSD to use in the cache tier. In this vSAN guideline, we observe two endurance classes for All-Flash configurations: class C drives requiring ≥3650 TBW, and class D drives requiring ≥7300 TBW.

TBW over 5 years = drive size (TB) × DWPD × 365 days × 5 years
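As a rough illustration of the formula above, the following Python sketch computes the five-year TBW for the two cache drives compared in this article and checks the result against the class C and class D thresholds quoted earlier. The function names and the `gb_per_tb` parameter are hypothetical, and the unit convention is an assumption: with 1000 GB per TB the 800 GB drive works out to 4,380 TBW, while dividing by 1024 gives about 4,277 TBW.

```python
# Minimal sketch of the TBW formula; names and unit convention are assumptions.

# vSAN all-flash endurance class minimums quoted in the text (TBW over 5 years)
CLASS_MIN_TBW = {"C": 3650, "D": 7300}


def tbw_over_5_years(drive_size_gb: float, dwpd: float, gb_per_tb: int = 1000) -> float:
    """Return terabytes written over five years for a drive of the given size."""
    return drive_size_gb * dwpd * 365 * 5 / gb_per_tb


def endurance_classes_met(tbw: float) -> list:
    """Return the names of the quoted endurance classes whose minimum TBW is met."""
    return [name for name, minimum in CLASS_MIN_TBW.items() if tbw >= minimum]


if __name__ == "__main__":
    for label, size_gb, dwpd in [("800 GB NAND, 3 DWPD", 800, 3),
                                 ("400 GB Optane, 100 DWPD", 400, 100)]:
        tbw = tbw_over_5_years(size_gb, dwpd)
        print(f"{label}: {tbw:,.0f} TBW, meets classes {endurance_classes_met(tbw)}")
```

Under these assumptions the NAND drive clears the class C minimum but not class D, while the Intel Optane SSD exceeds both by a wide margin.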

These guidelines are useful in that they help prevent the selection of cache drives that are totally inadequate for a production environment, but they are guidelines and not prescriptions.

We should not ignore the risks that drive replacements can bring to a production environment. For instance, the entire vSAN disk group becomes unavailable if the cache drive wears out. All drives in this disk group must be removed and subsequently recreated, increasing exposure to data loss until this operation is complete.

It remains important to verify whether the selected drive can support the expected I/O workload. An 800 GB SAS 3 DWPD (approximately 4300 TBW) drive reaches its 5-year lifetime limit with a continuous write load of 28.44 MB/s.

(3 DWPD × 800 GB × 1024 MB/GB) ÷ (3600 seconds × 24 hours) = 28.44 MB/s

It is also important to consider the write I/O amplification in the drive evaluation. Because RAID 1 mirrors every write, the 28.44 MB/s value becomes 14.22 MB/s of VM write traffic when we account for the I/O amplification from the RAID 1 protection method.
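The arithmetic above can be reproduced with a short Python sketch. The function name and the `write_amplification` parameter are hypothetical. The factor of 2 for RAID 1 is inferred from the halving of 28.44 MB/s to 14.22 MB/s in the text, not taken from a vSAN specification.

```python
# Sketch of the sustained-write-rate arithmetic; names are illustrative only.

SECONDS_PER_DAY = 3600 * 24


def sustained_write_mb_s(drive_size_gb: float, dwpd: float,
                         write_amplification: float = 1.0) -> float:
    """Continuous write rate (MB/s) that uses up the rated endurance in 5 years.

    write_amplification divides the budget: 2.0 models RAID 1 (assumption
    inferred from the text), 1.0 means no amplification.
    """
    mb_per_day = dwpd * drive_size_gb * 1024  # GB -> MB, as in the text
    return mb_per_day / SECONDS_PER_DAY / write_amplification


if __name__ == "__main__":
    raw = sustained_write_mb_s(800, 3)
    raid1 = sustained_write_mb_s(800, 3, write_amplification=2)
    print(f"800 GB, 3 DWPD: {raw:.2f} MB/s raw, {raid1:.2f} MB/s with RAID 1")
    # prints: 800 GB, 3 DWPD: 28.44 MB/s raw, 14.22 MB/s with RAID 1
```

The same function applied to the 400 GB, 100 DWPD drive gives roughly 237 MB/s with RAID 1, which is the figure that drives the sizing comparison later in the article.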

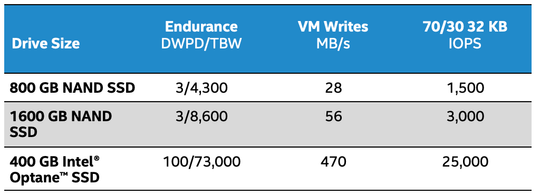

By expressing the drive endurance as a sustainable write rate, we can calculate the maximum supported I/O rate for different workload profiles. The table compares the endurance of 800 and 1600 GB NAND Flash SSDs and the 400 GB Intel Optane SSD to the I/O activity of a 70/30 32 KB load supported for five years, assuming RAID 1 protection.

For instance, if we were configuring a cluster to accommodate 48 VMs, each VM generating 500 IOPS of a 70/30 read/write 32 KB I/O load (a total of 24,000 IOPS), our workload would require a minimum of 16x 800 GB drives, 8x 1.6 TB drives, or 1x 400 GB Intel Optane SSD to satisfy endurance.
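The drive counts in this example can be checked with the sketch below. It assumes that only the 30 percent write share of the workload consumes endurance, that RAID 1 doubles the writes, and that the 1.6 TB NAND drive is also rated at 3 DWPD (the text does not state this explicitly). All function names are hypothetical.

```python
# Sketch of the endurance-based sizing example; assumptions noted in the lead-in.
import math

SECONDS_PER_DAY = 3600 * 24


def endurance_write_mb_s(size_gb: float, dwpd: float, raid_factor: int = 2) -> float:
    """Five-year sustainable VM write rate per drive, in MB/s."""
    return dwpd * size_gb * 1024 / SECONDS_PER_DAY / raid_factor


def workload_write_mb_s(total_iops: int, write_pct: int, io_kb: int) -> float:
    """Write throughput of the workload in MB/s."""
    return total_iops * write_pct / 100 * io_kb / 1024


def drives_needed(size_gb: float, dwpd: float, total_iops: int = 48 * 500,
                  write_pct: int = 30, io_kb: int = 32) -> int:
    """Minimum number of cache drives whose combined endurance covers the load."""
    demand = workload_write_mb_s(total_iops, write_pct, io_kb)
    return math.ceil(demand / endurance_write_mb_s(size_gb, dwpd))


if __name__ == "__main__":
    print(workload_write_mb_s(48 * 500, 30, 32))  # 225.0 MB/s of writes
    print(drives_needed(800, 3))     # 16
    print(drives_needed(1600, 3))    # 8 (3 DWPD assumed)
    print(drives_needed(400, 100))   # 1
```

This only sizes for endurance. Capacity, performance, and disk group layout would need to be considered separately.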

Thanks to its robust bandwidth, the Intel Optane SSD P5800X provides low latency and consistent I/O command completion times, making it particularly useful for critical applications. Higher bandwidth also yields more predictable performance, which can save businesses significant expense when running mission-critical applications with strict service-level agreements.

The significantly higher endurance of Intel Optane SSDs minimizes the risk of premature drive replacement. The peace of mind alone is worth the investment, and the higher endurance also reduces the operational costs associated with drive replacements.

For more information, read the Intel white paper, “Why Intel® Optane™ SSDs Are a Better Option than NAND Flash SSDs in the Storage Cache Tier.”

Flavio Fomin is a Cloud Solutions Architect at Intel in the Intel Optane Group where he creates solutions to optimize the cost and performance of hybrid clouds using Intel® Optane™ SSDs and Intel® Optane™ persistent memory.
