According to the 2019 CNCF survey, 84% of customers surveyed have container workloads in production, a dramatic increase from 18% in 2018. This growth is driven by customers' need to be more efficient and agile, and to operate in a hybrid context to meet the growing demands of the industry. The survey also shows that 41% of customers have a hybrid use case, up from 21% the previous year, and that 27% of surveyed customers use daily release cycles.

As customers adopt these processes, managing storage consistently becomes a greater challenge. Customers across varied industry verticals have been using OpenShift on AWS to meet their hybrid and agility needs. Red Hat now further enables these customers through a new open source solution: OpenShift Container Storage.

In this blog you will learn how to:

- Use AWS EC2 instances with Intel Xeon Scalable Processors for Red Hat® OpenShift® Data Foundation (previously OpenShift Container Storage)

- Configure and deploy containerized Ceph and NooBaa

- Validate the deployment of containerized Ceph and NooBaa

- Use the Multi-Cloud Gateway (MCG) to create a bucket and use it in an application

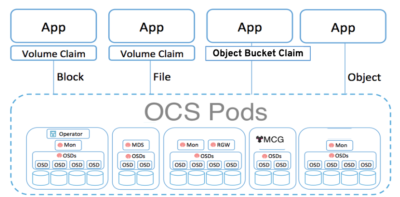

Here you will use OpenShift Container Platform (OCP) 4.x and the OCS Operator to deploy Ceph and the Multi-Cloud Gateway (MCG) as a persistent storage solution for OCP workloads. You can deploy OpenShift 4 by following the OpenShift 4 Deployment guide and then the instructions for AWS Installer-Provisioned Infrastructure (IPI).

Deploy your storage backend using the OCS Operator

Scale OCP cluster and add 3 new nodes

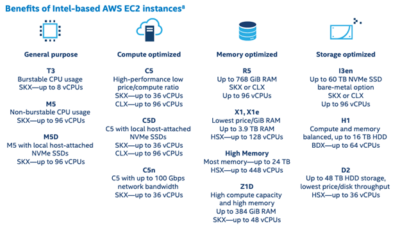

Given the growth in production use of containers and hybrid solutions, it is worth confirming that OpenShift on AWS takes advantage of multiple availability zones for resilience. You also have a choice of AWS instance types with Intel processors. Here are the AWS instances and the Intel Xeon processors associated with them.

(Please refer to our earlier blog for steps on changing the default instance selections.)

First validate that the OCP environment has 3 master and 3 worker nodes before increasing the cluster size by an additional 3 worker nodes for OCS resources. The NAME of your OCP nodes will be different from that shown below.

oc get nodes

Example output:

mshetty@mshetty-mac 4.7 % oc get nodes

NAME STATUS ROLES AGE VERSION

ip-10-0-135-50.us-west-2.compute.internal Ready master 57m v1.20.0+bafe72f

ip-10-0-142-229.us-west-2.compute.internal Ready worker 46m v1.20.0+bafe72f

ip-10-0-166-231.us-west-2.compute.internal Ready worker 44m v1.20.0+bafe72f

ip-10-0-190-115.us-west-2.compute.internal Ready master 55m v1.20.0+bafe72f

ip-10-0-211-28.us-west-2.compute.internal Ready worker 46m v1.20.0+bafe72f

ip-10-0-214-96.us-west-2.compute.internal Ready master 56m v1.20.0+bafe72f
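If you would rather not count the rows by eye, the check can be scripted. The following is a minimal sketch, not part of the original procedure: `count_role` is a hypothetical helper name, and it assumes the column layout shown above (STATUS in column 2, ROLES in column 3). Some OpenShift versions list several comma-separated roles for a node, so the helper splits on commas.

```shell
# count_role ROLE: reads `oc get nodes` output on stdin and prints how many
# nodes have STATUS exactly "Ready" and list ROLE in the ROLES column.
# (Hypothetical helper; assumes the default column order of `oc get nodes`.)
count_role() {
  awk -v role="$1" '
    $2 == "Ready" {
      n_roles = split($3, roles, ",")
      for (i = 1; i <= n_roles; i++) {
        if (roles[i] == role) { count++; break }
      }
    }
    END { print count + 0 }
  '
}

# Example against a live cluster (not executed here):
#   oc get nodes | count_role master
#   oc get nodes | count_role worker
```

For the starting cluster described above, both commands would be expected to print 3.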

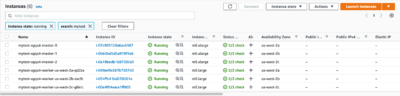

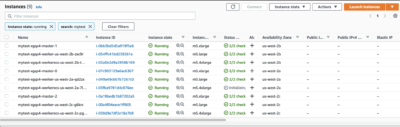

As the AWS console shows, 3 master nodes (m5.xlarge) and 3 worker nodes (m5.large) were created.

OpenShift 4 allows customers to scale clusters in the same manner they are used to in the cloud, by means of machinesets. Machinesets are a significant improvement over older versions of OpenShift. You are now going to add 3 more OCP compute nodes to the cluster using machinesets.

The following command shows the existing machinesets used to create the 3 worker nodes already in the cluster. There is a machineset for each AWS AZ in the region (us-west-2a, us-west-2b, us-west-2c, and us-west-2d in this example; the us-west-2d machineset has no replicas). The NAME of your machinesets will be different from that shown below.

mshetty@mshetty-mac 4.7 % oc get machinesets -n openshift-machine-api | grep -v infra

NAME DESIRED CURRENT READY AVAILABLE AGE

mytest-xgqs4-worker-us-west-2a 1 1 1 1 7h45m

mytest-xgqs4-worker-us-west-2b 1 1 1 1 7h45m

mytest-xgqs4-worker-us-west-2c 1 1 1 1 7h45m

mytest-xgqs4-worker-us-west-2d 0 0 7h45m

NOTE: Make sure you complete the next step, which finds your CLUSTERID and uses it to create the new machinesets.

mshetty@mshetty-mac 4.7 % CLUSTERID=$(oc get machineset -n openshift-machine-api -o jsonpath='{.items[0].metadata.labels.machine\.openshift\.io/cluster-api-cluster}')

mshetty@mshetty-mac 4.7 % echo $CLUSTERID

mshetty@mshetty-mac 4.7 % curl -s https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/misc/workerocs-machines.yaml | sed "s/CLUSTERID/$CLUSTERID/g" | oc apply -f -

Example output:

Mayurs-MacBook-Pro:4.3 mshetty$ curl -s https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/misc/workerocs-machines.yaml | sed "s/CLUSTERID/$CLUSTERID/g" | oc apply -f -

machineset.machine.openshift.io/cluster-28cf-t22gs-workerocs-us-west-2a created

machineset.machine.openshift.io/cluster-28cf-t22gs-workerocs-us-west-2b created

machineset.machine.openshift.io/cluster-28cf-t22gs-workerocs-us-west-2c created
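The pipeline above works by replacing the literal placeholder CLUSTERID in the downloaded manifest with the value of your shell variable. If `$CLUSTERID` happens to be empty, `sed` still succeeds and the resulting machinesets get malformed names. A small guard, sketched below, avoids that. `render_machinesets` is a hypothetical helper name, and the sketch assumes the cluster ID contains only characters that are safe inside a `sed` replacement (letters, digits, and hyphens, as in the examples here).

```shell
# render_machinesets CLUSTERID: reads a manifest template on stdin and
# replaces every literal CLUSTERID placeholder with the given value.
# (Hypothetical helper; assumes the ID has no "/" or "&" characters.)
render_machinesets() {
  # Refuse to render with an empty ID, which would silently produce
  # machinesets with malformed names.
  if [ -z "$1" ]; then
    echo "render_machinesets: CLUSTERID is empty" >&2
    return 1
  fi
  sed "s/CLUSTERID/$1/g"
}

# Example against a live cluster (not executed here):
#   curl -s https://raw.githubusercontent.com/red-hat-storage/ocs-training/master/misc/workerocs-machines.yaml \
#     | render_machinesets "$CLUSTERID" | oc apply -f -
```

Piping the rendered manifest to a pager first, instead of `oc apply`, is an easy way to review the machineset names before creating anything.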

Check that you have new machines created.

oc get machines -n openshift-machine-api | egrep 'NAME|workerocs'

The machines may remain in a Provisioning or Provisioned phase for some time, so repeat the command above until the PHASE column shows Running. The NAME of your machines will be different from that shown below.

Example output:

mshetty@mshetty-mac 4.7 % oc get machines -n openshift-machine-api | egrep 'NAME|workerocs'

NAME PHASE TYPE REGION ZONE AGE

mytest-xgqs4-workerocs-us-west-2a-7lrcz Provisioned m5.4xlarge us-west-2 us-west-2a 3m15s

mytest-xgqs4-workerocs-us-west-2b-tw48l Provisioned m5.4xlarge us-west-2 us-west-2b 3m15s

mytest-xgqs4-workerocs-us-west-2c-pgdhh Provisioned m5.4xlarge us-west-2 us-west-2c 3m15s

mshetty@mshetty-mac 4.7 % oc get machines -n openshift-machine-api | egrep 'NAME|workerocs'

NAME PHASE TYPE REGION ZONE AGE

mytest-xgqs4-workerocs-us-west-2a-7lrcz Running m5.4xlarge us-west-2 us-west-2a 6m38s

mytest-xgqs4-workerocs-us-west-2b-tw48l Running m5.4xlarge us-west-2 us-west-2b 6m38s

mytest-xgqs4-workerocs-us-west-2c-pgdhh Running m5.4xlarge us-west-2 us-west-2c 6m38s
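Rather than re-running the command by hand, you could poll until every new machine reports Running. This is a minimal sketch under the assumption that PHASE is the second column, as in the output above; `machines_running` is a hypothetical helper name and the 30-second interval is arbitrary.

```shell
# machines_running PATTERN: reads `oc get machines` output on stdin and
# succeeds only if at least one machine name matches PATTERN and every
# matching machine reports Running in the PHASE column (column 2).
# (Hypothetical helper; assumes the default column order.)
machines_running() {
  awk -v pat="$1" '
    $1 ~ pat { total++; if ($2 == "Running") running++ }
    END { exit !(total > 0 && running == total) }
  '
}

# Example polling loop against a live cluster (not executed here):
#   until oc get machines -n openshift-machine-api | machines_running workerocs; do
#     sleep 30
#   done
```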

You can see that the OCS worker machines use the AWS EC2 instance type m5.4xlarge. This instance type follows our recommended sizing for OCS: 16 vCPU and 64 GB RAM. These instances are based on Intel Xeon Scalable processors, which benefit from Ceph optimizations developed over multiple years of joint Intel and Red Hat enablement work.

Next, check whether the new machines have been added to the OCP cluster.

watch "oc get machinesets -n openshift-machine-api | egrep 'NAME|workerocs'"

This step could take more than 5 minutes. Before you proceed, the output needs to look like the example below: every new OCS worker machineset should show a number (1 in this case) in both the READY and AVAILABLE columns. The NAME of your machinesets will be different from that shown below.

mshetty@mshetty-mac 4.7 % oc get machinesets -n openshift-machine-api | egrep 'NAME|workerocs'

NAME DESIRED CURRENT READY AVAILABLE AGE

mytest-xgqs4-workerocs-us-west-2a 1 1 1 1 22m

mytest-xgqs4-workerocs-us-west-2b 1 1 1 1 22m

mytest-xgqs4-workerocs-us-west-2c 1 1 1 1 22m
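The same readiness condition can be expressed as a script that names the machinesets still being waited on. This is a sketch, not an official check: `machinesets_ready` is a hypothetical helper name, and it relies on the column order shown above. While a machineset is still coming up, the READY and AVAILABLE columns are blank, so the AGE value shifts into their position and the comparison fails as intended.

```shell
# machinesets_ready PATTERN: reads `oc get machinesets` output on stdin.
# Prints the name of every matching machineset with DESIRED > 0 whose
# READY or AVAILABLE value (columns 4 and 5) does not equal DESIRED
# (column 2), and succeeds only when nothing is printed.
# (Hypothetical helper; assumes the default column order.)
machinesets_ready() {
  awk -v pat="$1" '
    $1 ~ pat && $2 + 0 > 0 && ($4 != $2 || $5 != $2) { print $1; pending++ }
    END { exit (pending > 0) }
  '
}

# Example against a live cluster (not executed here):
#   oc get machinesets -n openshift-machine-api | machinesets_ready workerocs
```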

Now check that you have 3 new OCP worker nodes. The NAME of your OCP nodes will be different from that shown below.

oc get nodes -l node-role.kubernetes.io/worker

Example output:

mshetty@mshetty-mac 4.7 % oc get nodes -l node-role.kubernetes.io/worker

NAME STATUS ROLES AGE VERSION

ip-10-0-131-134.us-west-2.compute.internal Ready worker 20m v1.20.0+bafe72f

ip-10-0-142-229.us-west-2.compute.internal Ready worker 9h v1.20.0+bafe72f

ip-10-0-166-231.us-west-2.compute.internal Ready worker 9h v1.20.0+bafe72f

ip-10-0-176-120.us-west-2.compute.internal Ready worker 20m v1.20.0+bafe72f

ip-10-0-202-68.us-west-2.compute.internal Ready worker 20m v1.20.0+bafe72f

ip-10-0-211-28.us-west-2.compute.internal Ready worker 9h v1.20.0+bafe72f

Installing the OCS Operator

In this section you will use three of the OCP 4 worker nodes to deploy OCS 4 using the OCS Operator in OperatorHub.

Prerequisites:

You must create a namespace called openshift-storage as follows:

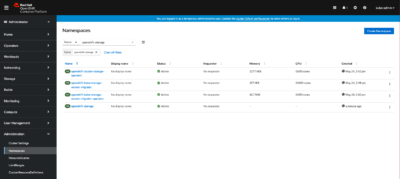

- Click Administration → Namespaces in the left pane of the OpenShift Web Console.

- Click Create Namespace.

- In the Create Namespace dialog box, enter openshift-storage for Name and openshift.io/cluster-monitoring=true for Labels. This label is required to get the dashboards.

- Select the No restrictions option for Default Network Policy.

- Click Create.
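If you prefer the command line to the web console, the same namespace and label can be described in a manifest. The sketch below is an alternative to the console steps, not something the original procedure uses; `namespace_manifest` is a hypothetical helper name. It creates no NetworkPolicy objects, which is assumed here to correspond to the No restrictions choice in the dialog.

```shell
# namespace_manifest: prints a Namespace manifest for openshift-storage with
# the cluster-monitoring label described in the console steps.
# (Hypothetical helper; the manifest itself is a standard v1 Namespace.)
namespace_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-storage
  labels:
    openshift.io/cluster-monitoring: "true"
EOF
}

# Example against a live cluster (not executed here):
#   namespace_manifest | oc apply -f -
```

The label value is quoted so that YAML treats it as the string "true" rather than a boolean, since Kubernetes label values must be strings.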

Procedure to install OpenShift Container Storage using the Red Hat OpenShift Container Platform (OCP) OperatorHub on Amazon Web Services (AWS):

- Log in to the Red Hat OpenShift Container Platform Web Console as user kubeadmin.

- Click Operators → OperatorHub.

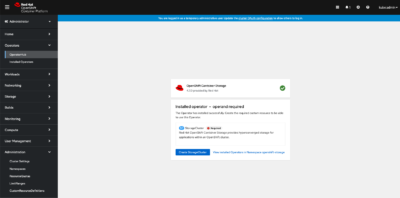

- Search for “OpenShift Container Storage” from the list of operators and click on it.

- On the OpenShift Container Storage Operator page, click Install.

On the Create Operator Subscription page, the Installation Mode, Update Channel, and Approval Strategy options are available.

- Select a specific namespace on the cluster for the Installation Mode option. Select the openshift-storage namespace from the drop-down menu.

- The stable-4.7 channel is selected by default for the Update Channel option.

- Select an Approval Strategy:

  - Automatic specifies that you want OpenShift Container Platform to upgrade OpenShift Container Storage automatically.

  - Manual specifies that you want to have control to upgrade OpenShift Container Storage manually.

- Click Subscribe.

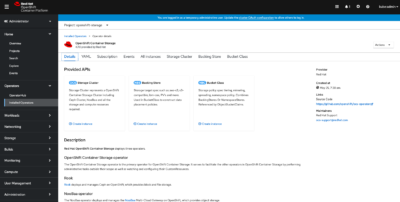

The Installed Operators page is displayed with the status of the operator.

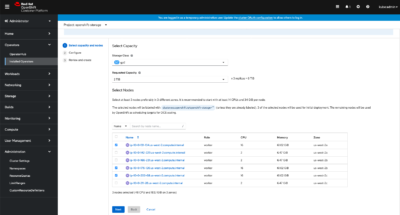

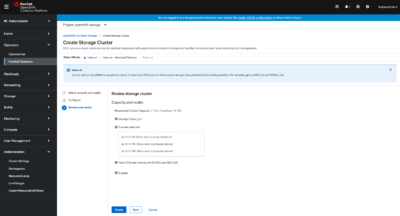

Click the “Create Storage Cluster” button, which takes you to the following page.

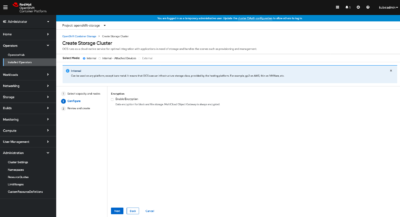

After clicking on the “Create” button, wait for the status to change from Progressing to Ready.

Verify the OCS service

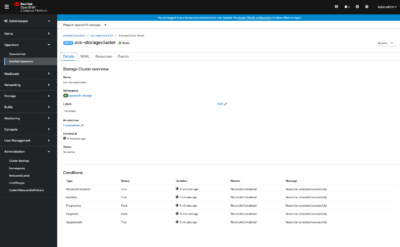

Click on OpenShift Container Storage Operator to get to the OCS configuration screen.

At the top of the OCS configuration screen, scroll over to the Storage Cluster tab and make sure the Status is Ready.

*IMPORTANT*

You can create application pods on either OpenShift Container Storage nodes or non-OpenShift Container Storage nodes and run your applications. However, it is recommended that you apply a taint to the storage nodes to mark them for exclusive OpenShift Container Storage use, and that you not run your application pods on these nodes. Because the tainted OpenShift nodes are dedicated to storage pods, they will only require an OpenShift Container Storage subscription and not an OpenShift subscription.

To add a taint to a node, use the following command (the node names below are examples; substitute the names of your own OCS nodes):

Mayurs-MacBook-Pro:4.3 mshetty$ oc adm taint nodes ip-10-0-140-7.ec2.internal node.ocs.openshift.io/storage=true:NoSchedule

node/ip-10-0-140-7.ec2.internal tainted

Mayurs-MacBook-Pro:4.3 mshetty$ oc adm taint nodes ip-10-0-153-95.ec2.internal node.ocs.openshift.io/storage=true:NoSchedule

node/ip-10-0-153-95.ec2.internal tainted

Mayurs-MacBook-Pro:4.3 mshetty$ oc adm taint nodes ip-10-0-169-227.ec2.internal node.ocs.openshift.io/storage=true:NoSchedule

node/ip-10-0-169-227.ec2.internal tainted
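With three or more storage nodes, a small loop saves retyping the taint. The sketch below wraps the same `oc adm taint` command shown above. `taint_storage_nodes` and the `OC` override variable are hypothetical names introduced for illustration; setting `OC=echo` prints the commands instead of running them, which is a cheap way to review them first.

```shell
# taint_storage_nodes NODE...: applies the OCS storage taint to each node.
# OC is a hypothetical override for the client binary; with OC=echo the
# commands are printed (minus the binary name) instead of executed.
taint_storage_nodes() {
  for node in "$@"; do
    "${OC:-oc}" adm taint nodes "$node" node.ocs.openshift.io/storage=true:NoSchedule
  done
}

# Example (not executed here), previewing and then applying:
#   OC=echo taint_storage_nodes ip-10-0-140-7.ec2.internal ip-10-0-153-95.ec2.internal
#   taint_storage_nodes ip-10-0-140-7.ec2.internal ip-10-0-153-95.ec2.internal
```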

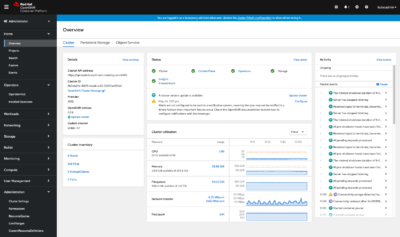

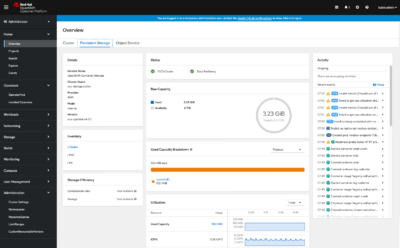

Getting to know the Storage Dashboard

You can now also check the status of your storage cluster with the OCS-specific dashboards that are included in your OpenShift Web Console. To reach them, click Home in the left navigation bar, select Dashboards, and then click Persistent Storage in the top navigation bar of the content page.

Once this is all healthy, you will be able to use the three new StorageClasses created during the OCS 4 Install:

- ocs-storagecluster-ceph-rbd

- ocs-storagecluster-cephfs

- openshift-storage.noobaa.io

You can see these three StorageClasses in the OpenShift Web Console by expanding the Storage menu in the left navigation bar and selecting Storage Classes. You can also run the command below:

mshetty@mshetty-mac 4.7 % oc -n openshift-storage get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer true 19h

gp2-csi ebs.csi.aws.com Delete WaitForFirstConsumer true 19h

ocs-storagecluster-ceph-rbd openshift-storage.rbd.csi.ceph.com Delete Immediate true 147m

ocs-storagecluster-cephfs openshift-storage.cephfs.csi.ceph.com Delete Immediate true 147m

openshift-storage.noobaa.io openshift-storage.noobaa.io/obc Delete Immediate false 143m


Please make sure the three storage classes are available in your cluster before proceeding.
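This check can also be scripted. The following sketch reads the storage class listing and reports which of the three OCS classes named above are absent. `missing_storage_classes` is a hypothetical helper name, and the sketch assumes the class name is the first column of the output.

```shell
# missing_storage_classes: reads `oc get sc` output on stdin and prints each
# of the three OCS storage classes that is absent. Prints nothing and
# succeeds when all three are present.
# (Hypothetical helper; assumes NAME is the first column.)
missing_storage_classes() {
  awk '
    { seen[$1] = 1 }
    END {
      n = split("ocs-storagecluster-ceph-rbd ocs-storagecluster-cephfs openshift-storage.noobaa.io", want, " ")
      for (i = 1; i <= n; i++) {
        if (!(want[i] in seen)) { print want[i]; missing++ }
      }
      exit (missing > 0)
    }
  '
}

# Example against a live cluster (not executed here):
#   oc get sc | missing_storage_classes && echo "all OCS storage classes present"
```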

(Note: The NooBaa pod uses the ocs-storagecluster-ceph-rbd storage class to create a PVC that is mounted in its database container.)

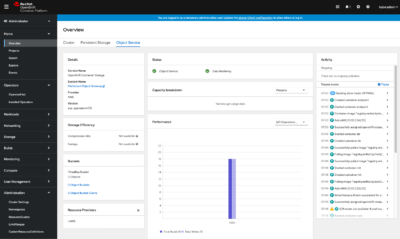

You can access the NooBaa Dashboard by clicking “Multicloud Object Gateway” in the Object Services tab of the OpenShift Console.

Conclusion

In this post we have seen how to add AWS instances with Intel Xeon Scalable Processors for Red Hat® OpenShift® Data Foundation—previously Red Hat OpenShift Container Storage—for persistent software-defined storage integrated with and optimized for Red Hat OpenShift Container Platform. We also saw how to use the OpenShift administrator console for dynamic, stateful, and highly available container-native storage that can be provisioned and de-provisioned on demand.

Reference

- https://red-hat-storage.github.io/ocs-training/ocs.html

- Deploying OpenShift Container Storage using Amazon Web Services

- Agile storage for the hybrid cloud

- Add speed and agility to application development

Written by Mayur Shetty, Principal Solution Architect, Red Hat and Raghu Moorthy, Principal Engineer, Intel Inc.
