Introduction

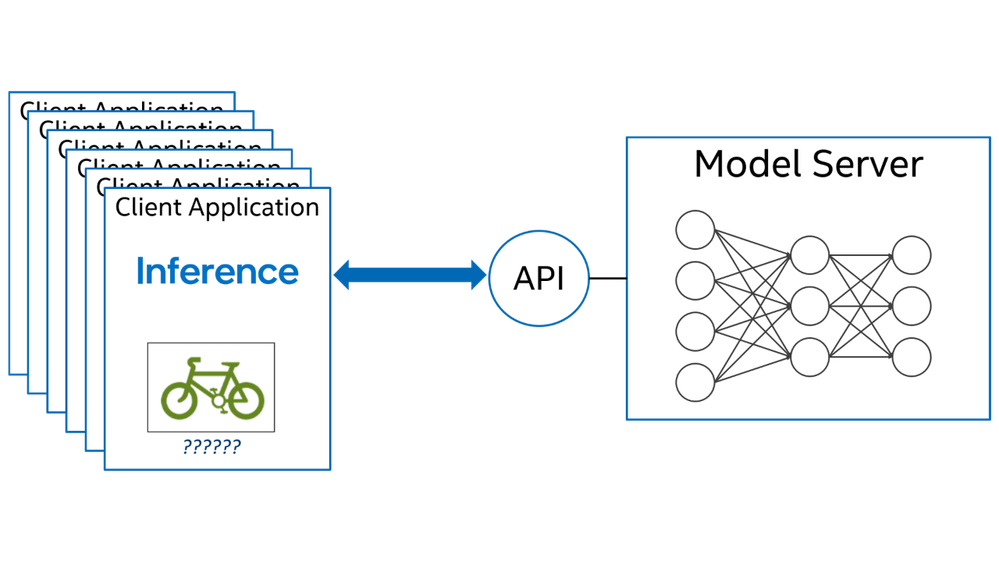

As more applications begin to leverage deep learning for tasks like Natural Language Processing (NLP), deploying inference in production often requires combining multiple models into a pipeline.

Computationally constrained edge devices cannot always run inference within the latency a use case requires, so a cloud inference service with more processing power may be needed. Even then, network load and added latency can be an obstacle to deploying a full inference pipeline when it is composed of multiple models that clients access separately.

In this article, we will show how to use OpenVINO™ Model Server to get the most out of your deep learning assets. We will describe how to run a complex Optical Character Recognition pipeline that detects and recognizes text in images with a single request. We will also explain why this can improve both user experience and performance.

What is OCR?

Optical Character Recognition (OCR) is the task of detecting and recognizing text in an image. OCR is a challenging task, especially with varying backgrounds, lighting, fonts, and distortions in images. Recently, deep learning models have been developed to process both structured and unstructured text. In this article, we will focus on leveraging two open-source models – EAST and CRNN – to detect and recognize unstructured text from natural scene images.

What is OpenVINO™ Model Server?

OpenVINO™ Model Server (OVMS) is a scalable, high-performance solution for serving deep learning models optimized for Intel® architectures. The server provides an inference service via gRPC or REST API, which makes it easy to deploy new algorithms and AI experiments using any model trained in a framework supported by the OpenVINO™ inference backend. If you want to focus on your AI application rather than on the serving solution, OVMS may be the right choice. You can find the complete feature list in the project’s GitHub repository.

Overcoming the latency overhead with a single model request. Why would you want to create pipelines of AI models? And what are pipelines?

AI models are usually very specialized: each one addresses a specific task in an optimized manner. To solve broader problems, the developer therefore has to combine multiple models. When the models are accessed through the model server API, each inference request comes with some latency overhead, namely the time needed to transfer data over the network from one place to another. Every additional request adds more latency. What if a complete task could be performed with just one request? This is where pipelines (or DAGs, as described below) come in handy. OpenVINO™ Model Server allows you to define pipelines that combine multiple models, and the operations between them, within one server instance. This way we avoid the additional network latency by relying on local communication between models on the server side.
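To make the overhead concrete, here is a back-of-the-envelope sketch in Python. All numbers are hypothetical and chosen only for illustration, and the model assumes that recognitions run one after another in both setups, so the only difference is the number of network round trips.

```python
def client_side_latency_ms(network_ms, detect_ms, recognize_ms, detections):
    """One request for detection, then one request per detected word."""
    requests = 1 + detections
    return requests * network_ms + detect_ms + detections * recognize_ms


def pipeline_latency_ms(network_ms, detect_ms, recognize_ms, detections):
    """A single request; models exchange data inside the server."""
    return network_ms + detect_ms + detections * recognize_ms


# Hypothetical numbers, not measurements.
args = dict(network_ms=20, detect_ms=50, recognize_ms=5, detections=10)
separate = client_side_latency_ms(**args)
single = pipeline_latency_ms(**args)
print(f"separate requests: {separate} ms, single request: {single} ms")
```

With these assumed values, the client-side approach pays the network cost 11 times and the pipeline pays it once.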

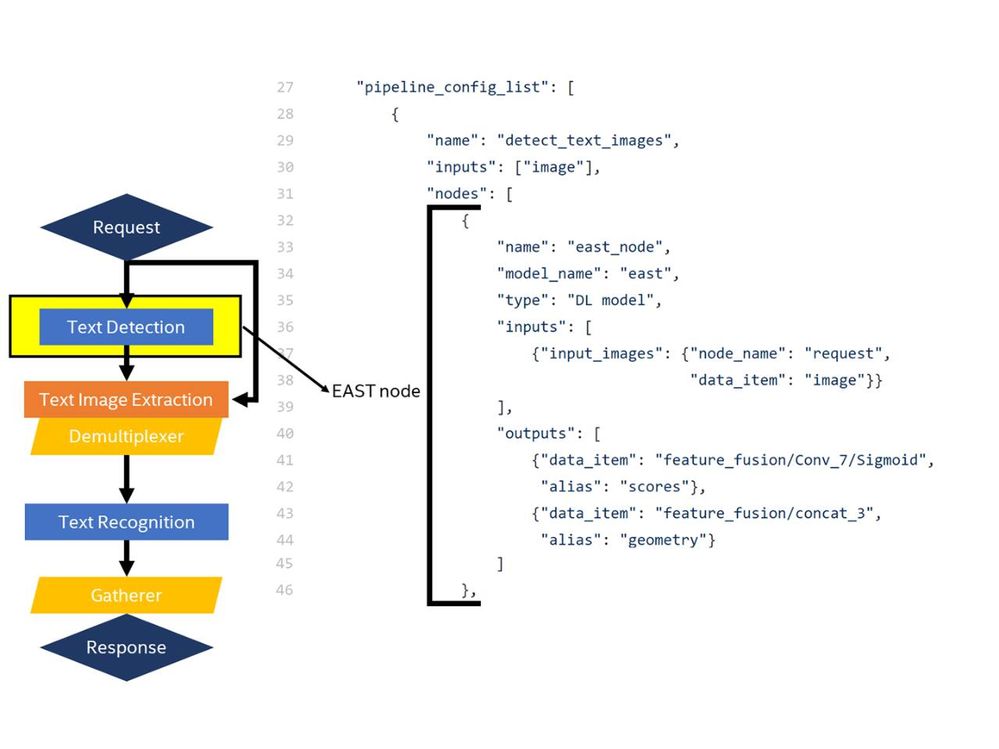

What is Directed Acyclic Graph Scheduler (DAG) and how can it glue together our pipeline of AI models?

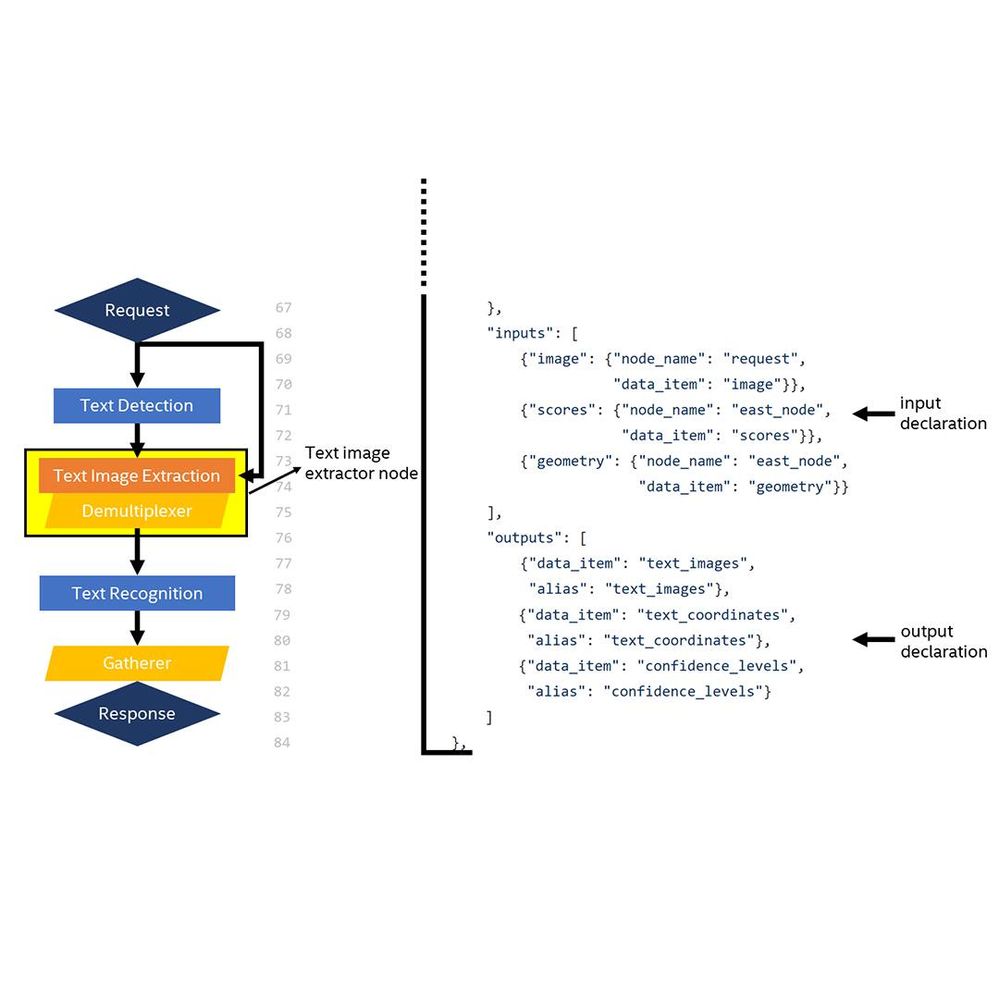

Directed Acyclic Graph Scheduler is a model server feature that controls the execution of an entire graph of interconnected models defined in the OpenVINO Model Server configuration. A DAG consists of executable nodes (representing models or pre/post processing operations) and connections between them. These connections cannot form cycles, hence the “acyclic” property. Each graph has one entry node, which corresponds to the received inference request, and one exit node, which is linked to the response to be generated. The other nodes represent either an underlying DL model or a custom processing operation defined in a separate shared library. Each request creates a separate event loop that controls the graph flow from source to sink by scheduling inferences on the deep learning models.
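The idea of ordering nodes by their dependencies can be sketched with Python's standard `graphlib` module (Python 3.9+). This is only an illustration of DAG ordering and cycle rejection, not how the model server is implemented; the node names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical OCR graph: each node maps to the set of nodes it depends on.
ocr_graph = {
    "request": set(),
    "east": {"request"},
    "extract_text_images": {"request", "east"},
    "crnn": {"extract_text_images"},
    "response": {"crnn", "extract_text_images"},
}


def execution_order(graph):
    """Return one valid node order, from the entry node to the exit node."""
    return list(TopologicalSorter(graph).static_order())


order = execution_order(ocr_graph)
print(order)

# Making "east" depend on "crnn" closes a loop, which a DAG must reject.
cyclic = dict(ocr_graph, east={"request", "crnn"})
try:
    execution_order(cyclic)
    has_cycle = False
except CycleError:
    has_cycle = True
print("cycle detected:", has_cycle)
```

A node becomes runnable only after everything it depends on has produced its output, which is why the entry node comes first and the exit node last.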

Custom pre/postprocessing steps between models – why should we care?

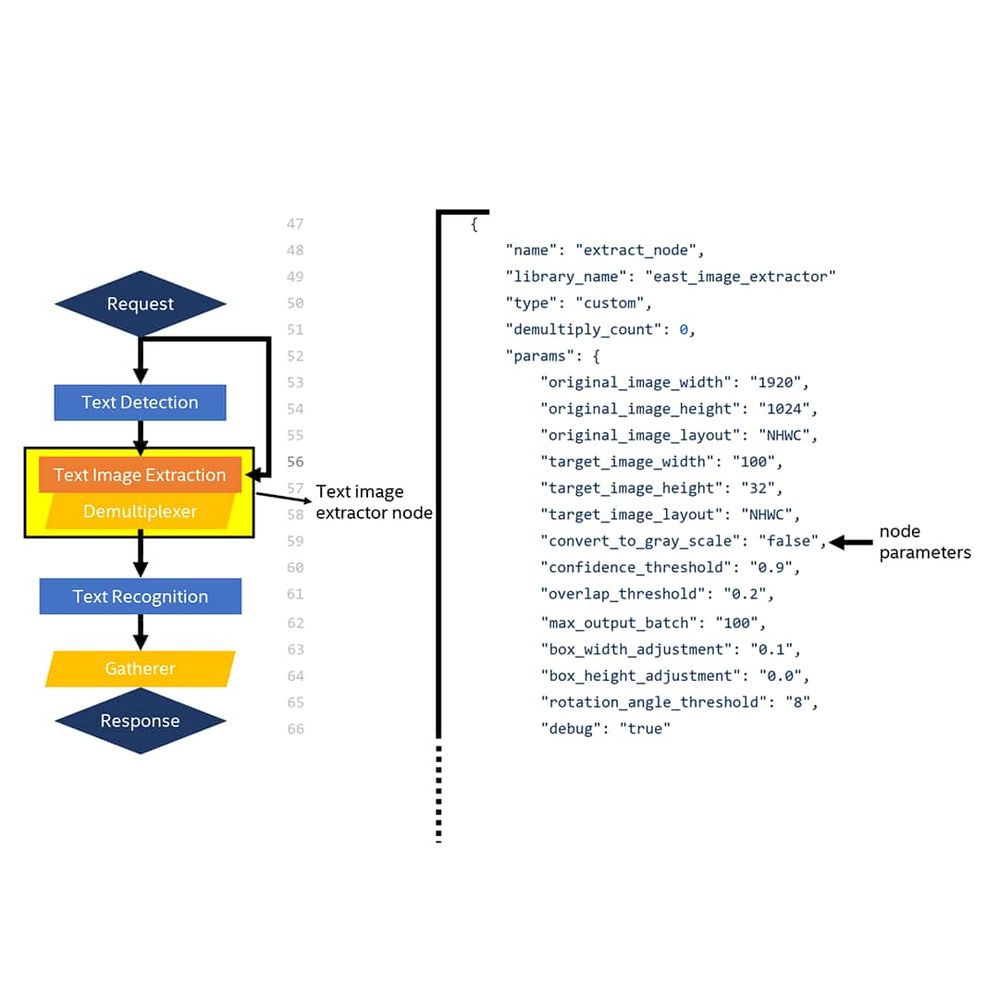

Deep learning models consume input tensors which represent input data and produce output tensors representing the inference results. Each tensor is described by a number of properties: shape, precision, and layout. In many cases the output tensor of one model may not match the input tensor of another. For example, what if one model outputs an image with NCHW layout but the following model needs it in NHWC? What if a model outputs detected object coordinates but what is needed by the following node are object images cropped out of the original image?
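As an illustration of the layout mismatch, the following sketch reorders a tiny tensor from NCHW to NHWC using plain nested lists, so that it runs without any dependencies. In practice an array library would do this with a single transpose call; the helper names here are hypothetical.

```python
def nchw_to_nhwc(tensor):
    """Reorder a nested-list tensor from [N, C, H, W] to [N, H, W, C]."""
    channels = len(tensor[0])
    height = len(tensor[0][0])
    width = len(tensor[0][0][0])
    return [
        [
            [[image[c][h][w] for c in range(channels)] for w in range(width)]
            for h in range(height)
        ]
        for image in tensor
    ]


def shape(tensor):
    """Return the dimensions of a regular nested list."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return dims


# One 2x2 image with 3 channels; channel c is filled with the value c * 10.
nchw = [[[[c * 10] * 2 for _ in range(2)] for c in range(3)]]
nhwc = nchw_to_nhwc(nchw)
print(shape(nchw), "->", shape(nhwc))
print("first pixel, all channels:", nhwc[0][0][0])
```

The data is identical in both layouts; only the order in which the values are stored changes, which is exactly the kind of step a node between two models may need to perform.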

The solution to this problem is so-called “custom nodes” that you can place between “normal” models in the DAG. Custom nodes are user-defined processing operations implemented as shared libraries. Users can implement such a shared library against the predefined C API to perform any data transformation, using third-party libraries such as OpenCV where needed. Sample custom node shared libraries can be found in the OVMS GitHub repository.

In our OCR example, we need to understand the output of the EAST model and how to decode it, so that we can extract the bounding box information and crop the text images out of the original image. The EAST model has 2 outputs: geometry and scores. Scores have the shape [1,1,H’,W’], meaning that for every height and width position we have 1 value: the confidence level. H’ and W’ are always 4x smaller than the original input image’s height and width. The geometry output is slightly more complicated, because for each H’/W’ position it contains 5 values: the distances to the nearest min x, min y, max x, and max y, plus an angle. The postprocessing node’s job is to filter the positions by a confidence threshold, calculate the surrounding bounding boxes, and apply non-maximum suppression to remove overlapping boxes. After this filtering, we end up with N boxes, each describing a specific word detection. Since we need text images as the output, the node rotates the text using the angle information, and then crops and resizes it to the input shape expected by the CRNN model.
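The filtering and suppression steps can be sketched in plain Python. This is a simplified illustration, not the code of the actual custom node: it assumes the first four geometry channels are ordered as listed above, ignores the angle (boxes are treated as axis-aligned), and uses hypothetical threshold values.

```python
def decode_boxes(scores, geometry, threshold=0.5, stride=4):
    """Turn per-cell scores and distances into (x1, y1, x2, y2, score) boxes."""
    boxes = []
    for y, row in enumerate(scores):
        for x, score in enumerate(row):
            if score < threshold:
                continue
            cx, cy = x * stride, y * stride  # cell position in input pixels
            d_min_x, d_min_y, d_max_x, d_max_y = (
                geometry[c][y][x] for c in range(4)
            )
            boxes.append(
                (cx - d_min_x, cy - d_min_y, cx + d_max_x, cy + d_max_y, score)
            )
    return boxes


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, iou_threshold=0.3):
    """Keep the highest-scoring boxes, dropping those that overlap a kept one."""
    kept = []
    for box in sorted(boxes, key=lambda b: b[4], reverse=True):
        if all(iou(box, k) <= iou_threshold for k in kept):
            kept.append(box)
    return kept


# Toy 2x4 score map; geometry has 5 channels (4 distances + unused angle).
scores = [[0.9, 0.8, 0.1, 0.1], [0.1, 0.1, 0.1, 0.95]]
geometry = [[[v] * 4 for _ in range(2)] for v in (2, 2, 10, 6, 0)]

candidates = decode_boxes(scores, geometry)
kept = non_max_suppression(candidates)
print(len(candidates), "candidates ->", len(kept), "boxes after NMS")
```

In this toy input, two neighbouring cells describe nearly the same region, so suppression keeps only the more confident of the two. Rotation, cropping and resizing, which the real node also performs, are left out.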

Preprocessing optimization

In the 2021.4 release, we added optimizations to speed up DAG processing, including the OCR pipeline. One is an option to change the input/output layout of a model. It avoids the data transposition that the client application might otherwise have to perform to convert the original image layout to the one the model expects. Since the custom image extractor node internally uses OpenCV for image operations, it prefers data in NHWC layout; otherwise a transposition must be performed. By switching to NHWC layout we can eliminate this time-consuming step. Furthermore, we added binary input format support for endpoints accepting NHWC layout. With that feature, you can delegate image decompression to the server and reduce bandwidth usage.

Demultiplexing the request. Feature for processing multiple model results separately.

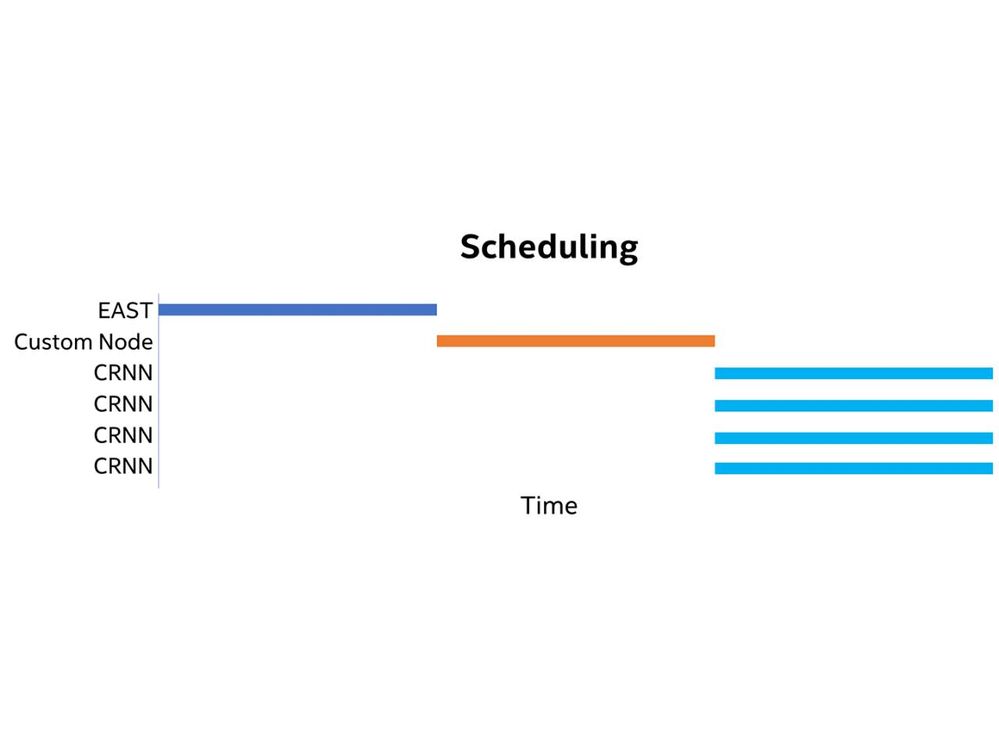

In some cases, models are used to detect objects in an image. What if this is only the first stage, and the detections need to be processed further by yet another model? One could handle this in the application: after collecting the initial detection results, send N separate requests (each representing an individual detection) to the following model and collect the results in the client application again. But as mentioned earlier, that would add a lot to the overall latency. The DAG demultiplexing feature allows you to split the N detections and process them in the following stages in separate branches of the same DAG. You can also decide at what point the results should be collected, or leave this to the DAG itself, which will gather them before sending a response. Each DAG node may influence the shape of the data, as explained in the documentation.
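The split-and-collect pattern can be sketched in a few lines of Python. This is a conceptual illustration only: `recognize` is a hypothetical stand-in for the next model, the strings stand in for cropped images, and a thread pool plays the role of the server-side branches.

```python
from concurrent.futures import ThreadPoolExecutor


def demultiplex(batch):
    """Split one batched result of N items into N single-item branches."""
    return [[item] for item in batch]


def gather(branches):
    """Collect the per-branch results back into one batch."""
    return [item for branch in branches for item in branch]


def recognize(branch):
    """Hypothetical stand-in for the next model in the pipeline."""
    return [crop.upper() for crop in branch]


detections = ["open", "vino", "model", "server"]  # N = 4 placeholder crops
with ThreadPoolExecutor() as pool:
    # map() returns results in input order, so gathering keeps them aligned.
    results = gather(pool.map(recognize, demultiplex(detections)))
print(results)
```

Demultiplexing adds a leading dimension of size N that each branch sees as a single item, and gathering restores it, which is why every node in between can change the shape of the data.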

How to run a simple optical character recognition application using OVMS

Below I will explain the flow briefly; the full OCR documentation can be found in ref. 1.

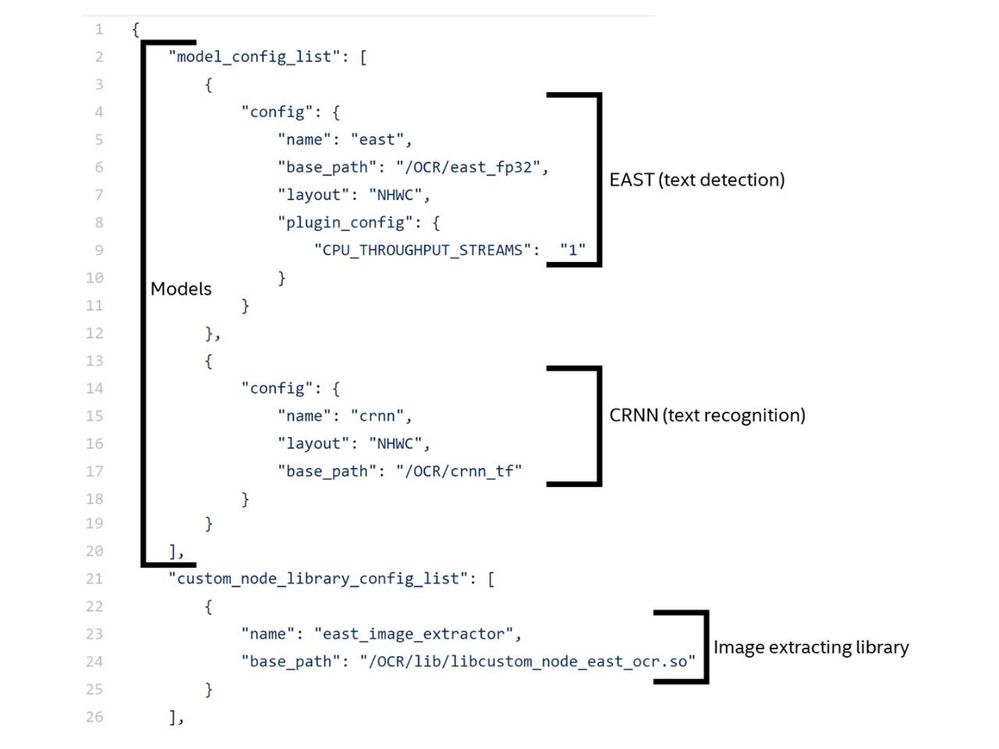

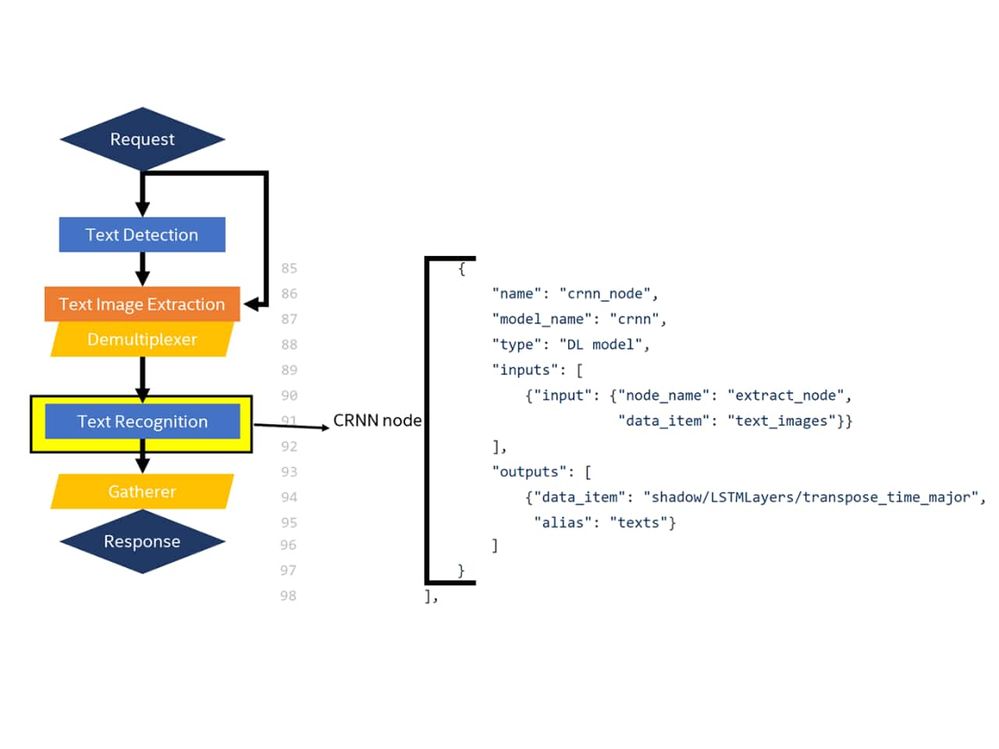

Prepare required models

We need 2 models for this task: a text detection model and a text recognition model. For text detection, we will use the EAST model, which takes an image as input and outputs text box coordinates along with angle information in case the text is rotated. For text recognition, we will use the CRNN model, which takes an image containing a single word as input and outputs the probabilities for each of the possible 26 letters in 37 positions. Both models can be downloaded and converted to IR format (the format OpenVINO™ requires) using the detailed instructions in ref. 1.
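The article does not describe how the per-position probabilities become a word. One common approach for CRNN-style models is greedy CTC decoding: pick the most likely class at each position, collapse consecutive repeats, and drop a special blank class. The sketch below assumes that convention; the alphabet, its ordering and the blank symbol are hypothetical and may differ from the model used here.

```python
import string

BLANK = "-"
ALPHABET = BLANK + string.ascii_lowercase  # hypothetical class order


def greedy_decode(probabilities):
    """Decode a list of per-position probability rows into a string."""
    best = [max(range(len(row)), key=row.__getitem__) for row in probabilities]
    chars = []
    previous = None
    for index in best:
        # Emit a character only when the class changes and is not the blank.
        if index != previous and ALPHABET[index] != BLANK:
            chars.append(ALPHABET[index])
        previous = index
    return "".join(chars)


def one_hot(char):
    """Build a fake probability row that is certain about one character."""
    return [1.0 if c == char else 0.0 for c in ALPHABET]


frames = [one_hot(c) for c in "tt-e-xx-t-"]
decoded = greedy_decode(frames)
print(decoded)
```

The blank class is what lets the decoder tell a genuinely doubled letter (separated by a blank) from one letter spread over several positions.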

Prepare postprocessing node library

Next, we need a custom DAG node. A sample custom node for this use case is available in the GitHub repository. Build scripts can be used to compile this shared library.

Deploy OVMS with OCR pipeline

To start OVMS with the previously prepared models and the custom node shared library, we need a configuration file. This is where all models, node libraries, and pipelines are defined. Pull the OVMS Docker image from DockerHub and start the server. An example Python client is available to send a request with an image.

Performance boost after off-loading processing to the server

The internal event loop schedules inferences asynchronously. If multiple text images are found in the original image, each of them is scheduled for inference on the OpenVINO™ backend, and each following node waits for all of its data sources to finish processing. The processing time of each node can be tracked in the logs when the debug log level is enabled. The logs make it easy to confirm that multiple detections are processed simultaneously, without any need to communicate with the client before the final response is generated.
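The effect of scheduling all branches before waiting for any of them can be illustrated with Python's `asyncio`. This is an analogy, not the server's implementation: `infer` is a hypothetical stand-in that only sleeps, and the timings are made up.

```python
import asyncio
import time


async def infer(name, seconds):
    """Hypothetical stand-in for one asynchronous inference."""
    await asyncio.sleep(seconds)
    return f"{name}: done"


async def run_branches(count, seconds):
    # Schedule every branch first, then wait for all of them to finish.
    tasks = [infer(f"text_image_{i}", seconds) for i in range(count)]
    return await asyncio.gather(*tasks)


start = time.perf_counter()
results = asyncio.run(run_branches(count=5, seconds=0.05))
elapsed = time.perf_counter() - start
print(results)
print(f"elapsed {elapsed:.2f}s, one-by-one would take about {5 * 0.05:.2f}s")
```

Because the five simulated inferences overlap, the total time stays close to that of a single one, and the caller only sees the gathered result.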

Summary

The Directed Acyclic Graph Scheduler in OpenVINO Model Server enables the construction of complex inference pipelines, reducing latency for custom use cases while minimizing the time required to deploy inference applications. By creating custom nodes, it is possible to support almost any inference pipeline.

Check out the custom node samples on GitHub:

https://github.com/openvinotoolkit/model_server/tree/main/src/custom_nodes/

Useful links:

- OVMS GitHub repository: https://github.com/openvinotoolkit/model_server

- DAG Scheduler documentation: https://github.com/openvinotoolkit/model_server/blob/main/docs/dag_scheduler.md

- Custom Node development guide: https://github.com/openvinotoolkit/model_server/blob/main/docs/custom_node_development.md

- OpenVINO™ Model Zoo: https://github.com/openvinotoolkit/open_model_zoo

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document.

Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.