Matthias Müller leads the Embodied AI Lab at Intel Labs. His team works on algorithms for perceiving and understanding the physical world in order to build digital twins and intelligent embodied agents.

Highlights:

- Intel Labs introduces VI-Depth version 1.0, an open-source model that integrates monocular depth estimation and visual inertial odometry to produce dense depth estimates with metric scale.

- Intel Labs releases MiDaS version 3.1, adding new features and improvements to the open-source deep learning model for robust relative monocular depth estimation.

- VI-Depth 1.0 and MiDaS 3.1 can be downloaded under separate open-source MIT licenses.

Intel Labs continues to improve depth estimation solutions for the computer vision community with the introduction of VI-Depth version 1.0, a new monocular visual-inertial depth estimation model, and the release of MiDaS version 3.1 for robust relative monocular depth estimation.

Introducing VI-Depth 1.0 for Monocular Visual-Inertial Depth Estimation

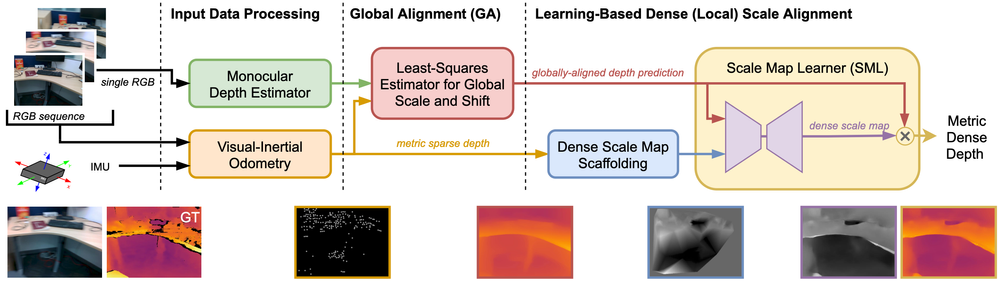

VI-Depth is a visual-inertial depth estimation pipeline that integrates monocular depth estimation and visual-inertial odometry (VIO) to produce dense depth estimates with metric scale. This approach performs global scale and shift alignment against sparse metric depth, followed by learning-based dense alignment. Depth perception is fundamental to visual navigation, and correctly estimating distances can help plan motion and avoid obstacles. Some visual applications require metrically accurate estimated depth, where every depth value is provided in absolute metric units and represents physical distance. VI-Depth’s accurate depth estimation can aid in scene reconstruction, mapping, and object manipulation.

Combining metric accuracy and high generality is a key challenge in learning-based depth estimation. VI-Depth incorporates inertial data into the visual depth estimation pipeline, not through sparse-to-dense depth completion, but through dense-to-dense depth alignment using estimated and learned scale factors. Inertial measurements inform and propagate metric scale through the global and local alignment stages. Adding learning-based local alignment reduces error further than least-squares global alignment alone, and VI-Depth demonstrates successful zero-shot cross-dataset transfer from synthetic training data to real-world test data. This modular approach supports direct integration of existing and future monocular depth estimation and VIO systems. It resolves metric scale for metrically ambiguous monocular depth estimates, assisting in the deployment of robust and general monocular depth estimation models.

Figure 1. There are three stages in the visual-inertial depth estimation pipeline: (1) input processing, where RGB and IMU data feed into MiDaS monocular depth estimation alongside visual-inertial odometry, (2) global scale and shift alignment, where monocular depth estimates are fitted to sparse depth from VIO in a least-squares manner, and (3) learning-based dense scale alignment, where globally-aligned depth is locally realigned using a dense scale map regressed by the ScaleMapLearner (SML). The row of images at the bottom illustrates a VOID sample being processed through the pipeline. From left to right: the input RGB, ground truth depth, sparse depth from VIO, globally-aligned depth, scale map scaffolding, dense scale map regressed by SML, and final depth output.
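The global alignment stage can be illustrated with a minimal NumPy sketch. It is not the VI-Depth implementation: the function name `global_align`, the synthetic data, and the choice to fit in inverse-depth space (on the assumption that the monocular model outputs relative inverse depth) are all illustrative assumptions. It fits a single scale and shift so that the relative prediction matches sparse metric depth in a least-squares sense.

```python
import numpy as np

def global_align(rel_inv_depth, sparse_depth, valid_mask):
    """Least-squares fit of scale s and shift t such that
    s * rel_inv_depth + t ~= 1 / sparse_depth at the valid sparse pixels.
    (Hypothetical helper; assumes alignment in inverse-depth space.)"""
    x = rel_inv_depth[valid_mask]
    y = 1.0 / sparse_depth[valid_mask]
    A = np.stack([x, np.ones_like(x)], axis=1)  # columns: [prediction, 1]
    (s, t), *_ = np.linalg.lstsq(A, y, rcond=None)
    return s, t

# Synthetic scene: metric depth in meters and a relative prediction that
# differs from true inverse depth by an unknown scale (2.0) and shift (0.05).
rng = np.random.default_rng(0)
true_depth = rng.uniform(1.0, 5.0, size=(48, 64))
rel = (1.0 / true_depth - 0.05) / 2.0

# Sparse metric depth at 150 random pixels, standing in for VIO output.
mask = np.zeros(true_depth.shape, dtype=bool)
mask.flat[rng.choice(true_depth.size, size=150, replace=False)] = True
sparse = np.where(mask, true_depth, 0.0)

s, t = global_align(rel, sparse, mask)
aligned_depth = 1.0 / (s * rel + t)  # dense depth in metric units
```

In this noise-free toy case a single scale and shift recovers metric depth everywhere. With real data the fit is only globally consistent, which is why the pipeline follows it with a local, learned alignment stage.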

Incorporating inertial data can help resolve scale ambiguity, and most mobile devices already contain inertial measurement units (IMUs). Global alignment determines an appropriate global scale, while dense scale alignment (SML) operates locally and pushes or pulls regions towards correct metric depth. The SML network leverages MiDaS as an encoder backbone. In the modular pipeline, VI-Depth combines data-driven relative depth prediction from MiDaS with measurements from the IMU. The combination of data sources allows VI-Depth to generate more reliable dense metric depth for every pixel in an image.
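To make the idea of a dense scale map concrete, the following sketch applies a per-pixel scale to globally-aligned depth. It is a simplified stand-in, not the SML network: the dense scale map here comes from a nearest-neighbor fill of scale ratios at the sparse points, whereas VI-Depth regresses it with a learned model. The helper names and the synthetic scene are assumptions made for illustration.

```python
import numpy as np

def scale_scaffolding(aligned_depth, sparse_depth, valid_mask):
    """Dense scale map built by nearest-neighbor fill of the ratios
    sparse_depth / aligned_depth at the sparse points.
    (Hypothetical stand-in for a learned dense scale map.)"""
    h, w = aligned_depth.shape
    ys, xs = np.nonzero(valid_mask)
    ratios = sparse_depth[ys, xs] / aligned_depth[ys, xs]
    gy, gx = np.mgrid[0:h, 0:w]
    # Squared distance from every pixel to every sparse point: (h, w, n).
    d2 = (gy[..., None] - ys) ** 2 + (gx[..., None] - xs) ** 2
    return ratios[np.argmin(d2, axis=-1)]

def apply_scale_map(aligned_depth, scale_map):
    """Locally realign depth by multiplying with a per-pixel scale."""
    return scale_map * aligned_depth

# Synthetic scene whose globally-aligned depth has a spatially varying
# scale error that one global scale and shift cannot remove.
h, w = 32, 32
gy, gx = np.mgrid[0:h, 0:w]
true_depth = 2.0 + gy / h                # meters
true_scale = 1.0 + 0.2 * gx / w          # error grows from left to right
aligned = true_depth / true_scale

# Sparse metric depth on a regular grid, standing in for VIO points.
mask = np.zeros((h, w), dtype=bool)
mask[2::4, 2::4] = True
sparse = np.where(mask, true_depth, 0.0)

scale_map = scale_scaffolding(aligned, sparse, mask)
refined = apply_scale_map(aligned, scale_map)

err_before = np.abs(aligned - true_depth).mean()
err_after = np.abs(refined - true_depth).mean()
```

The refined depth matches the sparse metric depth exactly at the sparse points and is pulled towards it in the regions between them. A learned scale map can additionally use image content to decide how far each correction should extend.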

VI-Depth is available under an open-source MIT license on GitHub.

MiDaS 3.1 Upgrades Improve Model Accuracy for Relative Monocular Depth Estimation

In late 2022, Intel Labs released MiDaS 3.1, adding new features and improvements to the open-source deep learning model for monocular depth estimation in computer vision. Trained on large and diverse image datasets, MiDaS is capable of providing relative depth across indoor and outdoor domains, making it a versatile backbone for many applications.

With its robust and efficient performance for estimating the relative depth of each pixel in an input image, MiDaS is useful for a wide range of applications, including robotics, augmented reality (AR), virtual reality (VR), and computer vision.

MiDaS was recently integrated into Stable Diffusion 2.0, a latent text-to-image diffusion model capable of generating photorealistic images from text input. The MiDaS integration enables a new depth-to-image feature for structure-preserving image-to-image and shape-conditional image synthesis. Stable Diffusion infers the depth of an input image using MiDaS, and then generates new images using both the text and depth information. With the MiDaS integration, Stable Diffusion's depth-guided model may produce images that look radically different from the original, but still preserve the geometry, enabling diverse applications.

Another example of this model’s success is the 360-degree VR environments created by digital creator Scottie Fox using a combination of Stable Diffusion and MiDaS. These experiments may lead to new virtual applications including crime scene reconstruction for court cases, therapeutic environments for healthcare, and immersive gaming experiences.

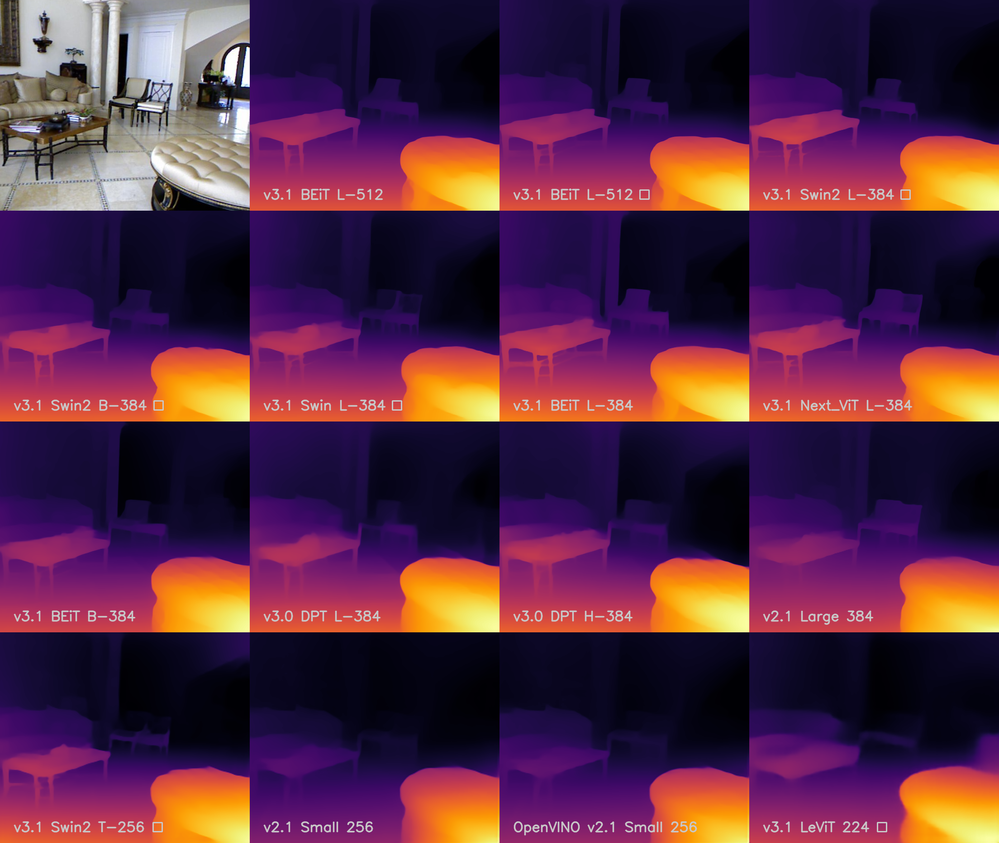

Figure 2. Visualization of the depth produced by the new MiDaS 3.1 models compared to the previous releases.

MiDaS 3.1 Features and Improvements

Implemented in PyTorch, MiDaS 3.1 includes the following upgrades:

- New models based on five different types of transformers (BEiT, Swin2, Swin, Next-ViT, and LeViT). These models may offer improved accuracy and performance compared to the models used in previous versions of MiDaS.

- The training dataset has been expanded from 10 to 12 datasets, including the addition of KITTI and NYU Depth V2 using BTS split. This dataset expansion may improve the generalization ability of the model, allowing it to efficiently perform on a wider range of tasks and environments.

- BEiTLarge 512, the best performing model, is on average about 28% more accurate than MiDaS 3.0.

- The latest version includes the ability to perform live depth estimation from a camera feed, which could be useful in a variety of applications in computer vision and robotics, including navigation and 3D reconstruction.

MiDaS 3.1 is available on GitHub, where it has received more than 2,600 stars from the community.

Matthias holds a B.Sc. in Electrical Engineering with a minor in Math from Texas A&M University. Early in his career, he worked at P+Z Engineering as an Electrical Engineer developing mild-hybrid electric machines for BMW. Later, he obtained an M.Sc. and a PhD in Electrical Engineering from KAUST with a focus on persistent aerial tracking and sim-to-real transfer for autonomous navigation. Matthias has contributed to more than 15 publications in top-tier conferences and journals such as CVPR, ECCV, ICCV, ICML, PAMI, Science Robotics, RSS, CoRL, ICRA and IROS. He has extensive experience in object tracking and autonomous navigation of embodied agents such as cars and UAVs. He was recognized as an outstanding reviewer for CVPR’18 and won the best paper award at the ECCV’18 workshop UAVision.
