Previously, in the article Deploy OpenVINO Model Server in OpenShift and Kubernetes, we explained how to deploy deep learning inference workloads using the Intel® Distribution of OpenVINO™ toolkit Operator for Red Hat OpenShift*. In this article, let’s review the challenges and solutions for efficiently scaling inference services in OpenShift* – focusing primarily on load balancing gRPC (HTTP/2) traffic and managing CPU resources.

Load Balancing Services in OpenShift

Service load balancing in Kubernetes and OpenShift works at L3/L4 (the network and transport layers). It is a lightweight solution in which the proxy opens a connection between the client and a backend endpoint. This approach has important consequences for gRPC traffic. gRPC connections are sticky, which means that a connection, once opened, is reused for multiple requests.

This saves time on the TCP handshake and makes it possible to serve stateful models for inference. However, preserving the connection impacts load balancing for clients that generate multiple requests in parallel. So long as the client connection is reused, all requests will be routed to the same backend container.

gRPC load balancing at the L3/L4 level is therefore effective only when many clients are used or when each client establishes multiple connections to the server, because the load can be distributed only per connection, not per request.

Red Hat OpenShift Service Mesh

Using the OpenShift Service Mesh Operator is one way to overcome the load balancing challenge described above. This Operator is based on Istio and provides methods for controlling traffic, including the following:

- Traffic Mirroring

- Traffic Shifting

- Connection Timeouts and Retries

- Circuit Breakers

- Fault Injection

Additionally, Service Mesh can be used for enhanced traffic monitoring and improved security.

Learn more about Istio mesh use cases.

When deploying inference services, an important feature in Service Mesh is L7 load balancing on the sidecar proxy. This feature simplifies load distribution since each inference request can be dispatched separately depending on current load conditions.

For detailed instructions on how to install the Service Mesh Operator, see the OpenShift documentation. Once the Service Mesh Operator is up and running, three additional resources must be created to make it fully operational:

- Create an Istio control plane in a chosen namespace and set the default parameters or change according to your needs. For high load scenarios, increasing the resource allocation to proxy containers is recommended. For more information, see the documentation.

- Create a Service Mesh member roll (ServiceMeshMemberRoll) in the control plane namespace and associate it with the projects to be added to the mesh scope.

- Create Service Mesh members in the scoped projects that should be included in the Service Mesh. Associate them with the control plane instance.
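The three resources above can be sketched as follows. This is a minimal sketch rather than a complete configuration: the control plane name `basic`, the member project `inference-demo`, and the proxy resource values are assumptions made for illustration, and the control plane namespace `ovms` is assumed from the namespace used later in this article for the ingress gateway. The `maistra.io` API versions shown are the ones used by OpenShift Service Mesh 2.x and should be checked against the installed Operator version.

```yaml
# 1. Control plane (name "basic" is an assumption)
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic
  namespace: ovms
spec:
  proxy:
    runtime:
      container:
        # Illustrative values only; raise them for high load scenarios
        resources:
          requests:
            cpu: 500m
            memory: 128Mi
---
# 2. Member roll in the control plane namespace
apiVersion: maistra.io/v1
kind: ServiceMeshMemberRoll
metadata:
  name: default
  namespace: ovms
spec:
  members:
    - inference-demo   # hypothetical project to add to the mesh
---
# 3. Member in the scoped project, pointing at the control plane
apiVersion: maistra.io/v1
kind: ServiceMeshMember
metadata:
  name: default
  namespace: inference-demo
spec:
  controlPlaneRef:
    name: basic
    namespace: ovms
```

The member roll and the member resources are both expected to be named `default`.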

Once the Service Mesh project is established, deploying a ModelServer instance via the Operator requires just one additional parameter, “annotations”, in the ModelServer resource, as shown below:

    apiVersion: intel.com/v1alpha1
    kind: ModelServer
    metadata:
      name: ovms-sample
    spec:
      annotations:
        sidecar.istio.io/inject: "true"
      grpc_port: 8080
      image_name: registry.connect.redhat.com/intel/openvino-model-server:latest
      log_level: INFO
      model_name: "resnet"
      model_path: "gs://ovms-public-eu/resnet50-binary"
      plugin_config: '{\"CPU_THROUGHPUT_STREAMS\":\"1\"}'
      replicas: 1
      rest_port: 8081
      service_type: ClusterIP
      target_device: CPU

When this record is created, the Operator will create deployment and service resources:

    oc get pod
    NAME                          READY   STATUS    RESTARTS   AGE
    ovms-sample-6f447b4c5-9c4sp   2/2     Running   0          60s

    oc get service
    NAME          TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)             AGE
    ovms-sample   ClusterIP   10.217.4.168   <none>        8080/TCP,8081/TCP   60s

Note that in Service Mesh projects, ModelServer pods also include a second container, the automatically injected sidecar proxy, which is why the READY column shows 2/2. The sidecar provides a basic configuration to test load balancing at the request level.

Service Mesh use cases can be extended with additional records of VirtualService and DestinationRule.

The example below is a starting point for shifting traffic between two OpenVINO™ model server services, ovms-sample and ovms-sample-new, which may differ in models or configuration. As written, it routes all traffic to ovms-sample.

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: shifting-example
    spec:
      hosts:
        - "ovms-sample"
      http:
        - route:
            - destination:
                host: ovms-sample
                port:
                  number: 8080
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: ovms-destination-rule
    spec:
      host: ovms-sample
      trafficPolicy:
        loadBalancer:
          simple: LEAST_CONN

The DestinationRule changes the default load balancing algorithm to “Least Active Connections” and ensures that each node has a similar-sized queue or number of requests being processed.
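Traffic shifting between the two services is expressed with weighted destinations in the VirtualService route. The sketch below assumes that a second ModelServer named ovms-sample-new exists in the same project and exposes gRPC on port 8080; the 90/10 split is an arbitrary illustrative value, not a recommendation.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: shifting-example
spec:
  hosts:
    - "ovms-sample"
  http:
    - route:
        # Most requests stay on the existing service
        - destination:
            host: ovms-sample
            port:
              number: 8080
          weight: 90
        # A small share goes to the new service (assumed to exist)
        - destination:
            host: ovms-sample-new
            port:
              number: 8080
          weight: 10
```

The weights of all destinations in a route should add up to 100. Shifting more traffic to the new service is then a matter of adjusting the two values.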

Ingress Gateway

In OpenShift, the default Service Mesh configuration enables connections between pods inside the mesh namespaces. If access is needed from external namespaces or from outside of the cluster, configuring a gateway is required. All traffic to mesh services must arrive via the Istio ingress gateway.

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: gateway-ovms
    spec:
      selector:
        istio: ingressgateway # use istio default controller
      servers:
        - port:
            number: 80
            name: grpc
            protocol: GRPC
          hosts:
            - "*"
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: shifting-example
    spec:
      hosts:
        - "*"
      gateways:
        - gateway-ovms
      http:
        - match:
            - port: 80
          route:
            - destination:
                host: ovms-sample
                port:
                  number: 8080

The configuration above exposes the shifting-example virtual service at the cluster IP or external IP address of the istio-ingressgateway service:

    oc get service -n ovms istio-ingressgateway
    NAME                   TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)                              AGE
    istio-ingressgateway   ClusterIP   10.217.5.24   <none>        15021/TCP,80/TCP,443/TCP,15443/TCP   1d

Learn more about Red Hat OpenShift Service Mesh

CPU Manager Policy

Another important aspect to consider when scaling inference services is the mechanism for assigning physical CPU resources to container workloads. By default, the OpenShift kubelet uses CFS quota to apply CPU limits. This is a flexible solution because it moves workloads dynamically between CPU cores depending on the current utilization in all pods. However, some workloads, like inference execution, may be sensitive to this migration. A CPU manager policy helps ensure CPU cache affinity and stable latency results.

Running Intel® Distribution of OpenVINO™ toolkit model server with a CPU manager policy may be beneficial, especially when inference service containers are deployed with constrained resources or the node is executing other workloads in addition to the inference service.
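For orientation, the static CPU manager policy in OpenShift is typically enabled with a KubeletConfig resource along the lines of the sketch below. The resource name, the label `custom-kubelet: cpumanager-enabled`, and the reconcile period are assumptions for illustration; the same label must also be present on the MachineConfigPool that contains the target nodes.

```yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: KubeletConfig
metadata:
  name: cpumanager-enabled   # hypothetical name
spec:
  machineConfigPoolSelector:
    matchLabels:
      # Assumed label; add it to the MachineConfigPool of the inference nodes
      custom-kubelet: cpumanager-enabled
  kubeletConfig:
    # "static" pins eligible containers to exclusive CPU cores
    cpuManagerPolicy: static
    cpuManagerReconcilePeriod: 5s
```

With the static policy, exclusive cores are granted only to containers that request a whole number of CPUs in pods of the Guaranteed QoS class. That class requires CPU and memory requests to equal limits for every container in the pod, including an injected sidecar proxy.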

Instructions for configuring a CPU manager policy are included in the OpenShift documentation. The example below presents a ModelServer configuration with restricted resources. It can take advantage of the CPU manager policy.

    apiVersion: intel.com/v1alpha1
    kind: ModelServer
    metadata:
      name: ovms-sample
    spec:
      annotations:
        sidecar.istio.io/inject: "true"
      grpc_port: 8080
      image_name: registry.connect.redhat.com/intel/openvino-model-server:latest
      log_level: INFO
      model_name: "resnet"
      model_path: "gs://ovms-public-eu/resnet50-binary"
      plugin_config: '{\"CPU_THROUGHPUT_STREAMS\":\" CPU_THROUGHPUT_STREAMS \"}'
      replicas: 1
      rest_port: 8081
      service_type: ClusterIP
      target_device: CPU
      resources:
        requests:
          memory: 500Mi
          cpu: 4
        limits:
          memory: 500Mi
          cpu: 4

The easiest way to scale serving capacity is to adjust the CPU resources: with more CPU cores, the server can process more requests. The plugin config parameter CPU_THROUGHPUT_STREAMS can be tuned to the expected number of parallel client connections. Learn more about it in Model server performance tuning.

Running the client load in the cluster

Let’s consider an inference application running in an OpenShift cluster and generating requests for an Intel® Distribution of OpenVINO™ toolkit model server service. This scenario can be simulated using a container image including a sample C++ client, which runs asynchronous predictions on the ResNet50 model. In OpenShift the application can be containerized and built using ImageStream and BuildConfig resources.

    kind: ImageStream
    apiVersion: image.openshift.io/v1
    metadata:
      name: client-cpp
    spec:
      lookupPolicy:
        local: false
    ---
    kind: BuildConfig
    apiVersion: build.openshift.io/v1
    metadata:
      name: build-client
    spec:
      nodeSelector: null
      output:
        to:
          kind: ImageStreamTag
          name: 'client-cpp:latest'
      resources: {}
      successfulBuildsHistoryLimit: 5
      failedBuildsHistoryLimit: 5
      strategy:
        type: Docker
        dockerStrategy:
          dockerfilePath: Dockerfile
      postCommit: {}
      source:
        type: Git
        git:
          uri: 'https://github.com/openvinotoolkit/model_server'
          ref: 469582ff3fdd8b9757c67bf24ed34834a27821c3
        contextDir: example_client/cpp
      triggers:
        - type: ConfigChange

The configuration above creates an ImageStream and builds an image with a sample C++ client. A container with that image can send a sequence of asynchronous prediction requests over a single connection, which makes it a good example of the L7 load balancing advantage. With L3/L4 load balancing, all requests from such a client are directed to a single replica, which limits the throughput to the capacity of a single node.

The Job configuration below triggers the execution of the sample C++ client, which submits a sequence of 1,000,000 calls to the service.

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: client
    spec:
      template:
        metadata:
          annotations:
            sidecar.istio.io/inject: 'true'
        spec:
          containers:
            - name: client
              image: image-registry.openshift-image-registry.svc:5000/<project_name>/client-cpp:latest
              command: ["/bin/sh", "-c"]
              args:
                - sleep 10;
                  ./resnet_client_benchmark --images_list=input_images.txt --grpc_address=ovms-sample --grpc_port=8080 --model_name=resnet --input_name=0 --output_name=1463 --iterations=1000000 --layout=binary
          restartPolicy: Never

This OpenShift job generates 1M requests, split between all replicas by the service mesh.

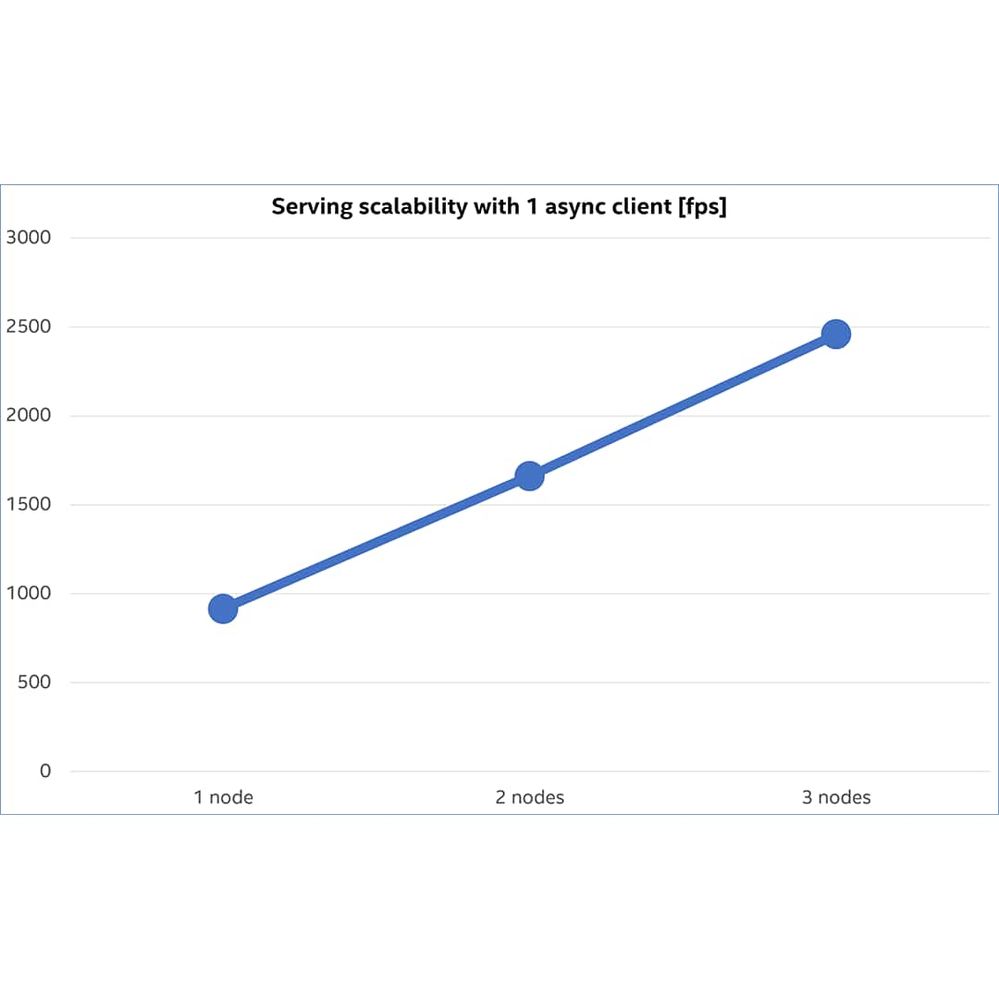

The results below show that throughput increases as the number of replicas increases. The data was captured while the Model server was serving a ResNet50-binary classification model with batch size 1. The environment was scaled by adding additional nodes to the cluster. Each node deployed one pod replica without any resource limitations.

Our sample client sends input images in compressed JPEG format, which reduces the amount of network bandwidth required in the cluster. The linear scalability shows that requests from the client are evenly distributed among the service replicas. That way the capacity of the inference execution can be adjusted to the application needs.

Conclusion

Scaling inference services with gRPC (HTTP/2) can be challenging, but many solutions exist to increase efficiency based on available resources in an OpenShift cluster.

The most efficient way to increase capacity is vertical scaling. Depending on the expected throughput, adequate resources can be applied inside the Intel® Distribution of OpenVINO™ toolkit model server container. In this scenario, capacity is increased by adding additional CPU cores to the resource limits. As the number of client connections increases, the number of Inference Engine processing streams in the device plugin configuration should increase at the same rate.

When the capacity of a single replica isn’t sufficient, OpenShift can distribute the load between multiple containers. In addition to the default L3/L4 service load balancing in OpenShift, it’s possible to use L7 load balancing based on Service Mesh. This approach is especially well suited for gRPC, as it can distribute requests evenly among replicas, even over long-lived gRPC connections.

Configuring a CPU Manager can improve inference execution within existing replicas by statically binding the container resources to the CPU cores and using CPU affinity to reduce context switching.

Notices & Disclaimers

Performance varies by use, configuration, and other factors. Learn more at www.Intel.com/PerformanceIndex.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software, or service activation.

Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from a course of performance, course of dealing, or usage in trade.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
