At a Glance

- Intel’s advances in AI hardware acceleration and software optimization mean you can train large models at scale on Intel® Xeon® Scalable processor-based servers.

- Intel MLPerf Training 1.0 submissions spanned vision, recommendation systems, and reinforcement learning applications, demonstrating our ongoing software optimization work for popular deep learning frameworks and workloads.

- Our DLRM submission in the Open Division, which showcases AI innovation, trained the model in 15 minutes on 64 sockets of 3rd Generation Intel Xeon Scalable processors.

Today, many companies use Intel platforms for their AI needs: EXOS for athlete training, Burger King for context-aware fast-food recommendations, SK Telecom for network quality analysis on time series data, and Laika for auto-generating tracking points and roto shapes using image analysis [1-4]. As AI use cases become more diverse, Intel is committed to providing processors to fit customers’ unique needs, spanning CPUs, GPUs, FPGAs, and purpose-built AI accelerators. We also support the use of industry-standard benchmarks, which customers can use to make more informed purchasing decisions. We are therefore pleased to share results from the just-released MLPerf Training v1.0 benchmark suite for 3rd Gen Intel® Xeon® Scalable processors, as well as the Habana Gaudi deep learning training accelerator. These results demonstrate the value Intel’s hardware and software provide by helping customers optimize for different design points and objectives as they process increasingly unstructured and complex AI workloads from the edge to the cloud.

The MLPerf Training benchmark suite measures how fast systems can train models to a target accuracy across multiple use cases. Each benchmark measures the wall clock time required to train a model on the specified dataset to achieve the target accuracy. MLPerf accepts submissions in two divisions: the “Closed Division” for apples-to-apples comparison across hardware platforms and software frameworks, and the “Open Division”, which allows innovation in both algorithms and software.
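To make the metric concrete, here is a minimal sketch of a time-to-train measurement: run training until an evaluation reaches the target accuracy and report the elapsed wall clock time. The function name `time_to_train` and the `train_one_epoch` and `evaluate` callbacks are hypothetical placeholders, and the sketch does not reproduce MLPerf's actual timing or logging rules.

```python
import time
from typing import Callable, Tuple


def time_to_train(
    train_one_epoch: Callable[[], None],
    evaluate: Callable[[], float],
    target_accuracy: float,
    max_epochs: int = 100,
) -> Tuple[float, int]:
    """Return (elapsed wall clock seconds, epochs run) once the target is reached.

    Raises RuntimeError if the target is not reached within max_epochs.
    """
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()                       # hypothetical training callback
        if evaluate() >= target_accuracy:       # hypothetical evaluation callback
            return time.perf_counter() - start, epoch
    raise RuntimeError("target accuracy not reached within max_epochs")
```

With real workloads, `train_one_epoch` and `evaluate` would wrap the framework's training and validation loops; a lower time to reach the same target means a better result.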

With MLPerf Training v1.0, Intel demonstrated that customers can train models with the Intel Xeon Scalable processors already installed in their infrastructure, and that they can scale performance by adding more processors. 3rd Gen Intel® Xeon® Scalable processors are the only x86 data center CPUs with built-in AI acceleration, support for end-to-end data science tools, and a broad ecosystem of smart solutions.

Leveraging Intel® Deep Learning Boost (Intel® DL Boost) with bfloat16 on a 64-socket cluster of 3rd Gen Xeon Platinum processors, we show that Xeon can scale with the size of the DL training job by adding more servers. The bfloat16 format delivers improved training performance on workloads with high compute intensity, without sacrificing accuracy or requiring extensive hyperparameter tuning.
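For readers less familiar with the format: bfloat16 keeps the sign bit and all 8 exponent bits of an IEEE 754 fp32 value and shortens the mantissa to 7 bits, so it covers the same dynamic range as fp32 at lower precision. The sketch below illustrates this with the Python standard library. It converts by simple truncation, which is an assumption made for brevity (hardware typically rounds to nearest), and the helper names are hypothetical.

```python
import struct


def fp32_bits(x: float) -> int:
    """Bit pattern of x stored as an IEEE 754 single-precision value."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_fp32(bits: int) -> float:
    """Interpret a 32-bit pattern as an IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_bfloat16(x: float) -> float:
    """Truncate x to bfloat16 (keep the top 16 bits) and widen it back."""
    return bits_to_fp32(fp32_bits(x) & 0xFFFF0000)


to_bfloat16(1.001)   # 1.0: the difference is below bfloat16 resolution near 1
to_bfloat16(1e38)    # still finite: the fp32 exponent range is preserved
```

The second call is the interesting one. IEEE half precision (fp16) tops out at 65504, while bfloat16 can represent magnitudes up to the fp32 range, which is one reason training in bfloat16 tends to need less tuning.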

Results

Our submissions spanned vision, recommendation systems, and reinforcement learning applications, and demonstrate our ongoing software optimization for popular deep learning frameworks and workloads.

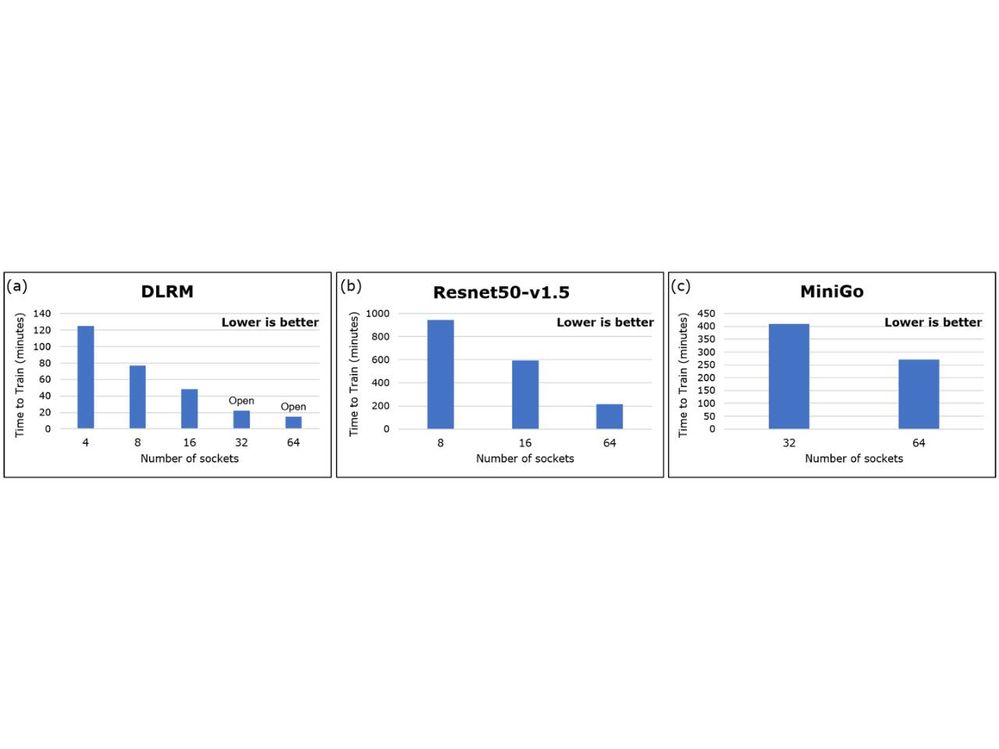

- DLRM, or Deep Learning Recommendation Model, is the benchmark representative of large-scale recommendation systems used in production. This benchmark requires a balance of memory capacity and bandwidth, floating point performance and network bandwidth to achieve good performance. Using PyTorch, we show that DLRM can be trained in ~2 hours using only 4 sockets of Intel Xeon Platinum 8380H [5]. As we’ll describe in more detail below, we also submitted DLRM results in the Open division, which highlighted our relentless approach to software innovation. We achieved a training time of 15 min on 64 sockets of Intel Xeon Platinum 8376H [6]. Your model is ready by the time you grab your coffee!

- ResNet-50 v1.5 is a widely used model for image classification and is the exemplar for convolutional neural network (CNN) based workloads. Using TensorFlow, we demonstrate that ResNet-50 can be trained overnight (<10 hours) using 16 sockets of Intel Xeon Platinum 8380H, or within an afternoon (~3.5 hours) using 64 sockets of Intel Xeon Platinum 8376H [7].

- MiniGo is the MLPerf benchmark for reinforcement learning (RL) wherein the computer learns to play the board game of Go. RL has applications in many areas such as robotics, finance, autonomous driving, and traffic control. We used two major capabilities of Intel DL Boost technology to maximize compute efficiency for MiniGo: bfloat16 for the training phase, and VNNI-based int8 inference for the self-play phase. We showed that MiniGo can be trained within 7 hours on 32 sockets of Xeon Platinum 8380H and in ~4.5 hours on 64 sockets of Xeon Platinum 8376H [8].

These results, summarized in Figure 1, demonstrate that customers can scale their training workloads as needed using available infrastructure.

Figure 1: Intel’s MLPerf Training v1.0 benchmark results for (a) DLRM [5,6] (b) Resnet50-v1.5 [7] (c) MiniGo [8]. Please find software and system configuration information in the respective citations.
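As a rough illustration of what these numbers imply about scaling, the sketch below computes speedup and scaling efficiency from the approximate training times quoted above. The inputs are the rounded figures from the text ("<10 hours" is taken as 10, "within 7 hours" as 7), and the larger clusters use a different processor model (8376H versus 8380H), so treat the output as a ballpark estimate and not a measured result. The function name is hypothetical.

```python
from typing import Tuple


def scaling_efficiency(
    base_sockets: int, base_hours: float, scaled_sockets: int, scaled_hours: float
) -> Tuple[float, float]:
    """Return (speedup, efficiency), where efficiency = speedup / ideal speedup."""
    speedup = base_hours / scaled_hours
    ideal = scaled_sockets / base_sockets
    return speedup, speedup / ideal


# Approximate figures quoted in the text above.
resnet = scaling_efficiency(16, 10.0, 64, 3.5)   # about (2.86, 0.71)
minigo = scaling_efficiency(32, 7.0, 64, 4.5)    # about (1.56, 0.78)
```

DLRM is left out on purpose: its 64-socket result comes from the Open Division, so it is not directly comparable with the 4-socket Closed Division time.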

Software Optimizations

Here we highlight the software optimizations implemented for our DLRM Open Division submission to improve the scalability of the workload beyond the 16 sockets used in the Closed Division. With these optimizations, increasing the socket count to 64 delivered ~3x better training time [5,6].

- Large-batch training: We use a hybrid optimizer approach (LAMB for data-parallel layers and SGD for sparse embeddings) to demonstrate convergence with a 256K batch size, which is 4.7x larger than the largest reference batch size of 55K [9]. Our hybrid optimizer also avoids the convergence penalty typically seen in large-batch training and reaches the target accuracy ~2x faster than the reference [6,9]. Note that we avoid optimizer memory overhead by using SGD for embedding tables.

- Model parallelism: Beyond the standard hybrid parallelism in DLRM, we introduce the novel technique of splitting embedding tables along embedding dimension for distribution amongst ranks. This enables larger scale-out while reducing communication and memory requirements.

- ‘Split’ optimizers: We demonstrate the memory footprint advantage of the bfloat16 format for mixed precision training by eliminating the fp32 master copy of weights through split-SGD and split-LAMB optimizers. The master weight is split into two 16-bit tensors representing the most and least significant bits. Layer computations use only the former, while weight updates use both tensors to maintain precision.
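The split-optimizer idea can be sketched in a few lines. The code below is our reading of the description above and not the submission's actual implementation: each fp32 weight is stored as two 16-bit halves, the high half doubles as the bfloat16 weight used by layer computations, and the SGD update rejoins both halves so small updates are not lost. It uses plain Python lists and the standard library for clarity; all function names are hypothetical, and Python's double-precision arithmetic stands in for fp32 math.

```python
import struct
from typing import List, Tuple


def split_fp32(w: float) -> Tuple[int, int]:
    """Split the fp32 bit pattern of w into (high 16 bits, low 16 bits)."""
    bits = struct.unpack("<I", struct.pack("<f", w))[0]
    return bits >> 16, bits & 0xFFFF


def join_fp32(hi: int, lo: int) -> float:
    """Rebuild the fp32 value from its two 16-bit halves."""
    return struct.unpack("<f", struct.pack("<I", (hi << 16) | lo))[0]


def bf16_view(hi: int) -> float:
    """Weight as seen by layer computations: the high half read as bfloat16."""
    return join_fp32(hi, 0)


def split_sgd_step(hi: List[int], lo: List[int], grads: List[float], lr: float) -> None:
    """In-place SGD step that updates the full-precision weight held in (hi, lo)."""
    for i, g in enumerate(grads):
        w = join_fp32(hi[i], lo[i]) - lr * g   # update uses both halves
        hi[i], lo[i] = split_fp32(w)           # rounded to fp32, then re-split


# One weight starting at 1.0, nudged upward by 1e-4 for 100 steps.
h, l = split_fp32(1.0)
hi, lo = [h], [l]
for _ in range(100):
    split_sgd_step(hi, lo, grads=[-1.0], lr=1e-4)

join_fp32(hi[0], lo[0])   # close to 1.01: the small updates accumulated
bf16_view(hi[0])          # 1.0078125: the bfloat16 weight used in the forward pass
```

Had the weight been kept only in bfloat16 (by truncation, as in this split), every individual update of 1e-4 would have been dropped and the weight would have stayed at 1.0. In this scheme the two halves together take 32 bits per weight, compared with 48 bits for a bfloat16 weight plus a separate fp32 master copy.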

Final Words

Intel’s vision is a world in which AI is accessible, efficient, flexible, and ubiquitous. A key requirement to achieve this vision is a practical, affordable method for training large deep learning models. Consequently, we strive to provide both hardware acceleration and software optimizations to facilitate Intel Xeon Scalable processor-based model training. Our latest MLPerf submission demonstrates the impact of this work. It shows that customers can train large models at scale using widely deployed Intel Xeon Scalable processor-based platforms. In addition, they can speed training performance by adding more processors.

In addition to highlighting scalability, our latest MLPerf submission offers a window into ongoing software optimizations in deep learning that will carry forward to our next-generation Intel Xeon Scalable processors. The Habana results in this cycle [10] further highlight our unmatched portfolio of hardware and solutions for our customers’ broad range of workloads and future needs.

Try out Intel’s MLPerf code here.

Get started with AI on 3rd Gen Intel Xeon Scalable processors.

References

[1] Intel, EXOS Pilot 3D Athlete Tracking with Pro Football Hopefuls: https://www.intel.com/content/www/us/en/newsroom/news/exos-pilot-3d-athlete-tracking-football.html (Retrieved on 06/30/2021)

[2] Burger King: Context-Aware Recommendations: https://www.intel.com/content/www/us/en/customer-spotlight/stories/burger-king-ai-customer-story.html (Retrieved on 06/30/2021)

[3] SK Telecom: AI Pipeline Improves Network Quality: https://www.intel.com/content/www/us/en/customer-spotlight/stories/sk-telecom-ai-customer-story.html (Retrieved on 06/30/2021)

[4] LAIKA Studios Expands Possibilities in Filmmaking: https://www.intel.com/content/www/us/en/customer-spotlight/stories/laika-customer-spotlight.html (Retrieved on 06/30/2021)

[5] DLRM Closed division: Retrieved from https://mlcommons.org/en/training-normal-10/ (ID:1.0-1041, 1.0-1042, 1.0-1044, 1.0-1045) on 06/30/2021. MLCommons and MLPerf name and logo are trademarks. See www.mlcommons.org for more information.

[6] DLRM Open division: Retrieved from https://mlcommons.org/en/training-normal-10/ (ID:1.0-1100, 1.0-1101) on 06/30/2021. MLCommons and MLPerf name and logo are trademarks. See www.mlcommons.org for more information.

[7] Resnet50 Closed division: Retrieved from https://mlcommons.org/en/training-normal-10/ (ID:1.0-1039, 1.0-1040, 1.0-1043) on 06/30/2021. MLCommons and MLPerf name and logo are trademarks. See www.mlcommons.org for more information.

[8] MiniGo Closed division: Retrieved from https://mlcommons.org/en/training-normal-10/ (ID:1.0-1040, 1.0-1046) on 06/30/2021. MLCommons and MLPerf name and logo are trademarks. See www.mlcommons.org for more information.

[9] DLRM Reference convergence point for batch size 55296: Retrieved from https://github.com/mlcommons/logging/blob/1.0-branch/mlperf_logging/rcp_checker/1.0.0/rcps_dlrm.json on 06/30/2021

[10] Habana Closed division: Retrieved from https://mlcommons.org/en/training-normal-10/ (ID:1.0-1029) on 06/30/2021. MLCommons and MLPerf name and logo are trademarks. See www.mlcommons.org for more information.

Notices and Disclaimers

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.

Performance results are based on testing as of 05/21/2021 shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

All product plans and roadmaps are subject to change without notice.

Code names are used by Intel to identify products, technologies, or services that are in development and not publicly available. These are not "commercial" names and not intended to function as trademarks.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
