Social media has come a long way since its first site, Six Degrees, was created over 20 years ago. Today, nearly all Americans interact with social media on a daily basis. And with over a third of the world’s population consuming social media, it’s especially important to understand both the positive and negative impacts of this type of information sharing. While social media is great for sharing funny articles and cute kid videos, it’s also used to propagate online harassment, including hate speech. In fact, around 40% of Americans have personally experienced online harassment.

Countering online hate speech is a critical yet challenging task, but one that can be aided by Natural Language Processing (NLP) techniques. Previous research on this subject has primarily focused on developing NLP methods to automatically and effectively detect online hate speech, but has not addressed additional actions that could calm individuals and discourage them from further use of hate speech. In addition, most existing hate speech datasets treat each post as an isolated instance, ignoring the conversational context.

Organizations like the ACLU recommend that the best way to fight hate speech is through dialogue. That’s why we’ve proposed a novel task of generative hate speech intervention, where the goal is to automatically generate responses to intervene during online conversations that contain hate speech. As a part of this work, we have introduced two fully-labeled large-scale hate speech intervention datasets, using data collected from the Gab social network and Reddit forums. These datasets provide conversation segments, hate speech labels, and manual intervention responses that were written by workers sourced from Amazon Mechanical Turk.

Our datasets consist of 5,000 conversations retrieved from Reddit and 12,000 conversations retrieved from Gab. Unlike existing hate speech datasets, ours retain the conversational context and introduce human-written intervention responses. Both are critical for building generative models that can automatically mitigate the spread of these types of conversations. The two data sources, Gab and Reddit, are not as well studied for hate speech as Twitter, so our datasets fill this gap. Because of our data collection strategy, all the posts in our datasets are manually labeled as hate or non-hate speech by Mechanical Turk workers, so they can also be used for hate speech detection tasks.
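To make the structure of a dataset entry more concrete, here is a minimal Python sketch of how one conversation could be represented in memory. It is an illustration only: the class names, field names, and helper methods (`Post`, `Conversation`, `is_hate`, `detection_examples`, `generation_example`) are assumptions made for this example and are not the actual file format or schema of the released datasets. The post texts are placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Post:
    """One post in a conversation (hypothetical field names)."""
    user: str
    text: str
    is_hate: bool  # manual hate / non-hate label


@dataclass
class Conversation:
    """A conversation segment with labels and human-written interventions."""
    source: str                      # e.g. "reddit" or "gab"
    posts: List[Post]
    interventions: List[str] = field(default_factory=list)

    def hate_indices(self) -> List[int]:
        """Positions of the posts labeled as hate speech."""
        return [i for i, post in enumerate(self.posts) if post.is_hate]

    def detection_examples(self) -> List[Tuple[str, int]]:
        """(text, label) pairs, usable for a hate speech detection task."""
        return [(post.text, int(post.is_hate)) for post in self.posts]

    def generation_example(self) -> Tuple[str, List[str]]:
        """(context, responses) pair, usable for intervention generation."""
        context = "\n".join(f"{post.user}: {post.text}" for post in self.posts)
        return context, list(self.interventions)


# Placeholder content only; no real dataset text is reproduced here.
example = Conversation(
    source="reddit",
    posts=[
        Post(user="User 1", text="<neutral post>", is_hate=False),
        Post(user="User 2", text="<hateful post>", is_hate=True),
    ],
    interventions=["<human-written intervention>"],
)
```

In this sketch the same record feeds both tasks: `detection_examples()` yields per-post labels for detection, while `generation_example()` keeps the whole conversation as context and pairs it with the intervention responses.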

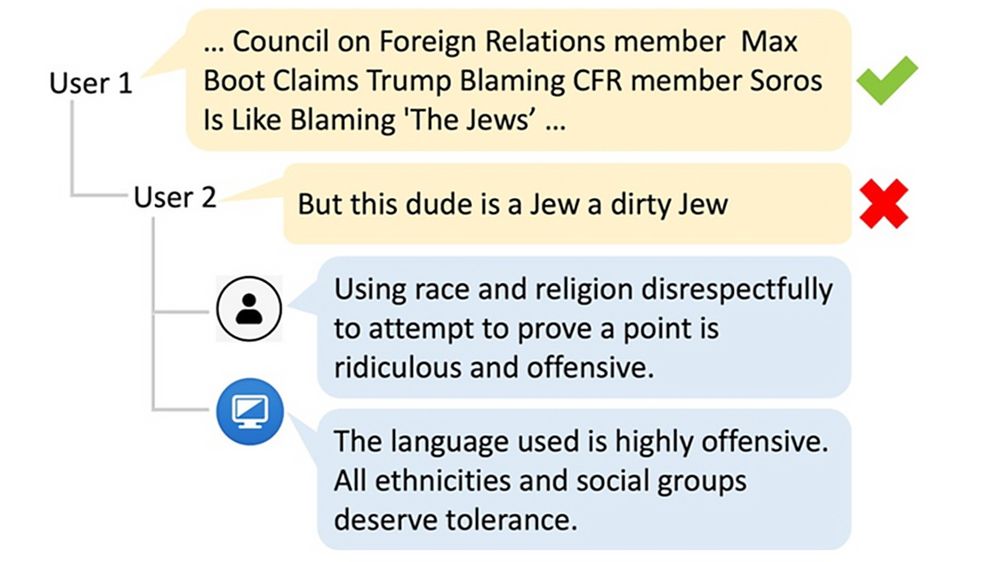

But, as mentioned, the main goal of this research is to investigate whether we can automatically generate hate speech intervention comments. We are increasingly able to generate human-understandable text, as demonstrated most recently by OpenAI’s GPT-2 and by Element AI’s recent paper, which summarized itself. We applied these techniques to see whether we could automatically generate intervention comments. Figure 1 from our paper shows a real example of a human-written intervention in a hate speech conversation, followed by a machine-generated intervention. Overall, human evaluators thought the machine-generated interventions could be effective at mitigating hate speech, but that they left room for improvement when compared with human-written responses. However, if a machine can respond, we can reduce the burden on the individuals who monitor and respond to these forums, a task that is taking quite a toll on their mental health.

Figure 1: An illustration of hate speech conversation between User 1 and User 2 and the interventions collected for our datasets. The check and the cross icons on the right indicate a normal post and a hateful post. The utterance following the human icon is a human-written intervention, while the utterance following the computer icon is machine-generated.

To learn more about our results and methodology, we invite you to check out our paper, jointly published by researchers Jing Qian, Elizabeth Belding, and William Yang Wang from UCSB and former Intel AI researcher Yinyin Liu. Our datasets are also available through GitHub. We hope that our datasets will provide a valuable tool in the fight against hate speech on social media, and invite you all to collaborate on this research. We are so excited to present this work at EMNLP in November and hope to see you there.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.


Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
