A three-part series on OpenVINO™ Deep Learning Workbench

About the Series

- Learn how to convert, fine-tune, and package an inference-ready TensorFlow model, optimized for Intel® hardware, using nothing but a web browser. Every step happens in the cloud using OpenVINO™ Deep Learning Workbench and Intel® DevCloud for the Edge.

- Part One: We show you the Intel® deep learning inference tools and the basics of how they work.

- Part Two: We go through each step of importing, converting, and benchmarking a TensorFlow model using OpenVINO Deep Learning Workbench.

- Part Three (you’re here!): We show you how to change precision levels for increased performance and export a ready-to-run inference package with just a browser using OpenVINO Deep Learning Workbench.

Part Three: Recalibrate Precision and Package Your TensorFlow Model for Deployment with OpenVINO™ Deep Learning Workbench

In Part Two, we showed you how to use OpenVINO™ Deep Learning Workbench to import a TensorFlow model, convert it to Intel® IR, benchmark it, and set a performance level for our optimized inference model.

Here in Part Three, we’re going to dig into some of the advanced tools for analysis and optimization in the workbench. Then we’ll show you how to package a production-ready inference model.

Note: If you haven’t already, please sign up for DevCloud for the Edge. It’s free, and it only takes a few minutes. If you’d like to explore before you sign up, check out our first article.

Step one: Calibrate a TensorFlow model to INT8

The processor we chose in Part Two, an Intel® Xeon® 6258R (aka Cascade Lake), has Intel® Deep Learning Boost, which accelerates performance at INT8 precision. Let’s see how performance changes when we calibrate our model from FP32 to INT8.

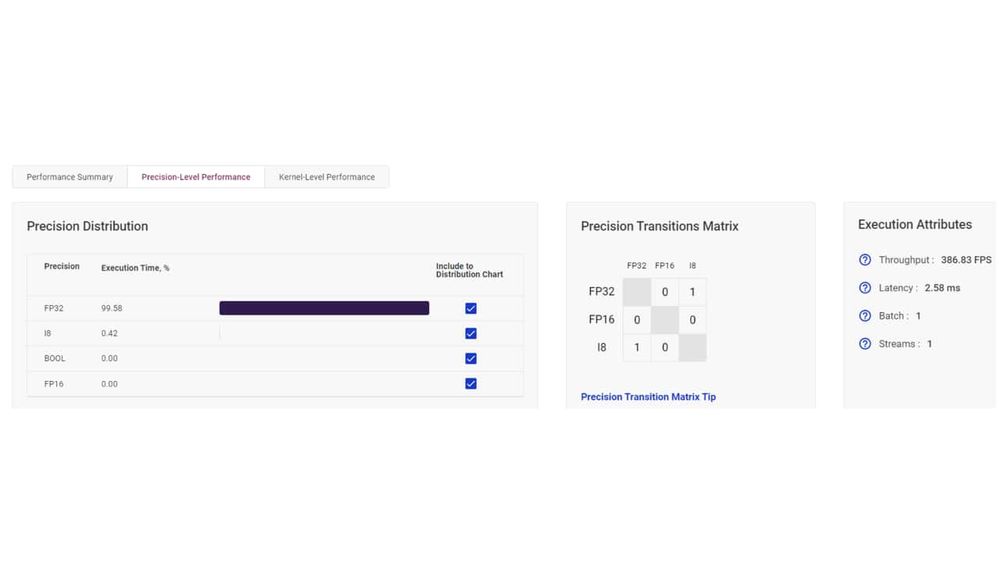

Our first benchmark for the Intel® Xeon® 6258R had a throughput of 383.83 frames per second and a latency of 2.58 milliseconds, and the majority of the processing, 99.58 percent of it, ran at FP32.

At FP32, our first benchmark ran at 386.83 frames per second with a latency of 2.58 milliseconds.

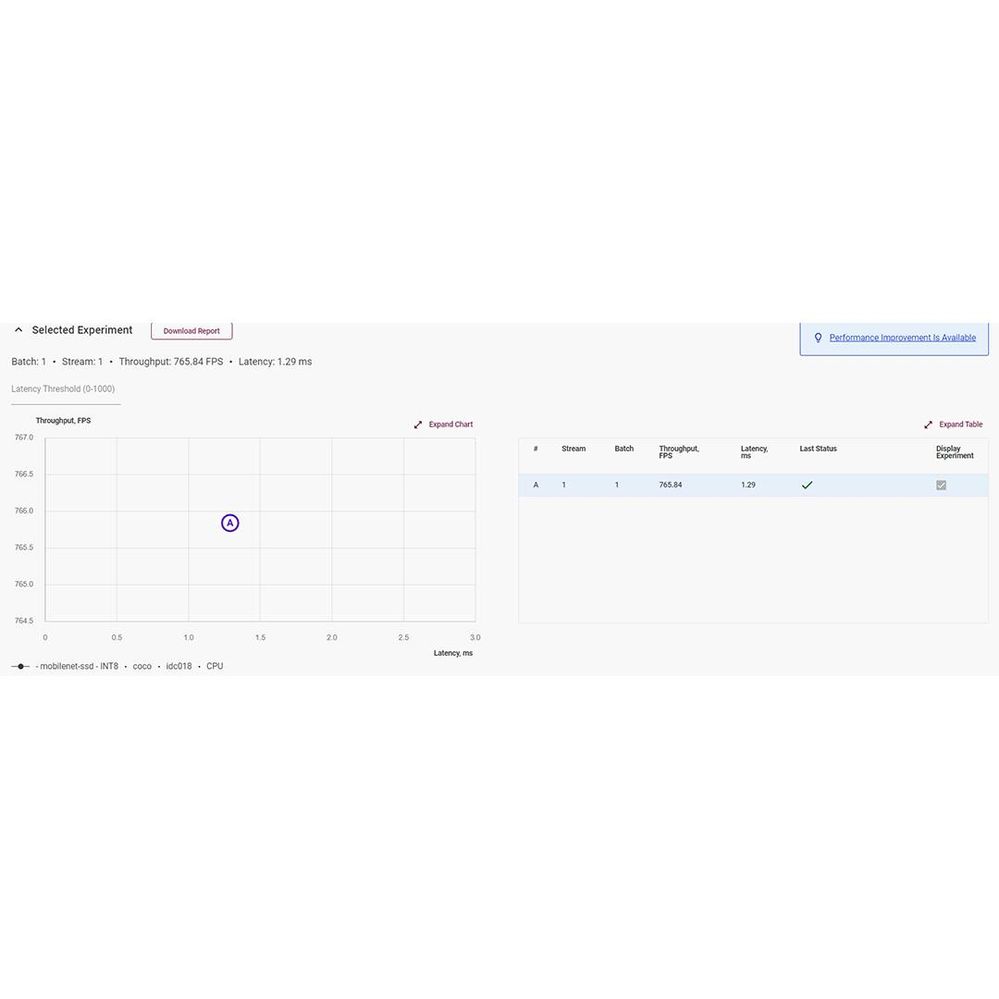

When we calibrate the same experiment to INT8, we see a dramatic performance boost. Throughput nearly doubles to 765.84 frames per second, and latency is cut in half to 1.29 milliseconds.

At INT8, performance roughly doubles.
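If you'd like to check the "roughly doubles" claim yourself, here's a quick sketch that computes the gains from the figures quoted above. The FP32 throughput appears in this article as both 383.83 and 386.83 frames per second, so the sketch computes the ratio for each. The helper name `speedup` is ours, purely for illustration.

```python
# Figures quoted in this article (Intel Xeon 6258R, same model).
FP32_LATENCY_MS = 2.58
INT8_LATENCY_MS = 1.29
INT8_FPS = 765.84
# The article quotes the FP32 throughput as both 383.83 and 386.83 FPS,
# so the ratio is computed for each value rather than picking one.
FP32_FPS_CANDIDATES = (383.83, 386.83)


def speedup(before: float, after: float) -> float:
    """Return how many times larger `after` is than `before`."""
    return after / before


# Throughput: higher is better, so the gain is INT8 / FP32.
throughput_gains = [speedup(fps, INT8_FPS) for fps in FP32_FPS_CANDIDATES]
# Latency: lower is better, so the gain is FP32 / INT8.
latency_gain = speedup(INT8_LATENCY_MS, FP32_LATENCY_MS)

for fps, gain in zip(FP32_FPS_CANDIDATES, throughput_gains):
    print(f"FP32 {fps} FPS -> INT8 {INT8_FPS} FPS: {gain:.2f}x throughput")
print(f"Latency {FP32_LATENCY_MS} ms -> {INT8_LATENCY_MS} ms: {latency_gain:.2f}x faster")
```

Whichever baseline you use, the throughput gain lands just under 2x, and the latency is halved.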

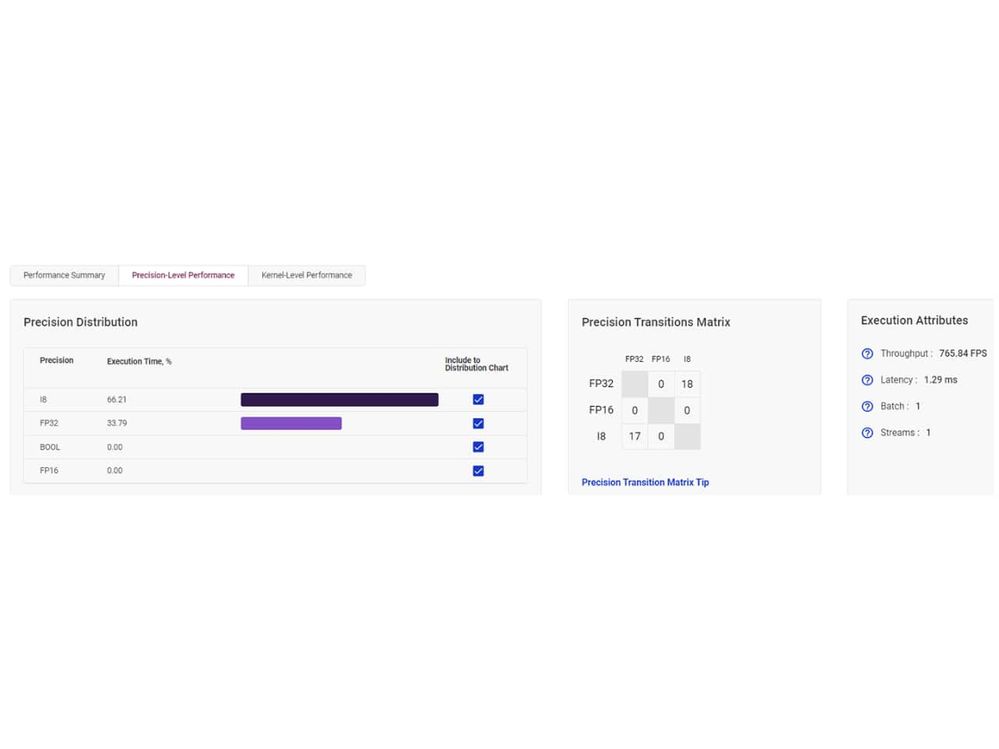

Calibration doesn’t switch every operation to INT8. FP32 precision is still in the mix. During calibration, the workbench tries multiple combinations of FP32 and INT8 and measures how each one performs. The process can take a while, but when it’s done, you’ll have a hybrid model that strikes the best balance between the two precision levels.

Calibration mixes precision levels to create the best possible performance.
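To get a feel for what moving a value from FP32 to INT8 means, here's a minimal sketch of symmetric quantization in plain Python. It's an illustration of the general idea only, not how the workbench calibrates a model. The function names and the sample weights are made up for this example.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to the range [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale factor maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map INT8 codes back to approximate float values."""
    return [q * scale for q in quantized]


# Hypothetical FP32 weights, chosen only for illustration.
weights = [0.82, -1.27, 0.003, 0.5, -0.64]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale={scale:.4f}, max round-trip error={max_error:.5f}")
```

Each value is stored as a small integer plus a shared scale, which is cheaper to compute with but loses a little detail on the way back. That rounding error is one reason a hybrid model that keeps some operations at FP32 can make sense.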

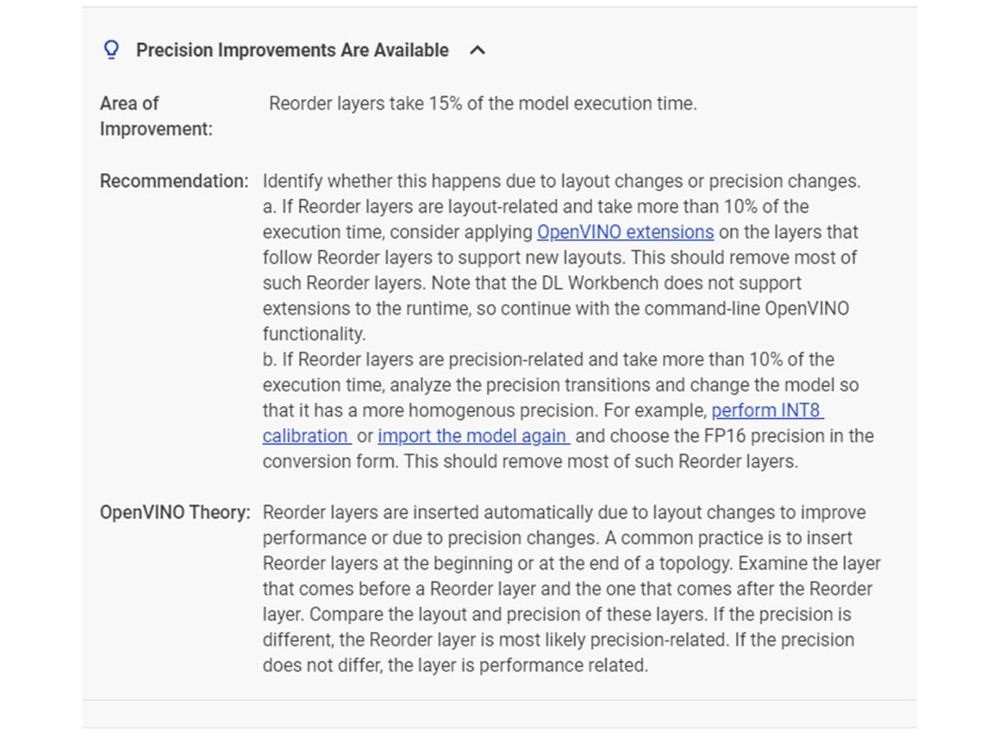

Deep Learning Workbench identifies inference inefficiencies and suggests improvements.

Step two: Package a TensorFlow model for deployment

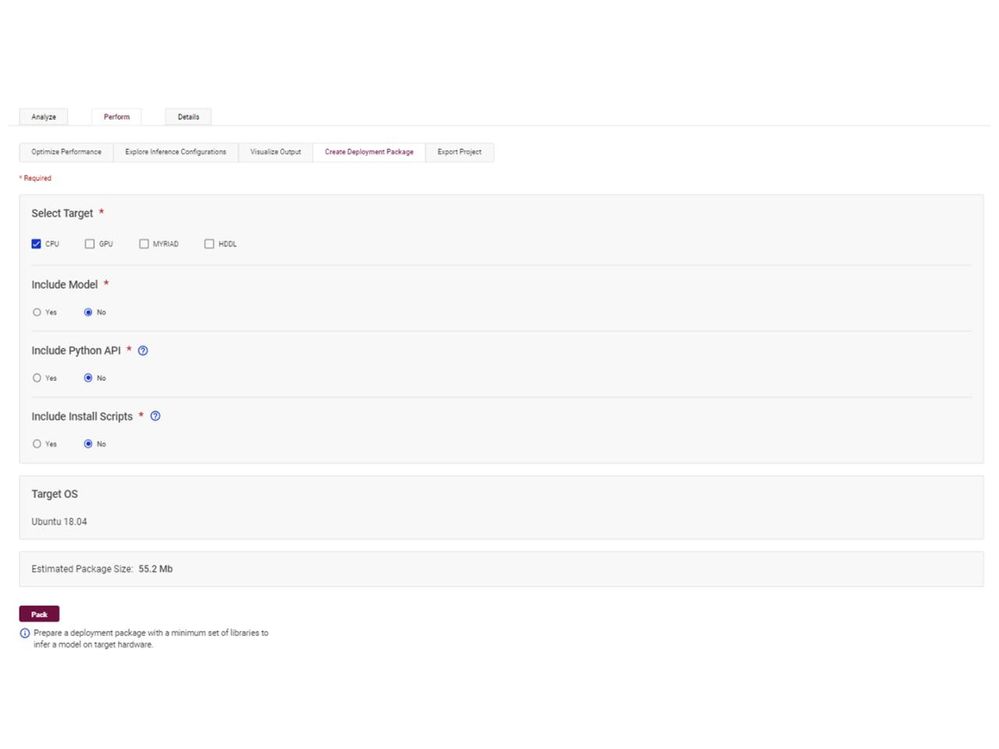

Once we reach the right balance of throughput and latency, we can pack our model for deployment. All we have to do is go to the pack tab, then choose our target hardware and what we want to include in the package. It’s that easy.

The workbench automatically packages your optimized and calibrated model.

Conclusion

That’s the end of our three-part series on creating a deployment-ready inference model using OpenVINO™ Deep Learning Workbench and the Intel® DevCloud for the Edge. Now you know how to optimize a TensorFlow model and run it on any Intel® architecture, and all you need is a web browser!

Learn more:

- Read INT8 Calibration

- Read Build Your Application with Deployment Package

- Get started with OpenVINO toolkit and Deep Learning Workbench

- Explore Deep Learning Workbench documentation

- Watch the video – Introduction to OpenVINO™ Deep Learning Workbench

- Watch the video – Get started with the Deep Learning Workbench

- Watch the video – OpenVINO™ Deep Learning WorkBench on Intel® DevCloud for the Edge

Notices and Disclaimers

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

UPDATED 04/27/2022 - Updated several links

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
