Atul Kwatra is the director of the Systems Architecture Lab and chief technologist for Hardware/Software Co-Design in Intel Labs.

Highlights:

- Intel launches 4th Gen Intel® Xeon® Scalable Processor (code-named Sapphire Rapids).

- The next-generation processor features system architecture innovations from Intel Labs, including Intel architecture communications technologies, Intel® Accelerator Interfacing Architecture, Intel® Data Streaming Accelerator, Intel® Scalable I/O Virtualization and new capabilities in Intel® Virtualization Technology for Directed I/O.

- Intel Labs’ technological contributions enable increased efficiency, reliability, security and performance.

The 4th Gen Intel® Xeon® Scalable processors represent Intel’s biggest data center platform advancement. Intel’s latest Xeon Scalable processor delivers leadership performance through a purpose-built, workload-first approach that combines optimized software, an open ecosystem and the most built-in accelerators of any CPU on the market for key workloads such as AI, analytics, networking, storage and high-performance computing (HPC).

Intel’s 4th Gen Intel Xeon Scalable processors are built on Intel 7 process technology and feature Intel’s new Performance-core microarchitecture, which is designed for speed and pushes the limits of low-latency and single-threaded application performance. The 4th Gen Intel Xeon Scalable processor delivers the industry’s broadest range of data center-relevant accelerators, including new instruction set architecture extensions and integrated IP, to increase performance across a wide range of customer workloads and usages. This processor family also introduces a brand-new microarchitecture to the data center and increases the core count to as many as 60 cores.

This new technology was made possible, in part, by contributions from Intel Labs, Intel’s research organization that delivers breakthrough technologies for Intel and the industry at large. The system architecture enhancements span five key areas: Intel architecture communications technologies (ICT), Intel® Accelerator Interfacing Architecture (Intel® AIA), Intel® Data Streaming Accelerator (Intel® DSA), Intel® Scalable I/O Virtualization (Intel® S-IOV) and new capabilities in Intel® Virtualization Technology for Directed I/O (Intel® VT-d).

Intel Architecture Communications Technologies

Many of the Intel architecture communications technology (ICT) features that appear in 4th Gen Intel Xeon Scalable processors were driven by Intel Labs. These enhancements include the architecture definition and instruction set extensions (ISE) for cache line demote (CLDEMOTE), an instruction to increase processor cache performance. Using CLDEMOTE, a system can free up the cache levels closest to the core and enable efficient cross-core data sharing by pushing cache lines directly to the last-level cache (LLC). This enables faster load operations on data structures shared between processor cores, such as when a large compute problem has been partitioned and parallelized across several cores. This work also included simulation modeling and use-case evaluations for the instruction, which is documented in Intel’s Software Developer’s Manual (SDM).
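To make the cross-core sharing benefit concrete, here is a deliberately simplified Python model. It is not Intel’s simulator and not cycle-accurate: it assumes a toy hierarchy with one private cache per core plus a shared LLC, and the class and method names are hypothetical. A consumer core that loads a line the producer has demoted finds it in the LLC instead of having to snoop it out of the producer’s private cache. In real code, CLDEMOTE is issued as an instruction (or compiler intrinsic) and is only a hint that hardware may ignore.

```python
class ToyCacheHierarchy:
    """Hypothetical model: one private cache per core plus a shared last-level cache."""

    def __init__(self, num_cores: int) -> None:
        self.private = [set() for _ in range(num_cores)]
        self.llc = set()

    def store(self, core: int, line: int) -> None:
        # A write leaves the only copy of the line in the writer's private cache.
        self.llc.discard(line)
        for cache in self.private:
            cache.discard(line)
        self.private[core].add(line)

    def cldemote(self, core: int, line: int) -> None:
        # Model of the CLDEMOTE hint: push the line from the private cache to the LLC.
        if line in self.private[core]:
            self.private[core].remove(line)
            self.llc.add(line)

    def load(self, core: int, line: int) -> str:
        """Return where the requesting core found the line."""
        if line in self.private[core]:
            return "private_hit"
        if line in self.llc:
            self.private[core].add(line)  # the LLC keeps its copy in this model
            return "llc_hit"
        for cache in self.private:
            if line in cache:
                # Cross-core snoop: pull the line out of another core's private cache.
                cache.discard(line)
                self.private[core].add(line)
                return "cross_core_snoop"
        self.private[core].add(line)
        return "memory"


without_demote = ToyCacheHierarchy(num_cores=2)
without_demote.store(core=0, line=0x40)
print(without_demote.load(core=1, line=0x40))  # cross_core_snoop

with_demote = ToyCacheHierarchy(num_cores=2)
with_demote.store(core=0, line=0x40)
with_demote.cldemote(core=0, line=0x40)
print(with_demote.load(core=1, line=0x40))  # llc_hit
```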

Intel Labs researchers also spearheaded Intel’s first robust multi-threaded simulation environments for modeling CLDEMOTE and future instructions, and these environments are also used in related work. This large undertaking resulted in 46+ invention disclosure (IDF) filings and several research papers, including Intel’s first research paper on CLDEMOTE and Intel® Dynamic Load Balancer (Intel® DLB).

The Intel DLB accelerator offloads queue management from the core, eliminating the need for shared locks and improving efficiency, especially in communications processing applications.
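The lock-free property comes from how work is distributed. The sketch below is a hypothetical software model of flow-pinned ("atomic") scheduling that assumes a static flow-to-consumer mapping; Intel DLB performs this balancing in hardware and, as we understand it, can also move flows between consumers as load changes and offers other scheduling modes. Because every event of a flow reaches the same consumer, per-flow state is only ever touched by one core and needs no shared lock.

```python
from collections import deque


class ToyAtomicScheduler:
    """Hypothetical model: events of one flow always go to one consumer queue."""

    def __init__(self, num_consumers: int) -> None:
        self.queues = [deque() for _ in range(num_consumers)]

    def consumer_for(self, flow_id: int) -> int:
        # Static mapping (an assumption); DLB itself balances flows dynamically.
        return flow_id % len(self.queues)

    def enqueue(self, flow_id: int, event: str) -> None:
        self.queues[self.consumer_for(flow_id)].append((flow_id, event))


def drain(scheduler: ToyAtomicScheduler, consumer: int, flow_state: dict) -> None:
    """Process one consumer's queue; flow_state is private to that consumer."""
    queue = scheduler.queues[consumer]
    while queue:
        flow_id, event = queue.popleft()
        # No lock: no other consumer ever receives events for this flow_id.
        flow_state.setdefault(flow_id, []).append(event)


sched = ToyAtomicScheduler(num_consumers=4)
for i, flow in enumerate([4, 5, 4, 6, 5, 4]):
    sched.enqueue(flow, f"pkt{i}")

states = [{} for _ in range(4)]
for consumer in range(4):
    drain(sched, consumer, states[consumer])
print(states)  # per-consumer state; each flow appears under exactly one consumer
```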

Intel Labs also collaborated on several other enhancements, including:

- 5G instruction set architecture (ISA) – bringing the FP16 data type to the AVX-512 family of instructions for the first time, focusing specifically on signal processing applications for communications.

- Converged telemetry framework (CTF) – enabling enhanced no-overhead telemetry capabilities for Intel platforms.

- DDIO.Next – enhancing the Data Direct I/O (DDIO) feature to work efficiently on Sapphire Rapids and future processors.
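The benefit of FP16 in a 512-bit vector comes down to simple arithmetic: halving the element width doubles the number of lanes each instruction processes, at the cost of precision. The Python sketch below uses the standard `struct` module’s IEEE 754 half-precision format (`'e'`) as a stand-in for the hardware data type; it does not execute any AVX-512 instructions.

```python
import struct

REGISTER_BITS = 512  # width of one AVX-512 (ZMM) vector register


def lanes(element_bits: int) -> int:
    """Number of elements of the given width that fit in one register."""
    return REGISTER_BITS // element_bits


fp32_lanes = lanes(32)  # single precision
fp16_lanes = lanes(16)  # half precision: twice as many lanes

# Pack one register's worth of half-precision samples ('e' = IEEE 754 binary16).
samples = [0.1 * i for i in range(fp16_lanes)]
packed = struct.pack(f"<{fp16_lanes}e", *samples)

# Precision cost: 0.1 rounds to the nearest representable binary16 value.
half_of_tenth = struct.unpack("<e", struct.pack("<e", 0.1))[0]
print(fp32_lanes, fp16_lanes, len(packed) * 8, half_of_tenth)  # 16 32 512 0.0999755859375
```

For signal processing workloads that tolerate roughly three significant decimal digits, that trade doubles the per-instruction throughput of FP32 code.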

Intel® Accelerator Interfacing Architecture

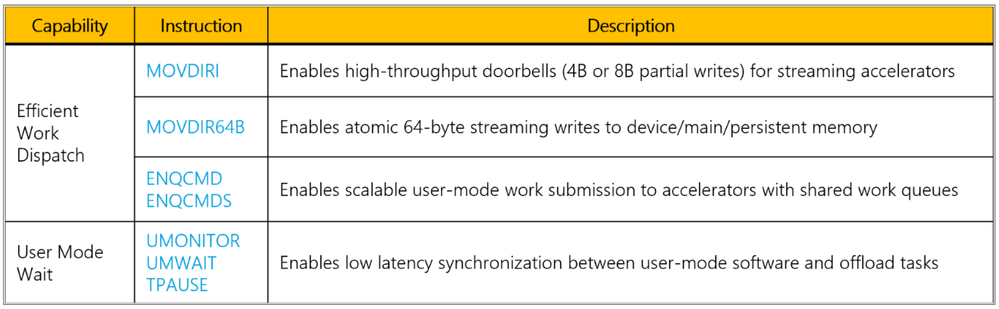

Representing Intel Labs, the Systems Architecture Lab (SAL) worked on new instructions to improve the efficiency of submitting work to, and synchronizing work between, the compute cores and the dedicated accelerators. This Intel® Accelerator Interfacing Architecture (Intel® AIA) supports native dispatch, signaling and synchronization from user space. This significant architecture enhancement also enables a coherent, shared address space between the cores and acceleration engines and allows the accelerators to be shared concurrently by processes, containers and virtual machines (VMs). The SAL team has been working toward new Intel architecture instructions (Figure 1) and CPU infrastructure, along with shared virtual memory (SVM) and scalable I/O virtualization, to enable:

- Low overhead user-mode offload, including task dispatch and synchronization.

- A uniform view of memory between the application and the tasks it offloads to the accelerator.

- Efficient sharing of accelerators across applications, containers and virtual machines.

Figure 1. New Intel AIA instructions formulated by the Systems Architecture Lab.
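Intel AIA includes instructions such as MOVDIR64B and ENQCMD, which write a 64-byte work descriptor to a device’s work queue; ENQCMD, used for shared work queues, also reports whether the device accepted the command, so user-mode software is expected to retry. The Python sketch below is a hypothetical model of that submit-and-retry pattern only. It does not issue real instructions, omits details such as the PASID that ENQCMD attaches to the command, and the `on_retry` hook stands in for whatever back-off policy a real submitter would use.

```python
from collections import deque
from typing import Callable

DESCRIPTOR_BYTES = 64  # ENQCMD and MOVDIR64B each write one 64-byte command


class ToySharedWorkQueue:
    """Hypothetical model of a device shared work queue reached via ENQCMD."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries = deque()

    def enqcmd(self, descriptor: bytes) -> bool:
        """Model of ENQCMD's status: True if the device accepted the command."""
        if len(descriptor) != DESCRIPTOR_BYTES:
            raise ValueError("work descriptors are 64 bytes")
        if len(self.entries) >= self.capacity:
            return False  # queue full: the submitter must retry
        self.entries.append(descriptor)
        return True

    def device_consume(self) -> None:
        """Stand-in for the device pulling one descriptor off the queue."""
        if self.entries:
            self.entries.popleft()


def submit(wq: ToySharedWorkQueue, descriptor: bytes, max_retries: int = 8,
           on_retry: Callable[[], None] = lambda: None) -> int:
    """User-mode retry loop; returns how many retries were needed."""
    for attempt in range(max_retries + 1):
        if wq.enqcmd(descriptor):
            return attempt
        on_retry()  # a real submitter might back off here, e.g. with TPAUSE
    raise RuntimeError("shared work queue still full after retries")


wq = ToySharedWorkQueue(capacity=2)
desc = bytes(DESCRIPTOR_BYTES)
print(submit(wq, desc), submit(wq, desc))            # 0 0
print(submit(wq, desc, on_retry=wq.device_consume))  # 1
```

Because the submission path involves no system call, many processes, containers or VMs can share one queue while each retries independently.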

Intel® Data Streaming Accelerator

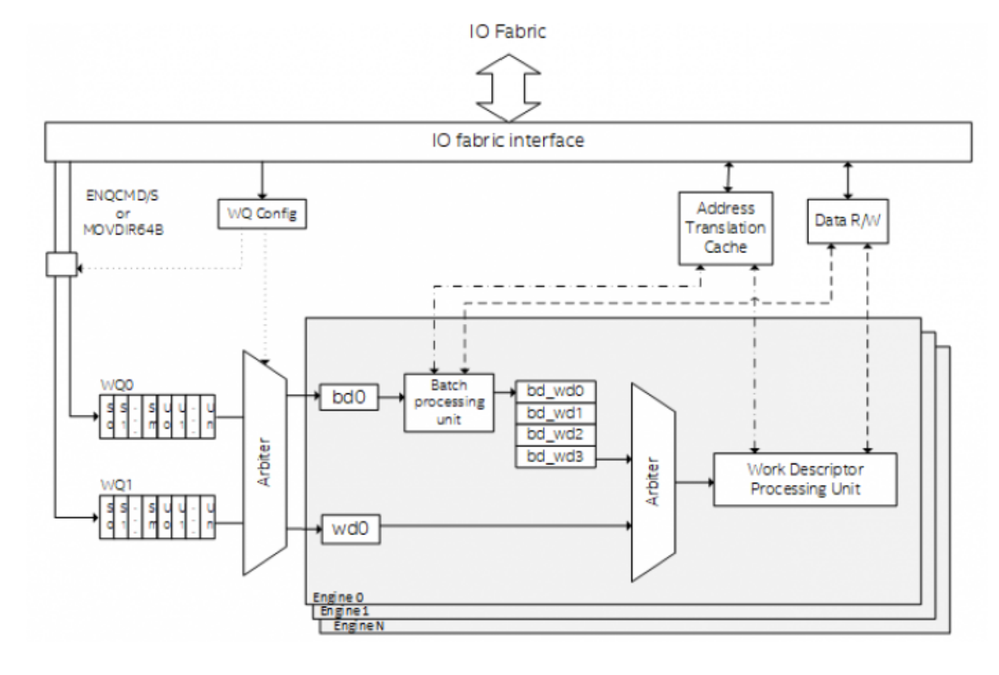

The Intel® Data Streaming Accelerator (Intel® DSA) is designed to offload common data movement and data transformation tasks that cause overhead in data center-scale deployments, handling these tasks more efficiently to increase overall workload performance. The Intel DSA can move and process data across different memory tiers (e.g., DRAM or CXL memory), CPU caches and peer devices (e.g., storage and network devices or devices connected via a non-transparent bridge (NTB)). Intel DSA optimizes streaming data movement and transformation operations with up to four device instances per socket. Figure 2 illustrates the high-level blocks within the device at a conceptual level.

Figure 2: Abstracted internal block diagram of Intel DSA. The I/O fabric interface is used for receiving downstream work requests from clients and for upstream read, write and address translation operations.
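Software hands work to Intel DSA as 64-byte descriptors, submitted with MOVDIR64B or ENQCMD. As an illustration, the Python sketch below packs a memory-move descriptor. The field layout, opcode and flag values follow our reading of the Linux `idxd` user-space header (`include/uapi/linux/idxd.h`) and are assumptions to verify against the published Intel DSA specification; the addresses are arbitrary placeholders. In practice, applications would normally rely on a library or the kernel driver rather than packing bytes by hand.

```python
import struct

# Assumptions: values as they appear in Linux's include/uapi/linux/idxd.h;
# check them against the Intel DSA architecture specification before use.
DSA_OPCODE_MEMMOVE = 0x03
IDXD_OP_FLAG_CRAV = 0x0004  # completion record address valid
IDXD_OP_FLAG_RCR = 0x0008   # request a completion record


def memmove_descriptor(pasid: int, src: int, dst: int, size: int,
                       completion_addr: int, priv: bool = False) -> bytes:
    """Pack a 64-byte DSA memory-move descriptor (little-endian)."""
    dword0 = (pasid & 0xFFFFF) | (int(priv) << 31)  # PASID in bits 19:0, privilege in bit 31
    dword1 = (IDXD_OP_FLAG_CRAV | IDXD_OP_FLAG_RCR) | (DSA_OPCODE_MEMMOVE << 24)
    fixed = struct.pack("<IIQQQIHH",
                        dword0, dword1,             # PASID/privilege, flags/opcode
                        completion_addr, src, dst,  # 64-bit addresses
                        size,                       # transfer size in bytes
                        0, 0)                       # interrupt handle, reserved
    return fixed + bytes(64 - len(fixed))           # operation-specific bytes unused here


desc = memmove_descriptor(pasid=1, src=0x1000, dst=0x2000, size=4096,
                          completion_addr=0x3000)
print(len(desc), desc[7])  # 64 3
```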

In the development of the Intel DSA, SAL researchers established a technology roadmap, defined the landing zone and architecture, drove architecture convergence, and developed and published the external architecture specifications (EAS). The SAL team also worked on customer disclosures and provided guidance on various software-enabling activities and architectural support for functional and PnP debugging, including publishing a DSA User Guide. Lastly, the group was involved in developing an initial Linux driver and the Intel® DSA Performance Micros, an open source performance microbenchmark tool widely used for DSA PnP characterization.

Intel® Scalable I/O Virtualization

Intel® Scalable I/O Virtualization (Intel® S-IOV) technology aims to enable efficient and scalable sharing of I/O devices, such as network controllers, storage controllers, graphics processing units and other hardware accelerators, across many containers or virtual machines. Intel S-IOV is a significant enhancement that not only provides increased scalability at a lower cost than today’s standard, but does so without sacrificing the performance benefits of the current single root I/O virtualization (SR-IOV) architecture. Additionally, the flexibility of S-IOV enables simpler device hardware designs. This flexibility also addresses limitations associated with direct device assignment, such as generational compatibility, live migration and memory over-commitment.
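As we understand the OCP S-IOV specification, a device exposes lightweight assignable device interfaces (ADIs), for example individual work queues whose DMA is tagged with a PASID, and host software composes these into virtual devices. The Python sketch below is a hypothetical model of that composition step only; it uses no real driver or VMM API. It shows a pool of queues being carved into differently sized virtual devices and returned to the pool, instead of relying on a fixed set of SR-IOV virtual functions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ADI:
    """Assignable device interface: one device queue plus the PASID tagging its DMA."""
    queue: int
    pasid: int


class ToySiovDevice:
    """Hypothetical device whose queues are composed into virtual devices in software."""

    def __init__(self, num_queues: int) -> None:
        self.free_queues = list(range(num_queues))
        self.vdevs = {}

    def compose_vdev(self, name: str, pasid: int, queues: int) -> list:
        """Build a virtual device from `queues` ADIs, all tagged with one PASID."""
        if queues > len(self.free_queues):
            raise RuntimeError("not enough free queues on the device")
        adis = [ADI(self.free_queues.pop(0), pasid) for _ in range(queues)]
        self.vdevs[name] = adis
        return adis

    def release_vdev(self, name: str) -> None:
        """Return a virtual device's queues to the pool, e.g. when a VM shuts down."""
        for adi in self.vdevs.pop(name):
            self.free_queues.append(adi.queue)


dev = ToySiovDevice(num_queues=8)
vm1 = dev.compose_vdev("vm1", pasid=10, queues=2)
dev.compose_vdev("container-a", pasid=11, queues=1)
print(vm1, len(dev.free_queues))
```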

Intel is leading the industry into the next generation of I/O virtualization by contributing the S-IOV architecture to the Open Compute Project (OCP) as the S-IOV R1 (revision 1) standard. Intel is also working with other industry partners to develop the next revision of the OCP S-IOV architecture (S-IOV R2). This innovative architecture was conceived in the SAL and developed in collaboration with other Intel software teams. Intel Labs researchers developed the VT-d architecture enhancements required to support S-IOV. SAL also worked with engineering teams to help implement Intel S-IOV in device hardware. This work also included the development of a hypersim-based S-IOV SDV and a Linux-based S-IOV prototype implementation to validate the architecture and assist with software enabling.

Intel® Virtualization Technology for Directed I/O

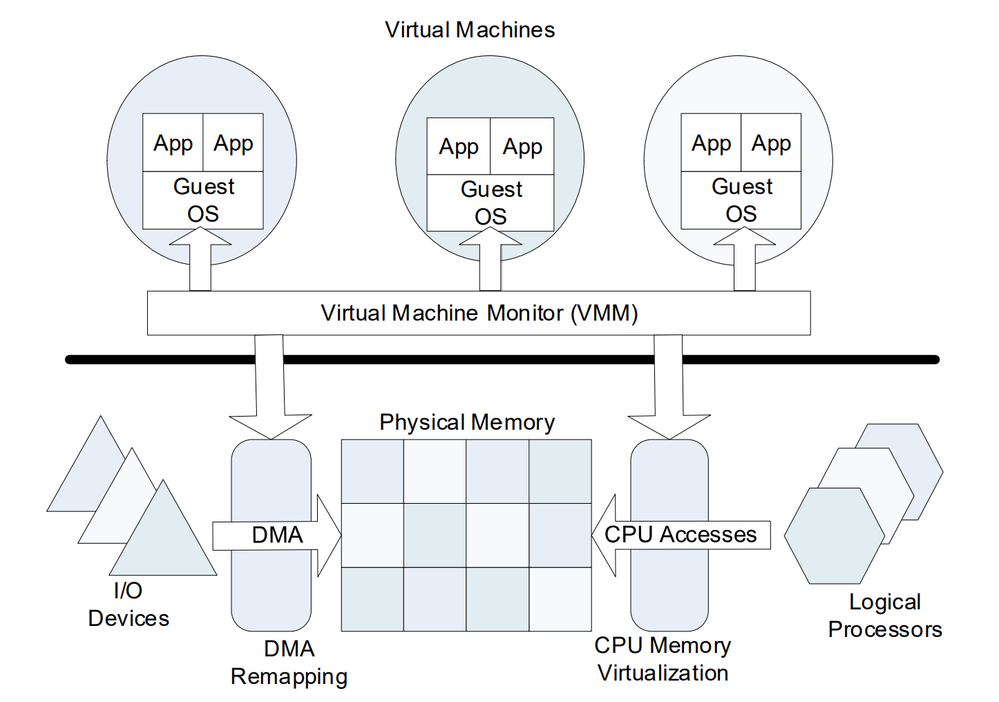

Intel® Virtualization Technology (Intel® VT) consists of technology components that support the virtualization of platforms based on Intel processors, enabling multiple operating systems and applications to run in independent partitions. This hardware-based virtualization solution, along with virtualization software, enables multiple usages such as server consolidation, activity partitioning, workload isolation, embedded management, legacy software migration and disaster recovery. Furthermore, Intel® VT for Directed I/O (Intel® VT-d) allows addresses in incoming I/O device memory transactions to be remapped to different host addresses. This provides virtual machine monitor (VMM) software with:

- Improved reliability and security through device isolation using hardware-assisted remapping.

- Improved I/O performance and availability by direct assignment of devices.

Figure 3. A depiction of how system software interacts with hardware support for both processor-level virtualization and Intel® VT for Directed I/O.
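The remapping and isolation described above can be pictured with a small model. The Python sketch below assumes a single-level page table per domain and 4 KiB pages, and its class names are hypothetical; real VT-d uses multi-level tables, context entries and translation caches. A device’s DMA address (IOVA) is looked up in the table of the domain the device is attached to, so a device assigned to one VM simply has no translation for another VM’s memory.

```python
PAGE_SHIFT = 12                      # 4 KiB pages (assumption for this model)
PAGE_MASK = (1 << PAGE_SHIFT) - 1


class DmaFault(Exception):
    """Raised when a DMA request has no valid translation."""


class ToyIommu:
    """Hypothetical single-level model of DMA remapping: one I/O page table per domain."""

    def __init__(self) -> None:
        self.device_domain = {}  # requester ID (bus/device/function) -> domain
        self.page_tables = {}    # domain -> {IOVA page number: host page number}

    def attach(self, requester_id: int, domain: str) -> None:
        self.device_domain[requester_id] = domain
        self.page_tables.setdefault(domain, {})

    def map(self, domain: str, iova: int, hpa: int) -> None:
        self.page_tables[domain][iova >> PAGE_SHIFT] = hpa >> PAGE_SHIFT

    def translate(self, requester_id: int, iova: int) -> int:
        """Remap a device's IOVA to a host physical address, or fault."""
        domain = self.device_domain.get(requester_id)
        if domain is None:
            raise DmaFault(f"unknown requester {requester_id:#06x}")
        host_page = self.page_tables[domain].get(iova >> PAGE_SHIFT)
        if host_page is None:
            raise DmaFault(f"unmapped IOVA {iova:#x} for requester {requester_id:#06x}")
        return (host_page << PAGE_SHIFT) | (iova & PAGE_MASK)


iommu = ToyIommu()
iommu.attach(0x0100, domain="vm1")  # bus 1, device 0, function 0
iommu.attach(0x0200, domain="vm2")  # bus 2, device 0, function 0
iommu.map("vm1", iova=0x1000, hpa=0x7F000)
print(hex(iommu.translate(0x0100, 0x1234)))  # 0x7f234
try:
    iommu.translate(0x0200, 0x1234)  # vm2's device has no mapping for vm1's memory
except DmaFault as err:
    print("blocked:", err)
```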

Intel Labs researchers contributed to several new Intel VT-d features, including:

- Shared virtual memory: Includes support for address translation services (ATS), process address space IDs (PASIDs) and S-IOV, allowing CPUs and XPUs to collaborate more efficiently.

- Access/Dirty bit support in second-stage page-tables: Required to support VM migration.

- Nested-IOVA: Enables isolation and protection within a VM, rather than only the inter-VM isolation previously offered.

- On-the-fly page-table switch: For kernel soft reboot (KSR) usage. This allows the software to provide a new set of page-tables while the input-output memory management unit (IOMMU) actively performs address translation.

- VT-d 3.x specifications: Authored high-quality specifications that are easier to validate than those of all prior generations.
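Two of these features, nested translation and Access/Dirty bits in second-stage page tables, fit together naturally. The hypothetical Python model below chains a guest-owned first stage (IOVA to guest-physical address) with a host-owned second stage (guest-physical to host-physical address) and sets accessed/dirty flags in the second stage as DMA occurs, so that migration software can find the pages a device has written. It is a sketch under simplifying assumptions (flat dictionaries, 4 KiB pages, no translation caching), not the VT-d table format.

```python
PAGE_SHIFT = 12
OFFSET_MASK = (1 << PAGE_SHIFT) - 1


class Pte:
    """Second-stage entry: host page frame plus accessed/dirty flags."""

    def __init__(self, frame: int) -> None:
        self.frame = frame
        self.accessed = False
        self.dirty = False


class ToyNestedTranslation:
    """Hypothetical two-stage model of nested DMA translation."""

    def __init__(self) -> None:
        self.first_stage = {}   # guest-owned: IOVA page -> guest-physical page
        self.second_stage = {}  # host-owned: guest-physical page -> Pte

    def dma(self, iova: int, write: bool) -> int:
        gpa_page = self.first_stage.get(iova >> PAGE_SHIFT)
        if gpa_page is None:
            raise LookupError("first-stage fault: the guest did not map this IOVA")
        pte = self.second_stage.get(gpa_page)
        if pte is None:
            raise LookupError("second-stage fault: the host did not map this GPA")
        pte.accessed = True
        if write:
            pte.dirty = True  # lets migration software find device-written pages
        return (pte.frame << PAGE_SHIFT) | (iova & OFFSET_MASK)

    def harvest_dirty(self) -> list:
        """Collect dirty guest-physical pages and clear their dirty bits."""
        dirty = sorted(p for p, pte in self.second_stage.items() if pte.dirty)
        for page in dirty:
            self.second_stage[page].dirty = False
        return dirty


t = ToyNestedTranslation()
t.first_stage[0x10] = 0x200          # guest maps IOVA page 0x10 -> GPA page 0x200
t.first_stage[0x11] = 0x201
t.second_stage[0x200] = Pte(0x9000)  # host maps GPA page 0x200 -> HPA page 0x9000
t.second_stage[0x201] = Pte(0x9001)
print(hex(t.dma(0x10080, write=True)))  # 0x9000080
t.dma(0x11000, write=False)
print([hex(p) for p in t.harvest_dirty()], t.harvest_dirty())  # ['0x200'] []
```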

Through contributions like these, and many more, Intel Labs continues to play an integral role in both dreaming up and delivering breakthrough technologies. Such innovations benefit not only Intel products and clients but also the industry as a whole. As a global research organization, Intel Labs fosters university partnerships to anticipate and solve computing challenges of the future. Intel Labs’ broad research scope spans every layer of the technology stack, from data to novel sensing technologies, in order to advance memory, security and storage.

Stay tuned for Part 2 of this series on Intel Labs' contributions to the new Intel® Xeon® Scalable Processor. In the meantime, learn more about Intel Labs' ongoing research at Intel.com.

Technology marketing leader with a passion for connecting customers to Intel value!

