When it’s time to choose a new system to run your modern data center workloads, how do you decide what to buy? You probably use benchmarking as an important factor in identifying a system that will meet your performance requirements. You might assume that a system performing better on benchmark tests will also perform better on your real-world workloads, but is that true?

Performance analysis based on telemetry data shows that popular benchmarks alone may not give a complete picture of a system’s ability to perform on modern real-world workloads.

You can’t sleep in a race car, and you wouldn’t drive an RV on a racetrack. You choose a vehicle based on many factors, not just horsepower. Likewise, you shouldn’t choose systems for your data center based only on benchmarks like SPECrate®2017_int_base. Instead, you should take an active approach to finding additional benchmarks that will effectively analyze CPU performance on workloads similar to yours.

Benchmarks that more closely resemble your workloads are better predictors of how a system will perform on those workloads. Intel has developed a systematic approach to actively choosing your benchmarks:

- Gather telemetry data to better understand the performance needs of your workload.

- Select a benchmark that will exercise system components in a way similar to your workload.

In other words, analyze the “performance fingerprint” of your workload, then analyze the fingerprints of various benchmarks to find the ones that are most similar. That raises a question: what exactly is a performance fingerprint, and how do you measure it?

Performance Fingerprints

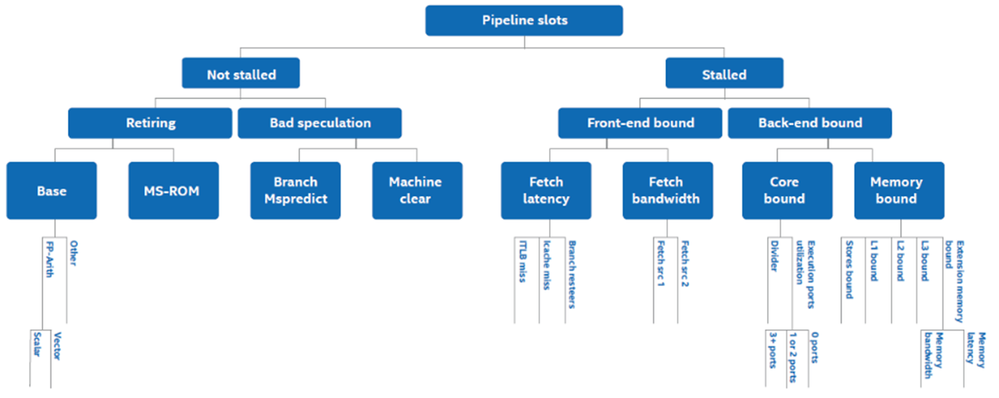

Sophisticated methods have been developed for CPU performance analysis using telemetry data from performance monitoring units (PMUs), which capture underlying microarchitectural events using hardware counters. These events can be used to understand system behavior and the impact of an application on various aspects of the CPU, such as cache, memory, and translation lookaside buffers (TLBs).

Tools such as PerfSpect collect PMU telemetry from the CPU while a workload runs. The problem is the sheer quantity of data the PMUs generate and the tools collect. What do you do with it all? To cut through the noise, Intel uses a top-down approach to performance analysis.

Figure 1 (above). A hierarchical classification tree illustrates top-down performance analysis
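To make the top level of that tree concrete, here is a minimal Python sketch of how raw counter totals collapse into four level-1 fractions. It assumes the event names and 4-wide formulas published for older Intel Core microarchitectures; newer CPUs expose different events (for example TOPDOWN.SLOTS), and the counter values are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class TopDownL1:
    """Top-down level-1 breakdown; each field is a fraction of issue slots."""
    frontend_bound: float
    bad_speculation: float
    retiring: float
    backend_bound: float


def top_down_level1(counters: dict[str, float], width: int = 4) -> TopDownL1:
    """Compute top-down level-1 fractions from raw PMU counter totals.

    Assumption: event names and formulas for older 4-wide Intel Core cores;
    newer microarchitectures expose different events (e.g. TOPDOWN.SLOTS).
    """
    # Total issue slots = pipeline width x unhalted core cycles.
    slots = width * counters["CPU_CLK_UNHALTED.THREAD"]
    # Slots where the back end could accept a uop but the front end delivered none.
    frontend = counters["IDQ_UOPS_NOT_DELIVERED.CORE"] / slots
    # Slots used by uops that eventually retired.
    retiring = counters["UOPS_RETIRED.RETIRE_SLOTS"] / slots
    # Uops issued but never retired, plus slots lost recovering from mispredictions.
    bad_spec = (
        counters["UOPS_ISSUED.ANY"]
        - counters["UOPS_RETIRED.RETIRE_SLOTS"]
        + width * counters["INT_MISC.RECOVERY_CYCLES"]
    ) / slots
    # Back-end bound is the remainder once the other three categories are removed.
    backend = 1.0 - (frontend + bad_spec + retiring)
    return TopDownL1(frontend, bad_spec, retiring, backend)


# Hypothetical counter totals, for illustration only.
counters = {
    "CPU_CLK_UNHALTED.THREAD": 1_000_000,
    "IDQ_UOPS_NOT_DELIVERED.CORE": 600_000,
    "UOPS_RETIRED.RETIRE_SLOTS": 1_200_000,
    "UOPS_ISSUED.ANY": 1_400_000,
    "INT_MISC.RECOVERY_CYCLES": 50_000,
}
result = top_down_level1(counters)
print(f"front-end {result.frontend_bound:.2f}, back-end {result.backend_bound:.2f}")
# prints "front-end 0.15, back-end 0.45"
```

In practice PerfSpect computes top-down metrics for you; the sketch only shows how thousands of raw counts reduce to a handful of fractions that sum to 1.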

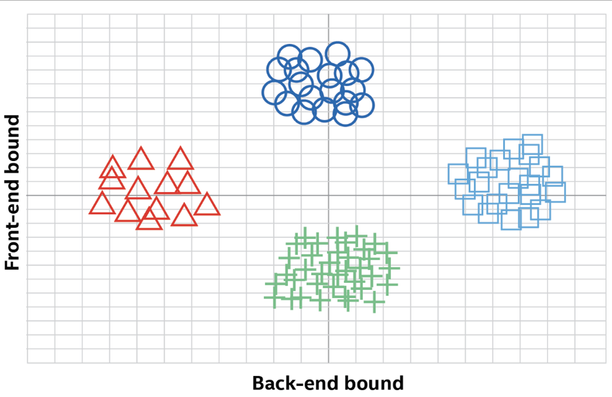

You can read more about the methodology in this technical brief. In short, Intel found that the two principal components that best differentiate workloads are the degree to which a workload is front-end bound on one hand, and the degree to which it is back-end bound on the other. By condensing an array of data down to these two characterizations, we can then plot them on a simple 2D chart, as in this conceptual example:

Plot points that are clustered together represent workloads or benchmarks that have similar fingerprints because they exercise system components in similar ways.
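Once each workload and benchmark is reduced to a (front-end bound, back-end bound) point, “clustered together” can be turned into a ranking. The Python sketch below assumes plain Euclidean distance as the similarity measure, which the approach described here does not specify; the benchmark names and values are hypothetical placeholders, not measured results.

```python
import math

# Hypothetical (front-end bound, back-end bound) fingerprints, expressed as
# fractions of pipeline slots. Placeholder values, not measured results.
benchmarks = {
    "benchmark_a": (0.35, 0.20),
    "benchmark_b": (0.10, 0.55),
    "benchmark_c": (0.15, 0.45),
}
workload = (0.12, 0.50)


def rank_by_similarity(target, candidates):
    """Sort candidate names from closest to farthest from target.

    Assumption: similarity is plain Euclidean distance in the 2D plane.
    """
    return sorted(candidates, key=lambda name: math.dist(target, candidates[name]))


ranking = rank_by_similarity(workload, benchmarks)
print(ranking)  # prints ['benchmark_b', 'benchmark_c', 'benchmark_a']
```

Because both axes are fractions of the same pipeline slots, they share a scale, so the sketch applies no extra normalization; if you add metrics with different units, you might standardize each axis first.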

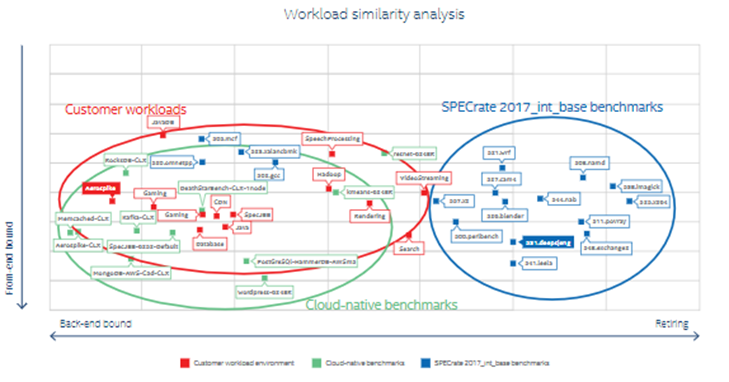

Intel used PerfSpect to extract PMU information and process top-down metrics for dozens of real-world customer workloads and benchmarking tests. The results are plotted below:

Clearly, the real-world customer workloads (red) were much more back-end bound than the SPECrate 2017_int_base benchmarks (blue) and aligned much more closely with the cloud-native benchmarks (green).

Do It Yourself

The method described here for workload similarity analysis is not just theoretical. The code is available, and you can run your own workload-similarity analyses using a collection of open source tools at https://github.com/intel/PerfSpect.

Today’s workloads are complex, and many system components contribute to performance on any given workload. If a benchmark is not similar to your real-world workload, its results will tell you little. If you choose benchmarks with a fingerprint similar to your target workload’s, high performance on those benchmarks will be a good predictor of high performance on your real-world workload. The end result: you can choose systems for your data center based on better information.

To learn more, read Active Benchmarking for Better Performance Predictions.

Approach developed by Harshad Sane - Principal Engineer, Intel Corporation
