Hi,

I am trying to use the DisplayPort sample design on a Stratix 10 board. I have implemented the exact sample project from Intel with the Bitec daughter card but am seeing some issues.

When I plug a Windows PC (7 or 10) into the daughter card, it immediately performs link training and connects to the operating system. Using SignalTap I can see data flowing out of the Clkrec core (VSYNC, HSYNC, DE, Data). When I plug a monitor into the TX port, I can see a Windows desktop, as if it were a second monitor on my Windows PC.

When I plug a Linux machine (or the same hardware, dual-booted) into the DisplayPort RX port, Linux recognizes the DisplayPort sink as a monitor. However, I am not seeing any data come out of the Bitec Clkrec.

Linux is reporting that it found valid modes for the DisplayPort monitor.

Can you help me figure out why the DisplayPort core has no trouble connecting to Windows but has trouble connecting to Linux?

Setup:

OS: CentOS 6

Graphics Card Vendor: NVIDIA

DisplayPort Core: GPU mode enabled, AUX debug enabled, core is set for HBR3 and 4k60 resolution.

Tool: Quartus 19.1

Thanks!

Daniel

Hi Daniel,

I presume your system setup is as shown below. Correct me if I am wrong.

- win/linux CPU -> DP Rx -> DP Tx -> monitor

Does connecting the Windows PC directly to the monitor (via DP cable) work?

Do you have an S10 GX dev kit board to try, to rule out a potential board setup issue?

Have you tried a different brand of DP cable, or a shorter one, to check for a potential signal integrity problem?

Are you using the Stratix 10 Intel FPGA DisplayPort design example?

I believe the issue is not the OS difference but rather the graphics card.

- I presume you are using different graphics cards in the Windows and Linux machines?

- Have you compared the specs of the two graphics cards to find a difference that may be the root cause?

- Have you tried playing around with the Nvidia graphics card settings to see if that helps (e.g. the BPC setting, lowering the video resolution, disabling adaptive sync)?

- Also, do you really need HBR3 (8.1G data rate) for 4Kp60 resolution? Can you try changing the DisplayPort IP to the 4G data rate to see if it works?

- If you are using the DP example design, can you dump the MSA log, as in the attached 4K support sample screenshot?
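As a rough check on whether 4Kp60 really needs HBR3, the arithmetic can be sketched in Python. This is a back-of-the-envelope estimate, not something taken from the design example: it assumes 4 lanes, 8b/10b coding (80% of the raw line rate is payload), uncompressed RGB, and a pixel clock of about 533.25 MHz for 3840x2160 at 60 Hz with reduced blanking. The function names are made up for illustration.

```python
# Rough DisplayPort bandwidth check for 8b/10b link rates (illustrative only).
LINK_RATES_GBPS = {"RBR": 1.62, "HBR": 2.7, "HBR2": 5.4, "HBR3": 8.1}

def payload_gbps(rate_name, lanes=4):
    """Usable payload after 8b/10b coding (80% of the raw line rate)."""
    return LINK_RATES_GBPS[rate_name] * lanes * 0.8

def stream_gbps(pixel_clock_mhz, bpc, components=3):
    """Uncompressed video bandwidth in Gbps for the given bits per colour."""
    return pixel_clock_mhz * 1e6 * bpc * components / 1e9

# Assumption: 3840x2160 at 60 Hz with reduced blanking, ~533.25 MHz pixel clock.
PIXEL_CLOCK_MHZ = 533.25

for bpc in (8, 10):
    need = stream_gbps(PIXEL_CLOCK_MHZ, bpc)
    for rate in ("HBR2", "HBR3"):
        have = payload_gbps(rate)
        print(f"{bpc} bpc needs {need:.2f} Gbps; "
              f"{rate} x4 carries {have:.2f} Gbps; fits={need <= have}")
```

Under these assumptions, 4Kp60 at 8 or 10 bpc fits within four HBR2 lanes, so dropping the IP from HBR3 to a lower rate is a reasonable experiment.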

Thanks.

Regards,

dlim

Hi Daniel,

Thanks for the clarification that you are dual-booting on the same graphics card. That means your hardware setup should be fine, with no signal integrity concern.

In this case, I don't think sending me the debug log will be useful anymore. The most we can prove is that the graphics card is not sending correct data to the DP Rx under Linux.

- DP Rx is just a receiver IP. It cannot control how the DP Tx source (the graphics card) transmits AUX data to it.

- Something odd is happening on the Linux side.

The most direct link I can think of between the graphics card and the OS is the graphics card driver.

- The easiest approach is to upgrade or downgrade the Nvidia Linux driver and see whether that fixes the issue.

- The other possibility is that the graphics card settings differ between Linux and Windows in a way that causes the failure. You may want to cross-check the settings between the two OSes.

- Finally, if you have confirmed that the graphics card settings are the same in both OSes and the video output still fails, my suggestion is to raise the issue with Nvidia and ask for their help in understanding the driver differences between Windows and Linux.

Thanks.

Regards,

dlim

Hi Daniel,

The MSA log dump I asked for earlier is meaningful only if DP link training has already happened and you just need extra information, such as validating the BPC setting, monitoring the link BER, or checking the MSA lock status.

But from your description, it looks like the DP core is not transferring any data at all, and link training has either stopped or never started.

- For AUX bus transaction monitoring, I use an external protocol analyzer to decode the AUX transactions into meaningful results.

- For instance, I use the UNIGRAF DPA-400 to decode and debug DP AUX transactions.

- https://www.unigraf.fi/product/dpa-400-displayport-aux-channel-monitor/

- Attached is a sample log file for your reference. With logs like this, I can compare the passing case against the failing case to gradually isolate the issue.

Thanks.

Regards,

dlim

Hi Dlim,

I don't have a Unigraf analyzer, but I can provide the AUX logs that the NIOS II prints out. Would that be useful? Would you like both the working Win7 and the non-working CentOS logs?

Thanks,

Daniel

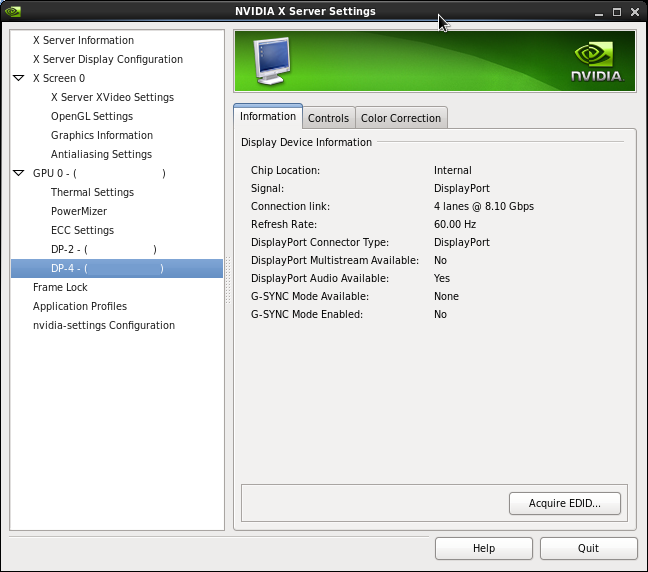

Here is the NVIDIA Control Panel showing that the DP output is using 4 lanes all at 8.1 Gbps.

Hi Dlim,

- I have tried this and it didn’t seem to make a difference

- I am recompiling with the lower data rate set to try it out. Are the only changes to the IP core data rate settings or do I need to make changes in the RTL as well?

- I am downloading Quartus Pro v20.2 (Stratix 10 file is over 20 GB) and will try that out once it finishes downloading.

- We are targeting, and doing the bulk of the testing with, an NVIDIA RTX 6000 graphics card. I have also been testing with a GeForce 660 Ti. The monitor I am using in conjunction with the IP core is a Dell U2718Q.

- The logs are attached.

The AUX logs are for Linux and Windows for both the GeForce 660 Ti and the RTX 6000. The only combination that has data flowing out of the clkrec core is the GeForce 660 Ti running Win7.

Thanks,

Daniel

Hi dlim,

Installing the Stratix 10 device file for Quartus Pro v20.2 is taking longer than I expected. It is 20 GB and exceeds the download file size allowed by my company. Are there plans to break up these larger device files into multiple parts in the future releases?

Thanks,

Daniel

Hi dlim,

I have successfully installed Quartus v20.2 on my PC. I created a new DisplayPort sample project in Quartus v20.2 and merged in the new IP cores and software into my current design. When I try to compile it I have been experiencing an error when "Route" reaches 53%. I am not sure why this is happening now. I am attaching the stack trace displayed when crashing.

Originally I thought this was due to some port names being renamed from the cores. I cleaned that up in my RTL and it is still giving me this problem.

Any clue why this might be happening?

Thanks,

Daniel

Hi dlim,

I got the project to compile but it stopped 2 more times in different spots with similar stack traces on the way.

When I loaded the compiled design from v20.2 I see similar results. Linux recognizes the DP interface but the clkrec core does not output anything.

I am attaching the AUX log from the v20.2 load connecting to Linux on the RTX 6000.

Thanks,

Daniel

Hi Daniel,

Sorry for the late response; the Intel forum underwent a major maintenance update last week.

A lot of things got mixed up and some information was lost as well. Please give me some time to sort things out internally and finish the manual cleanup first.

Also, sorry, I may need to ask you to resend some information that was lost during the maintenance update.

I will get in touch with you again next week to resume our debug discussion, and I will let you know then which information I still need.

Thanks.

Regards,

dlim

Hi Daniel,

Sorry, I am back. I have been reading your recent updates, and it looks like this is no longer a simple Windows vs. Linux issue.

- 660 Ti (Win 7) - pass with video output

- 660 Ti (Ubuntu) - failed with no video output ?

- RTX 6000 (Win 10) - failed with no video output ?

- RTX 6000 (CentOS) - failed with no video output ?

- For the Quartus v20.2 compilation error issue

  - This looks like a Quartus internal error, which is a totally new issue here.

  - Does it happen only in your own design, or does it also affect the DisplayPort example design?

- For the no video output debug

  - I don't have your exact graphics card specs, but a Google search reveals the information below

    - GPU (Nvidia GTX 660 Ti)

      - DP version ? (suspect it's DP v1.2)

      - 4096x2160 (including 3840x2160) at 60Hz supported over DisplayPort

    - GPU (Nvidia RTX 6000)

      - DP v1.4, supports 4 DP connector ports

      - 4096x2160 @ 120Hz, 5120x2880 @ 60Hz

    - Dell monitor U2718Q

      - DP v1.2

      - 3840 x 2160 at 60 Hz

  - The Nvidia RTX 6000 never working could be because it is too advanced: it may be exercising some newer DP feature that is not supported by the Dell monitor, which only supports DP v1.2.

    - For instance, DP v1.2 only supports up to HBR2 (5.4 Gb/s), so it doesn't make sense to configure the Intel FPGA DP IP for HBR3 (8.1 Gb/s).

    - I also don't think this Dell monitor supports adaptive sync. Please disable it in the GPU settings. In any case, the Intel FPGA DP IP doesn't support Nvidia G-Sync; we only support AMD adaptive sync, and it needs to be specially enabled in the Intel FPGA DP IP.

  - You are also stressing both the Nvidia 660 Ti GPU and the Dell monitor, since their maximum video resolution capability is also just 4Kp60.

  - Not sure whether you have tried the debug options below?

    - Change the Intel FPGA DP IP data rate setting from 8.1 Gb/s to 5.4 Gb/s

    - Try a different bits per colour (bpc) setting in the Intel FPGA DP IP. Also, do you know what the bpc setting is on the GPU?

  - Thanks for sharing the AUX log, but it is very hard to read without a decoder. Did you manage to dump the MSA log for the failure case as well?

Thanks.

Regards,

dlim

Hi dlim,

I am happy that you are back and the forum is back up and running.

When I compile the sample design in Quartus v20.2 it does not have this issue. My project started in v19.1 then migrated to v19.2 and then v20.2. The project never had any compilation issues remotely similar to what I am seeing in v20.2.

I tried enabling Adaptive Sync in the NIOS code, per the sample design user guide, but it had no effect.

I am not sure why the Dell monitor's version of DP plays a role in the FPGA's version of DP. The setup I test with is either:

- monitor<-GPU->FPGA

- GPU->FPGA->Monitor

The issue is between the GPU and the FPGA.
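The point that each DisplayPort hop is trained separately can be sketched in a few lines of Python. This is only an illustration: the maximum rates are the ones quoted in this thread (the GTX 660 Ti figure is only suspected), the helper name is made up, and real link training can still fall back to a lower rate than the one computed here.

```python
# Link rates in increasing order; each hop can use at most the lower of its two ends.
RATE_ORDER = ["RBR", "HBR", "HBR2", "HBR3"]

def negotiated_rate(source_max, sink_max):
    """Highest link rate that both ends of a single hop support."""
    idx = min(RATE_ORDER.index(source_max), RATE_ORDER.index(sink_max))
    return RATE_ORDER[idx]

# (source max, sink max) per hop, using the figures quoted in this thread.
hops = {
    "RTX 6000 -> FPGA RX": ("HBR3", "HBR3"),
    "GTX 660 Ti -> FPGA RX": ("HBR2", "HBR3"),
    "FPGA TX -> Dell U2718Q": ("HBR3", "HBR2"),
}

for name, (source_max, sink_max) in hops.items():
    print(f"{name}: {negotiated_rate(source_max, sink_max)}")
```

In this model the monitor's DP v1.2 limit only caps the FPGA TX to monitor hop; it does not enter the GPU to FPGA RX hop at all.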

I tried recompiling the design with only the lower rates enabled (2.7 Gbps and below), but I am not sure that I did it right. Is the only change I need to make the DP RX IP core drop-down in Platform Designer, or do I need to make RTL changes as well (param to bitec reconfig module, etc.)?

As for the MSA log file, are you referring to the print out on the screen when the 's' key is pressed?

Thanks,

Daniel

Hi Daniel,

I have sent you a private message.

I hope you can see it. Let me know if there is an issue viewing private messages, as the Intel forum system was just upgraded.

As for running at the lower data rate: you just need to set the Intel DP IP data rate to HBR2 (5.4G). No other RTL change is required.

As for the MSA screenshot: yes. Just type "s" and press the Enter key in the NIOS II shell terminal. I can see you already have it at the end of your previous working Nvidia 660 Ti Win 7 log file. I would like to see the MSA log for the failing case.

Thanks.

Regards,

dlim

Hi dlim,

I was able to see the private message. I will respond to that directly.

I will work on recompiling with the lower resolution and printing out the MSA tomorrow. From my memory, the VB-ID and MSA lock are both 0 and the BER is a high number for all of the lanes.

Thanks,

Daniel

I will test out the compilation with the lower link rate (HBR2).

When I try to dump out the MSA from the NIOS when plugged into Linux I sometimes run into a problem where the NIOS has become unresponsive. The DP Debug AUX trace stops printing mid line and does not respond to any commands.

I was able to print out this MSA dump:

------------------------------------------

------ TX Main stream attributes -----

------------------------------------------

--- Stream 0 ---

MSA lock : 0

VB-ID : 19 MISC0 : 20 MISC1 : 00

Mvid : 138E5 Nvid : 8000

Htotal : 0000 Vtotal : 0000

HSP : 0000 HSW : 0000

Hstart : 0000 Vstart : 0000

VSP : 0000 VSW : 0000

Hwidth : 0000 Vheight : 0000

CRC R : 0000 CRC G : 0000 CRC B : 0000

--- Stream 1 ---

MSA lock : 0

VB-ID : 00 MISC0 : 00 MISC1 : 00

Mvid : 0000 Nvid : 0000

Htotal : 0000 Vtotal : 0000

HSP : 0000 HSW : 0000

Hstart : 0000 Vstart : 0000

VSP : 0000 VSW : 0000

Hwidth : 0000 Vheight : 0000

CRC R : 0000 CRC G : 0000 CRC B : 0000

------------------------------------------

-------- TX Link configuration -------

------------------------------------------

Lane count : 0

Link rate : 0 Mbps

------------------------------------------

------ RX Main stream attributes -----

------------------------------------------

--- Stream 0 ---

VB-ID lock : 0 MSA lock : 0

VB-ID : 19 MISC0 : 00 MISC1 : 00

Mvid : 0100 Nvid : 0000

Htotal : 0000 Vtotal : 0000

HSP : 0000 HSW : 0000

Hstart : 0000 Vstart : 0000

VSP : 0000 VSW : 0000

Hwidth : 0000 Vheight : 0000

CRC R : 0000 CRC G : 0000 CRC B : 0000

--- Stream 1 ---

VB-ID lock : 0 MSA lock : 0

VB-ID : 00 MISC0 : 00 MISC1 : 00

Mvid : 0000 Nvid : 0000

Htotal : 0000 Vtotal : 0000

HSP : 0000 HSW : 0000

Hstart : 0000 Vstart : 0000

VSP : 0000 VSW : 0000

Hwidth : 0000 Vheight : 0000

CRC R : 0000 CRC G : 0000 CRC B : 0000

------------------------------------------

-------- RX Link configuration -------

------------------------------------------

CR Done: 0 SYM Done: 0

Lane count : 1

Link rate : 1620 Mbps

BER0 : 17F0 BER1 : 11A4

BER2 : 076C BER3 : 0DD8
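For comparing several dumps like the one above, a small Python sketch can pull out the RX link configuration fields. Nothing here comes from the Intel or Bitec software: the function names are made up, and the sketch assumes that CR Done and SYM Done are hexadecimal per-lane bitmasks and that the BER fields are hexadecimal counters.

```python
import re

# RX link configuration section copied from the dump above (blank lines dropped).
SAMPLE = """\
------------------------------------------
-------- RX Link configuration -------
------------------------------------------
CR Done: 0 SYM Done: 0
Lane count : 1
Link rate : 1620 Mbps
BER0 : 17F0 BER1 : 11A4
BER2 : 076C BER3 : 0DD8
"""

BANNER = re.compile(r"^-+\s*(.*?)\s*-+$")  # e.g. "---- RX Link configuration ----"
PAIR = re.compile(r"([A-Za-z][A-Za-z0-9\- ]*?)\s*:\s*([0-9A-Fa-f]+)")  # "key : value"

def parse_section(dump, title):
    """Collect the 'key : value' pairs that follow the banner named `title`."""
    fields, current = {}, None
    for line in dump.splitlines():
        line = line.strip()
        banner = BANNER.match(line)
        if banner:
            if banner.group(1):  # skip pure separator lines made only of dashes
                current = banner.group(1)
            continue
        if current == title:
            fields.update(PAIR.findall(line))
    return fields

def link_summary(fields):
    """Interpret the raw strings; hex bitmask and hex BER are assumptions."""
    lanes = int(fields["Lane count"])
    full_mask = (1 << lanes) - 1
    return {
        "lanes": lanes,
        "rate_mbps": int(fields["Link rate"]),
        "cr_done": int(fields["CR Done"], 16) == full_mask,
        "sym_done": int(fields["SYM Done"], 16) == full_mask,
        "ber": [int(fields[f"BER{i}"], 16) for i in range(4)],
    }

rx = link_summary(parse_section(SAMPLE, "RX Link configuration"))
print(rx)
```

For this dump the summary reports one lane at 1620 Mbps with neither clock recovery nor symbol lock done, which matches a link that is still at the lowest training setting rather than one carrying video.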

Hi dlim,

When I was trying out the different versions of Quartus (19.1, 19.2 and 20.2), I saw that 19.1 does not support the Bitec rev 11 FMC card but 19.2 does. However, the I2C setup commands for the retimer chip in the NIOS code are significantly different between 19.2 and 20.2. v20.2 also implements an MC_monitor and other new functions that v19.2 did not use. Which version of the I2C commands should I be using?

Thanks,

Daniel

Hi dlim,

I am working on the v20.2 compile. In the meantime, I turned off AUX debug printout in software to ensure that I would be able to get my MSA dump printouts (UART otherwise can get stuck).

I am attaching two traces. One has multiple MSA dumps for Win7 and one has multiple MSA dumps for Win10. Both show that the LT seems to finish and then lose lock (CR and SYM transition to F and then back to 0). I have hooked up some of my LEDs to the user_led_g[2:0] signals, which are used to show dp_rx_vid_locked and dp_rx_link_rate. I am seeing that the dp_rx_vid_locked LED is always on and the dp_rx_link_rate LEDs are constantly toggling.

Would there be any benefit in testing an RX only design? Do you know where in the RX PHY I should be placing signalTap?

Thanks,

Daniel
