The Hardware processor forum wouldn't take this question because I'm developing software. I didn't see an obvious "forum location" for this question so I'm starting here. Please redirect me if I'm in the wrong place.

I've found that the RDTSC instruction only ever returns even values (least significant bit is never set) on several of the chips I have access to. These include:

Intel(R) Xeon(R) CPU E5620 @ 2.40GHz

2.4 GHz 8-Core Intel Core i9

Intel(R) Xeon(R) CPU E3-1275 v6 @ 3.80GHz

Intel(R) Core(TM) i5-7500 CPU @ 3.40GHz

However, there are some chips that return both even and odd values (least significant bit is set):

Intel(R) Xeon(R) CPU E5-2690 0 @ 2.90GHz

Intel(R) Xeon(R) CPU E5-2667 0 @ 2.90GHz

I'm running on various versions of the Linux kernel, including 4.4.12, 4.4.111, and 5.4.72, as well as macOS Big Sur. I've tried different optimization options with the GNU C compiler to make sure there weren't any obvious optimizations that might affect this.

This occurs both when calling rdtsc() from inside the kernel (i.e. JitterEntropy timestamp collection) as well as the attached user-space program. No matter when/where I call RDTSC and no matter how many times I do so and no matter what I put in-between those calls -- they are always even values.

This behavior seems broken. Did something go wrong with all the various tweaks to TSC behavior that have occurred over the years? Or is this just an expected side-effect of various CPU instruction processing optimizations?

Hi Rerickson,

Thank you for posting in the Intel C++ compiler forum. We found your earlier query https://community.intel.com/t5/Processors/RDTSC-only-returns-evenly-numbered-values-on-some-chips/td-p/1230488 and the reproducer code attached to it. We ran it and were able to reproduce the issue of obtaining only even numbers. We are forwarding this issue to the relevant internal team, who can help you out.

Regards

Gopika Ajit

The implementation of the RDTSC instruction is probably a lot more complex than one might initially expect. The TSC is required to increment at the nominal frequency, but it is often the case that there are no functional units anywhere on the chip running at that frequency -- all can be running at different multipliers off of the 100 MHz reference clock.

So an implementation may start with the number of "reference clocks" (which must be obtained from an uncore unit that does not go to sleep), multiply that value by the base clock multiplier, and then interpolate between the current value and the next value. The interpolation must be reasonably fast and must guarantee monotonicity. The RDTSC instruction is also microcoded, which may introduce additional idiosyncrasies related to timing.

An implementation might also start with an uncore counter that is scaled relative to the 100 MHz reference clock, such as the PCU clock which increments at a fixed 1 GHz frequency. This may not be any easier -- it just changes "multiply by the (integer) base ratio and interpolate" into a multiplication by a non-integer ratio.

Overall, it is not surprising to me that an implementation might never return odd values. Or the details might change with different uncore frequencies, or different core frequencies. Looking at a Xeon Platinum 8160 (24-core, 2.1 GHz nominal), I don't see any indication of odd-parity results across a range of frequencies, but I have not tried modifying the uncore frequency yet....

Thanks for the quick+detailed response.

Is it plausible that the "algorithm" is identical across all these different CPUs? Perhaps there needs to be a different algorithm for those chips that handle clock frequency decisions differently?

My understanding is that the TSC has sub-nanosecond "resolution". If that's correct, it seems to me that the algorithm should be able to round to a more accurate nanosecond value than it does. Or does the complexity of clock frequency behavior on some CPUs make a better algorithm too complex/slow to be practical?

I definitely understand that the algorithm must be very fast and that this could potentially impact "accuracy". It would be interesting to understand why this doesn't work quite as well on some CPUs and whether anyone has looked at tweaking the algorithm to make it more "accurate" (this feels like one of those "the conditions have changed but we forgot to change our algorithm" situations).

Or am I not understanding the issues involved here correctly? (I am *not* a hardware guy.)

It is hard to estimate how much work an Intel processor does when executing an RDTSC or RDTSCP instruction.

On my Skylake Xeons (Platinum 8160), the "repeat rate" for a loop repeatedly executing RDTSCP and storing the result does not vary with uncore frequency (just tested). I am a little surprised by this, but I have gotten used to surprises....

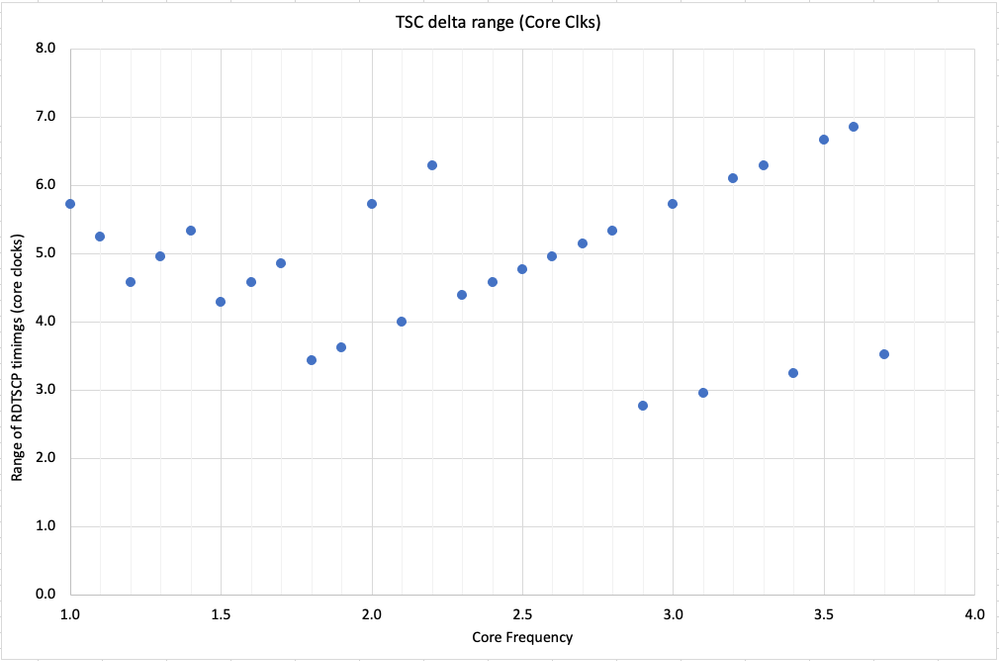

The "repeat rate" can be measured by the result of the TSC operation itself -- in units of TSC cycles. Over the range of 1.0 GHz to 3.7 GHz core frequencies, the difference in consecutive RDTSCP values varies from ~80 TSC cycles (at 1.0 GHz) to ~20 TSC cycles (at 3.5+ GHz).

Re-scaling those results to core clocks shows that the *average* difference in consecutive TSC values corresponds to about 37.5 core clocks (at whatever frequency the core is running).

If I look at the *range* of differences in consecutive TSC values and convert those to equivalent core clocks, I see that while the *average* is constant, the *range* varies systematically with frequency with an intriguing pattern.

While I don't have an exact formulation yet, this pattern is very similar to what you see with something like a Least Common Multiple between the Core Frequency Ratio (10 to 27) and the TSC Frequency Ratio of 21. This is the kind of pattern that one often sees with timing of iterative algorithms (like division) for integer values.

Maybe not helpful, but hopefully some indication that it may be challenging to figure out what is going on under the covers....

John,

Interesting chart (12/1/2020).

While variations appear in the measurements taken internally (in other words, by a HW thread), have you been able to determine whether the actual TSC is relatively stable? In other words, does it produce the same (within a very small delta) number of ticks per second?

What I mean by this is that it is not unexpected for a HW thread (in a multi-core non-embedded system) to experience some moments of interference. There are other things happening on the system: thermal management, cache coordination/arbitration (note, the L1 icache can get evicted even in a tight register-only loop).

Jim Dempsey

"Stability" in the TSC might mean a couple of different things?

Over long time scales (seconds to minutes to days) my understanding is that the operating system is responsible for monitoring whether the TSC is incrementing at a constant rate with respect to an independent real-time clock, and adjusting the scaling coefficient as necessary.

My interpretation of my TSC measurements is based on the assumption that the TSC is based on a hardware clock that is independent of the core, but also requires a bunch of microcoded Uops in the core domain that have a critical path averaging 37.5 core cycles. I would guess that the microcoded implementation of the RDTSC(P) instruction is not subject to external interrupts, but that it is subject to performance interference with other instructions that use the same functional units.

Differences between the return values from consecutive RDTSC(P) instructions are certainly subject to many types of interference. The really large differences (>1000 cycles) are easily ascribed to OS interference (which can be confirmed by using the performance counters to track interrupts and kernel cycles or instructions) or to core frequency changes (which can be confirmed using performance counters that show the elapsed "Reference Cycles Not Halted" is significantly lower than the elapsed TSC cycles). There are occasional delays of intermediate size that don't correspond to any mechanisms that I understand -- too long to be the expected sorts of HW delays, but much too short to be due to interrupts or frequency changes. Understanding these is complicated by the need to interact with the memory hierarchy in order to save the TSC results for post-processing.

I very much appreciate all the replies on how this works and why the output won't necessarily conform to what might be expected.

I'll consider the matter closed.

Regards, Rodger

Hi,

Thank you for posting in the Intel C++ compiler forum, and sorry for the delayed response.

We have been able to reproduce the issue on 3 out of 8 different machines.

Regarding the question from the previous query: there is no dependency on the optimization level, kernel version, etc.

I have had a discussion with an Intel CPU architect and microcoders on this topic and got some information from them:

There is no such guarantee that "RDTSC can be used to gather entropy by observing final few bits of returned value". We only guarantee that the RDTSC returns monotonically increasing values. In other words, it is OK that the TSC bit[0] is always 1 or always 0 as long as the CPU guarantees monotonic forward progress.

Regards,

Khalik.

As stated earlier, the answer is:

There is no such guarantee that "RDTSC can be used to gather entropy by observing final few bits of returned value". We only guarantee that the RDTSC returns monotonically increasing values. In other words, it is OK that the TSC bit[0] is always 1 or always 0 as long as the CPU guarantees monotonic forward progress.

This issue has been resolved and we will no longer respond to this thread. If you require additional assistance from Intel, please start a new thread.

Any further interaction in this thread will be considered community only.

Regards,

Khalik.
