
I have a rollup / cumulative summation loop which I am vectorizing.

    // T can be either float or double.
    // SFX is a macro that just versions the function name with _d or _f
    // for float/double types of images.
    VL_EXPORT void
    VL_XCAT(vl_imconvcoltri_integrate_bwd_, SFX)
    (T* buffer, vl_index y, T* imagei, vl_size imageStride, vl_size imageHeight)
    {
      for (; y >= 0 ; --y) {
        imagei -= imageStride ;
        buffer[y] = buffer[y + 1] + *imagei ;
      }
    }
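For readers without the VL_XCAT/SFX macros at hand, the loop can be sketched for the float case as below. This is a minimal sketch under assumptions: the name `integrate_bwd_f`, the use of `ptrdiff_t`/`size_t` in place of `vl_index`/`vl_size`, and the dropped `imageHeight` parameter (it is not used inside the loop) are illustrative choices, not the library's code. It shows the two properties that matter for vectorization: each read is `imageStride` elements away from the previous one, and each `buffer[y]` depends on `buffer[y + 1]`.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical float-only stand-in for the macro-generated function.
 * buffer must hold at least y + 2 elements. imagei points one row below
 * the first row to be read, so it is decremented before each load. */
static void integrate_bwd_f(float *buffer, ptrdiff_t y,
                            const float *imagei, size_t imageStride)
{
    assert(buffer != NULL && imagei != NULL);
    for (; y >= 0; --y) {
        imagei -= imageStride;                /* strided read: one row up   */
        buffer[y] = buffer[y + 1] + *imagei;  /* depends on previous result */
    }
}
```

Calling it with `y = 3` and `imagei` pointing one row below the last row to read fills `buffer[3]` down to `buffer[0]` with the running sums, accumulated from the bottom row upward.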

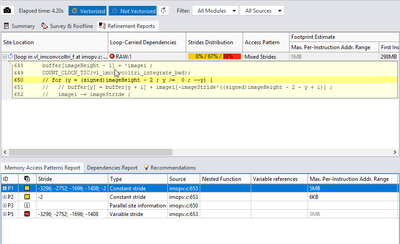

imageStride does not change within the loop. In the Intel Advisor Refinement Report you can see the different stride values for the serial code, as shown below (under the Stride column):

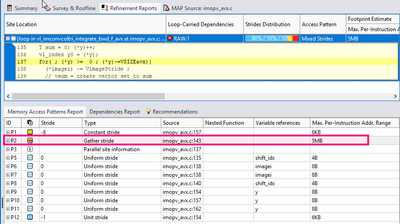

After vectorization the refinement report looks as below:

For one thing, I used gather intrinsics (_mm_i32gather_p[ds] and _mm256_i32gather_p[ds]) to load the image data into a vector data type.

Given the stride values (imageStride values) from the Refinement Report for the serial code, I am wondering whether there is any faster or better replacement for the gather instructions on the SSE, AVX and AVX512 architectures.

Please let me know your valuable comments.

Regards




Hi,

Thanks for reaching out to us.

We are working on it and will get back to you soon.

Thanks & Regards

Shivani


Hi,

Could you please provide the versions of Intel Advisor and the compiler that you have been using?

Could you also please provide the compilation steps and a sample reproducer so that we can investigate your issue further?

Thanks & Regards

Shivani


Intel(R) C++ Intel(R) 64 Compiler for applications running on Intel(R) 64, Version 19.1.2.254 Build 20200623

Intel Advisor 2021.1 Copyright © 2009-2020 Intel Corporation. All rights reserved. (Build no. 607872)


Gather instructions are only available with AVX2 and AVX512 (and KNC).

For gather to work efficiently, do not update the index vector on each iteration. Instead, keep the indexes constant and advance the base address to the first element of the next group of strided loads (this conserves a vector register).

Jim Dempsey
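The advice above (constant index vector, moving base address) can be sketched with the AVX2 gather intrinsic as follows. This is an illustration under assumptions, not code from the thread: `gather_column` is a hypothetical helper that copies `count` floats spaced `stride` elements apart into a contiguous buffer, and the intrinsic path is only compiled when the compiler defines `__AVX2__` (otherwise the scalar loop does all the work). It also assumes `7 * stride` fits in a 32-bit index.

```c
#include <assert.h>
#include <stddef.h>
#if defined(__AVX2__)
#include <immintrin.h>
#endif

/* Hypothetical helper: dst[i] = src[i * stride] for i in [0, count). */
static void gather_column(float *dst, const float *src,
                          int stride, size_t count)
{
    assert(dst != NULL && src != NULL && stride > 0);
    size_t i = 0;
#if defined(__AVX2__)
    /* Built once: element offsets 0, s, 2s, ..., 7s. */
    const __m256i idx = _mm256_mullo_epi32(
        _mm256_setr_epi32(0, 1, 2, 3, 4, 5, 6, 7),
        _mm256_set1_epi32(stride));
    for (; i + 8 <= count; i += 8) {
        /* The index vector never changes; only the base address moves. */
        const float *base = src + i * (size_t)stride;
        _mm256_storeu_ps(dst + i, _mm256_i32gather_ps(base, idx, 4));
    }
#endif
    /* Scalar remainder, or the whole loop when AVX2 is not enabled. */
    for (; i < count; ++i)
        dst[i] = src[i * (size_t)stride];
}
```

Whether the gather path beats eight scalar loads depends on the processor generation, so it is worth measuring both variants on the target machine.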


Thanks Jim

I was curious how icc handles that, so I prepared a simple array-add example, shown below:

    float add(float c[], float a[], float b[], int numel){
    #pragma ivdep
    #pragma vector always
      for(int i = 0; i < numel; i+=373 ){
        c[i] = a[i] + b[i];
      }
      float d = a[0] + b[0];
      return d;
    }

I forced the compiler to vectorize it:

C:\tmp\gathertest>make

icl -arch:avx -Fo:test_gather.obj -Fa:test_gather.asm -c test_gather.cpp

Intel(R) C++ Intel(R) 64 Compiler for applications running on Intel(R) 64, Version 19.1.2.254 Build 20200623

Copyright (C) 1985-2020 Intel Corporation. All rights reserved.

test_gather.cpp

C:\tmp\gathertest>

Then I checked the generated asm file:

; mark_description "Intel(R) C++ Intel(R) 64 Compiler for applications running on Intel(R) 64, Version 19.1.2.254 Build 20200623";

; mark_description "";

; mark_description "/arch:avx /Fo:test_gather.obj /Fa:test_gather.asm /c";

OPTION DOTNAME

_TEXT SEGMENT 'CODE'

; COMDAT ?add@@YAMQEAM00H@Z

TXTST0:

; -- Begin ?add@@YAMQEAM00H@Z

;?add@@YAMQEAM00H@Z ENDS

_TEXT ENDS

_TEXT SEGMENT 'CODE'

; COMDAT ?add@@YAMQEAM00H@Z

; mark_begin;

ALIGN 16

PUBLIC ?add@@YAMQEAM00H@Z

; --- add(float *, float *, float *, int)

?add@@YAMQEAM00H@Z PROC

; parameter 1: rcx

; parameter 2: rdx

; parameter 3: r8

; parameter 4: r9d

.B1.1:: ; Preds .B1.0

; Execution count [1.00e+00]

L1::

;2.54

mov r10, r8 ;2.54

mov r8, rdx ;2.54

test r9d, r9d ;5.24

jle .B1.10 ; Prob 50% ;5.24

; LOE rcx rbx rbp rsi rdi r8 r10 r12 r14 r15 r9d xmm6 xmm7 xmm8 xmm9 xmm10 xmm11 xmm12 xmm13 xmm14 xmm15

.B1.2:: ; Preds .B1.1

; Execution count [9.00e-01]

add r9d, 372 ;2.54

mov eax, 1473876177 ;2.54

imul r9d ;2.54

sar r9d, 31 ;2.54

sar edx, 7 ;2.54

sub edx, r9d ;2.54

movsxd rax, edx ;5.5

cmp rax, 16 ;5.5

jl .B1.11 ; Prob 10% ;5.5

; LOE rax rcx rbx rbp rsi rdi r8 r10 r12 r14 r15 edx xmm6 xmm7 xmm8 xmm9 xmm10 xmm11 xmm12 xmm13 xmm14 xmm15

.B1.3:: ; Preds .B1.2

; Execution count [9.00e-01]

and edx, -16 ;5.5

xor r11d, r11d ;5.5

movsxd rdx, edx ;5.5

xor r9d, r9d ;5.5

; LOE rax rdx rcx rbx rbp rsi rdi r8 r9 r10 r11 r12 r14 r15 xmm6 xmm7 xmm8 xmm9 xmm10 xmm11 xmm12 xmm13 xmm14 xmm15

.B1.4:: ; Preds .B1.4 .B1.3

; Execution count [5.00e+00]

vmovss xmm4, DWORD PTR [1492+r9+r8] ;6.16

add r11, 16 ;5.5

vmovss xmm5, DWORD PTR [r9+r8] ;6.16

vmovss xmm3, DWORD PTR [7460+r9+r8] ;6.16

vmovss xmm2, DWORD PTR [5968+r9+r8] ;6.16

vinsertps xmm0, xmm4, DWORD PTR [4476+r9+r8], 16 ;6.16

vinsertps xmm1, xmm5, DWORD PTR [2984+r9+r8], 16 ;6.16

vinsertps xmm5, xmm3, DWORD PTR [10444+r9+r8], 16 ;6.16

vinsertps xmm4, xmm2, DWORD PTR [8952+r9+r8], 16 ;6.16

vunpcklps xmm1, xmm1, xmm0 ;6.16

vunpcklps xmm0, xmm4, xmm5 ;6.16

vmovss xmm4, DWORD PTR [r9+r10] ;6.23

vmovss xmm2, DWORD PTR [1492+r9+r10] ;6.23

vinsertps xmm5, xmm4, DWORD PTR [2984+r9+r10], 16 ;6.23

vmovss xmm4, DWORD PTR [5968+r9+r10] ;6.23

vinsertf128 ymm3, ymm1, xmm0, 1 ;6.16

vinsertps xmm1, xmm2, DWORD PTR [4476+r9+r10], 16 ;6.23

vmovss xmm0, DWORD PTR [7460+r9+r10] ;6.23

vunpcklps xmm2, xmm5, xmm1 ;6.23

vinsertps xmm1, xmm0, DWORD PTR [10444+r9+r10], 16 ;6.23

vinsertps xmm5, xmm4, DWORD PTR [8952+r9+r10], 16 ;6.23

vunpcklps xmm0, xmm5, xmm1 ;6.23

vinsertf128 ymm1, ymm2, xmm0, 1 ;6.23

vaddps ymm3, ymm3, ymm1 ;6.23

vextractf128 xmm1, ymm3, 1 ;6.9

vmovss DWORD PTR [r9+rcx], xmm3 ;6.9

vmovss DWORD PTR [5968+r9+rcx], xmm1 ;6.9

vextractps DWORD PTR [1492+r9+rcx], xmm3, 1 ;6.9

vextractps DWORD PTR [2984+r9+rcx], xmm3, 2 ;6.9

vextractps DWORD PTR [4476+r9+rcx], xmm3, 3 ;6.9

vextractps DWORD PTR [7460+r9+rcx], xmm1, 1 ;6.9

vextractps DWORD PTR [8952+r9+rcx], xmm1, 2 ;6.9

vextractps DWORD PTR [10444+r9+rcx], xmm1, 3 ;6.9

vmovss xmm0, DWORD PTR [13428+r9+r8] ;6.16

vinsertps xmm5, xmm0, DWORD PTR [16412+r9+r8], 16 ;6.16

vmovss xmm2, DWORD PTR [11936+r9+r8] ;6.16

vmovss xmm1, DWORD PTR [19396+r9+r8] ;6.16

vmovss xmm0, DWORD PTR [17904+r9+r8] ;6.16

vinsertps xmm4, xmm2, DWORD PTR [14920+r9+r8], 16 ;6.16

vinsertps xmm3, xmm1, DWORD PTR [22380+r9+r8], 16 ;6.16

vinsertps xmm2, xmm0, DWORD PTR [20888+r9+r8], 16 ;6.16

vunpcklps xmm4, xmm4, xmm5 ;6.16

vunpcklps xmm5, xmm2, xmm3 ;6.16

vmovss xmm2, DWORD PTR [11936+r9+r10] ;6.23

vmovss xmm0, DWORD PTR [13428+r9+r10] ;6.23

vinsertps xmm3, xmm2, DWORD PTR [14920+r9+r10], 16 ;6.23

vmovss xmm2, DWORD PTR [17904+r9+r10] ;6.23

vinsertf128 ymm1, ymm4, xmm5, 1 ;6.16

vinsertps xmm4, xmm0, DWORD PTR [16412+r9+r10], 16 ;6.23

vmovss xmm5, DWORD PTR [19396+r9+r10] ;6.23

vunpcklps xmm0, xmm3, xmm4 ;6.23

vinsertps xmm4, xmm5, DWORD PTR [22380+r9+r10], 16 ;6.23

vinsertps xmm3, xmm2, DWORD PTR [20888+r9+r10], 16 ;6.23

vunpcklps xmm5, xmm3, xmm4 ;6.23

vinsertf128 ymm0, ymm0, xmm5, 1 ;6.23

vaddps ymm1, ymm1, ymm0 ;6.23

vextractf128 xmm0, ymm1, 1 ;6.9

vmovss DWORD PTR [11936+r9+rcx], xmm1 ;6.9

vmovss DWORD PTR [17904+r9+rcx], xmm0 ;6.9

vextractps DWORD PTR [13428+r9+rcx], xmm1, 1 ;6.9

vextractps DWORD PTR [14920+r9+rcx], xmm1, 2 ;6.9

vextractps DWORD PTR [16412+r9+rcx], xmm1, 3 ;6.9

vextractps DWORD PTR [19396+r9+rcx], xmm0, 1 ;6.9

vextractps DWORD PTR [20888+r9+rcx], xmm0, 2 ;6.9

vextractps DWORD PTR [22380+r9+rcx], xmm0, 3 ;6.9

add r9, 23872 ;5.5

cmp r11, rdx ;5.5

jb .B1.4 ; Prob 82% ;5.5

; LOE rax rdx rcx rbx rbp rsi rdi r8 r9 r10 r11 r12 r14 r15 xmm6 xmm7 xmm8 xmm9 xmm10 xmm11 xmm12 xmm13 xmm14 xmm15

.B1.6:: ; Preds .B1.4 .B1.11

; Execution count [1.00e+00]

imul r9, rdx, 1492 ;5.5

cmp rdx, rax ;5.5

jae .B1.10 ; Prob 9% ;5.5

; LOE rax rdx rcx rbx rbp rsi rdi r8 r9 r10 r12 r14 r15 xmm6 xmm7 xmm8 xmm9 xmm10 xmm11 xmm12 xmm13 xmm14 xmm15

.B1.8:: ; Preds .B1.6 .B1.8

; Execution count [5.00e+00]

vmovss xmm0, DWORD PTR [r9+r8] ;6.16

inc rdx ;5.5

vaddss xmm1, xmm0, DWORD PTR [r9+r10] ;6.23

vmovss DWORD PTR [r9+rcx], xmm1 ;6.9

add r9, 1492 ;5.5

cmp rdx, rax ;5.5

jb .B1.8 ; Prob 82% ;5.5

; LOE rax rdx rcx rbx rbp rsi rdi r8 r9 r10 r12 r14 r15 xmm6 xmm7 xmm8 xmm9 xmm10 xmm11 xmm12 xmm13 xmm14 xmm15

.B1.10:: ; Preds .B1.8 .B1.1 .B1.6

; Execution count [1.00e+00]

vmovss xmm0, DWORD PTR [r8] ;8.15

vaddss xmm0, xmm0, DWORD PTR [r10] ;8.22

vzeroupper ;9.12

ret ;9.12

; LOE

.B1.11:: ; Preds .B1.2

; Execution count [9.00e-02]: Infreq

xor edx, edx ;5.5

jmp .B1.6 ; Prob 100% ;5.5

ALIGN 16

; LOE rax rdx rcx rbx rbp rsi rdi r8 r10 r12 r14 r15 xmm6 xmm7 xmm8 xmm9 xmm10 xmm11 xmm12 xmm13 xmm14 xmm15

.B1.12::

; mark_end;

?add@@YAMQEAM00H@Z ENDP

;?add@@YAMQEAM00H@Z ENDS

_TEXT ENDS

_DATA SEGMENT 'DATA'

_DATA ENDS

; -- End ?add@@YAMQEAM00H@Z

_DATA SEGMENT 'DATA'

_DATA ENDS

EXTRN __ImageBase:PROC

EXTRN _fltused:BYTE

INCLUDELIB <libmmt>

INCLUDELIB <LIBCMT>

INCLUDELIB <libirc>

INCLUDELIB <svml_dispmt>

INCLUDELIB <OLDNAMES>

INCLUDELIB <libdecimal>

END

I see only the following (though I am not an assembly expert):

- vextractps

- vinsertps

- vunpcklps

Do you think looking at the compiler's output this way is a good approach to finding better intrinsics? Or would I be better off learning assembly language from a reference? (I guess I am just trying to avoid asking too many stupid questions on the Intel forum.)

Please let me know. Thank you, I appreciate it.


The AVX instruction set does not have scatter/gather instructions. AVX2 adds gather instructions (scatter first appears with AVX512). The .B1.4 loop is the "vector always" implementation of the loop, which was unrolled 2x (count the number of vaddps instructions). IOW, "vector always" instructs the compiler to perform (if possible) the computation portion via vector instructions. In this case the computation is a single ADD.

The loop .B1.8 is the implementation of the for loop as scalar. This was inserted here to take care of potential non-vector-wide remainder. The scalar loop was not unrolled 2x as it likely would not be productive to do so.
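The split between the vector loop and the scalar remainder can be written out as plain arithmetic. The sketch below is my reading of blocks .B1.1 to .B1.3 in the AVX listing, not compiler documentation: `split_trips` and `loop_split` are hypothetical names, and the instruction references in the comments are approximate.

```c
#include <assert.h>

/* Hypothetical helper mirroring how the loop
 * `for (i = 0; i < numel; i += step)` is divided into a vector part and
 * a scalar remainder. lanes_per_pass is the number of loop iterations
 * handled by one pass of the (unrolled) vector loop; it must be a power
 * of two. */
typedef struct {
    long trips;      /* total iterations of the original loop  */
    long vec_trips;  /* iterations covered by the vector loop  */
    long rem_trips;  /* iterations left to the scalar loop     */
} loop_split;

static loop_split split_trips(long numel, long step, long lanes_per_pass)
{
    assert(step > 0 && lanes_per_pass > 0);
    assert((lanes_per_pass & (lanes_per_pass - 1)) == 0);
    loop_split s = {0, 0, 0};
    if (numel <= 0)                        /* test r9d, r9d / jle .B1.10 */
        return s;
    s.trips = (numel + step - 1) / step;   /* add 372, then divide by 373 */
    s.vec_trips = s.trips & ~(lanes_per_pass - 1);   /* and edx, -16 */
    s.rem_trips = s.trips - s.vec_trips;   /* handled by loop .B1.8 */
    return s;
}
```

For the AVX listing, one pass of .B1.4 covers 16 iterations (two 8-float vectors), so `split_trips(10000, 373, 16)` gives 27 trips in total: 16 in the vector loop and 11 in the scalar remainder.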

The following is the AVX2 implementation (note, while it is different, the compiler did not make use of Gather and Scatter):

.B1.4:: ; Preds .B1.4 .B1.3

; Execution count [5.00e+00]

vmovss xmm0, DWORD PTR [1492+rdx+r8] ;8.16

lea rbx, QWORD PTR [rdx+rcx] ;8.9

vmovss xmm1, DWORD PTR [rdx+r8] ;8.16

add rax, 8 ;7.5

vmovss xmm4, DWORD PTR [1492+rdx+r10] ;8.23

vmovss xmm5, DWORD PTR [rdx+r10] ;8.23

vinsertps xmm3, xmm0, DWORD PTR [4476+rdx+r8], 16 ;8.16

vinsertps xmm2, xmm1, DWORD PTR [2984+rdx+r8], 16 ;8.16

vinsertps xmm4, xmm4, DWORD PTR [4476+rdx+r10], 16 ;8.23

vinsertps xmm5, xmm5, DWORD PTR [2984+rdx+r10], 16 ;8.23

vunpcklps xmm0, xmm2, xmm3 ;8.16

vunpcklps xmm1, xmm5, xmm4 ;8.23

vaddps xmm2, xmm0, xmm1 ;8.23

vmovss DWORD PTR [rbx], xmm2 ;8.9

vextractps DWORD PTR [1492+rbx], xmm2, 1 ;8.9

vextractps DWORD PTR [2984+rbx], xmm2, 2 ;8.9

vextractps DWORD PTR [4476+rbx], xmm2, 3 ;8.9

vmovss xmm3, DWORD PTR [7460+rdx+r8] ;8.16

vinsertps xmm2, xmm3, DWORD PTR [10444+rdx+r8], 16 ;8.16

vmovss xmm0, DWORD PTR [5968+rdx+r8] ;8.16

vmovss xmm3, DWORD PTR [7460+rdx+r10] ;8.23

vmovss xmm4, DWORD PTR [5968+rdx+r10] ;8.23

vinsertps xmm1, xmm0, DWORD PTR [8952+rdx+r8], 16 ;8.16

vinsertps xmm3, xmm3, DWORD PTR [10444+rdx+r10], 16 ;8.23

vinsertps xmm5, xmm4, DWORD PTR [8952+rdx+r10], 16 ;8.23

add rdx, 11936 ;7.5

vunpcklps xmm0, xmm1, xmm2 ;8.16

cmp rax, r11 ;7.5

vunpcklps xmm1, xmm5, xmm3 ;8.23

vaddps xmm2, xmm0, xmm1 ;8.23

vmovss DWORD PTR [5968+rbx], xmm2 ;8.9

vextractps DWORD PTR [7460+rbx], xmm2, 1 ;8.9

vextractps DWORD PTR [8952+rbx], xmm2, 2 ;8.9

vextractps DWORD PTR [10444+rbx], xmm2, 3 ;8.9

jb .B1.4 ; Prob 82% ;7.5

I am not sure why the compiler chose not to use gather for AVX2; however, the instruction sequence is different from the AVX code you presented.

And next, AVX512 (using Gather and Scatter):

.B1.4:: ; Preds .B1.4 .B1.3

; Execution count [5.00e+00]

vpxord zmm1, zmm1, zmm1 ;8.16 c1

kxnorw k1, k0, k0 ;8.16 c1

lea rsi, QWORD PTR [rbx+r11] ;8.16 c1

vpxord zmm2, zmm2, zmm2 ;8.23 c1

kxnorw k2, k0, k0 ;8.23 c1

kxnorw k3, k0, k0 ;8.9 c3

vpxord zmm4, zmm4, zmm4 ;8.16 c3

kxnorw k4, k0, k0 ;8.16 c3

vpxord zmm5, zmm5, zmm5 ;8.23 c3

vgatherdps zmm1{k1}, DWORD PTR [rsi+zmm0*4] ;8.16 c3

lea rsi, QWORD PTR [r8+r11] ;8.23 c3

kxnorw k5, k0, k0 ;8.23 c5

add r9, 32 ;7.5 c5

vgatherdps zmm2{k2}, DWORD PTR [rsi+zmm0*4] ;8.23 c5

kxnorw k6, k0, k0 ;8.9 c7

lea rsi, QWORD PTR [rcx+r11] ;8.9 c9

vaddps zmm3, zmm1, zmm2 ;8.23 c11

vscatterdps DWORD PTR [rsi+zmm0*4]{k3}, zmm3 ;8.9 c17 stall 2

lea rsi, QWORD PTR [23872+r11+rbx] ;8.16 c17

vgatherdps zmm4{k4}, DWORD PTR [rsi+zmm0*4] ;8.16 c19

lea rsi, QWORD PTR [23872+r11+r8] ;8.16 c23 stall 1

vgatherdps zmm5{k5}, DWORD PTR [rsi+zmm0*4] ;8.23 c25

lea rsi, QWORD PTR [23872+r11+rcx] ;8.16 c25

add r11, 47744 ;7.5 c25

vaddps zmm16, zmm4, zmm5 ;8.23 c31 stall 2

vscatterdps DWORD PTR [rsi+zmm0*4]{k6}, zmm16 ;8.9 c37 stall 2

cmp r9, r10 ;7.5 c37

jb .B1.4 ; Prob 82% ;7.5 c39

Jim Dempsey


By the way.

Prior to the gather/scatter loop zmm0 was loaded with the offsets:

vmovdqu32 zmm0, ZMMWORD PTR [_2il0floatpacket.0] ;

Where:

_2il0floatpacket.0 DD 000000000H,000000175H,0000002eaH,00000045fH,0000005d4H,000000749H,0000008beH,000000a33H,000000ba8H,000000d1dH,000000e92H,000001007H,00000117cH,0000012f1H,000001466H,0000015dbH

The AVX2 build could have generated similar gather code using 256-bit vectors.

Jim Dempsey
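The constants in that packet are simply multiples of the loop step, 373 (0x175), expressed in elements. A small sketch that rebuilds them is shown below; `make_gather_indices` is a hypothetical helper written for illustration. Because the gather and scatter instructions scale each index by 4 (the size of a float), neighbouring lanes end up 373 * 4 = 1492 bytes apart, which matches the displacements in the AVX listing, and 16 lanes span 16 * 1492 = 23872 bytes, which matches the `lea` displacement in the AVX512 loop.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: fills idx[0..lanes-1] with the element offsets
 * 0, stride, 2*stride, ... that a gather/scatter index vector holds. */
static void make_gather_indices(int32_t *idx, int lanes, int32_t stride)
{
    assert(idx != 0 && lanes > 0);
    for (int lane = 0; lane < lanes; ++lane)
        idx[lane] = (int32_t)lane * stride;
}
```

With `lanes = 16` and `stride = 373` the first entries are 0x000, 0x175 and 0x2ea, and the last is 0x15db, the same values as in the listing.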


Also (if Intel is watching this thread) the above AVX512 loop is unnecessarily creating a 1's mask (kxnorw's) which would only be required (to one mask) on the last (remainder) iteration.

IOW, two additional optimizations:

1) AVX2 code generation to make use of gather

2) better optimization of mask registers

Jim Dempsey


Thanks so much for the valuable details. I much appreciate your comments.

Interestingly enough, -arch:core-avx2 did not generate any gather/scatter either!

If I use both -arch:skylake-avx512 and -arch:core-avx2, the earlier option is overridden by the later one (very interesting; perhaps there is a rationale behind it). However, if I use only -arch:skylake-avx512, it does generate the gather/scatter instructions (below).

C:\temp\simdtests\gathertest>make

icl -arch:skylake-avx512 -arch:core-avx2 -Fo:test_gather.obj -Fa:test_gather.asm -c test_gather.cpp

Intel(R) C++ Intel(R) 64 Compiler for applications running on Intel(R) 64, Version 19.1.2.254 Build 20200623

Copyright (C) 1985-2020 Intel Corporation. All rights reserved.

icl: command line warning #10121: overriding '/archskylake-avx512' with '/archcore-avx2'

test_gather.cpp

C:\temp\simdtests\gathertest>make

icl -arch:skylake-avx512 -Fo:test_gather.obj -Fa:test_gather.asm -c test_gather.cpp

Intel(R) C++ Intel(R) 64 Compiler for applications running on Intel(R) 64, Version 19.1.2.254 Build 20200623

Copyright (C) 1985-2020 Intel Corporation. All rights reserved.

test_gather.cpp

C:\temp\simdtests\gathertest>

.B1.4:: ; Preds .B1.4 .B1.3

; Execution count [5.00e+00]

vpcmpeqb k1, xmm0, xmm0 ;6.16

lea rsi, QWORD PTR [rbx+r11] ;6.16

vpcmpeqb k2, xmm0, xmm0 ;6.16

vpcmpeqb k3, xmm0, xmm0 ;6.23

vpcmpeqb k4, xmm0, xmm0 ;6.23

vpcmpeqb k5, xmm0, xmm0 ;6.9

vpcmpeqb k6, xmm0, xmm0 ;6.9

vxorps ymm2, ymm2, ymm2 ;6.16

add r9, 16 ;5.5

vxorps ymm4, ymm4, ymm4 ;6.16

vxorps ymm3, ymm3, ymm3 ;6.23

vxorps ymm5, ymm5, ymm5 ;6.23

vgatherdps ymm2{k1}, DWORD PTR [rsi+ymm1*4] ;6.16

vgatherdps ymm4{k2}, DWORD PTR [11936+rsi+ymm1*4] ;6.16

lea rsi, QWORD PTR [r8+r11] ;6.23

vgatherdps ymm5{k4}, DWORD PTR [11936+rsi+ymm1*4] ;6.23

vgatherdps ymm3{k3}, DWORD PTR [rsi+ymm1*4] ;6.23

vaddps ymm17, ymm4, ymm5 ;6.23

vaddps ymm16, ymm2, ymm3 ;6.23

lea rsi, QWORD PTR [rcx+r11] ;6.9

add r11, 23872 ;5.5

vscatterdps DWORD PTR [rsi+ymm0*4]{k5}, ymm16 ;6.9

vscatterdps DWORD PTR [11936+rsi+ymm0*4]{k6}, ymm17 ;6.9

cmp r9, r10 ;5.5

jb .B1.4 ; Prob 82% ;5.5

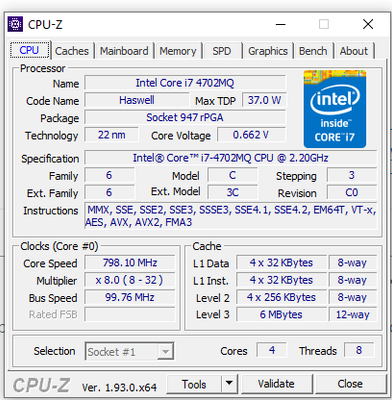

I am wondering which compiler switch I should use when my processor is not a Skylake but does support AVX512.

Thanks

Mike


Have you considered using the intrinsic functions?

It is a little more work, but can yield productive results.

Be careful to stick with the instruction set(s) supported by your machine(s).

Jim Dempsey


Intrinsic functions make more sense to use. I am just trying to cheat and find the right functions by looking at the compiler's instruction selection in the assembly, to make sure I am using the right intrinsics.

Please let me know if there is a book or article for that matter.

Regards


In the Intel C++ documentation you might find the following informative:

Processor Targeting (intel.com)

cpu_dispatch, cpu_specific (intel.com)

_allow_cpu_features (intel.com)

_may_i_use_cpu_feature (intel.com)

and the Intrinsics guide.

https://software.intel.com/sites/landingpage/IntrinsicsGuide/#=undefined

In the Intrinsics Guide, you can enter "gather_pd" into the search box, then individually select (check) the Technologies check boxes:

SSE4.1 (doesn't have gather)

AVX (doesn't have gather)

AVX2 (has gather)

AVX512(F) (has gather)

When you find the intrinsic function and click on it, the guide expands to show a Synopsis, Description and Operation pseudocode.

(Unfortunately, programming example code is not provided.)

To get a programming example, try a Google search for: _mm256_i32gather_pd

Jim Dempsey
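As a starting point, a minimal use of `_mm256_i32gather_pd` might look like the sketch below. It is an illustration under assumptions rather than a recommended implementation: `load4_strided_pd` is a hypothetical helper, and the intrinsic path is only compiled when the compiler defines `__AVX2__`; otherwise a scalar loop does the same job. Note that the double-precision gather takes four 32-bit indices in an `__m128i`, and the scale is 8 (the size of a double).

```c
#include <assert.h>
#include <stddef.h>
#if defined(__AVX2__)
#include <immintrin.h>
#endif

/* Hypothetical helper: copies four doubles spaced stride elements apart,
 * starting at base, into out[0..3]. */
static void load4_strided_pd(double out[4], const double *base, int stride)
{
    assert(out != NULL && base != NULL);
#if defined(__AVX2__)
    /* Four 32-bit element indices; scale 8 = sizeof(double). */
    const __m128i idx = _mm_setr_epi32(0, stride, 2 * stride, 3 * stride);
    _mm256_storeu_pd(out, _mm256_i32gather_pd(base, idx, 8));
#else
    for (int k = 0; k < 4; ++k)
        out[k] = base[(ptrdiff_t)k * stride];
#endif
}
```

The float variant, `_mm256_i32gather_ps`, follows the same pattern with eight indices in an `__m256i` and a scale of 4.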


Use -QxHost

Jim Dempsey


No luck with -QxHost either. Perhaps the processor does not support AVX2, which is odd, because CPU-Z says it does.
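One way to cross-check what the processor reports at run time is sketched below. It relies on the GCC/Clang builtin `__builtin_cpu_supports`, which is an assumption about the toolchain and is not the Intel-specific `_may_i_use_cpu_feature` mentioned earlier in the thread; on other compilers or non-x86 targets the sketch reports -1 ("unknown"). The names `simd_support`, `query_simd_support` and `print_simd_support` are hypothetical.

```c
#include <assert.h>
#include <stdio.h>

/* Each field is 1 (supported), 0 (not supported) or -1 (unknown). */
typedef struct {
    int avx;
    int avx2;
    int avx512f;
} simd_support;

static simd_support query_simd_support(void)
{
    simd_support s = {-1, -1, -1};
#if (defined(__GNUC__) || defined(__clang__)) && \
    (defined(__x86_64__) || defined(__i386__))
    __builtin_cpu_init();  /* safe to call more than once */
    s.avx     = __builtin_cpu_supports("avx")     != 0;
    s.avx2    = __builtin_cpu_supports("avx2")    != 0;
    s.avx512f = __builtin_cpu_supports("avx512f") != 0;
#endif
    return s;
}

static void print_simd_support(void)
{
    simd_support s = query_simd_support();
    assert(s.avx >= -1 && s.avx <= 1);
    printf("avx=%d avx2=%d avx512f=%d\n", s.avx, s.avx2, s.avx512f);
}
```

This only tells you what the hardware and operating system expose. Whether the compiler actually emits gather instructions for a given loop is a separate code-generation decision.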


Feel free to close this thread.

Regards


Hi,

Thanks for accepting this as a solution!

Glad to know that your issue is resolved. As this issue has been resolved, we will no longer respond to this thread. If you require any additional assistance from Intel, please start a new thread. Any further interaction in this thread will be considered community only.

Have a Good day.

Thanks & Regards

Shivani
