I am trying to run FlowNetS on the Intel DevCloud for the Edge.

Inference works on CPU, GPU, HETERO:GPU,CPU, and VPU, but not on HETERO:FPGA,CPU.

How can I solve this problem? I use a bitstream file from the sample tutorial. If a layer is unsupported on the FPGA, it should fall back to the CPU, right?

The code appears to get stuck at this line: net = ie.read_network(model=model_xml, weights=model_bin)

The error is: /var/spool/torque/mom_priv/jobs/70397.v-qsvr-2.devcloud-edge.SC: line 35: 23932 Segmentation fault

I have attached my XML file.
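A segmentation fault kills the interpreter before Python can print a traceback, and `print` output is block-buffered when stdout is redirected to a job file, so the last message in the log does not necessarily mark the line that crashed. The following is a minimal sketch, using only the standard library, of one way to make the crash site visible. The helper names `enable_crash_diagnostics` and `checkpoint` are hypothetical and not part of OpenVINO.

```python
import faulthandler
import sys


def enable_crash_diagnostics():
    """Dump the Python traceback of every thread on a fatal signal such as SIGSEGV."""
    # sys.__stderr__ is the original stderr, which keeps a real file
    # descriptor even if sys.stderr has been replaced by a wrapper.
    faulthandler.enable(file=sys.__stderr__, all_threads=True)
    return faulthandler.is_enabled()


def checkpoint(message):
    """Print a progress marker and flush it so it survives a hard crash."""
    print(message, flush=True)
    return message


if __name__ == "__main__":
    enable_crash_diagnostics()
    checkpoint("before read_network")
    # net = ie.read_network(model=model_xml, weights=model_bin)
    checkpoint("before load_network")
    # exec_net = ie.load_network(network=net, device_name=args.device)
```

With this in place, the job's error file should contain the Python stack at the moment of the crash, which distinguishes a failure in `read_network` from one in `load_network`. Running the script as `python3 -X faulthandler flownet.py ...` enables the same handler without code changes.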

Here is my qsub command:

=====

# Submit job to the queue
job_id_fpga = !qsub flownets.sh -l nodes=2:idc003a10 -F "results/fpga HETERO:FPGA,CPU FP16" -N Flownets_fpga
print(job_id_fpga[0])

# Progress indicators
if job_id_fpga:
    progressIndicator('results/fpga', f'i_progress_{job_id_fpga[0]}.txt', "Inference", 0, 100)

=====

Here is my job output:

====

########################################################################

# Date: Thu Feb 18 05:48:40 PST 2021

# Job ID: 70397.v-qsvr-2.devcloud-edge

# User: u60570

# Resources: neednodes=2:idc003a10,nodes=2:idc003a10,walltime=00:10:00

########################################################################

[setupvars.sh] OpenVINO environment initialized

INTELFPGAOCLSDKROOT is not set

Using script's current directory (/opt/altera/aocl-pro-rte/aclrte-linux64)

aoc was not found, but aocl was found. Assuming only RTE is installed.

AOCL_BOARD_PACKAGE_ROOT is set to /opt/intel/openvino/bitstreams/a10_vision_design_sg2_bitstreams/BSP/a10_1150_sg2. Using that.

Adding /opt/altera/aocl-pro-rte/aclrte-linux64/bin to PATH

Adding /opt/altera/aocl-pro-rte/aclrte-linux64/linux64/lib to LD_LIBRARY_PATH

Adding /opt/altera/aocl-pro-rte/aclrte-linux64/host/linux64/lib to LD_LIBRARY_PATH

Adding /opt/intel/openvino/bitstreams/a10_vision_design_sg2_bitstreams/BSP/a10_1150_sg2/linux64/lib to LD_LIBRARY_PATH

aocl program: Running program from /opt/intel/openvino/bitstreams/a10_vision_design_sg2_bitstreams/BSP/a10_1150_sg2/linux64/libexec

Programming device: a10gx_2ddr : Intel Vision Accelerator Design with Intel Arria 10 FPGA (acla10_1150_sg20)

Program succeed.

Imported Python modules successfully.

=> will save raw output and RGB visualization

=> fetching img pairs in './test/'

=> will save everything to ./test/flow

1 samples found

input_var shape torch.Size([1, 6, 384, 512])

[ INFO ] Initializing plugin for HETERO:FPGA,CPU device...

check

An Inference Engine object has been created

HETERO:FPGA,CPU

[ INFO ] Loading FlowNetS model to the plugin

[ INFO ] Loading network files:

models/FP16/saved_model.xml

models/FP16/saved_model.bin

########################################################################

# End of output for job 70397.v-qsvr-2.devcloud-edge

# Date: Thu Feb 18 05:49:23 PST 2021

########################################################################

sys.argv[0]=[/etc/collectd/telemetrySender.py]

sys.argv[1]=[u60570]

sys.argv[2]=[70397.v-qsvr-2.devcloud-edge]

sys.argv[3]=[42]

sys.argv[4]=[1613656122]

sys.argv[5]=[1613656164]

sys.argv[6]=[idc003a10_compnode_openvino-lts_intel-core_i5-6500te_intel-hd-530_ram8gb_arria10-fpga_tank-870_iei-mustang-f100-a10]

USER_ID=[u60570]

JOB_ID=[70397]

JOB_RUNTIME=[42]

FROM_TIME=[1613656122]

TO_TIME=[1613656164]

HOST_TYPE=[idc003a10_compnode_openvino-lts_intel-core_i5-6500te_intel-hd-530_ram8gb_arria10-fpga_tank-870_iei-mustang-f100-a10]

EDGE_NAME=[s003-n014]

PBS_O_WORKDIR=[/home/u60570/My-Notebooks/flownet]

APPLICATION_NAME=[flownet]

INTEL_SKU=[core-i5]

skipping application metrics

InfluxDBClient.write_points(metric_list) result_success:[True]

=====

Here is my job error

=====

0%| | 0/1 [00:00<?, ?it/s]

100%|██████████| 1/1 [00:00<00:00, 2.77it/s]/var/spool/torque/mom_priv/jobs/70397.v-qsvr-2.devcloud-edge.SC: line 35: 23932 Segmentation fault python3 flownet.py -IR $MODELPATH -r $OUTPUT_FILE -d $DEVICE

=====

Here is my shell file

=====

# Store input arguments: <output_directory> <device> <fp_precision>
OUTPUT_FILE=$1
DEVICE=$2
FP_MODEL=$3

# The default path for the job is the user's home directory,
# change directory to where the files are.
cd $PBS_O_WORKDIR

# Make sure that the output directory exists.
mkdir -p $OUTPUT_FILE

# Check for special setup steps depending upon device to be used
if [ "$DEVICE" = "HETERO:FPGA,CPU" ]; then
    # Environment variables and compilation for edge compute nodes with FPGAs - Updated for OpenVINO 2020.3
    export AOCL_BOARD_PACKAGE_ROOT=/opt/intel/openvino/bitstreams/a10_vision_design_sg2_bitstreams/BSP/a10_1150_sg2
    source /opt/altera/aocl-pro-rte/aclrte-linux64/init_opencl.sh
    aocl program acl0 /opt/intel/openvino/bitstreams/a10_vision_design_sg2_bitstreams/2020-3_PL2_FP16_MobileNet_Clamp.aocx
    export CL_CONTEXT_COMPILER_MODE_INTELFPGA=3
fi

# Check for special setup steps depending upon device to be used
if [ "$DEVICE" = "HETERO:FPGA,GPU,CPU" ]; then
    # Environment variables and compilation for edge compute nodes with FPGAs - Updated for OpenVINO 2020.3
    export AOCL_BOARD_PACKAGE_ROOT=/opt/intel/openvino/bitstreams/a10_vision_design_sg2_bitstreams/BSP/a10_1150_sg2
    source /opt/altera/aocl-pro-rte/aclrte-linux64/init_opencl.sh
    aocl program acl0 /opt/intel/openvino/bitstreams/a10_vision_design_sg2_bitstreams/2020-3_PL2_FP16_MobileNet_Clamp.aocx
    export CL_CONTEXT_COMPILER_MODE_INTELFPGA=3
fi

# Set inference model IR files using specified precision
MODELPATH=models/${FP_MODEL}/saved_model

# Run the FlowNetS inference script
python3 flownet.py -IR $MODELPATH \
    -r $OUTPUT_FILE \
    -d $DEVICE

=====

Here is my code

=====

import os
import time
import numpy as np
from openvino.inference_engine import IECore
from argparser import args
import torchvision.transforms as transforms
import flow_transforms
from path import Path
from tqdm import tqdm
from imageio import imread, imwrite
import torch
import logging as log
from matplotlib import pyplot as plt
from qarpo.demoutils import progressUpdate

global args, save_path

print('Imported Python modules successfully.')

import sys
import torch.nn.functional as F
import cv2

# Optional-dependency checks (these modules are also imported above)
try:
    from path import Path
except ImportError:
    print("path not installed")

try:
    from tqdm import tqdm
except ImportError:
    print("tqdm not installed")

try:
    from imageio import imread, imwrite
except ImportError:
    print("imageio not installed")

if args.output_value == 'both':
    output_string = "raw output and RGB visualization"
elif args.output_value == 'raw':
    output_string = "raw output"
elif args.output_value == 'vis':
    output_string = "RGB visualization"
print("=> will save " + output_string)

data_dir = Path(args.data)
print("=> fetching img pairs in '{}'".format(args.data))
if args.output is None:
    save_path = data_dir/'flow'
else:
    save_path = Path(args.output)
print('=> will save everything to {}'.format(save_path))
save_path.makedirs_p()

# Data loading code
input_transform = transforms.Compose([
    flow_transforms.ArrayToTensor(),
    transforms.Normalize(mean=[0,0,0], std=[255,255,255]),
    transforms.Normalize(mean=[0.411,0.432,0.45], std=[1,1,1])
])

img_pairs = []
for ext in args.img_exts:
    test_files = data_dir.files('*1.{}'.format(ext))
    for file in test_files:
        img_pair = file.parent / (file.stem[:-1] + '2.{}'.format(ext))
        if img_pair.isfile():
            img_pairs.append([file, img_pair])

print('{} samples found'.format(len(img_pairs)))

for (img1_file, img2_file) in tqdm(img_pairs):
    img1 = input_transform(imread(img1_file))
    img2 = input_transform(imread(img2_file))
    input_var = torch.cat([img1, img2]).unsqueeze(0)

    if args.bidirectional:
        # feed inverted pair along with normal pair
        inverted_input_var = torch.cat([img2, img1]).unsqueeze(0)
        input_var = torch.cat([input_var, inverted_input_var])

    print("input_var shape",input_var.shape)

def load_model():
    """
    Load the OpenVINO model.
    """
    log.info("Loading U-Net model to the plugin")
    # Build the IR file names from the path prefix given on the command line
    model_xml = args.intermediate_rep +".xml"
    model_bin = args.intermediate_rep +".bin"
    return model_xml, model_bin

log.basicConfig(format="[ %(levelname)s ] %(message)s", level=log.INFO, stream=sys.stdout)

# Plugin initialization for specified device and load extensions library if specified
log.info("Initializing plugin for {} device...".format(args.device))
print("check")
#plugin = IEPlugin(device=args.device, plugin_dirs=args.plugin_dir)
ie=IECore()
print("An Inference Engine object has been created")
print(args.device)

if args.cpu_extension and "CPU" in args.device:
    #plugin.add_cpu_extension(args.cpu_extension)
    ie.add_extension(args.cpu_extension, "CPU")

# Read IR
# If using MYRIAD then we need to load FP16 model version
model_xml, model_bin = load_model()
log.info("Loading network files:\n\t{}\n\t{}".format(model_xml, model_bin))
net = ie.read_network(model=model_xml, weights=model_bin)
print("network loaded check!")

if args.device == "CPU":
    supported_layers = ie.query_network(net, args.device)
    not_supported_layers = [l for l in net.layers.keys() if l not in supported_layers]
    if len(not_supported_layers) != 0:
        log.error("Following layers are not supported by the plugin for specified device {}:\n {}".
                  format(args.device, ', '.join(not_supported_layers)))
        log.error("Please try to specify cpu extensions library path in sample's command line parameters using -l "
                  "or --cpu_extension command line argument")
        sys.exit(1)

assert len(net.inputs.keys()) == 1, "Sample supports only single input topologies"
assert len(net.outputs) == 1, "Sample supports only single output topologies"

# Ask OpenVINO for input and output tensor names and sizes
input_blob = next(iter(net.inputs)) # Name of the input layer
out_blob = next(iter(net.outputs)) # Name of the output layer
print("name_output",out_blob)

batch_size, n_channels, height, width = net.inputs[input_blob].shape
batch_size, n_out_channels, height_out, width_out = net.outputs[out_blob].shape
net.batch_size = batch_size
print("batch_size is:",batch_size)

# Loading model to the plugin
exec_net = ie.load_network(network=net,device_name=args.device)

def flow2rgb(flow_map, max_value):
    flow_map_np = flow_map
    _, h, w = flow_map_np.shape
    flow_map_np[:,(flow_map_np[0] == 0) & (flow_map_np[1] == 0)] = float('nan')
    rgb_map = np.ones((3,h,w)).astype(np.float32)
    if max_value is not None:
        normalized_flow_map = flow_map_np / max_value
    else:
        normalized_flow_map = flow_map_np / (np.abs(flow_map_np).max())
    rgb_map[0] += normalized_flow_map[0]
    rgb_map[1] -= 0.5*(normalized_flow_map[0] + normalized_flow_map[1])
    rgb_map[2] += normalized_flow_map[1]
    return rgb_map.clip(0,1)

def print_stats(exec_net, input_data, n_channels, batch_size, input_blob, out_blob, args):
    """
    Prints layer by layer inference times.
    Good for profiling which ops are most costly in your model.
    """
    # Start sync inference
    print("Starting inference ({} iterations)".format(args.number_iter))
    infer_time = []
    for i in range(args.number_iter):
        t0 = time.time()
        print(input_data.shape)
        res = exec_net.infer(inputs={input_blob: input_data})
        infer_time.append((time.time() - t0))

    # NOTE: time.time() deltas are in seconds, so average_inference is in seconds.
    # The "ms" label and the 1000.0 factor in the two prints below are off by 1000x;
    # the values written to the stats file further down use the correct units.
    average_inference = np.average(np.asarray(infer_time))
    print("Average running time of one batch: {:.5f} ms".format(average_inference))
    print("Images per second = {:.3f}".format(batch_size * 1000.0 / average_inference))

    perf_counts = exec_net.requests[0].get_perf_counts()
    log.info("Performance counters:")
    log.info("{:<70} {:<15} {:<15} {:<15} {:<10}".format("name",
                                                         "layer_type",
                                                         "exec_type",
                                                         "status",
                                                         "real_time, us"))
    for layer, stats in perf_counts.items():
        log.info("{:<70} {:<15} {:<15} {:<15} {:<10}".format(layer,
                                                             stats["layer_type"],
                                                             stats["exec_type"],
                                                             stats["status"],
                                                             stats["real_time"]))

    output = res[out_blob]
    print(output.shape)

    for suffix, flow_output in zip(['flow', 'inv_flow'], output):
        filename = Path(args.results_directory)/'{}{}'.format(img1_file.stem[:-1], suffix)
        Path(args.results_directory).makedirs_p()
        if args.output_value in['vis', 'both']:
            rgb_flow = flow2rgb(args.div_flow * flow_output, max_value=args.max_flow)
            to_save = (rgb_flow * 255).astype(np.uint8).transpose(1,2,0)
            imwrite(filename + '.png', to_save)
            print("saving to..",filename + '.png')
        if args.output_value in ['raw', 'both']:
            # Make the flow map a HxWx2 array as in .flo files
            to_save = (args.div_flow*flow_output).transpose(1,2,0)
            np.save(filename + '.npy', to_save)

    # png_directory and job_id are module-level names defined before this function is called
    with open(os.path.join(png_directory, f'stats_{job_id}.txt'), 'w') as f:
        f.write("{:.5f}\n".format(average_inference))
        f.write("{}\n".format(args.number_iter))
        f.write("Average running time of one batch: {:.5f} s\n".format(average_inference))
        f.write("Images per second = {:.3f}".format(batch_size * 1.0 / average_inference))

job_id = os.environ['PBS_JOBID']
print(job_id)

png_directory = args.results_directory
if not os.path.exists(png_directory):
    os.makedirs(png_directory)

args.stats = True
progress_file_path = os.path.join(png_directory,f"i_progress_{job_id}.txt")
process_time_start = time.time()

if args.stats:
    # Print the latency and throughput for inference
    print_stats(exec_net, input_var, n_channels, batch_size, input_blob, out_blob, args)

progressUpdate(progress_file_path, time.time()-process_time_start, 1, 1)

try:
    import applicationMetricWriter
    applicationMetricWriter.send_application_metrics(model_xml, args.device)
except:
    print("applicationMetricWriter not installed")

=====

Here is my good result from CPUs

=====

########################################################################

# Date: Thu Feb 18 05:58:29 PST 2021

# Job ID: 70401.v-qsvr-2.devcloud-edge

# User: u60570

# Resources: neednodes=2:idc003a10,nodes=2:idc003a10,walltime=00:10:00

########################################################################

[setupvars.sh] OpenVINO environment initialized

Imported Python modules successfully.

=> will save raw output and RGB visualization

=> fetching img pairs in './test/'

=> will save everything to ./test/flow

1 samples found

input_var shape torch.Size([1, 6, 384, 512])

[ INFO ] Initializing plugin for CPU device...

check

An Inference Engine object has been created

CPU

[ INFO ] Loading FlowNetS model to the plugin

[ INFO ] Loading network files:

models/FP16/saved_model.xml

models/FP16/saved_model.bin

network loaded check!

name_output Conv_40

batch_size is: 1

70401.v-qsvr-2.devcloud-edge

Starting inference (60 iterations)

torch.Size([1, 6, 384, 512])

[the line above appears 60 times in the log, once per iteration]

Average running time of one batch: 0.12851 ms

Images per second = 7781.442

[ INFO ] Performance counters:

[ INFO ] name layer_type exec_type status real_time, us

[ INFO ] Concat_24 Concat unknown_FP32 EXECUTED 3

[ INFO ] Concat_24_nchw_nChw8c_ConvTranspose_27 Reorder ref_any_FP32 EXECUTED 64

[ INFO ] Concat_24_nchw_nChw8c_Conv_25 Reorder ref_any_FP32 EXECUTED 65

[ INFO ] Concat_29 Concat unknown_FP32 EXECUTED 2

[ INFO ] Concat_29_nchw_nChw8c_ConvTranspose_32 Reorder ref_any_FP32 EXECUTED 163

[ INFO ] Concat_29_nchw_nChw8c_Conv_30 Reorder ref_any_FP32 EXECUTED 240

[ INFO ] Concat_34 Concat unknown_FP32 EXECUTED 3

[ INFO ] Concat_34_nchw_nChw8c_ConvTranspose_37 Reorder ref_any_FP32 EXECUTED 479

[ INFO ] Concat_34_nchw_nChw8c_Conv_35 Reorder ref_any_FP32 EXECUTED 573

[ INFO ] Concat_39 Concat unknown_FP32 EXECUTED 3

[ INFO ] Concat_39_nchw_nChw8c_Conv_40 Reorder ref_any_FP32 EXECUTED 1044

[ INFO ] ConvTranspose_21 Deconvolution jit_gemm_FP32 EXECUTED 1582

[ INFO ] ConvTranspose_22 Deconvolution jit_avx2_FP32 EXECUTED 3048

[ INFO ] ConvTranspose_26 Deconvolution jit_gemm_FP32 EXECUTED 12

[ INFO ] ConvTranspose_27 Deconvolution jit_avx2_FP32 EXECUTED 5390

[ INFO ] ConvTranspose_31 Deconvolution jit_gemm_FP32 EXECUTED 30

[ INFO ] ConvTranspose_32 Deconvolution jit_avx2_FP32 EXECUTED 7657

[ INFO ] ConvTranspose_36 Deconvolution jit_gemm_FP32 EXECUTED 102

[ INFO ] ConvTranspose_37 Deconvolution jit_avx2_FP32 EXECUTED 8292

[ INFO ] Conv_0 Convolution jit_sse42_FP32 EXECUTED 26564

[ INFO ] Conv_10 Convolution jit_avx2_FP32 EXECUTED 10198

[ INFO ] Conv_10_nChw8c_nchw_Concat_29 Reorder jit_uni_FP32 EXECUTED 105

[ INFO ] Conv_12 Convolution jit_avx2_FP32 EXECUTED 3041

[ INFO ] Conv_14 Convolution jit_avx2_FP32 EXECUTED 2726

[ INFO ] Conv_14_nChw8c_nchw_Concat_24 Reorder jit_uni_FP32 EXECUTED 21

[ INFO ] Conv_16 Convolution jit_avx2_FP32 EXECUTED 1789

[ INFO ] Conv_18 Convolution jit_avx2_FP32 EXECUTED 3186

[ INFO ] Conv_2 Convolution jit_avx2_FP32 EXECUTED 13999

[ INFO ] Conv_20 Convolution jit_avx2_FP32 EXECUTED 84

[ INFO ] Conv_20_nChw8c_nchw_ConvTranspose_21 Reorder ref_any_FP32 EXECUTED 7

[ INFO ] Conv_25 Convolution jit_avx2_FP32 EXECUTED 219

[ INFO ] Conv_25_nChw8c_nchw_ConvTranspose_26 Reorder ref_any_FP32 EXECUTED 6

[ INFO ] Conv_2_nChw8c_nchw_Concat_39 Reorder jit_uni_FP32 EXECUTED 789

[ INFO ] Conv_30 Convolution jit_avx2_FP32 EXECUTED 593

[ INFO ] Conv_30_nChw8c_nchw_ConvTranspose_31 Reorder ref_any_FP32 EXECUTED 8

[ INFO ] Conv_35 Convolution jit_avx2_FP32 EXECUTED 1175

[ INFO ] Conv_35_nChw8c_nchw_ConvTranspose_36 Reorder ref_any_FP32 EXECUTED 14

[ INFO ] Conv_4 Convolution jit_avx2_FP32 EXECUTED 14126

[ INFO ] Conv_40 Convolution jit_avx2_FP32 EXECUTED 2298

[ INFO ] Conv_40_nChw8c_nchw_out_Conv_40 Reorder ref_any_FP32 EXECUTED 30

[ INFO ] Conv_6 Convolution jit_avx2_FP32 EXECUTED 10050

[ INFO ] Conv_6_nChw8c_nchw_Concat_34 Reorder jit_uni_FP32 EXECUTED 321

[ INFO ] Conv_8 Convolution jit_avx2_FP32 EXECUTED 5448

[ INFO ] LeakyRelu_111863 ReLU undef NOT_RUN 0

[ INFO ] LeakyRelu_11867 ReLU undef NOT_RUN 0

[ INFO ] LeakyRelu_131871 ReLU undef NOT_RUN 0

[ INFO ] LeakyRelu_151847 ReLU undef NOT_RUN 0

[ INFO ] LeakyRelu_171883 ReLU undef NOT_RUN 0

[ INFO ] LeakyRelu_191887 ReLU undef NOT_RUN 0

[ INFO ] LeakyRelu_231851 ReLU jit_avx2_FP32 EXECUTED 15

[ INFO ] LeakyRelu_231851_nChw8c_nchw_Concat_24 Reorder jit_uni_FP32 EXECUTED 19

[ INFO ] LeakyRelu_281855 ReLU jit_avx2_FP32 EXECUTED 29

[ INFO ] LeakyRelu_281855_nChw8c_nchw_Concat_29 Reorder jit_uni_FP32 EXECUTED 36

[ INFO ] LeakyRelu_31839 ReLU undef NOT_RUN 0

[ INFO ] LeakyRelu_331859 ReLU jit_avx2_FP32 EXECUTED 41

[ INFO ] LeakyRelu_331859_nChw8c_nchw_Concat_34 Reorder jit_uni_FP32 EXECUTED 89

[ INFO ] LeakyRelu_381879 ReLU jit_avx2_FP32 EXECUTED 173

[ INFO ] LeakyRelu_381879_nChw8c_nchw_Concat_39 Reorder jit_uni_FP32 EXECUTED 342

[ INFO ] LeakyRelu_51875 ReLU undef NOT_RUN 0

[ INFO ] LeakyRelu_71843 ReLU undef NOT_RUN 0

[ INFO ] LeakyRelu_91891 ReLU undef NOT_RUN 0

[ INFO ] input.1_nchw_nChw8c_Conv_0 Reorder ref_any_FP32 EXECUTED 1276

[ INFO ] out_Conv_40 Output unknown_FP32 NOT_RUN 0

(1, 2, 96, 128)

saving to.. results/core/imageflow.png

Create progress tracker

CPU

applicationMetricWriter not installed

########################################################################

# End of output for job 70401.v-qsvr-2.devcloud-edge

# Date: Thu Feb 18 05:59:17 PST 2021

########################################################################

sys.argv[0]=[/etc/collectd/telemetrySender.py]

sys.argv[1]=[u60570]

sys.argv[2]=[70401.v-qsvr-2.devcloud-edge]

sys.argv[3]=[45]

sys.argv[4]=[1613656712]

sys.argv[5]=[1613656757]

sys.argv[6]=[idc003a10_compnode_openvino-lts_intel-core_i5-6500te_intel-hd-530_ram8gb_arria10-fpga_tank-870_iei-mustang-f100-a10]

USER_ID=[u60570]

JOB_ID=[70401]

JOB_RUNTIME=[45]

FROM_TIME=[1613656712]

TO_TIME=[1613656757]

HOST_TYPE=[idc003a10_compnode_openvino-lts_intel-core_i5-6500te_intel-hd-530_ram8gb_arria10-fpga_tank-870_iei-mustang-f100-a10]

EDGE_NAME=[s003-n014]

PBS_O_WORKDIR=[/home/u60570/My-Notebooks/flownet]

APPLICATION_NAME=[flownet]

INTEL_SKU=[core-i5]

skipping application metrics

InfluxDBClient.write_points(metric_list) result_success:[True]

=====
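The CPU log above reports "0.12851 ms" and "7781.442" images per second. Because the script measures each iteration with `time.time()`, whose differences are in seconds, those figures are more plausibly 0.12851 s per batch and roughly 7.8 images per second. A minimal sketch of the unit handling follows; `summarize_timings` is a hypothetical helper, not part of the posted script.

```python
def summarize_timings(infer_times_s, batch_size=1):
    """Convert per-iteration wall-clock times in seconds to latency and throughput."""
    mean_s = sum(infer_times_s) / len(infer_times_s)
    return {
        "latency_ms": mean_s * 1000.0,               # seconds -> milliseconds
        "images_per_second": batch_size / mean_s,    # batch per mean second
    }


# Average taken from the CPU log above, interpreted as seconds.
stats = summarize_timings([0.12851], batch_size=1)
print("Average running time of one batch: {:.2f} ms".format(stats["latency_ms"]))
print("Images per second = {:.3f}".format(stats["images_per_second"]))
```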



Hi yenfu9921132,

Thanks for reaching out! You are correct, if a layer is not supported on FPGA it should fall back to CPU when using the HETERO plugin. Could you share your model (Flownets.onnx) and model optimizer command used to convert to IR?

Regards,

Jesus


Yes, here is the command. I switched from --input_shape to --batch to see whether it made any difference, but it did not. I use FP16 for every job.

=== mo.py command===

# Create FP16 IR files
# (previously used instead of --batch 1: --input_shape=[1,6,384,512])
!mo.py \
    --input_model ./Flownets.onnx \
    --framework onnx \
    --data_type FP16 \
    --output_dir models/FP16 \
    --model_name saved_model \
    --batch 1

# Create FP32 IR files
# (previously used instead of --batch 1: --input_shape=[1,6,384,512])
!mo.py \
    --input_model ./Flownets.onnx \
    --framework onnx \
    --data_type FP32 \
    --output_dir models/FP32 \
    --model_name saved_model \
    --batch 1

# Find all resulting IR files
!echo "\nAll IR files that were created:"
!find ./models -name "*.xml" -o -name "*.bin"

===end===

The ONNX model is 155 MB, so I put it on my Google Drive.

Here is the link: https://drive.google.com/file/d/1--QUqpRhVH02mDfE1j85vT-8b_-26o7q/view?usp=sharing
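To see which layer types ended up in the converted IR (for example, to compare them with the layers a given plugin supports), the .xml file can be inspected directly. This is a minimal sketch, assuming the IR stores each layer as a `<layer ... type="...">` element. `SAMPLE_IR` is a made-up fragment for illustration, not the actual FlowNetS IR.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def count_layer_types(ir_xml_text):
    """Count how often each layer type occurs in an IR .xml document."""
    root = ET.fromstring(ir_xml_text)
    return Counter(layer.get("type") for layer in root.iter("layer"))


# Hypothetical miniature IR used only to demonstrate the helper.
SAMPLE_IR = """<net name="demo" version="10">
  <layers>
    <layer id="0" name="input.1" type="Parameter"/>
    <layer id="1" name="Conv_0" type="Convolution"/>
    <layer id="2" name="Conv_2" type="Convolution"/>
    <layer id="3" name="ConvTranspose_21" type="Deconvolution"/>
  </layers>
</net>"""

counts = count_layer_types(SAMPLE_IR)
for layer_type, n in sorted(counts.items()):
    print(layer_type, n)
```

For a real model, read the file first, for example `count_layer_types(open("models/FP16/saved_model.xml").read())`.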


Hi yenfu9921132,

Thanks for sharing the model. Could you also share your Python code as a .py file? All the spacing and formatting was lost when it was posted to the discussion.

I converted your model and loaded it onto HETERO:FPGA,GPU,CPU but did not see a segmentation fault. I'll let you know what else I find once I can try it with your code.

Regards,

Jesus


Hi Jesus,

Yes, I uploaded my whole working directory to my Google Drive at the following link.

https://drive.google.com/file/d/1nL_Vh3b8z10EHQrOiKE7axWvB4leNe41/view?usp=sharing

Thanks for helping me.

Regards,

Yen-Fu


Hi yenfu9921132,

Thanks for providing your project files. I was able to reproduce the reported behavior, and I am still debugging to determine whether this is a bug. One thing that comes to mind is the bitstream file (aocx) being used: this one is specific to the tiny-yolo-ssd300 model.

Let me look into it a little more and get back to you.

Regards,

Jesus


Yes, I also tried some other bitstream files, but the result is the same.

Is there any way to learn how to implement an OpenVINO function with the OpenCL SDK for FPGAs?

Regards,

Yen Fu


Hi yenfu9921132,

I apologize for the delay, I am still waiting to hear back from the development team. I also sent a note to the OpenVINO team for guidance on the FPGA bitstream files. I will let you know as soon as I hear back.

Regards,

Jesus


Hi yenfu9921132,

Thank you for your patience. The FPGA team has confirmed that FlowNetS is not supported. The model has not been tested before, and there are currently no plans to support it.

Unsupported layers should fall back to the CPU. However, the segmentation fault could occur if the FPGA plugin reports a layer as supported when it cannot actually run it. One option is to add the -pc flag, as demonstrated in the benchmark_app.py application, to display which device executes each layer. Take a look at this guide; it contains information on modifying the layer affinity.

Regards,

Jesus
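The layer-affinity suggestion above can be sketched in Python as follows. This assumes the OpenVINO 2020.x Python API, where `IECore.query_network` returns a `{layer_name: device}` dictionary and each entry of `net.layers` has a settable `affinity` attribute. The helper names and the `force_cpu` parameter are hypothetical, and which layers to pin to the CPU would have to be found by experiment.

```python
from collections import defaultdict


def group_layers_by_device(layer_map):
    """Turn {layer_name: device} into {device: [layer_name, ...]}."""
    groups = defaultdict(list)
    for layer, device in layer_map.items():
        groups[device].append(layer)
    return {device: sorted(layers) for device, layers in groups.items()}


def apply_overrides(layer_map, force_cpu):
    """Return a copy of layer_map with the named layers pinned to CPU."""
    return {layer: ("CPU" if layer in force_cpu else device)
            for layer, device in layer_map.items()}


def load_with_affinity(model_xml, model_bin, device="HETERO:FPGA,CPU", force_cpu=()):
    # Imported lazily so the helpers above work without OpenVINO installed.
    from openvino.inference_engine import IECore

    ie = IECore()
    net = ie.read_network(model=model_xml, weights=model_bin)
    # Ask the HETERO plugin which device it would pick for each layer.
    layer_map = ie.query_network(network=net, device_name=device)
    layer_map = apply_overrides(layer_map, set(force_cpu))
    for dev, layers in group_layers_by_device(layer_map).items():
        print(dev, len(layers), layers, flush=True)
    # Assumption: 2020.x API, where per-layer affinity is a settable attribute.
    for layer, dev in layer_map.items():
        net.layers[layer].affinity = dev
    return ie.load_network(network=net, device_name=device)
```

Printing the grouping before `load_network` shows which layers the FPGA plugin claims. Moving suspicious ones into `force_cpu` a few at a time is one way to narrow down a layer that crashes.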


Hi Jesus,

OK. Thank you very much!!

Regards,

Yen Fu


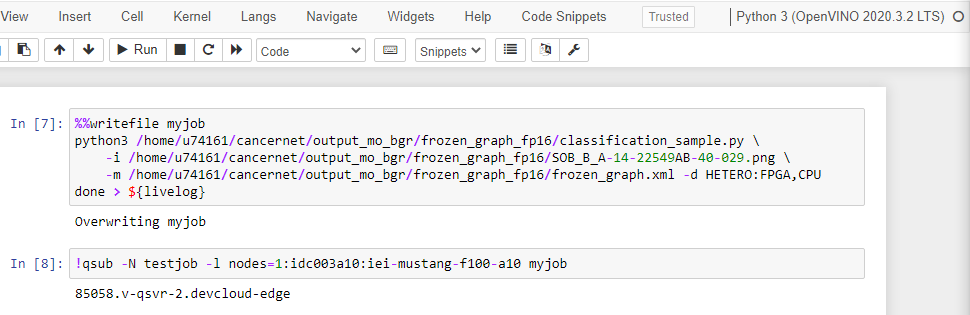

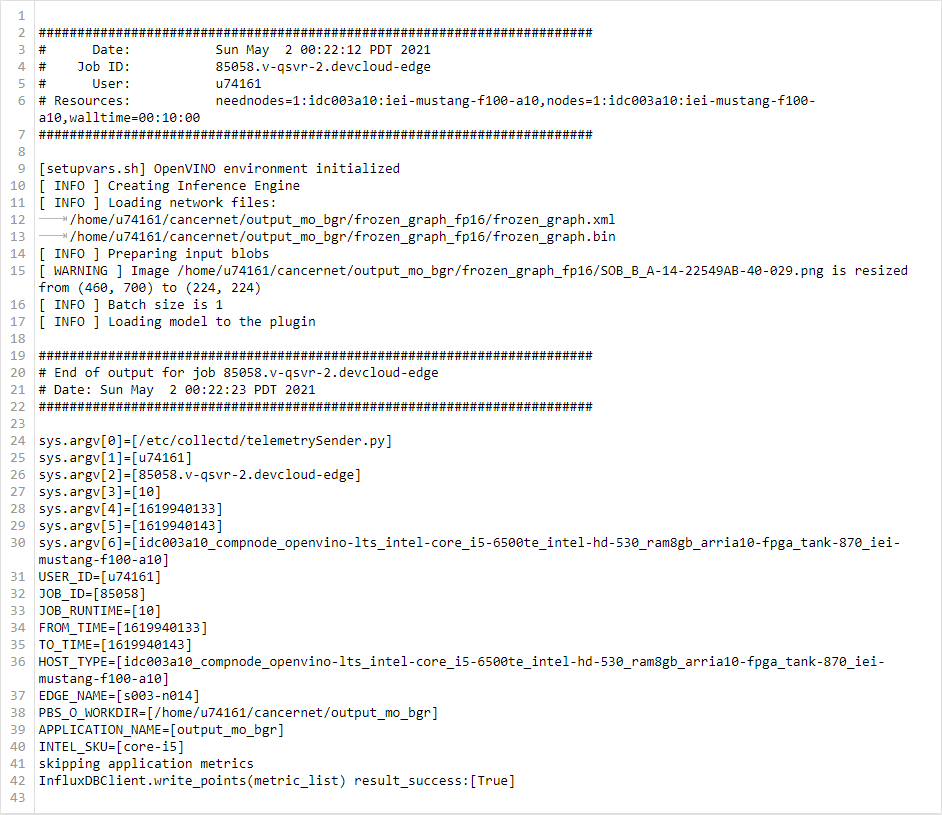

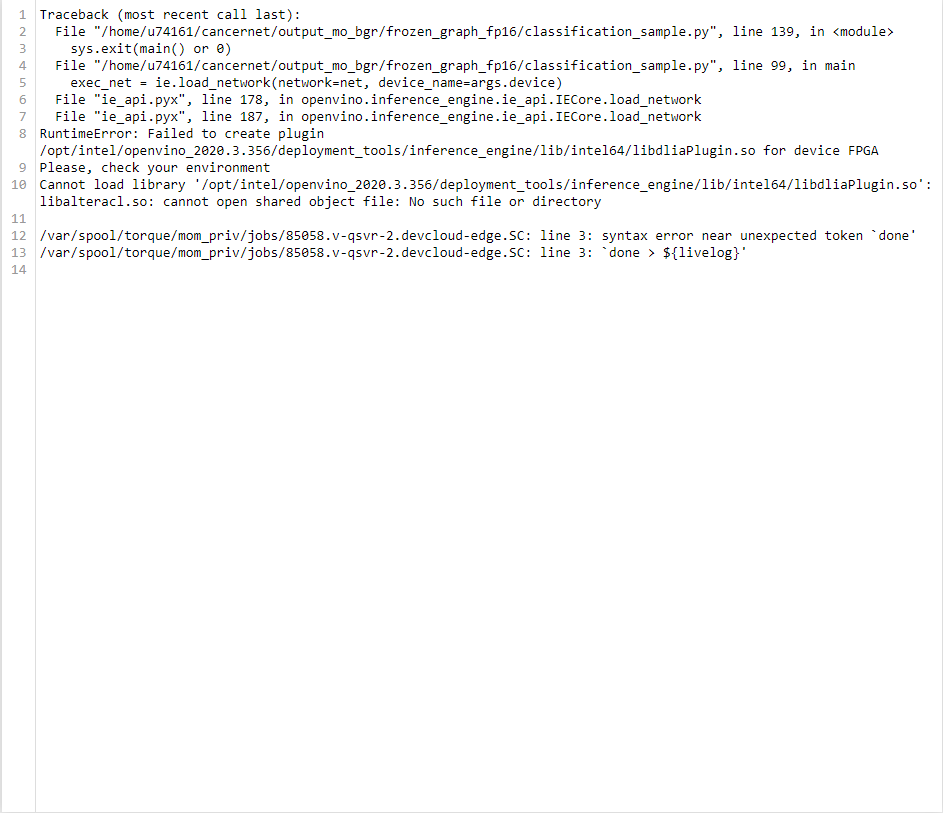

Hi all,

I have an almost identical problem; any expertise would be much appreciated.

I am using OpenVINO 2020.3 LTS, but it keeps reporting that the FPGA plugin is not supported. Can anyone advise on this issue?

testjob.o85058:

testjob.e85058:

I traced the plugin to "/opt/intel/openvino_2020.3.356/deployment_tools/inference_engine/lib/intel64/libdliaPlugin.so". The libdliaPlugin.so file is there, but the error still appears.

I would really appreciate any help. Thank you and stay safe.
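One quick check when a plugin file exists but the device is still rejected is to ask the Inference Engine which devices it can see on the node where the job runs. This is a minimal sketch, assuming the `IECore.available_devices` property of the 2020.x Python API. Whether an FPGA is listed as `FPGA` or with an index such as `FPGA.0` is an assumption here, and `has_device` is a hypothetical helper.

```python
def has_device(available_devices, name):
    """True if `name` or an indexed variant such as `name.0` is listed."""
    return any(d == name or d.startswith(name + ".") for d in available_devices)


def report_devices():
    # Imported lazily so has_device() can be used without OpenVINO installed.
    from openvino.inference_engine import IECore

    devices = IECore().available_devices
    print("Available devices:", devices, flush=True)
    return has_device(devices, "FPGA")
```

Calling `report_devices()` from inside the submitted job, rather than from the notebook, shows what the compute node itself exposes.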
