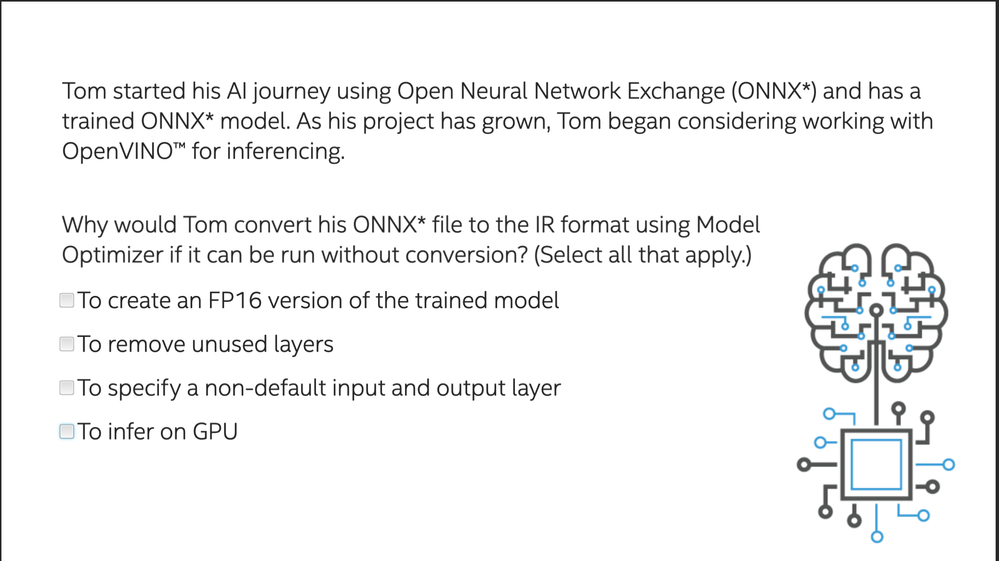

So, I'm taking the tests for each Edge AI Certification section, and I've hit a question I can't decipher. It's in the test for lesson 5: Model Optimizer.

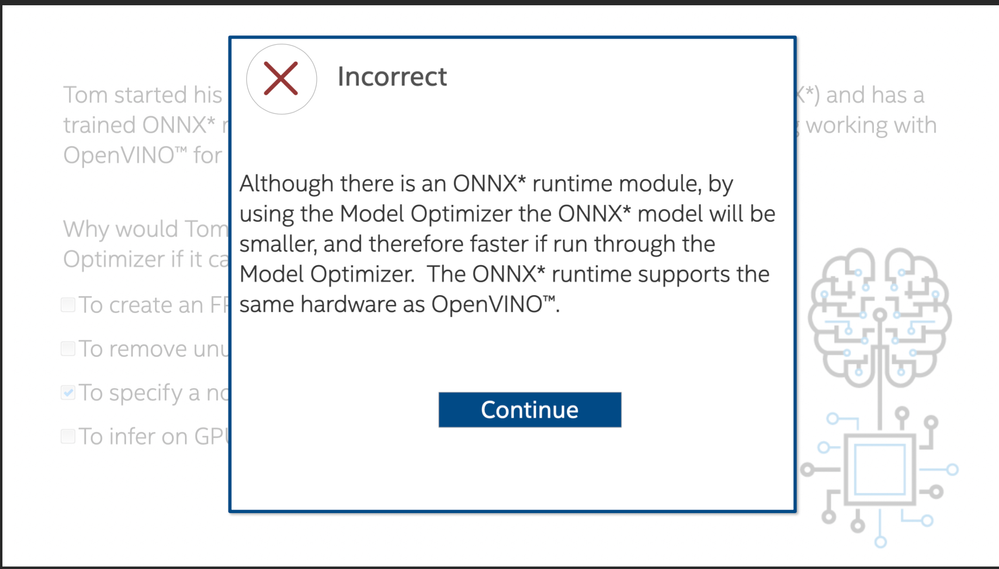

Here are screenshots of the question and the response I get regardless of which items I select for an answer.

Selecting "Remove unused layers" alone doesn't work.

Combinations that include it don't work either.

"FP16" and "GPU" don't work, as the incorrect-answer response sort of suggests (if ONNX runs on the same hardware, then it supports FP16 and can infer on GPUs, right?).

So, what's the right answer?

Thanks!

It's a combination of three: "remove unused layers", plus "FP16 version" plus "non-default input and output".
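The three reasons map fairly directly onto what Model Optimizer does during conversion. A minimal sketch, assuming the legacy `mo` command-line tool from the OpenVINO releases the course was built on; the helper name `build_mo_command` and the default `ir` output directory are hypothetical. It also assumes, as the course material describes, that `mo` prunes layers that don't contribute to the chosen outputs as part of conversion, so "remove unused layers" has no dedicated flag. Note there is no target-device option at all: the device is picked later, at inference time.

```python
from typing import List, Optional


def build_mo_command(
    onnx_path: str,
    fp16: bool = False,
    inputs: Optional[List[str]] = None,
    outputs: Optional[List[str]] = None,
    output_dir: str = "ir",  # hypothetical default location for the .xml/.bin pair
) -> List[str]:
    """Return an argv list for a legacy Model Optimizer ONNX -> IR conversion.

    Hypothetical helper: it only assembles arguments, it does not run `mo`.
    Unused-layer removal happens during conversion itself, so it has no flag.
    """
    cmd = ["mo", "--input_model", onnx_path, "--output_dir", output_dir]
    if fp16:
        # Older releases; later releases replaced this with --compress_to_fp16.
        cmd += ["--data_type", "FP16"]
    if inputs:
        # Non-default input node(s); layers upstream of them are cut away.
        cmd += ["--input", ",".join(inputs)]
    if outputs:
        # Non-default output node(s); layers past them are cut away.
        cmd += ["--output", ",".join(outputs)]
    return cmd
```

For example, `subprocess.run(build_mo_command("model.onnx", fp16=True), check=True)` would produce an FP16 IR, assuming `mo` is on your `PATH`.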

Thanks! But not "to run on a GPU" (which requires FP16)?

No, converting an ONNX model to IR format is not required to perform inference on the GPU. The ONNX runtime supports the same hardware as OpenVINO, so the ONNX model can be used directly without first converting it to IR format.
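What "used directly" can look like in code: a minimal sketch, assuming OpenVINO 2022.1 or newer and its Python `openvino.runtime.Core` API (the course may have used the older `IECore` API, so details can differ). The function name `compile_onnx_without_ir` is hypothetical. Also worth knowing: the OpenVINO GPU plugin accepts FP32 models too, so an FP16 model file is not a prerequisite for GPU inference.

```python
def compile_onnx_without_ir(onnx_path: str, device: str = "GPU"):
    """Load an .onnx file and compile it for `device`, skipping IR conversion.

    Hypothetical helper; assumes OpenVINO 2022.1+ is installed.
    """
    # Imported inside the function so this module loads even without OpenVINO.
    from openvino.runtime import Core

    core = Core()
    # read_model accepts an ONNX file directly; no .xml/.bin pair is needed.
    model = core.read_model(onnx_path)
    # The target device is chosen here, at compile time, not during conversion.
    return core.compile_model(model, device)
```

Calling `compile_onnx_without_ir("model.onnx")` returns a compiled model that can then be run through `create_infer_request()` as usual.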