Good day everyone

I am working on a project that uses an AI model to detect car plates in a video.

I created a model, optimized it, and generated 3 files (plate_detection.bin, plate_detection.xml, plate_detection.mapping).

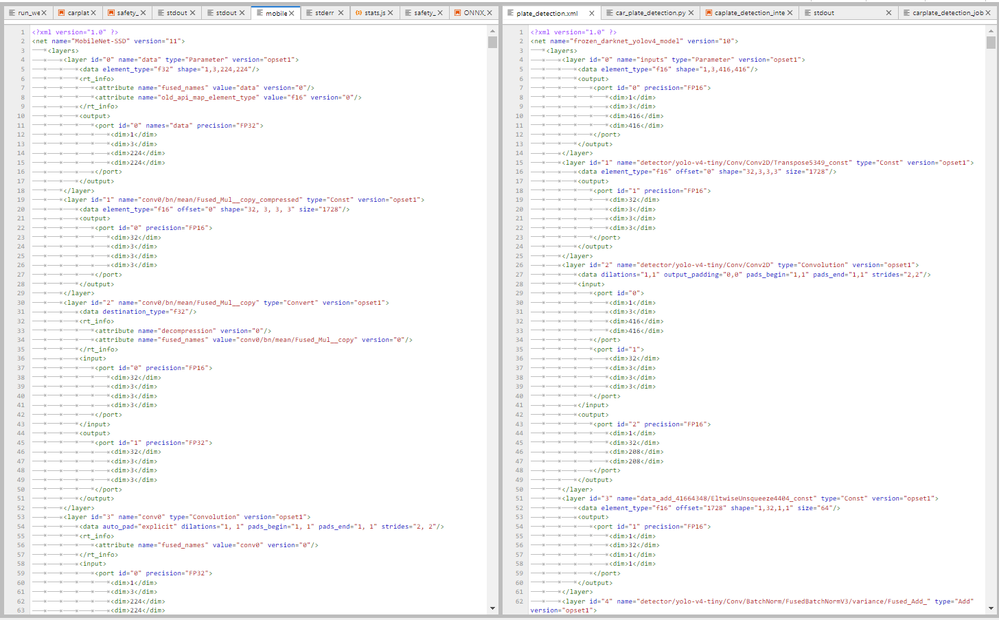

However, when I look into the .xml file and compare it with the sample on the DevCloud for the Edge (Reference-samples/iot-devcloud/openvino-dev-latest/developer-samples/python/safety-gear-detection-python/models/mobilenet-ssd/FP16/mobilenet-ssd.xml), the contents are a bit different.

The figure below shows the contents of the two .xml files (left: sample in DevCloud, right: own project).

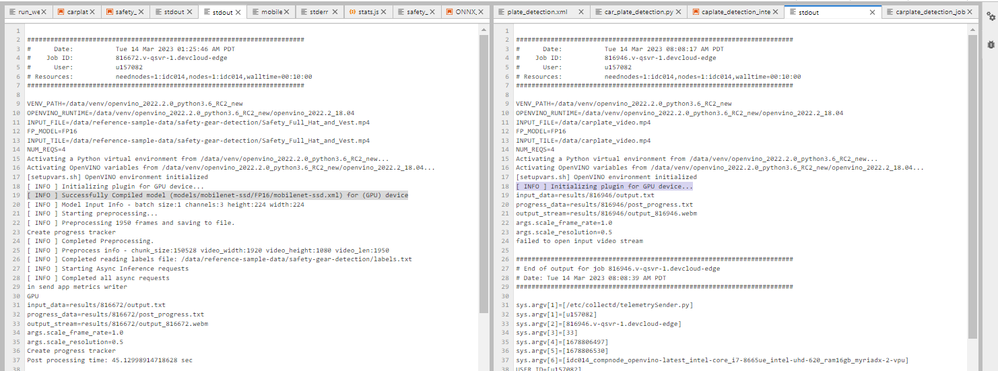

As a result, my model fails to compile, as shown in the figure below (left: sample in DevCloud, right: own project):

Is this difference the reason my own .xml file fails to execute?

Thanks

Hi Wen_Jie,

Thanks for reaching out. I don't believe the issue is related to your model; I think the sample failed to open the video file. The sample runs inference on a file located at /data/reference-sample-data/safety-gear-detection/Safety_Full_Hat_and_Vest.mp4. However, in your screenshot it is trying to open /data/carplate_video.mp4. Can you confirm the file is in the correct location?
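A quick way to rule out a path problem before looking at the model is to check the file from Python. This is only a minimal sketch using the standard library; `describe_video_path` is a hypothetical helper name, not part of the sample, and a file that passes these checks can still fail to decode.

```python
import os


def describe_video_path(path):
    """Return a short diagnosis of why a video path may fail to open."""
    if not os.path.exists(path):
        return "missing: " + path
    if not os.path.isfile(path):
        return "not a regular file: " + path
    if not os.access(path, os.R_OK):
        return "not readable: " + path
    if os.path.getsize(path) == 0:
        return "empty file: " + path
    return "ok: " + path


if __name__ == "__main__":
    # Path taken from the screenshot discussed above.
    print(describe_video_path("/data/carplate_video.mp4"))
```

If the result is "ok" and the sample still cannot read frames, the next thing to check would be whether OpenCV can decode the file, for example with `cv2.VideoCapture(path).isOpened()`.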

Would it be possible for you to share your model and video input for me to test on my end?

Regards,

Jesus

Hi JesusE_Intel

Yes, sure. How should I share the files?

Thanks

Hi Wen_Jie,

I sent you a private note to your email. Please check your inbox and let me know if you don't receive it.

Regards,

Jesus

Hi Jesus

I have replied to the email you sent.

Did you receive the files?

Regards

Wen Jie

Hi Wen Jie,

Thank you for sending the model and video file over! I was able to run inference with the model and obtain valid detections. I noticed the model you shared is based on the yolo-v4-tiny architecture. We have a yolo-v4-tiny sample on Intel Developer Cloud for the Edge that requires minimal changes to run your model and video.

The sample can be found here: Tiny YOLO* V4 Object Detection
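On the earlier question about the two .xml files looking different: files generated from different architectures (mobilenet-ssd versus yolo-v4-tiny) will naturally list different layers, so a difference alone does not indicate a broken model. A rough sketch for summarizing an IR .xml is below. It assumes the usual IR layout of a `<net>` root element with `name` and `version` attributes and `<layer>` elements carrying a `type` attribute; `summarize_ir` and the embedded example document are illustrative only, not taken from your files.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def summarize_ir(xml_text):
    """Summarize an OpenVINO IR .xml document given as a string."""
    root = ET.fromstring(xml_text)  # root element is expected to be <net>
    layer_types = Counter(
        layer.get("type", "?") for layer in root.iter("layer")
    )
    return {
        "name": root.get("name"),
        "ir_version": root.get("version"),
        "layer_types": dict(layer_types),
    }


# Tiny hand-written stand-in for a real IR file (illustrative values only).
EXAMPLE_IR = """<net name="plate_detection" version="10">
  <layers>
    <layer id="0" name="input" type="Parameter" version="opset1"/>
    <layer id="1" name="conv1" type="Convolution" version="opset1"/>
    <layer id="2" name="conv2" type="Convolution" version="opset1"/>
  </layers>
</net>"""

if __name__ == "__main__":
    print(summarize_ir(EXAMPLE_IR))
```

For a file on disk, the same summary can be built from `ET.parse(path).getroot()`. Comparing the `ir_version` values and layer types of the two files side by side is usually more informative than comparing the raw text.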

To run with your files:

I uploaded your model (.bin/.xml, not .mapping) and video file to the Reference-samples/iot-devcloud/openvino-dev-latest/developer-samples/python/tiny-yolo-v4-python directory.
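As a small sanity check before uploading, the following sketch lists the two files that make up the model and reports any that are missing. It assumes the weights file sits next to the .xml with the same base name, as with the plate_detection files in this thread; `ir_files_to_upload` is a hypothetical helper name.

```python
from pathlib import Path


def ir_files_to_upload(xml_path):
    """Return (files, missing) for an IR model identified by its .xml path."""
    xml = Path(xml_path)
    weights = xml.with_suffix(".bin")  # weights assumed to share the .xml stem
    files = [xml, weights]
    missing = [p for p in files if not p.is_file()]
    return files, missing


if __name__ == "__main__":
    files, missing = ir_files_to_upload("plate_detection.xml")
    print("upload:", [p.name for p in files])
    print("missing:", [p.name for p in missing])
```

The .mapping file is deliberately left out of the list, matching the note above that it was not uploaded.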

I changed the following in 1.5.4.1. Configure input:

    # Set the path to the input video to use for the rest of this sample
    #InputVideo = "classroom.mp4"
    InputVideo = "car.mp4"

I also changed the following in 1.6.0.1. Create the job file:

    # Set inference model IR files using specified precision
    #MODELPATH=models/tinyyolov4/${FP_MODEL}/yolov4-tiny.xml
    MODELPATH=plate_detection.xml

    # Run the Tiny YOLO V4 object detection code
    python3 object_detection_demo_yolov4_async.py -m $MODELPATH \
                                                  -i $INPUT_FILE \
                                                  -o $OUTPUT_FILE \
                                                  -d $DEVICE \
                                                  -t $THRESHOLD \
                                                  -nireq $NUMREQUEST \
                                                  -nstreams $NUMSTREAMS \
                                                  -no_show #\
                                                  #--labels $LABELS

Please note that in the python3 command, I only commented out the --labels argument and the line-continuation backslash (\) at the end of the previous line.
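If editing line continuations in the job script feels error-prone, the same command can be assembled as an argument list, where leaving out the labels is just a skipped branch. This is a sketch only: the flags are the ones shown in the job file above, while `build_demo_command` and the example values (device, threshold, request and stream counts, output path) are hypothetical placeholders.

```python
import shlex


def build_demo_command(model, input_file, output_file, device,
                       threshold, nireq, nstreams, labels=None):
    """Build the demo command line; --labels is added only when given."""
    cmd = [
        "python3", "object_detection_demo_yolov4_async.py",
        "-m", model,
        "-i", input_file,
        "-o", output_file,
        "-d", device,
        "-t", str(threshold),
        "-nireq", str(nireq),
        "-nstreams", str(nstreams),
        "-no_show",
    ]
    if labels is not None:
        cmd += ["--labels", labels]
    return cmd


if __name__ == "__main__":
    # Placeholder values for illustration; use the ones from your job file.
    cmd = build_demo_command("plate_detection.xml", "car.mp4", "results/",
                             "CPU", 0.5, 2, 1)
    print(shlex.join(cmd))
```

The resulting list can be passed directly to `subprocess.run(cmd, check=True)` without any shell quoting.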

Could you give that a try and let me know if it works for you?

Regards,

Jesus

Hi Jesus

I tried the method you suggested and it worked.

Thank you very much.

Regards,

Wen Jie

Great! If you need any additional information, please submit a new question as this thread will no longer be monitored.
