Hello everyone!

I'm having an issue: every time I run long-running Linux commands like

- dd if=/dev/urandom of=bigfile.txt bs=1048576 count=500;

- compressing or decompressing large files using tar

- split command (I want to split a large compressed file and upload as chunks to drive, my script works but I get disconnected)

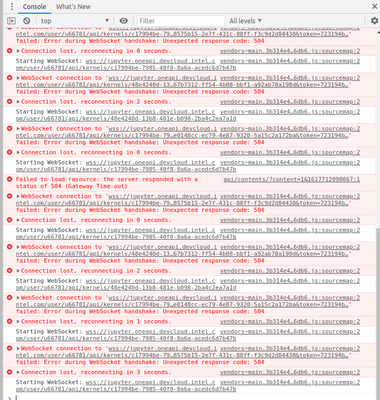

I get disconnected from SSH or Jupyter, and the session hangs for so long that I have to forcibly close the job using qdel (504 server error).
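For reference, the split-then-reassemble step mentioned above can be sketched roughly as follows. This is only an illustration, not the poster's actual script: the file names, the temporary directory, and the chunk size are all assumptions, and a 1 MiB stand-in file is used so the check runs quickly.

```shell
set -eu

# Hypothetical working directory and file names, for illustration only.
workdir=$(mktemp -d)

# Stand-in for the large compressed file (1 MiB so the demo is quick).
dd if=/dev/urandom of="$workdir/archive.tar.gz" bs=1024 count=1024 2>/dev/null

# Split into 256 KiB chunks named archive.part.aa, archive.part.ab, ...
split -b 262144 "$workdir/archive.tar.gz" "$workdir/archive.part."

# Count the chunks that would be uploaded one by one.
chunk_count=$(ls "$workdir"/archive.part.* | wc -l)

# Reassemble in glob (alphabetical) order and verify nothing was lost.
cat "$workdir"/archive.part.* > "$workdir/restored.tar.gz"
cmp "$workdir/archive.tar.gz" "$workdir/restored.tar.gz"
```

Verifying the reassembled file with cmp before deleting the original is a cheap way to confirm the chunks are complete if a session drops partway through.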

Thanks,

Hi,

Thanks for posting in Intel forums.

Could you share a reproducer, that is, the steps and commands you followed?

Regards,

Janani Chandran

Thanks for replying,

Here is a very simple example that reproduces this.

Run this command to generate about 5 GB of random data over SSH or in a Jupyter cell (or any long-running Linux command, such as file compression or splitting):

dd if=/dev/urandom of=bigfile.txt bs=1048576 count=5000

Jupyter disconnects within a few seconds if I run this command, and the SSH session hangs as well.

Refreshing the Jupyter tab doesn't work either; it hangs for a long time, so I have to qdel the Jupyter job from SSH.

It's worth mentioning that these commands were working last month. Maybe something changed?

regards,

Hi,

We will look into this internally and let you know the updates.

Regards,

Janani Chandran

Hi,

Sorry for the delay.

We have yet to receive an update from the admin team. We will let you know once we get an update.

Regards,

Janani Chandran

Hi,

Sorry for the delay.

To answer your questions:

2) Compressing or decompressing large files using tar: This may be due to limitations of the DevCloud, such as the disk quota. It can also happen if you run the command on the login node. The login node is not meant for any kind of work, and we can't guarantee that a particular scenario won't fail there.

3) This is possibly a combination of disk space and/or limits on download/upload speeds and durations. To prevent performance degradation, we limit upload/download bandwidth and duration.

We suggest using rsync like this:

$ rsync -aP source_folder/* destination_folder/

rsync will let you resume a transfer from where it failed.
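Because a resumed transfer simply means running the same rsync command again, the re-run can be wrapped in a small retry loop. The sketch below is one possible way to do that; the `retry` helper, the attempt count, and the delay are assumptions for illustration, not something DevCloud provides.

```shell
# retry MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Hypothetical helper: re-runs COMMAND until it succeeds or the
# attempt limit is reached.
retry() {
  local max_attempts=$1
  local delay=$2
  local attempt=1
  shift 2
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    echo "attempt $attempt failed; retrying in ${delay}s" >&2
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Example with the rsync command above (up to 5 attempts, 30 s apart):
#   retry 5 30 rsync -aP source_folder/* destination_folder/
```

The -P option keeps partially transferred files, so each retry can continue from what was already copied rather than starting over.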

Regarding your first question, we will check and let you know.
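On point 2, one way to keep a long tar run off the login node is to put it in a job script and hand it to the scheduler. The sketch below only writes such a script; the script name, archive name, and directory name are placeholders, and the exact qsub options for a given queue are not covered here.

```shell
# Write a small job script; all file and directory names are placeholders.
cat > compress_job.sh <<'EOF'
#!/bin/bash
# Run from the directory the job was submitted from
# (falls back to the current directory outside the scheduler).
cd "${PBS_O_WORKDIR:-.}"
tar -czf archive.tar.gz data_dir/
EOF
chmod +x compress_job.sh

# Submit it to the scheduler instead of running it on the login node:
#   qsub compress_job.sh
# If needed, cancel it with the job ID that qsub prints:
#   qdel <job_id>
```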

Regards,

Janani Chandran

Hi,

Sorry for the delay.

Regarding your first question about running the dd if=/dev/urandom of=bigfile.txt bs=1048576 count=500; command over SSH or in Jupyter: you will not be able to use this command in Jupyter, so as a workaround you can run it over SSH.

Try this and let us know.

Regards,

Janani Chandran

Hi,

Is your issue resolved? Do you have any update?

Regards,

Janani Chandran

Thanks a lot for the great effort.

Hi,

Thanks for accepting our solution. If you need any additional information, please submit a new question as this thread will no longer be monitored.

Regards,

Janani Chandran
