
Hello,

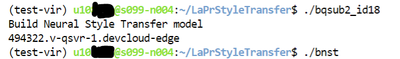

I run a Python program from my DevCloudEdge terminal that performs neural style transfer training and inference and returns the styled image. I submit the Python script to run on a node with this command:

```bash
qsub runMyst2.sh -l nodes=1:idc018 -F "results/INT8 CPU async INT8"
```

runMyst2.sh:

```bash
VENV_PATH=". ./test-vir"

echo "Activating a Python virtual environment from ${VENV_PATH}..."

source ${VENV_PATH}/bin/activate

python3 myst2.py
```

At the end of myst2.py, I use matplotlib to save the styled image to my home directory:

```python
plt.imsave('styled_image_starrynight.jpeg', scale_img(final_img))
```

When I submit myst2.py to the Edge Compute Nodes queue, the job runs, but the styled image never appears in my home directory. How can I get the result data/image back from a node job?

Thanks


Hi Pihx,

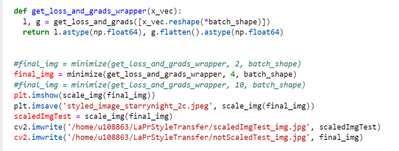

Thank you for contacting us. Based on the issue you are facing, I suggest using cv2.imwrite() instead, since it lets you specify the path where the file is saved.

For example:

```python
import cv2

cv2.imwrite('/home/u70000/Test_gray.jpg', image_gray)
```
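A relative file name is resolved against the process's current working directory, which on a compute node may not be the directory you expect. A minimal sketch that builds an absolute path under the home directory instead of hard-coding `/home/u70000`; the helper name `home_output_path` and the `results` subfolder are hypothetical choices, not part of DevCloud:

```python
import os


def home_output_path(filename, subdir="results"):
    """Return an absolute path for `filename` inside ~/<subdir>.

    `subdir` is a hypothetical folder name; it is created if missing so
    the image writer does not fail on a nonexistent directory.
    """
    out_dir = os.path.join(os.path.expanduser("~"), subdir)
    os.makedirs(out_dir, exist_ok=True)  # no error if it already exists
    return os.path.join(out_dir, filename)
```

For example, `cv2.imwrite(home_output_path('Test_gray.jpg'), image_gray)`. `cv2.imwrite` typically returns `False` when it cannot write the file, so checking that value is a cheap sanity test inside a batch job.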

Hope this information helps.

Thank you


Hi Hari,

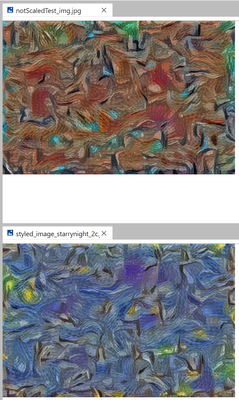

Thank you for your suggestion. It works when I run my Python script from a local terminal (python myst2.py); it just changes the colors a bit (see attachment).
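The color shift is most likely a channel-order issue: OpenCV's `cv2.imwrite` treats a 3-channel array as BGR, while matplotlib expects RGB, so red and blue get swapped. A minimal NumPy sketch of the conversion, assuming `scale_img(final_img)` returns an RGB array that is float in [0, 1] or integer in [0, 255]; `to_bgr_uint8` is a hypothetical helper:

```python
import numpy as np


def to_bgr_uint8(rgb):
    """Convert an (H, W, 3) RGB image to the BGR uint8 layout cv2.imwrite expects.

    Assumption: float input is scaled to [0, 1]; integer input is already 0-255.
    """
    img = np.asarray(rgb)
    if np.issubdtype(img.dtype, np.floating):
        # Map [0, 1] floats to 0-255 and round to the nearest integer.
        img = np.round(np.clip(img, 0.0, 1.0) * 255.0)
    img = img.astype(np.uint8)
    # Reverse the last axis (RGB -> BGR); make it contiguous for OpenCV.
    return np.ascontiguousarray(img[..., ::-1])
```

Usage: `cv2.imwrite(path, to_bgr_uint8(scale_img(final_img)))`. If the array is already `uint8`, `cv2.cvtColor(img, cv2.COLOR_RGB2BGR)` performs the same channel swap.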

The tail of myst2.py

But the same code doesn't work when I submit the script to a compute node.

It looks to me like it ran into an error but handled it and finished the entire script.

How can I debug the execution to find out why it doesn't write the styled image to my folder?
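One low-tech way to see what a batch job actually did is to print diagnostics that end up in the job's output file: which node and directory it ran in, which Python interpreter it used, and whether the image file really exists after saving. A sketch with hypothetical helper names:

```python
import os
import socket
import sys


def log(msg):
    # flush=True so each line reaches the job's stdout file promptly.
    print(f"[{socket.gethostname()}] {msg}", flush=True)


def report_environment():
    """Print where the job runs and which interpreter it uses."""
    log(f"cwd={os.getcwd()}")
    log(f"python={sys.executable}")
    log(f"HOME={os.environ.get('HOME', '<unset>')}")


def check_saved(path):
    """Log whether `path` exists and is non-empty; return True if so."""
    full = os.path.abspath(path)
    ok = os.path.isfile(full) and os.path.getsize(full) > 0
    log(f"{'saved' if ok else 'MISSING'}: {full}")
    return ok
```

Call `report_environment()` at the top of myst2.py and `check_saved('styled_image_starrynight.jpeg')` right after `plt.imsave(...)`. If the job ran in an unexpected directory or with a Python that lacks the virtual environment's packages, these lines make it visible.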

How can I see which stage a submitted job is in (still queued, executing, or finished)? Is there a log file I can check?
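On Torque/PBS-based clusters (assumed here for DevCloud for the Edge), `qstat` lists your jobs with a state column (Q = queued, R = running, C = completed), and each job's stdout and stderr are written to `<job name>.o<job id>` and `<job name>.e<job id>`, by default in the directory where you ran `qsub`; the job name defaults to the script name. The sketch below, with hypothetical helpers, picks the newest pair of those files and returns their last lines:

```python
import glob
import os


def latest_job_logs(job_name, directory="."):
    """Return (stdout_path, stderr_path) for the newest run of job_name.

    Assumes the default <job_name>.o<id> / <job_name>.e<id> naming;
    either element is None when no matching file exists.
    """
    def newest(pattern):
        matches = glob.glob(os.path.join(directory, pattern))
        return max(matches, key=os.path.getmtime) if matches else None

    return newest(f"{job_name}.o*"), newest(f"{job_name}.e*")


def tail(path, n=20):
    """Return the last n lines of a text file (empty list if path is None)."""
    if path is None:
        return []
    with open(path, errors="replace") as fh:
        return fh.read().splitlines()[-n:]
```

Usage: `out, err = latest_job_logs("runMyst2.sh")`, then `print("\n".join(tail(err)))` to see the end of the error log, where a Python traceback would appear.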

Appreciate your suggestions!


Hi Pihx,

Sorry for the delay in getting back to you about the error message you received.

From the issue you describe, it seems the job did not complete and produced an error instead. I suggest testing only the Python file (myst2.py) without submitting it to the job queue.

Run `!python myst2.py <argument>` in a Jupyter notebook cell, or `python myst2.py <argument>` in the terminal.
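`qsub ... -F "results/INT8 CPU async INT8"` passes those words to runMyst2.sh as `$1`, `$2`, and so on; they reach Python only if the job script forwards them (for example `python3 myst2.py "$1"`). A sketch of how myst2.py could accept an output directory so the same command works both in the terminal and under `qsub`; the argument names are hypothetical and not taken from the original script:

```python
import argparse
import os


def parse_args(argv=None):
    # Hypothetical interface: the original myst2.py may take no arguments.
    parser = argparse.ArgumentParser(description="Neural style transfer (sketch)")
    parser.add_argument("output_dir", nargs="?", default=".",
                        help="directory for the styled image (default: cwd)")
    parser.add_argument("--name", default="styled_image_starrynight.jpeg",
                        help="output file name")
    return parser.parse_args(argv)


def output_path(args):
    """Absolute path for the result, creating output_dir if needed."""
    out_dir = os.path.abspath(os.path.expanduser(args.output_dir))
    os.makedirs(out_dir, exist_ok=True)
    return os.path.join(out_dir, args.name)
```

Usage: `python myst2.py results/INT8`, and inside the script `plt.imsave(output_path(parse_args()), scale_img(final_img))`.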

From there we can debug the issue in the python code.

Thank you


Hi Hari,

Thanks for your advice.

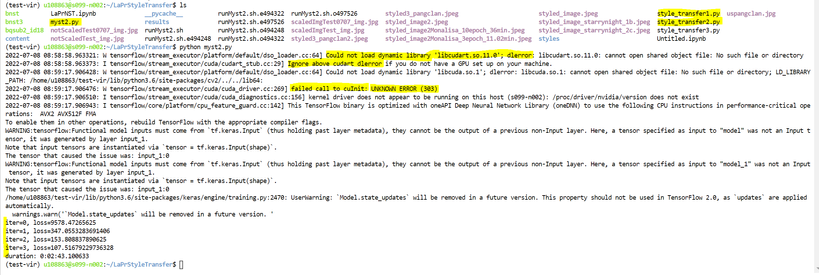

I am able to run `python myst2.py` in my DevCloud terminal, despite some CUDA warnings/errors, which were ignored.

The script ran, trained, and returned the styled image.

I got the code from a Lazy Programmer Udemy course; myst2.py is a slightly modified version of style_transfer2.py (see attachment).

Is pdb the best way to debug a Python app in my DevCloudEdge terminal?


myst2.py:

style_transfer1.py:


bqsub2_id18 script:

```bash
VENV_PATH=". ./test-vir"

echo "Activating a Python virtual environment from ${VENV_PATH}..."

source ${VENV_PATH}/bin/activate

python myst2.py
```


Hi Pihx,

Based on the error message you received and the code you shared, it seems that an additional library is required, and Intel® DevCloud for the Edge does not provide superuser access to install it.

So Intel® DevCloud for the Edge might not be suitable for your application, since it requires an additional library. For your information, Intel® DevCloud for the Edge is designed to simulate and test the performance of AI models on Intel processors and devices; it does not fully support model training.

My suggestion: after you have trained and generated the model, port it to OpenVINO and then test it on Intel® DevCloud for the Edge.

Regarding the pdb debugging tool: yes, Jupyter Notebook supports pdb, or you can use the debugger available in the UI.
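pdb is interactive, so it helps only when a terminal is attached, as in a local `python -m pdb myst2.py` run or a `breakpoint()` call. In a queued job nothing can answer the debugger prompt, so the useful part there is getting the full traceback into the job's error file. A minimal sketch under that assumption; `run_with_debug` is a hypothetical wrapper, not a pdb API:

```python
import pdb
import sys
import traceback


def run_with_debug(main, interactive=None):
    """Run main(); on an uncaught exception, print the traceback to stderr.

    In a batch job stderr ends up in the job's .e<id> file. pdb is opened
    at the failing frame only when a terminal is attached (or
    interactive=True). Returns an exit code: 0 on success, 1 on failure.
    """
    if interactive is None:
        interactive = sys.stdin.isatty()
    try:
        main()
        return 0
    except Exception:
        traceback.print_exc()  # full traceback to stderr
        sys.stderr.flush()
        if interactive:
            pdb.post_mortem(sys.exc_info()[2])
        return 1
```

Usage: move the body of myst2.py into a `main()` function and end the file with `sys.exit(run_with_debug(main))`.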

Hope this information helps.

Thank you


Hi Pihx,

This thread will no longer be monitored since we have provided a solution. Please submit a new question if you need any additional information from Intel.

Thank you
