
How do I get JupyterLab extensions to work with Jupyter? I need to install an extension, which I've done, but when I run the following command:

$ jupyter lab build --dev-build=False --minimize=False --no-minimize --log-level=ERROR

I get this error. Here is the error log:

Build failed.

Troubleshooting: If the build failed due to an out-of-memory error, you may be able to fix it by disabling the `dev_build` and/or `minimize` options.

If you are building via the `jupyter lab build` command, you can disable these options like so:

jupyter lab build --dev-build=False --minimize=False

You can also disable these options for all JupyterLab builds by adding these lines to a Jupyter config file named `jupyter_config.py`:

c.LabBuildApp.minimize = False

c.LabBuildApp.dev_build = False

If you don't already have a `jupyter_config.py` file, you can create one by adding a blank file of that name to any of the Jupyter config directories. The config directories can be listed by running:

jupyter --paths

Explanation:

- `dev-build`: This option controls whether a `dev` or a more streamlined `production` build is used. This option will default to `False` (i.e., the `production` build) for most users. However, if you have any labextensions installed from local files, this option will instead default to `True`. Explicitly setting `dev-build` to `False` will ensure that the `production` build is used in all circumstances.

- `minimize`: This option controls whether your JS bundle is minified during the Webpack build, which helps to improve JupyterLab's overall performance. However, the minifier plugin used by Webpack is very memory intensive, so turning it off may help the build finish successfully in low-memory environments.

An error occurred.

RuntimeError: JupyterLab failed to build
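To apply the two config lines from the message above permanently, here is a minimal Python sketch that appends them to `jupyter_config.py` in a directory you pick from the `config:` section of `jupyter --paths`. The helper name `write_lab_build_config` is hypothetical. These options only lower the build's memory use; as the later replies show, they were not enough on their own on the DevCloud login node.

```python
from pathlib import Path

# The two settings suggested by the JupyterLab build error message.
LAB_BUILD_SETTINGS = (
    "c.LabBuildApp.minimize = False",
    "c.LabBuildApp.dev_build = False",
)


def write_lab_build_config(config_dir):
    """Append the low-memory build settings to <config_dir>/jupyter_config.py.

    Existing content is preserved and settings already present are not repeated.
    Returns the path of the config file.
    """
    config_dir = Path(config_dir)
    config_dir.mkdir(parents=True, exist_ok=True)
    config_file = config_dir / "jupyter_config.py"

    text = config_file.read_text() if config_file.exists() else ""
    existing = {line.strip() for line in text.splitlines()}
    missing = [line for line in LAB_BUILD_SETTINGS if line not in existing]

    if missing:
        # Start on a new line if the file does not already end with one.
        separator = "\n" if text and not text.endswith("\n") else ""
        with config_file.open("a") as fh:
            fh.write(separator + "\n".join(missing) + "\n")
    return config_file
```

For example, `write_lab_build_config(Path.home() / ".jupyter")` targets the usual per-user config directory. Check that it appears in your own `jupyter --paths` output before relying on it. Appending instead of overwriting keeps any settings you already have.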


Hi Aswin,

We were able to reproduce your issue and resolve it.

The error occurs because you are running the commands on the login node. It can be resolved by running them on a compute node instead.

Intel® DevCloud has two kinds of nodes: a login node and compute nodes.

Difference between the login node and a compute node

------------------------------------------------

The login node uses a lightweight general-purpose processor. A compute node uses an Intel® Xeon® Gold 6128 processor that can handle heavy workloads.

All tasks that need extensive memory and compute resources must be run on a compute node, not on the login node.

If you run a heavy task on the login node, it fails with a memory error.
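The replies do not say how the login node restricts memory. One mechanism worth ruling out is a per-process virtual-memory cap (`RLIMIT_AS`, the value `ulimit -v` shows). The Python sketch below reports it; the helper names are hypothetical. Run it on the login node and again inside a compute-node session to compare. If both report `unlimited`, the restriction is enforced some other way (for example through cgroups), which this sketch does not check.

```python
import resource


def address_space_limit():
    """Soft RLIMIT_AS limit (what `ulimit -v` shows, but in bytes), or None if unlimited."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_AS)
    return None if soft == resource.RLIM_INFINITY else soft


def format_limit(limit_bytes):
    """Human-readable GiB value for a byte limit; None means no limit is set."""
    if limit_bytes is None:
        return "unlimited"
    return f"{limit_bytes / 1024**3:.1f} GiB"
```

Usage: `print("virtual memory limit:", format_limit(address_space_limit()))`.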

How to check whether you are on the login node or a compute node

---------------------------------------------------------

Only a compute node's shell prompt contains “n0xx”:

- If there is no “n0xx” in the prompt, you are on the login node.
- If the prompt shows “n0xx” after c009, you are on a compute node.
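For scripts, the same rule can be checked programmatically. This is a minimal Python sketch under two assumptions: compute-node hostnames contain the "n0" plus two digits seen in the prompt (for example a hypothetical `c009-n012`), and DevCloud's `qsub` is a PBS-style scheduler, which sets `PBS_JOBID` inside every job, including interactive `qsub -I` sessions. The function name `on_compute_node` is hypothetical.

```python
import os
import re
import socket

# Assumption: compute-node hostnames contain "n0" followed by two digits,
# matching the "n0xx" shown in the shell prompt on DevCloud compute nodes.
COMPUTE_HOST_PATTERN = re.compile(r"\bn0\d{2}\b")


def on_compute_node(hostname=None, environ=None):
    """Best-effort guess whether the current shell runs on a compute node."""
    environ = os.environ if environ is None else environ
    # PBS-style schedulers set PBS_JOBID inside a job (batch or `qsub -I`).
    if environ.get("PBS_JOBID"):
        return True
    hostname = socket.gethostname() if hostname is None else hostname
    return bool(COMPUTE_HOST_PATTERN.search(hostname))
```

A build script could call `on_compute_node()` first and refuse to start `jupyter lab build` when it returns `False`.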

How to run on a compute node

-------------------------

When you first connect, you are on the DevCloud login node. From there, you need to move to a compute node.

To enter a compute node, use the following command:

qsub -I

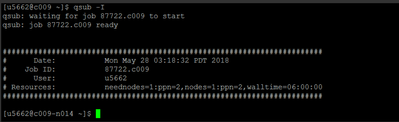

This creates a new job and gives a terminal from the compute node allocated for this job. See the following screenshot:

From here, you can run the same commands you used before.
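`qsub -I` gives an interactive session. If you would rather not keep a terminal open for the whole build, a batch job is an alternative. The Python sketch below uses hypothetical names (`build_job_script`, `submit`, the file `lab_build_job.sh`). It writes a small bash script that activates a conda environment and runs the build, then submits it with `qsub`. It assumes `conda` is on the PATH inside batch jobs and that `qsub` prints the new job ID, as PBS-style schedulers do.

```python
import shlex
import subprocess
from pathlib import Path


def build_job_script(env_name, build_args=("--minimize=False", "--dev-build=False")):
    """Return a bash script that activates a conda env and runs `jupyter lab build`."""
    build_cmd = " ".join(["jupyter", "lab", "build", *(shlex.quote(a) for a in build_args)])
    return "\n".join([
        "#!/bin/bash",
        # Make `conda activate` usable in a non-interactive shell.
        'source "$(conda info --base)/etc/profile.d/conda.sh"',
        f"conda activate {shlex.quote(env_name)}",
        build_cmd,
        "",
    ])


def submit(script_text, path="lab_build_job.sh"):
    """Write the job script and submit it with qsub; returns what qsub prints (the job ID)."""
    Path(path).write_text(script_text)
    result = subprocess.run(["qsub", path], capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

For example, `print(submit(build_job_script("studyenv")))` submits a build for the environment named in the log below. You can then follow the job with `qstat`.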

Hope this resolves your issue. If the issue persists, please share the detailed steps you followed, along with a screenshot of the error.

Thanks.


Hi Aswin,

Thank you for posting in Intel Communities. We are looking into this from our side. Meanwhile, could you let us know which DevCloud (oneAPI DevCloud or Edge DevCloud) you are using?

Thanks


Hi Rahila,

I tried the oneAPI kernel and I'm able to reproduce the out-of-memory error. The kernel I used was created from a conda environment that I set up in my home folder after connecting to DevCloud over SSH.

I'm using the oneAPI DevCloud. Would this memory limit also be a problem for training neural networks? That requires a fair amount of memory, right?

Thanks.

Aswin Vijayakumar


Hi Aswin,

Thanks for your quick reply.

In most cases, the memory error is caused by running compute-intensive tasks on the login node. All tasks that need extensive memory and compute resources must be run on a compute node, not on the login node.

- Command to log in to a compute node:

qsub -I

Could you please share the steps you followed?

If you are looking for a specific extension, let us know its name so we can install it globally.

Thanks


Hi Rahila,

Thanks for asking.

I tried to install Elyra Workflow.

1. First, on the DevCloud (over SSH), I ran:

pip install --upgrade elyra[all]

2. This ended with a "Killed" message. I think that happened because the installation requires rebuilding JupyterLab afterwards with:

jupyter lab build

So I installed the extensions individually instead (source: the Installation page of the Elyra 3.2.2 documentation):

pip3 install --upgrade elyra-pipeline-editor-extension

pip3 install --upgrade elyra-code-snippet-extension

pip3 install --upgrade elyra-python-editor-extension

pip3 install --upgrade elyra-r-editor-extension

2.1. I installed Node.js with:

conda install nodejs

3. I ran the JupyterLab build:

jupyter lab build --minimize=False --no-minimize --dev-build=False --log-level=DEBUG

I have since removed that environment because it had become inconsistent. However, the debug output showed "Allocation failed - JavaScript heap out of memory". Here is the full log:

[LabBuildApp] Building in /home/u93525/.conda/envs/studyenv/share/jupyter/lab

[LabBuildApp] Node v16.6.1

[LabBuildApp] Yarn configuration loaded.

[LabBuildApp] Building jupyterlab assets (production, not minimized)

[LabBuildApp] > node /home/u93525/.conda/envs/studyenv/lib/python3.9/site-packages/jupyterlab/staging/yarn.js install --non-interactive

[LabBuildApp] yarn install v1.21.1

[1/5] Validating package.json...

[2/5] Resolving packages...

[3/5] Fetching packages...

info fsevents@2.3.2: The platform "linux" is incompatible with this module.

info "fsevents@2.3.2" is an optional dependency and failed compatibility check. Excluding it from installation.

[4/5] Linking dependencies...

warning "@jupyterlab/extensionmanager > react-paginate@6.5.0" has incorrect peer dependency "react@^16.0.0".

warning "@jupyterlab/json-extension > react-highlighter@0.4.3" has incorrect peer dependency "react@^0.14.0 || ^15.0.0 || ^16.0.0".

warning "@jupyterlab/json-extension > react-json-tree@0.15.1" has unmet peer dependency "@types/react@^16.3.0 || ^17.0.0".

warning "@jupyterlab/vdom > @nteract/transform-vdom@4.0.16-alpha.0" has incorrect peer dependency "react@^16.3.2".

warning " > @lumino/coreutils@1.11.1" has unmet peer dependency "crypto@1.0.1".

warning "@jupyterlab/builder > @jupyterlab/buildutils > verdaccio > clipanion@3.1.0" has unmet peer dependency "typanion@*".

warning Workspaces can only be enabled in private projects.

warning Workspaces can only be enabled in private projects.

<--- Last few GCs --->

[6804:0x56324de09460] 6606 ms: Scavenge 56.6 (77.3) -> 48.2 (80.0) MB, 8.9 / 0.0 ms (average mu = 0.910, current mu = 0.905) task

[6804:0x56324de09460] 6883 ms: Scavenge 59.6 (80.0) -> 54.2 (82.5) MB, 10.8 / 0.0 ms (average mu = 0.910, current mu = 0.905) task

[6804:0x56324de09460] 6987 ms: Scavenge 63.2 (82.5) -> 58.9 (88.0) MB, 13.7 / 0.0 ms (average mu = 0.910, current mu = 0.905) task

<--- JS stacktrace --->

FATAL ERROR: NewSpace::Rebalance Allocation failed - JavaScript heap out of memory

1: 0x7fb88fc0c3b9 node::Abort() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

2: 0x7fb88fc0ce9d node::OnFatalError(char const*, char const*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

3: 0x7fb88ffca5d2 v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, bool) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

4: 0x7fb88ffca88b v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, bool) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

5: 0x7fb89018acd6 [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

6: 0x7fb8901d9387 [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

7: 0x7fb8901dcb2b v8::internal::MarkCompactCollector::CollectGarbage() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

8: 0x7fb89019f0cf v8::internal::Heap::MarkCompact() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

9: 0x7fb89019fa19 v8::internal::Heap::PerformGarbageCollection(v8::internal::GarbageCollector, v8::GCCallbackFlags) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

10: 0x7fb8901a01b8 v8::internal::Heap::CollectGarbage(v8::internal::AllocationSpace, v8::internal::GarbageCollectionReason, v8::GCCallbackFlags) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

11: 0x7fb8901a0dbd v8::internal::Heap::CollectAllGarbage(int, v8::internal::GarbageCollectionReason, v8::GCCallbackFlags) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

12: 0x7fb8901a40a0 v8::internal::IncrementalMarkingJob::Task::Step(v8::internal::Heap*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

13: 0x7fb8901a41bc v8::internal::IncrementalMarkingJob::Task::RunInternal() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

14: 0x7fb8900a5b9e [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

15: 0x7fb88fc810b2 node::PerIsolatePlatformData::RunForegroundTask(std::unique_ptr<v8::Task, std::default_delete<v8::Task> >) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

16: 0x7fb88fc83d38 node::PerIsolatePlatformData::FlushForegroundTasksInternal() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

17: 0x7fb891cc8723 [/home/u93525/.conda/envs/studyenv/bin/../lib/./libuv.so.1]

18: 0x7fb891cd850f uv__io_poll [/home/u93525/.conda/envs/studyenv/bin/../lib/./libuv.so.1]

19: 0x7fb891cc8e22 uv_run [/home/u93525/.conda/envs/studyenv/bin/../lib/./libuv.so.1]

20: 0x7fb88fb47776 node::SpinEventLoop(node::Environment*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

21: 0x7fb88fc546f5 node::NodeMainInstance::Run(int*, node::Environment*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

22: 0x7fb88fc54b56 node::NodeMainInstance::Run(node::EnvSerializeInfo const*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

23: 0x7fb88fbd0333 node::Start(int, char**) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

24: 0x56324ca28105 main [/home/u93525/.conda/envs/studyenv/bin/node]

25: 0x7fb88edfab97 __libc_start_main [/lib/x86_64-linux-gnu/libc.so.6]

26: 0x56324ca2814f [/home/u93525/.conda/envs/studyenv/bin/node]

[LabBuildApp] > node /home/u93525/.conda/envs/studyenv/lib/python3.9/site-packages/jupyterlab/staging/yarn.js yarn-deduplicate -s fewer --fail

[LabBuildApp] yarn run v1.21.1

$ /home/u93525/.conda/envs/studyenv/share/jupyter/lab/staging/node_modules/.bin/yarn-deduplicate -s fewer --fail

/bin/sh: 1: /home/u93525/.conda/envs/studyenv/share/jupyter/lab/staging/node_modules/.bin/yarn-deduplicate: Permission denied

error Command failed with exit code 126.

info Visit https://yarnpkg.com/en/docs/cli/run for documentation about this command.

[LabBuildApp] > node /home/u93525/.conda/envs/studyenv/lib/python3.9/site-packages/jupyterlab/staging/yarn.js

[LabBuildApp] yarn install v1.21.1

[1/5] Validating package.json...

[2/5] Resolving packages...

[3/5] Fetching packages...

info fsevents@2.3.2: The platform "linux" is incompatible with this module.

info "fsevents@2.3.2" is an optional dependency and failed compatibility check. Excluding it from installation.

[4/5] Linking dependencies...

warning "@jupyterlab/extensionmanager > react-paginate@6.5.0" has incorrect peer dependency "react@^16.0.0".

warning "@jupyterlab/json-extension > react-highlighter@0.4.3" has incorrect peer dependency "react@^0.14.0 || ^15.0.0 || ^16.0.0".

warning "@jupyterlab/json-extension > react-json-tree@0.15.1" has unmet peer dependency "@types/react@^16.3.0 || ^17.0.0".

warning "@jupyterlab/vdom > @nteract/transform-vdom@4.0.16-alpha.0" has incorrect peer dependency "react@^16.3.2".

warning " > @lumino/coreutils@1.11.1" has unmet peer dependency "crypto@1.0.1".

warning "@jupyterlab/builder > @jupyterlab/buildutils > verdaccio > clipanion@3.1.0" has unmet peer dependency "typanion@*".

warning Workspaces can only be enabled in private projects.

warning Workspaces can only be enabled in private projects.

<--- Last few GCs --->

[6849:0x562e52b3c310] 1857 ms: Scavenge 59.5 (81.0) -> 54.7 (85.0) MB, 7.5 / 0.0 ms (average mu = 0.933, current mu = 0.914) task

[6849:0x562e52b3c310] 1904 ms: Scavenge 64.3 (85.3) -> 59.9 (89.8) MB, 10.5 / 0.0 ms (average mu = 0.933, current mu = 0.914) task

[6849:0x562e52b3c310] 2408 ms: Scavenge 68.3 (89.8) -> 63.9 (93.0) MB, 210.9 / 0.0 ms (average mu = 0.933, current mu = 0.914) task

<--- JS stacktrace --->

FATAL ERROR: NewSpace::Rebalance Allocation failed - JavaScript heap out of memory

1: 0x7f102b2ea3b9 node::Abort() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

2: 0x7f102b2eae9d node::OnFatalError(char const*, char const*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

3: 0x7f102b6a85d2 v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, bool) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

4: 0x7f102b6a888b v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, bool) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

5: 0x7f102b868cd6 [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

6: 0x7f102b8b7387 [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

7: 0x7f102b8bab2b v8::internal::MarkCompactCollector::CollectGarbage() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

8: 0x7f102b87d0cf v8::internal::Heap::MarkCompact() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

9: 0x7f102b87da19 v8::internal::Heap::PerformGarbageCollection(v8::internal::GarbageCollector, v8::GCCallbackFlags) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

10: 0x7f102b87e1b8 v8::internal::Heap::CollectGarbage(v8::internal::AllocationSpace, v8::internal::GarbageCollectionReason, v8::GCCallbackFlags) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

11: 0x7f102b87edbd v8::internal::Heap::CollectAllGarbage(int, v8::internal::GarbageCollectionReason, v8::GCCallbackFlags) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

12: 0x7f102b8820a0 v8::internal::IncrementalMarkingJob::Task::Step(v8::internal::Heap*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

13: 0x7f102b8821bc v8::internal::IncrementalMarkingJob::Task::RunInternal() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

14: 0x7f102b783b9e [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

15: 0x7f102b35f0b2 node::PerIsolatePlatformData::RunForegroundTask(std::unique_ptr<v8::Task, std::default_delete<v8::Task> >) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

16: 0x7f102b361d38 node::PerIsolatePlatformData::FlushForegroundTasksInternal() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

17: 0x7f102d3a6723 [/home/u93525/.conda/envs/studyenv/bin/../lib/./libuv.so.1]

18: 0x7f102d3b650f uv__io_poll [/home/u93525/.conda/envs/studyenv/bin/../lib/./libuv.so.1]

19: 0x7f102d3a6e22 uv_run [/home/u93525/.conda/envs/studyenv/bin/../lib/./libuv.so.1]

20: 0x7f102b225776 node::SpinEventLoop(node::Environment*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

21: 0x7f102b3326f5 node::NodeMainInstance::Run(int*, node::Environment*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

22: 0x7f102b332b56 node::NodeMainInstance::Run(node::EnvSerializeInfo const*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

23: 0x7f102b2ae333 node::Start(int, char**) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

24: 0x562e526dc105 main [/home/u93525/.conda/envs/studyenv/bin/node]

25: 0x7f102a4d8b97 __libc_start_main [/lib/x86_64-linux-gnu/libc.so.6]

26: 0x562e526dc14f [/home/u93525/.conda/envs/studyenv/bin/node]

[LabBuildApp] > node /home/u93525/.conda/envs/studyenv/lib/python3.9/site-packages/jupyterlab/staging/yarn.js run build:prod

[LabBuildApp] yarn run v1.21.1

$ webpack --config webpack.prod.config.js

<--- Last few GCs --->

[6879:0x562cab72d420] 2844 ms: Scavenge 69.6 (87.7) -> 58.1 (90.7) MB, 5.5 / 0.0 ms (average mu = 0.960, current mu = 0.960) allocation failure

[6879:0x562cab72d420] 3095 ms: Scavenge 75.5 (93.0) -> 63.6 (94.5) MB, 4.0 / 0.0 ms (average mu = 0.960, current mu = 0.960) allocation failure

[6879:0x562cab72d420] 3442 ms: Scavenge 74.5 (94.7) -> 67.5 (94.7) MB, 185.6 / 0.0 ms (average mu = 0.960, current mu = 0.960) task

<--- JS stacktrace --->

FATAL ERROR: MarkCompactCollector: young object promotion failed Allocation failed - JavaScript heap out of memory

1: 0x7fe6066b43b9 node::Abort() [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

2: 0x7fe6066b4e9d node::OnFatalError(char const*, char const*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

3: 0x7fe606a725d2 v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, bool) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

4: 0x7fe606a7288b v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, bool) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

5: 0x7fe606c32cd6 [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

6: 0x7fe606c67898 [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

7: 0x7fe606c6b73e void v8::internal::LiveObjectVisitor::VisitBlackObjectsNoFail<v8::internal::EvacuateNewSpaceVisitor, v8::internal::MajorNonAtomicMarkingState>(v8::internal::MemoryChunk*, v8::internal::MajorNonAtomicMarkingState*, v8::internal::EvacuateNewSpaceVisitor*, v8::internal::LiveObjectVisitor::IterationMode) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

8: 0x7fe606c818dd v8::internal::FullEvacuator::RawEvacuatePage(v8::internal::MemoryChunk*, long*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

9: 0x7fe606c5c64a v8::internal::Evacuator::EvacuatePage(v8::internal::MemoryChunk*) [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

10: 0x7fe606c5c9af [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

11: 0x7fe6069c0e95 [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

12: 0x7fe60672a543 [/home/u93525/.conda/envs/studyenv/bin/../lib/libnode.so.93]

13: 0x7fe605c796db [/lib/x86_64-linux-gnu/libpthread.so.0]

14: 0x7fe6059a288f clone [/lib/x86_64-linux-gnu/libc.so.6]

Aborted

error Command failed with exit code 134.

info Visit https://yarnpkg.com/en/docs/cli/run for documentation about this command.

[LabBuildApp] JupyterLab failed to build

[LabBuildApp] Traceback (most recent call last):

[LabBuildApp] File "/home/u93525/.conda/envs/studyenv/lib/python3.9/site-packages/jupyterlab/debuglog.py", line 48, in debug_logging

yield

[LabBuildApp] File "/home/u93525/.conda/envs/studyenv/lib/python3.9/site-packages/jupyterlab/labapp.py", line 176, in start

raise e

[LabBuildApp] File "/home/u93525/.conda/envs/studyenv/lib/python3.9/site-packages/jupyterlab/labapp.py", line 172, in start

build(name=self.name, version=self.version,

[LabBuildApp] File "/home/u93525/.conda/envs/studyenv/lib/python3.9/site-packages/jupyterlab/commands.py", line 482, in build

return handler.build(name=name, version=version, static_url=static_url,

[LabBuildApp] File "/home/u93525/.conda/envs/studyenv/lib/python3.9/site-packages/jupyterlab/commands.py", line 697, in build

raise RuntimeError(msg)

[LabBuildApp] RuntimeError: JupyterLab failed to build

[LabBuildApp] Exiting application: JupyterLab
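To pull the relevant signals out of a long build log like the one above, here is a small Python sketch. The helper `summarize_build_log` is hypothetical, and its search strings are copied from this log. On this log it finds three Node.js crashes with "JavaScript heap out of memory" and one "Permission denied" on `yarn-deduplicate` (exit code 126: the command could not be executed). It also finds exit code 134 on the final webpack step, which is 128 + SIGABRT and matches the `Aborted` line. The GC lines show the heap at only about 60 to 90 MB when allocation failed, which suggests a limit imposed by the machine rather than by Node.js itself.

```python
import re
from collections import Counter

OOM_MESSAGE = "JavaScript heap out of memory"
EXIT_CODE = re.compile(r"Command failed with exit code (\d+)")


def summarize_build_log(text):
    """Count the failure signatures that appear in a `jupyter lab build` debug log."""
    return {
        "oom_crashes": text.count(OOM_MESSAGE),
        "permission_denied": text.count("Permission denied"),
        # How often yarn reported each non-zero exit code.
        "exit_codes": Counter(int(code) for code in EXIT_CODE.findall(text)),
        "build_failed": "JupyterLab failed to build" in text,
    }
```

Usage: `summarize_build_log(Path("build.log").read_text())`, after saving the output of `jupyter lab build --log-level=DEBUG` to a file (the file name is only an example).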


Hi Aswin,

We tried the same steps on our end and were able to build it successfully.

Could you please follow the steps below?

Step 1: Create a new conda environment and activate it:

conda create -n <env_name> python=3.7

conda activate <env_name>

Step 2: Install jupyterlab via conda

conda install jupyterlab

Step 3: Install nodejs via conda

conda install nodejs

Step 4: Install the Elyra Workflow

pip install --upgrade elyra[all]

Step 5: Run jupyter lab build

jupyter lab build --minimize=False --no-minimize --dev-build=False --log-level=DEBUG

[Note: Make sure you are working in the conda environment you created.]
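To act on that note before starting a long build, here is a Python sketch with a hypothetical helper, `check_build_env`. It compares the active environment, as reported by conda's `CONDA_DEFAULT_ENV` and `CONDA_PREFIX` variables, with the one you expect. It also checks that `node` and `jupyter` resolve inside that environment rather than to a system-wide copy. The `which` parameter is injectable only so the check can be tested without a real environment.

```python
import os
import shutil


def check_build_env(expected_env, environ=None, which=shutil.which):
    """Return a list of problems that could make `jupyter lab build` use the wrong tools."""
    environ = os.environ if environ is None else environ
    problems = []

    active = environ.get("CONDA_DEFAULT_ENV")
    if active != expected_env:
        problems.append(f"active conda env is {active!r}, expected {expected_env!r}")

    prefix = environ.get("CONDA_PREFIX")
    for tool in ("node", "jupyter"):
        path = which(tool)
        if path is None:
            problems.append(f"{tool} not found on PATH")
        elif prefix:
            abs_path, abs_prefix = os.path.abspath(path), os.path.abspath(prefix)
            if os.path.commonpath([abs_path, abs_prefix]) != abs_prefix:
                # The tool comes from outside the environment (e.g. a system Node.js).
                problems.append(f"{tool} resolves to {path}, outside {prefix}")
    return problems
```

An empty list means the environment looks right. Otherwise, print the problems and fix them before running Step 5.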

Hope this resolves your issue.

Thanks for your patience!!


It's still not working.

After installing Elyra, I get a "Killed" message.

JupyterLab is still giving me "FATAL ERROR: NewSpace::Rebalance Allocation failed - JavaScript heap out of memory".

Sorry, it's still not working on my side.


Hi,

Thanks for accepting our solution. If you need any additional information, please post a new question, as this thread will no longer be monitored by Intel.

Thanks and Regards,

Rahila T
