Hello,

I am training a model with XGBoost and processing data in parallel with Dask. It currently runs on CPU, but I need to run it on GPU and across multiple nodes. For my previous attempt, I followed the approach suggested at https://devcloud.intel.com/oneapi/documentation/job-submission/

I tried running the commands that show which device the job is running on, but I got no output. Also, the job sits in the queue for about 5-6 hours and then the DevCloud session times out. Is this expected? If not, could you tell me why it might be happening? I am attaching a screenshot for your reference.

Thank you

Hi,

Thank you for posting in Intel Communities.

Could you please try the suggestions below?

1. Increase the walltime

=======================

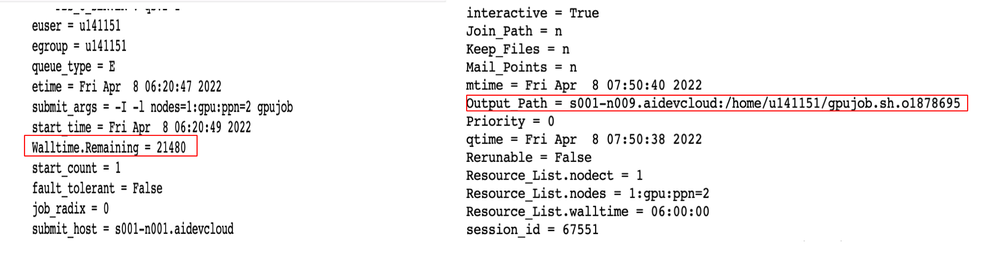

From the screenshot, we can see that the wall time is set to 6 hours. The wall time can be set to a maximum of 24 hours; this cap ensures fair use of resources by all DevCloud users.

Please change the wall time to the 24-hour maximum and submit again. To raise the limit from the default to 24 hours, add "#PBS -l walltime=24:00:00" to your job file.

You can follow the steps below:

1. Create a job file

$ vi job

2. Add the following lines to the file

#PBS -l walltime=24:00:00

conda activate env

python project.py

3. Save the file and run the job

$ qsub job
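If you need several submissions that differ only in wall time or script name, the job file from the steps above could also be generated by a short script. Here is a minimal Python sketch of that idea. The helper names format_walltime and build_job_script are hypothetical; the environment name env and the script project.py come from the steps above, and the 24-hour cap is the limit stated earlier.

```python
def format_walltime(hours: int, minutes: int = 0, seconds: int = 0) -> str:
    """Return an hh:mm:ss string, rejecting requests above the 24-hour cap."""
    if hours < 0 or not 0 <= minutes < 60 or not 0 <= seconds < 60:
        raise ValueError("invalid walltime components")
    total = hours * 3600 + minutes * 60 + seconds
    if total == 0 or total > 24 * 3600:
        raise ValueError("walltime must be between 1 second and 24 hours")
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


def build_job_script(walltime: str, env_name: str, script: str) -> str:
    """Assemble the job file text: the PBS directive first, then the commands."""
    lines = [
        f"#PBS -l walltime={walltime}",
        f"conda activate {env_name}",
        f"python {script}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the job file; redirect the output to a file and pass it to qsub.
    print(build_job_script(format_walltime(24), "env", "project.py"), end="")
```

Redirecting the printed text into a file named job gives the same file as step 2, which can then be submitted with qsub job.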

2. Use XGBoost Optimized for Intel Architecture

===================================================

You can find the details of XGBoost GPU support at the link below:

https://xgboost.readthedocs.io/en/stable/gpu/index.html#xgboost-gpu-support

It states: "The GPU algorithms in XGBoost require a graphics card with compute capability 3.5 or higher, with CUDA toolkits 10.1 or later."

Since these GPU algorithms depend on CUDA, you can instead use XGBoost Optimized for Intel Architecture:

https://www.intel.com/content/www/us/en/developer/tools/oneapi/onedal.html#gs.zzktzh

Hope this helps. If the issue still persists, please let us know which DevCloud (oneAPI DevCloud, Edge DevCloud, or FPGA DevCloud) you are using.

Thanks

Thank you for your help.

Will increasing the wall time make sure the job does not crash? And how long will the job take to run, given the time it spends in the queue?

I tried to run it, but it was not clear whether the job was running, and then it stopped. I am using oneAPI DevCloud.

Could you please look into it? Thank you

Regards,

Manjari

Hi,

We have not heard back from you. Is your issue resolved? Could you please give us an update?

Thanks

Hi,

By default, any job is terminated automatically at the 6-hour mark. Use the following syntax if your job requires more than 6 hours to complete:

qsub […] -l walltime=hh:mm:ss

Example:

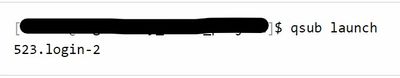

Once the job enters the queue, qsub will return the job ID, in this example 523.login-2. Make a note of the job number, which in this example is 523.

The job will be scheduled for execution. Once it completes, you will find two files, where <job-name> is the job name and 523 is the job number:

1. <job-name>.o523 is the standard output stream of the job

2. <job-name>.e523 is the standard error stream of the job
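The naming rule above can be sketched in a few lines of Python, which is handy when a script needs to locate the output of a job it has just submitted. The function name output_files and the job name hello are hypothetical; the job ID format 523.login-2 is the one from the example above.

```python
from typing import Tuple


def output_files(job_id: str, job_name: str) -> Tuple[str, str]:
    """Map a job ID such as '523.login-2' to its stdout and stderr file names."""
    # The job number is the part of the job ID before the first dot.
    job_number = job_id.split(".", 1)[0]
    if not job_number.isdigit():
        raise ValueError(f"unexpected job ID format: {job_id!r}")
    return f"{job_name}.o{job_number}", f"{job_name}.e{job_number}"


if __name__ == "__main__":
    stdout_file, stderr_file = output_files("523.login-2", "hello")
    print(stdout_file)
    print(stderr_file)
```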

Managing Submitted Jobs

===============================

Querying Job Status

------------------------

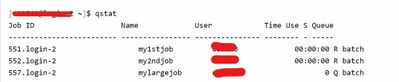

To get a list of running and queued jobs, use qstat:

Status R means the job is running, status Q means it is waiting in the queue.

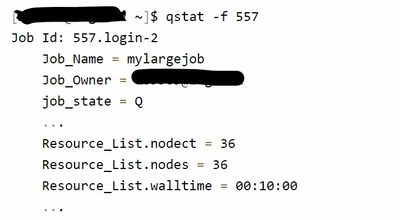

To get full information about the job, use qstat -f:
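If it is hard to tell whether a job is running, the status codes described above can be read programmatically. The Python sketch below parses text in the layout that qstat typically prints. The sample text, the user u100, and the job names are made up for illustration, and the column layout is an assumption that may differ on your system, so check it against your own qstat output.

```python
from typing import Dict

# Illustrative sample only; real output may use different columns or widths.
SAMPLE = """\
Job ID                    Name             User            Time Use S Queue
------------------------- ---------------- --------------- -------- - -----
523.login-2               hello            u100            00:00:05 R batch
524.login-2               train            u100            0        Q batch
"""

MEANING = {"R": "running", "Q": "waiting in the queue"}


def parse_qstat(text: str) -> Dict[str, str]:
    """Return a mapping of job ID to status letter."""
    jobs = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip blank lines, the header row, and the dashed separator.
        if len(fields) < 6 or fields[0] == "Job" or set(fields[0]) == {"-"}:
            continue
        # Assumed layout: the status letter is the second-to-last column.
        jobs[fields[0]] = fields[-2]
    return jobs


if __name__ == "__main__":
    for job_id, status in parse_qstat(SAMPLE).items():
        print(job_id, MEANING.get(status, status))
```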

For more details, please refer to the links below:

https://devcloud.intel.com/oneapi/documentation/advanced-queue/

https://devcloud.intel.com/oneapi/documentation/job-submission/

If this resolves your issue, please accept this as a solution. This will help others with a similar issue.

Thanks

I have started my job as described above. However, I was wondering how long a simple job running a basic Python script should take.

When I run it in a Jupyter notebook, it finishes in seconds, so I would like to know how long a job submission would take for that same simple script. Thank you!

Hello,

Thank you for all your help. I am sorry to bother you so much, but I have a deliverable next week for which I have to complete about 4-5 job submissions. My scripts work, but when I submit a job, oneAPI DevCloud crashes after about 30 minutes because the server restarts after getting disconnected for some reason. It would be great if you could help me with this issue. Also, is it possible to run the job through a terminal in JupyterHub?

Thank you!

Regards,

Manjari Misra

Hi,

We are sorry you are experiencing issues using the DevCloud.

Could you please check whether there are any running jobs by using the command "qstat"?

If there are any, please kill them using the command "qdel <job ID>". You can get the job ID from the qstat output.

You can use the command "logout" to exit from the compute node. Then use the command "qsub -I" to enter a new compute node, where you can try to submit the job again.

Please also find our responses below, and let us know if you have any questions.

1. Regarding 4-5 job submissions:

We are working to optimize our queue policies to balance the needs of all users. Currently, each user may run either one job on two nodes simultaneously or two jobs each on one node.

2. Regarding oneAPI DevCloud crashing after 30 minutes because the server restarts:

In your case, the server is not being kept alive because of inactivity and is getting killed.

To resolve this, open your SSH config file (~/.ssh/config). In the SSH configuration, for the hosts below

- devcloud

- *.aidevcloud

- devcloud-vscode

add the following lines:

Host *

ServerAliveInterval 300

TCPKeepAlive no

Example:

Host devcloud

User uXXXX

IdentityFile ~/.ssh/devcloud-access-key-uXXXX.txt

ProxyCommand ssh -T -i ~/.ssh/devcloud-access-key-uXXXX.txt guest@ssh.devcloud.intel.com

ServerAliveInterval 300

TCPKeepAlive no

Once the configuration is added, please try again and let me know if you are still facing connection timed out issues.

3. Regarding running the job through a terminal in JupyterHub:

To access DevCloud using JupyterLab, please follow the steps below:

Go to the link: https://devcloud.intel.com/oneapi/get_started/

At the end of the page, under Connect with Jupyter* Lab, click Launch JupyterLab*.

After JupyterLab launches, follow the path below.

File --> New --> Terminal. This gives you a terminal on a compute node.

If the issue still persists, please share a sample reproducer along with the complete steps to reproduce the issue.

Thanks

Hi,

We have not heard back from you. Is your issue resolved?

Please let us know if we can go ahead and close this case.

Thanks

Hi,

Thank you for your help so far. The issue has not been resolved yet. Is it possible for you to give me more information about job submission on multiple nodes?

Thank you

Regards,

Manjari

Hi,

Thanks for your updates.

To run distributed-memory jobs, you can request that more than one node be assigned to your job.

Number of nodes Syntax:

============================

-l nodes=<count>:ppn=2

Your command file and application must invoke MPI or other distributed-memory frameworks to launch processing on the other nodes specified in the file referred to by the environment variable PBS_NODEFILE. You can combine a request for multiple nodes with a request for their specific features.

Example:

echo cat \$PBS_NODEFILE | qsub -l nodes=4:ppn=2

If you want to start multiple jobs with different parameters, you can use the same command file with different arguments.
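A Python script running inside a multi-node job can read the same node list that the example prints with cat. The sketch below assumes the file named by PBS_NODEFILE lists one host name per line and repeats each host once per requested slot (so twice with ppn=2). The function name unique_hosts is hypothetical.

```python
import os
from typing import Iterable, List


def unique_hosts(lines: Iterable[str]) -> List[str]:
    """Return host names in first-seen order, dropping repeats and blanks."""
    hosts: List[str] = []
    for line in lines:
        host = line.strip()
        if host and host not in hosts:
            hosts.append(host)
    return hosts


if __name__ == "__main__":
    nodefile = os.environ.get("PBS_NODEFILE")
    if nodefile:
        with open(nodefile) as handle:
            print(unique_hosts(handle))
    else:
        print("PBS_NODEFILE is not set; run this inside a PBS job.")
```

The resulting list could then be handed to whichever distributed launcher your job uses to start work on the other nodes.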

For requesting multiple nodes:

------------------------------------

When you launch a distributed-memory job, you have to explicitly request multiple compute nodes.

For example, to request 4 nodes, use the following arguments in the command file:

#PBS -l nodes=4:ppn=2
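The resource string follows the pattern nodes=<count>:ppn=2, optionally combined with node features. Below is a small Python sketch that assembles it. The helper names are hypothetical, and the feature name gpu in the usage lines is an assumption used only for illustration; please check the DevCloud documentation for the feature names that actually exist.

```python
from typing import Sequence


def resource_request(nodes: int, ppn: int = 2, features: Sequence[str] = ()) -> str:
    """Build a string such as 'nodes=4:ppn=2' for use with the -l option."""
    if nodes < 1 or ppn < 1:
        raise ValueError("nodes and ppn must be positive")
    # Features, if any, are placed between the node count and ppn.
    parts = [f"nodes={nodes}", *features, f"ppn={ppn}"]
    return ":".join(parts)


def pbs_directive(request: str) -> str:
    """Format the request as a directive line for the command file."""
    return f"#PBS -l {request}"


if __name__ == "__main__":
    print(pbs_directive(resource_request(4)))
    # 'gpu' is an assumed feature name, shown only as an example.
    print(pbs_directive(resource_request(1, features=["gpu"])))
```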

Please refer to the link below to learn more about jobs on multiple nodes.

https://devcloud.intel.com/oneapi/documentation/advanced-queue/

If you want to run a job on more than one compute node (i.e., a distributed-memory calculation), please follow the instructions in the section "Distributed-Memory Architecture".

In the previous example, the command file ran on only one node while all other nodes waited. To start a parallel job, you need an MPI program.

Running an MPI Program

-------------------------

In the Intel DevCloud for oneAPI Projects, compute nodes are interconnected with a Gigabit Ethernet network; the connection to the NFS-shared storage has higher-speed uplinks. The easiest and most portable way to handle communication in the Intel DevCloud for oneAPI Projects is to use the Message Passing Interface (MPI) library. Intel MPI is installed on all nodes.

Please refer to the link given above to learn more about running an MPI program.

Hope this clarifies your doubts.

Thanks

Hi,

We have not heard back from you. Is your issue resolved?

Please let us know if we can go ahead and close this case.

Thanks

Hi,

We have not heard back from you. This thread will no longer be monitored by Intel. If you need further assistance, please post a new question.

Thanks
