
I think I have made about five accounts now while learning how GANs work. I wrote code that should generate human faces, and it needs TensorFlow. Every time I run "pip install tensorflow", I get kicked out of my account. Then I get an email:

Your account for user ID uxxxxx has been deactivated as we saw prohibited use of Intel DevCloud, such as cryptocurrency mining, data scraping, or tampering with Intel DevCloud services. If you would like to use Intel DevCloud, please create a new account and restrict your use to education and computer science research only.

DevCloud seems like a good solution because this needs a lot of RAM, but if this keeps happening, it is a waste of time.

This should be reworked.

Installing from pip is not "cryptocurrency mining, data scraping, or tampering".

This also happens if I download the TensorFlow .whl file and install it from there.

Edit:

I was asked to create a new account. I did, and TensorFlow installed as it should.

Then I got banned when I downloaded a dataset for training.
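To put "needs a lot of RAM" into rough numbers, here is a back-of-envelope sketch. It assumes the images are resized to 256×256 RGB and stored as float32, and that the discriminator uses five unpadded 5×5 stride-1 convolutions (the last with 256 filters) followed by Flatten and Dense(64), as in the training code posted later in this thread. The image count of 10,000 is a hypothetical example, not the size of the actual dataset.

```python
# Back-of-envelope memory estimate. Assumptions: 256x256 RGB float32 inputs,
# discriminator layout copied from the training code in this thread,
# hypothetical dataset size of 10,000 images.

BYTES_PER_FLOAT32 = 4


def dataset_bytes(num_images: int, size: int = 256, channels: int = 3) -> int:
    """Bytes needed to hold num_images float32 images of size x size x channels."""
    return num_images * size * size * channels * BYTES_PER_FLOAT32


def conv_valid_output(size: int, kernel: int, stride: int = 1) -> int:
    """Spatial output size of a Conv2D with padding='valid' (the Keras default)."""
    return (size - kernel) // stride + 1


def discriminator_dense_params(size: int = 256, num_convs: int = 5,
                               last_filters: int = 256, units: int = 64) -> int:
    """Weights + biases of the Dense layer that follows Flatten()."""
    for _ in range(num_convs):
        size = conv_valid_output(size, kernel=5)
    flat_features = size * size * last_filters
    return flat_features * units + units


if __name__ == "__main__":
    n = 10_000  # hypothetical dataset size
    gib = 1024 ** 3
    print(f"dataset: {dataset_bytes(n) / gib:.2f} GiB")
    params = discriminator_dense_params()
    print(f"Dense(64) after Flatten: {params:,} parameters "
          f"({params * BYTES_PER_FLOAT32 / gib:.2f} GiB of float32 weights)")
```

Under these assumptions the Dense layer after Flatten dominates: the stride-1 convolutions only shrink 256×256 to 236×236, so Flatten produces 236·236·256 features. Adam also keeps two extra values per trainable weight (first and second moment estimates), so training needs several times the raw weight size.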




This is the training code. It is mostly the same as the DCGAN tutorial in the TensorFlow docs:

```python
import os

# Fetch the face dataset and install dependencies.
os.system("git clone https://github.com/dercodeKoenig/faces.git")
os.system("pip install tensorflow numpy opencv-python")

import time

import cv2
import numpy as np
import tensorflow as tf
from IPython import display
from tensorflow.keras import layers

# Load every readable image from faces/ and resize it to 256x256.
train_images = []
z = 0
total = len(os.listdir("faces"))
for name in os.listdir("faces"):
    img = cv2.imread(os.path.join("faces", name))
    if img is None:  # cv2.imread returns None for unreadable or non-image files
        continue
    train_images.append(cv2.resize(img, (256, 256)))
    z += 1
    print("\r" + str(z) + " / " + str(total), end="")

# Build a float32 array directly; dtype=object would create an array of
# Python objects, which is slow to convert and uses far more memory.
train_images = np.array(train_images, dtype="float32")
print("")
print(train_images.shape)

train_images = train_images.reshape(train_images.shape[0], 256, 256, 3)
train_images = (train_images - 127.5) / 127.5  # scale pixels to [-1, 1] to match tanh

BUFFER_SIZE = 60000
BATCH_SIZE = 256

train_dataset = tf.data.Dataset.from_tensor_slices(train_images).shuffle(BUFFER_SIZE).batch(BATCH_SIZE)


def make_generator_model():
    model = tf.keras.Sequential()
    model.add(layers.Dense(16 * 16 * 256, use_bias=False, input_shape=(100,)))
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())

    model.add(layers.Reshape((16, 16, 256)))
    assert model.output_shape == (None, 16, 16, 256)  # Note: None is the batch size

    model.add(layers.Conv2DTranspose(128, (5, 5), strides=(1, 1), padding='same', use_bias=False))
    assert model.output_shape == (None, 16, 16, 128)
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())

    # filters sets the output channel count, so it must be 64 to pass the asserts below.
    model.add(layers.Conv2DTranspose(64, (5, 5), strides=(2, 2), padding='same', use_bias=False))
    assert model.output_shape == (None, 32, 32, 64)
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())

    model.add(layers.Conv2DTranspose(64, (5, 5), strides=(2, 2), padding='same', use_bias=False))
    assert model.output_shape == (None, 64, 64, 64)
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())

    model.add(layers.Conv2DTranspose(64, (5, 5), strides=(2, 2), padding='same', use_bias=False))
    assert model.output_shape == (None, 128, 128, 64)
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())

    model.add(layers.Conv2DTranspose(3, (5, 5), strides=(2, 2), padding='same', use_bias=False, activation='tanh'))
    assert model.output_shape == (None, 256, 256, 3)

    return model


generator = make_generator_model()


def make_discriminator_model():
    # Stride-1 'valid' convolutions only shrink 256x256 to 236x236, so Flatten
    # yields 236*236*256 features and the Dense(64) layer is very large.
    model = tf.keras.Sequential()
    model.add(layers.Conv2D(64, (5, 5), strides=(1, 1), input_shape=[256, 256, 3]))
    model.add(layers.LeakyReLU())
    model.add(layers.Dropout(0.3))

    for filters in (128, 128, 128, 256):
        model.add(layers.Conv2D(filters, (5, 5), strides=(1, 1)))
        model.add(layers.LeakyReLU())
        model.add(layers.Dropout(0.3))

    model.add(layers.Flatten())
    model.add(layers.Dense(64, activation="relu"))
    model.add(layers.Dense(1))  # raw logit; the loss below uses from_logits=True
    return model


discriminator = make_discriminator_model()

cross_entropy = tf.keras.losses.BinaryCrossentropy(from_logits=True)


def discriminator_loss(real_output, fake_output):
    real_loss = cross_entropy(tf.ones_like(real_output), real_output)
    fake_loss = cross_entropy(tf.zeros_like(fake_output), fake_output)
    return real_loss + fake_loss


def generator_loss(fake_output):
    return cross_entropy(tf.ones_like(fake_output), fake_output)


generator_optimizer = tf.keras.optimizers.Adam(1e-4)
discriminator_optimizer = tf.keras.optimizers.Adam(1e-4)

EPOCHS = 400
noise_dim = 100
num_examples_to_generate = 20
seed = tf.random.normal([num_examples_to_generate, noise_dim])

checkpoint_dir = './training_checkpoints'
checkpoint_prefix = os.path.join(checkpoint_dir, "ckpt")
checkpoint = tf.train.Checkpoint(generator_optimizer=generator_optimizer,
                                 discriminator_optimizer=discriminator_optimizer,
                                 generator=generator,
                                 discriminator=discriminator)
checkpoint.restore(tf.train.latest_checkpoint(checkpoint_dir))  # resume if a checkpoint exists


@tf.function
def train_step(images):
    noise = tf.random.normal([BATCH_SIZE, noise_dim])

    with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:
        generated_images = generator(noise, training=True)

        real_output = discriminator(images, training=True)
        fake_output = discriminator(generated_images, training=True)

        gen_loss = generator_loss(fake_output)
        disc_loss = discriminator_loss(real_output, fake_output)

    gradients_of_generator = gen_tape.gradient(gen_loss, generator.trainable_variables)
    gradients_of_discriminator = disc_tape.gradient(disc_loss, discriminator.trainable_variables)

    generator_optimizer.apply_gradients(zip(gradients_of_generator, generator.trainable_variables))
    discriminator_optimizer.apply_gradients(zip(gradients_of_discriminator, discriminator.trainable_variables))


def train(dataset, epochs):
    for epoch in range(epochs):
        start = time.time()

        for image_batch in dataset:
            train_step(image_batch)

        if epoch % 20 == 0:
            print("save chkp")
            checkpoint.save(file_prefix=checkpoint_prefix)

        display.clear_output(wait=True)
        generate_images(generator, epoch + 1, seed)
        print('Time for epoch {} is {} sec'.format(epoch + 1, time.time() - start))


def generate_images(model, epoch, test_input):
    predictions = model(test_input, training=False)
    # Map tanh output from [-1, 1] back to [0, 255] and convert to 8-bit for JPEG.
    generated_images = (np.array(predictions, dtype='float32') + 1) * 127.5
    generated_images = np.clip(generated_images, 0, 255).astype(np.uint8)

    # Find the first unused file number (checked in outputs/0/).
    highest = 0
    while True:
        highest += 1
        if not os.path.exists("outputs/0/" + str(highest) + ".jpg"):
            break

    # Sample number cz is written to outputs/<cz>/<highest>.jpg.
    for cz, img in enumerate(generated_images):
        cv2.imwrite("outputs/" + str(cz) + "/" + str(highest) + ".jpg", img)


os.makedirs("outputs", exist_ok=True)
for i in range(num_examples_to_generate):
    os.makedirs("outputs/" + str(i), exist_ok=True)

train(train_dataset, EPOCHS)
```
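The asserts in make_generator_model only hold if each Conv2DTranspose's filters argument matches the asserted channel count, because with padding='same' the layer multiplies height and width by the stride and sets the channel count to filters. A layer declared with 128 filters therefore cannot produce the asserted (None, 32, 32, 64). Below is a small pure-Python sketch (no TensorFlow needed) that walks the layer stack and compares each step to the asserted shapes; the (filters, stride) lists are hand-copied from the code, so keeping them in sync is an assumption.

```python
# Shape walk-through for the generator's Conv2DTranspose stack.
# Assumption: padding='same', so output size = input size * stride,
# and the channel count equals the layer's filters argument.

from typing import List, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels), batch dimension omitted


def conv_transpose_same(shape: Shape, filters: int, stride: int) -> Shape:
    """Output shape of Conv2DTranspose(filters, kernel, strides=stride, padding='same')."""
    h, w, _ = shape
    return (h * stride, w * stride, filters)


def check_stack(start: Shape, layer_specs: List[Tuple[int, int]],
                expected: List[Shape]) -> List[bool]:
    """Return, per layer, whether the computed shape equals the asserted one."""
    results = []
    shape = start
    for (filters, stride), want in zip(layer_specs, expected):
        shape = conv_transpose_same(shape, filters, stride)
        results.append(shape == want)
    return results


# Shapes asserted in make_generator_model, after Reshape((16, 16, 256)).
ASSERTED = [(16, 16, 128), (32, 32, 64), (64, 64, 64), (128, 128, 64), (256, 256, 3)]

# (filters, stride) per Conv2DTranspose layer.
WITH_128 = [(128, 1), (128, 2), (128, 2), (128, 2), (3, 2)]
WITH_64 = [(128, 1), (64, 2), (64, 2), (64, 2), (3, 2)]

if __name__ == "__main__":
    print(check_stack((16, 16, 256), WITH_128, ASSERTED))
    print(check_stack((16, 16, 256), WITH_64, ASSERTED))
```

With 128 filters in the three middle layers, only the first and last layers match their asserts; with 64 filters every step matches, and the spatial size doubles 16 → 32 → 64 → 128 → 256.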


Hi,

Thank you for posting in Intel Forums. We're checking on this and will get back to you with an update. Meanwhile, can you let us know whether you've installed any other packages apart from TensorFlow?

Also, which dataset did you download? Please also share a screenshot of the error message.

Regards,

Alekhya


I downloaded a dataset from GitHub with the git command, but I get banned even when I only run "pip install tensorflow".

I created an account for oneAPI and opened the Jupyter workspace. Then I opened a terminal and typed "pip install tensorflow", nothing else.

When pip gets to the point where it installs the collected packages, I get kicked out. pip works with other packages like "opencv-python", but it kicks me out with tensorflow. Maybe a screen-recorded video would help more than a screenshot? But I can only upload 71 MB, and I think that is not enough for a video. There is no real error message: Jupyter just freezes, and when I check my mail I find the message I already posted.
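The training script in this thread also runs pip install tensorflow numpy opencv-python through os.system every time it starts. Below is a minimal sketch of installing only the packages whose modules cannot be found yet, using importlib.util.find_spec and pip invoked through the running interpreter. The pip-name to import-name mapping (for example opencv-python → cv2) is written by hand here, and this only avoids redundant installs; it is not a known workaround for the DevCloud deactivations described above.

```python
# Install only packages whose import modules are missing (sketch; the
# pip-name -> import-name mapping below is hand-written, not queried from pip).

import importlib.util
import subprocess
import sys
from typing import Dict, List

# pip package name -> module name used in `import ...`
REQUIREMENTS: Dict[str, str] = {
    "tensorflow": "tensorflow",
    "numpy": "numpy",
    "opencv-python": "cv2",
}


def missing_packages(requirements: Dict[str, str]) -> List[str]:
    """Return the pip names whose module cannot be found on sys.path."""
    return [pip_name for pip_name, module in requirements.items()
            if importlib.util.find_spec(module) is None]


def install_missing(requirements: Dict[str, str]) -> None:
    """Run `python -m pip install` for missing packages only (no-op if none)."""
    missing = missing_packages(requirements)
    if missing:
        # Use the running interpreter's pip so packages land in the same environment.
        subprocess.check_call([sys.executable, "-m", "pip", "install", *missing])


if __name__ == "__main__":
    print("missing:", missing_packages(REQUIREMENTS))
```

In the training script, calling install_missing(REQUIREMENTS) would take the place of the os.system("pip install ...") line; once everything is installed, later runs skip pip entirely.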


Hi,

We apologize for the inconvenience caused. We've contacted the DevCloud team regarding this issue. We will get back to you soon with an update.

Regards,

Alekhya


Anyway, I've done it with Google Colab now. But thanks for trying to help.


Hi,

In our efforts to thwart the progress of cryptocurrency miners and keep DevCloud compute resources free and available to our valued developers, we have had to take both automated and manual action against unauthorized activities by terminating accounts. Unfortunately, we are aware that, while infrequent, several valid accounts have been terminated inadvertently during this process.

We apologize for any inconvenience this may have caused, and we are continually working to avoid this and to create new capabilities for account recovery.

At this time, we recommend that all users back up their data to avoid losing any work. Please register to open a new account here: http://software.intel.com/devcloud/oneapi

Regards,

Alekhya


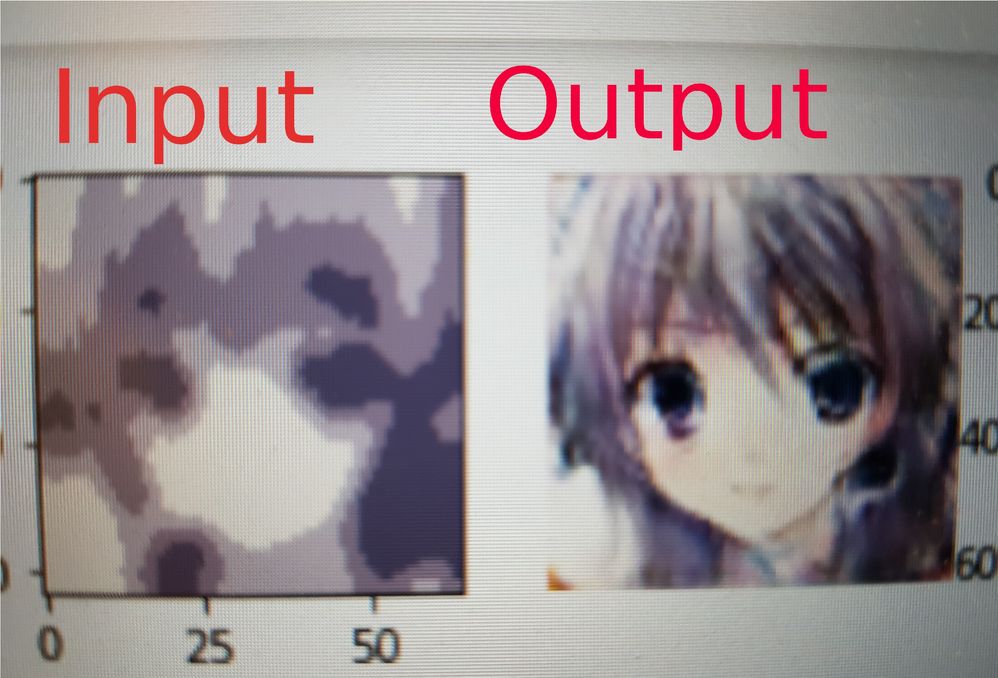

Ok I thought something like that. Luckily there was no important data on the devcloud. Oneapi is great. Look at this nice results:


Hi,

Thank you for accepting our solution. If you need any additional information, please submit a new thread, as this thread will no longer be monitored.

Regards,

Alekhya
