Hello everyone,

I started using OpenVINO a month ago, so I'm still a beginner, but I have already used the OpenVINO toolkit to run inference with an Inception-ResNet-V2 model, and it worked just fine. Now I am trying to do the same with a DenseNet-201 model, but the results are completely different from the ones I get in TensorFlow.

First, I used this command in a Windows 10 terminal to convert my TensorFlow model to Intel's IR format:

py mo.py --saved_model_dir C:\PATHTOMYMODEL -b 1 --mean_values [123.68,116.78,103.94] --scale_values [58.395,57.12,57.375]

For the mean and scale values, I used the documentation at this link: https://docs.openvinotoolkit.org/latest/omz_models_model_densenet_201_tf.html
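For reference, --mean_values and --scale_values bake a per-channel normalization into the IR: each channel value has its mean subtracted and is then divided by its scale. The sketch below reproduces that arithmetic in plain Python for a single RGB pixel, so it can be compared with whatever preprocessing the TensorFlow pipeline applies. The function name and the sample pixel are illustrative assumptions, not part of OpenVINO.

```python
# Values copied from the mo.py command above (RGB order).
MEAN_VALUES = [123.68, 116.78, 103.94]
SCALE_VALUES = [58.395, 57.12, 57.375]


def normalize_pixel(pixel, mean=MEAN_VALUES, scale=SCALE_VALUES):
    """Apply (value - mean) / scale to each channel of one pixel."""
    return [(v - m) / s for v, m, s in zip(pixel, mean, scale)]


# Hypothetical pixel: a channel equal to its mean maps to 0.0.
print(normalize_pixel([123.68, 116.78, 103.94]))  # [0.0, 0.0, 0.0]
```

If the application also normalizes images before passing them to the IR, the normalization would be applied twice, which is one possible source of differing predictions.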

I also consulted the page that lists the topologies supported for TensorFlow-to-IR conversion, where DenseNet appears under "Other supported topologies": https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_prepare_model_convert_model_Convert_Model_From_TensorFlow.html

For other models such as Inception, the conversion parameters are given in that section. So my question is: is TensorFlow's DenseNet-201 supported for conversion with OpenVINO, or am I missing something needed for a correct conversion?

Hi Ayaan,

Thanks for reaching out to us.

Looking at your command line, you have supplied the minimum arguments the Model Optimizer requires to convert the densenet-201-tf model into IR format. However, a few arguments are missing, which might be why you are not getting the expected predictions.

You can find all the required Model Optimizer arguments in this file:

<installed_dir>\deployment_tools\open_model_zoo\models\public\densenet-201-tf\model.yml

This information is also available here:

I would also like to share an easier way to convert the densenet-201-tf model into IR format without specifying all the arguments: converter.py.

python "<installed_dir>\deployment_tools\tools\model_downloader\converter.py" --name densenet-201-tf

Regards,

Peh

Sir,

First, thank you for your reply, it's really helpful. But I still have two questions:

I used the command line below to create the IR, following the link you gave me, but it didn't work when I used the model in my custom application (I got an error when loading the model). The only parameter I didn't use was "--output". Is it essential for my conversion?

py mo.py --reverse_input_channels --input_shape=[1,224,224,3] --input=input_1 --mean_values=input_1[123.68,116.78,103.94] --scale_values=input_1[58.395,57.12,57.375] --saved_model_dir=PATH_TO_TENSORFLOW_SAVED_MODEL_DIRECTORY
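The same conversion can also be launched from Python, which avoids shell quoting problems with the bracketed values. This is only a sketch: build_mo_command and run_conversion are hypothetical helpers, the paths are placeholders, and the flags are exactly the ones from the command above.

```python
import subprocess
import sys


def build_mo_command(mo_script, saved_model_dir):
    """Return the argv list for the mo.py invocation shown above."""
    return [
        sys.executable, mo_script,
        "--reverse_input_channels",
        "--input_shape=[1,224,224,3]",
        "--input=input_1",
        "--mean_values=input_1[123.68,116.78,103.94]",
        "--scale_values=input_1[58.395,57.12,57.375]",
        f"--saved_model_dir={saved_model_dir}",
    ]


def run_conversion(cmd):
    """Run the conversion; raises CalledProcessError if mo.py fails."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Placeholder paths; replace them with real locations before running.
    cmd = build_mo_command("mo.py", "PATH_TO_TENSORFLOW_SAVED_MODEL_DIRECTORY")
    print(" ".join(cmd))
```

Passing the arguments as a list means each flag reaches mo.py as a single argument, regardless of how the terminal treats square brackets.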

Finally, I tried to use converter.py, but I don't understand which parameters to give this script. I tried giving it my ".pb" file and the directory where I saved the TF2 model (with the .pb file and the variables directory), and that didn't work either.

Thanks again for your help and have a nice day!

Hi Ayaan,

For your first question, --output tells the Model Optimizer which layer is the output layer. This parameter is optional, but it can be important, especially for complex models that have many output layers.

However, this parameter only affects whether the model converts to IR successfully. To check, I ran the classification_sample_async.py sample with two IR models (one converted with the --output parameter and one without). I got the same inference results from both.

Please note that this densenet-201-tf model is designed to perform image classification.

For your next question, converter.py is an alternative way to convert Intel public models, on the condition that you first run downloader.py to download them. You don't have to specify any parameters when running converter.py because they are all taken from the model's model.yml.

For this densenet-201-tf model:

- Run downloader.py to download the densenet201_weights_tf_dim_ordering_tf_kernels.h5 file.

- Run converter.py, which does two things:

  - Runs the pre-convert script for densenet-201-tf to produce a SavedModel directory containing the .pb file and the variables and assets directories.

  - Converts the .pb file from the SavedModel directory into IR models, using all the parameters from its model.yml.
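The steps above can be sketched as two subprocess calls. The helper names are hypothetical, the tools directory is a placeholder (downloader.py is assumed to sit next to converter.py), and --name is the only flag used.

```python
import subprocess
import sys
from pathlib import Path

MODEL_NAME = "densenet-201-tf"


def zoo_commands(tools_dir, model_name=MODEL_NAME):
    """Return the downloader.py and converter.py argv lists, in run order."""
    tools_dir = Path(tools_dir)
    return [
        [sys.executable, str(tools_dir / script), "--name", model_name]
        for script in ("downloader.py", "converter.py")
    ]


def download_and_convert(tools_dir):
    """Download the public model, then convert it using its model.yml."""
    for cmd in zoo_commands(tools_dir):
        subprocess.run(cmd, check=True)  # stop at the first failing step
```

Calling download_and_convert with the model_downloader directory of the installation would run both steps in order and stop if the download fails.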

Regards,

Peh

Hello Peh,

OK, I understand what you meant about converter.py. It does seem easier, but my models are DenseNet-201 with custom dense layers at the end, so I don't think the solution is to download an Intel public model that wasn't trained the way mine was. Or should I perhaps save my model in .h5 format to do the conversion with the OpenVINO tools?

I will test the Model Optimizer method again with the --output option, since my IR was the reason I got errors when loading the model with the Inference Engine library (an error code when loading the CNN).

Regards,

Ayaan

Hi Ayaan,

You can try saving your custom densenet-201-tf model as a .h5 file and running pre-convert.py to generate a SavedModel directory that contains the .pb file and the variables and assets directories. Then convert the generated .pb file into IR format with mo.py.

The command for pre-convert.py:

pre-convert.py <input_dir> <out_dir>

Note: There must be a .h5 file inside <input_dir>, and its name must match the name used in the pre-convert.py script. You can either rename your .h5 file to densenet201_weights_tf_dim_ordering_tf_kernels.h5 or change the name in the script. The SavedModel directory will be generated in <out_dir>.

For instance:

python "<install_dir>\deployment_tools\open_model_zoo\models\public\densenet-201-tf\pre-convert.py" "Desktop\Input" "Desktop\Output"
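As a small sketch of the renaming option from the note above, the snippet below copies a custom .h5 file into the input directory under the file name the script looks for. stage_h5 is a hypothetical helper and the paths are placeholders. It only addresses the naming requirement, not whether the weights match the architecture that pre-convert.py builds.

```python
import shutil
from pathlib import Path

# File name that pre-convert.py looks for, as stated in the note above.
EXPECTED_NAME = "densenet201_weights_tf_dim_ordering_tf_kernels.h5"


def stage_h5(custom_h5, input_dir):
    """Copy a custom .h5 file into input_dir under the expected name."""
    input_dir = Path(input_dir)
    input_dir.mkdir(parents=True, exist_ok=True)
    target = input_dir / EXPECTED_NAME
    shutil.copyfile(custom_h5, target)  # the original file is left untouched
    return target
```

Copying rather than renaming keeps the original file in place, so the step can be repeated safely.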

Regards,

Peh

Hello Peh,

I followed your instructions and got the errors attached to this reply.

It seems that the number of layers isn't what is expected. As I mentioned, my model is a custom one: I removed the top layers and replaced them with dense and dropout layers, which may be why I get errors.

To do this I also had to reinstall OpenVINO (the latest release) because I didn't have the densenet-201-tf folder before.

Regards,

Ayaan

Hi Ayaan,

Alright. It seems this approach is not going to work because of the different number of layers.

Instead, please try converting your custom densenet-201-tf model with OpenVINO™ 2021.3.

Note: Don't forget to run install_prerequisites_tf2.bat and setupvars.bat from OpenVINO™ 2021.3.

Regards,

Peh

Hello Peh,

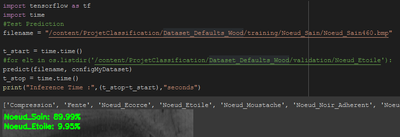

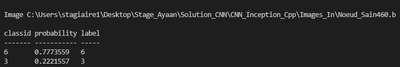

I reinstalled this version of openvino (I already used it last time), but I still got different results between the model I have on google colaboratory and the one converted thanks to openvino. I still want to point the fact that the prediction are pretty good and most of the time correct with the openvino's representation. But I still can't explain the different outputs between the two version :

Label 6 being "Noeud_Sain" and label 3 being "Noeud_Etoile". The two first predictions are indeed the same, but the probability of being the good result is never the same. So knowing that with Inception-Resnet-V2 I still have no difference between the two versions, I really don't know where I missed the point.
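A comparison like the one described here can be made explicit with a few lines of Python: check whether the top-k labels agree and how far apart the probabilities are. This is a minimal sketch; the helper names are hypothetical and the probability vectors are made up for illustration, not the values from the screenshots.

```python
def top_k(probs, k=2):
    """Return the k (label, probability) pairs with the highest probability."""
    ranked = sorted(enumerate(probs), key=lambda item: item[1], reverse=True)
    return ranked[:k]


def compare_outputs(probs_a, probs_b, k=2):
    """Report whether the top-k labels agree and the largest probability gap."""
    labels_a = [label for label, _ in top_k(probs_a, k)]
    labels_b = [label for label, _ in top_k(probs_b, k)]
    max_gap = max(abs(a - b) for a, b in zip(probs_a, probs_b))
    return labels_a == labels_b, max_gap


# Made-up probability vectors for illustration only (not the thread's results).
tf_probs = [0.01, 0.01, 0.01, 0.20, 0.01, 0.01, 0.75]
ov_probs = [0.02, 0.02, 0.02, 0.30, 0.02, 0.02, 0.60]
same_labels, gap = compare_outputs(tf_probs, ov_probs)
print(same_labels, round(gap, 2))  # True 0.15
```

Matching labels with a large gap, as in this made-up case, would point at a preprocessing or environment difference rather than a broken conversion.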

Thank you for your help,

Ayaan

Hi folks,

After installing TensorFlow locally on my computer and running inference, I got the same results as with OpenVINO, so my problem seems to come from my Google Colaboratory application, even though I use exactly the same Python code to infer the images in my local app.

Since my problem doesn't seem to come from OpenVINO, I think we can close this topic.

Thank you for everything!

Ayaan

Hi Ayaan,

Thank you for your question. If you need any additional information from Intel, please submit a new question as this thread is no longer being monitored.

Regards,

Peh
