
Hi,

I am using DL Workbench for model conversion and got the following error while converting the TensorFlow Mask R-CNN model available at: https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/tf2_detection_zoo.md

Error log:

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: None

- Path for generated IR: /opt/intel/openvino_2022.1.0.643/tools/workbench/.

- IR output name: saved_model

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Source layout: Not specified

- Target layout: Not specified

- Layout: Not specified

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- User transformations: Not specified

- Reverse input channels: False

- Enable IR generation for fixed input shape: False

- Use the transformations config file: None

Advanced parameters:

- Force the usage of legacy Frontend of Model Optimizer for model conversion into IR: False

- Force the usage of new Frontend of Model Optimizer for model conversion into IR: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: None

- Use the config file: None

OpenVINO runtime found in: /home/workbench/.workbench/environments/1/lib/python3.8/site-packages/openvino

OpenVINO runtime version: 2022.1.0-7019-cdb9bec7210-releases/2022/1

Model Optimizer version: 2022.1.0-7019-cdb9bec7210-releases/2022/1

[ ERROR ] -------------------------------------------------

[ ERROR ] ----------------- INTERNAL ERROR ----------------

[ ERROR ] Unexpected exception happened.

[ ERROR ] Please contact Model Optimizer developers and forward the following information:

[ ERROR ] Descriptors cannot not be created directly.

If this call came from a _pb2.py file, your generated code is out of date and must be regenerated with protoc >= 3.19.0.

If you cannot immediately regenerate your protos, some other possible workarounds are:

1. Downgrade the protobuf package to 3.20.x or lower.

2. Set PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python (but this will use pure-Python parsing and will be much slower).

More information: https://developers.google.com/protocol-buffers/docs/news/2022-05-06#python-updates

[ ERROR ] Traceback (most recent call last):

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/openvino/tools/mo/main.py", line 533, in main

ret_code = driver(argv)

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/openvino/tools/mo/main.py", line 489, in driver

graph, ngraph_function = prepare_ir(argv)

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/openvino/tools/mo/main.py", line 374, in prepare_ir

argv = arguments_post_parsing(argv)

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/openvino/tools/mo/main.py", line 259, in arguments_post_parsing

ret_code = check_requirements(framework=argv.framework)

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/openvino/tools/mo/utils/versions_checker.py", line 247, in check_requirements

env_setup = get_environment_setup(framework)

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/openvino/tools/mo/utils/versions_checker.py", line 225, in get_environment_setup

exec("import tensorflow")

File "<string>", line 1, in <module>

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/tensorflow/__init__.py", line 41, in <module>

from tensorflow.python.tools import module_util as _module_util

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/tensorflow/python/__init__.py", line 40, in <module>

from tensorflow.python.eager import context

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/tensorflow/python/eager/context.py", line 32, in <module>

from tensorflow.core.framework import function_pb2

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/tensorflow/core/framework/function_pb2.py", line 16, in <module>

from tensorflow.core.framework import attr_value_pb2 as tensorflow_dot_core_dot_framework_dot_attr__value__pb2

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/tensorflow/core/framework/attr_value_pb2.py", line 16, in <module>

from tensorflow.core.framework import tensor_pb2 as tensorflow_dot_core_dot_framework_dot_tensor__pb2

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/tensorflow/core/framework/tensor_pb2.py", line 16, in <module>

from tensorflow.core.framework import resource_handle_pb2 as tensorflow_dot_core_dot_framework_dot_resource__handle__pb2

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/tensorflow/core/framework/resource_handle_pb2.py", line 16, in <module>

from tensorflow.core.framework import tensor_shape_pb2 as tensorflow_dot_core_dot_framework_dot_tensor__shape__pb2

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/tensorflow/core/framework/tensor_shape_pb2.py", line 36, in <module>

_descriptor.FieldDescriptor(

File "/home/workbench/.workbench/environments/1/lib/python3.8/site-packages/google/protobuf/descriptor.py", line 560, in __new__

_message.Message._CheckCalledFromGeneratedFile()

TypeError: Descriptors cannot not be created directly.

If this call came from a _pb2.py file, your generated code is out of date and must be regenerated with protoc >= 3.19.0.

If you cannot immediately regenerate your protos, some other possible workarounds are:

1. Downgrade the protobuf package to 3.20.x or lower.

2. Set PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python (but this will use pure-Python parsing and will be much slower).

More information: https://developers.google.com/protocol-buffers/docs/news/2022-05-06#python-updates

[ ERROR ] ---------------- END OF BUG REPORT --------------

[ ERROR ] -------------------------------------------------
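The traceback shows the failure happens while Model Optimizer merely runs `import tensorflow`: the protobuf runtime installed in the Workbench Python environment (`/home/workbench/.workbench/environments/1` in this log) rejects TensorFlow's generated `_pb2.py` modules, and the message itself lists two workarounds. The sketch below is a hedged illustration of those workarounds, not part of DL Workbench or Model Optimizer: the helper names are hypothetical, and it assumes it is run with the same Python interpreter that runs Model Optimizer. Only `PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION` and the "3.20.x or lower" limit come from the error text.

```python
from importlib import metadata
from typing import Dict, Mapping, Optional


def installed_protobuf_version() -> Optional[str]:
    """Return the installed protobuf version string, or None if it is not installed."""
    try:
        return metadata.version("protobuf")
    except metadata.PackageNotFoundError:
        return None


def protobuf_needs_downgrade(version: str) -> bool:
    """True if `version` is newer than 3.20.x, the ceiling named in the error text (workaround 1)."""
    # Compare only the major and minor components, e.g. "4.21.1" -> (4, 21).
    major, minor = (int(part) for part in version.split(".")[:2])
    return (major, minor) > (3, 20)


def env_with_python_protobuf(base: Mapping[str, str]) -> Dict[str, str]:
    """Copy `base` and force the pure-Python protobuf backend (workaround 2).

    The variable only takes effect in a process that has not imported
    google.protobuf yet, so pass the result to a child process
    (for example subprocess.run(..., env=...)). Parsing will be slower.
    """
    env = dict(base)
    env["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION"] = "python"
    return env


if __name__ == "__main__":
    version = installed_protobuf_version()
    if version is None:
        print("protobuf is not installed in this environment")
    elif protobuf_needs_downgrade(version):
        print(f"protobuf {version} is newer than 3.20.x; "
              "consider: pip install 'protobuf<3.21'")
    else:
        print(f"protobuf {version} should not trigger this error")
```

Downgrading (workaround 1) keeps the faster protobuf backend, while the environment variable (workaround 2) avoids touching installed packages at the cost of slower parsing.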


Hi Vishnuj,

Thank you for reaching out to us.

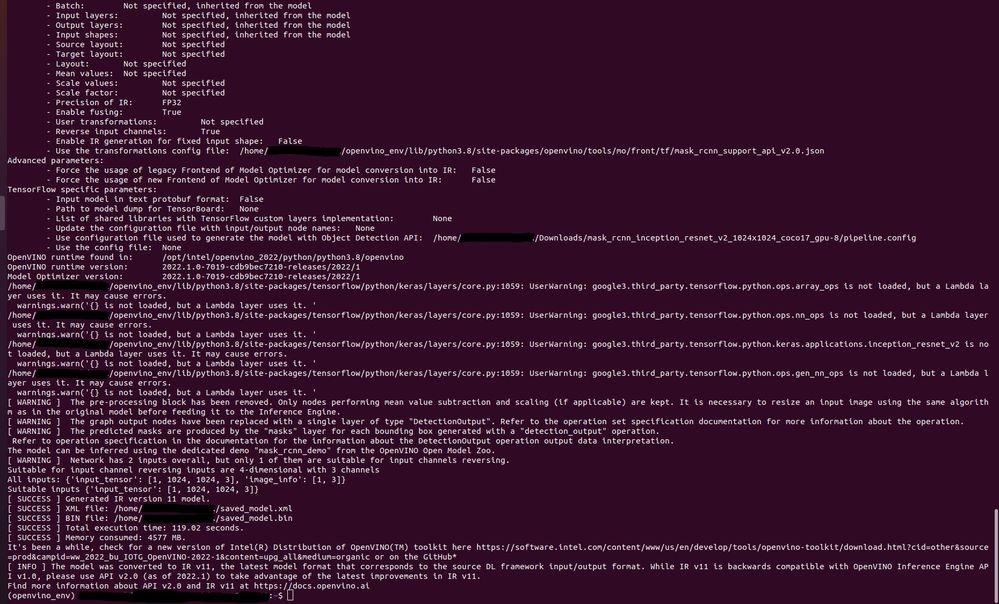

I reproduced the issue on my end with Mask R-CNN Inception ResNet V2 1024x1024 and encountered a similar error when converting the model with OpenVINO DL Workbench 2022.1.

We are investigating this issue. In the meantime, I suggest using the openvino-dev Python package to convert your models.

For your information, I was able to convert Mask R-CNN Inception ResNet V2 1024x1024 successfully using the openvino-dev[tensorflow2] Python package.
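The exact command used for that conversion is not given here. As a hedged sketch, assuming openvino-dev[tensorflow2] 2022.1 is installed with pip and the Detection Zoo archive has been extracted (it contains a `saved_model/` directory and a `pipeline.config` file), a Model Optimizer (`mo`) call for a TF2 Object Detection API model could be assembled as below. Note that the Workbench log above lists both the transformations config and the Object Detection API pipeline config as `None`. The transformations JSON file name depends on the OpenVINO and Object Detection API versions, so it is a parameter; the helper names and paths in the example are hypothetical.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List


def build_mo_command(saved_model_dir: Path,
                     pipeline_config: Path,
                     transformations_config: Path,
                     output_dir: Path) -> List[str]:
    """Assemble a Model Optimizer command line for a TF2 Object Detection API model."""
    return [
        "mo",
        "--saved_model_dir", str(saved_model_dir),
        "--tensorflow_object_detection_api_pipeline_config", str(pipeline_config),
        "--transformations_config", str(transformations_config),
        "--output_dir", str(output_dir),
    ]


def convert(cmd: List[str]) -> int:
    """Run the command if the `mo` entry point installed by openvino-dev is on PATH."""
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError(
            "`mo` not found; install it with: pip install 'openvino-dev[tensorflow2]'")
    return subprocess.run(cmd, check=False).returncode


if __name__ == "__main__":
    # Hypothetical location of the extracted Detection Zoo archive.
    model_root = Path("mask_rcnn_inception_resnet_v2_1024x1024_coco17_gpu-8")
    cmd = build_mo_command(
        saved_model_dir=model_root / "saved_model",
        pipeline_config=model_root / "pipeline.config",
        # Assumption: choose the Mask R-CNN JSON that matches your OpenVINO and
        # Object Detection API versions from openvino/tools/mo/front/tf/.
        transformations_config=Path("mask_rcnn_support_api_v2.0.json"),
        output_dir=Path("ir"),
    )
    print(" ".join(cmd))
    if model_root.is_dir():
        raise SystemExit(convert(cmd))
```

Building the argument list separately from running it makes it easy to print and inspect the command before starting a long conversion.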

Regards,

Hairul


Hi Vishnuj,

Thank you for your patience.

We have received a definitive reply from the Engineering team.

The locally installed version of DL Workbench will be deprecated and moved to critical-bug-fix-only mode. The cloud instance of DL Workbench on Developer Cloud for the Edge, on the other hand, will continue to be developed and maintained.

Therefore, we recommend you migrate to DL Workbench on Developer Cloud for the Edge.

For more information, please refer to the 'Support Change and Deprecation Notices' subsection under the 'New and Changed in 2022.3 LTS' section of the Release Notes for Intel® Distribution of OpenVINO™ Toolkit 2022.3 LTS.

Regards,

Hairul


Hi Vishnuj,

Since we have provided the requested information, this thread will no longer be monitored. If you need any additional information from Intel, please submit a new question.

Regards,

Hairul
