+ Description:

Inferring an IR (multi-input LSTM) in mode 'GNA_HW' doesn’t work.

+ Question:

*Q1: I can infer an IR (multi-input LSTM) in mode ‘GNA_SW_FP32’.

I can infer an IR (single-input LSTM) in mode ‘GNA_HW’.

However, I can’t infer an IR (multi-input LSTM) in mode ‘GNA_HW’.

Is there any way to infer a multi-input LSTM in mode ‘GNA_HW’ using the Python API?

*Note: The LSTM-based IRs were converted from PyTorch.

+ Sample code: attached file

* Required packages: VEN_requirement_date20211129.txt

* Script 1 (infer single-input LSTM with GNA_HW):

Issue01_OpenVINO_SingleInput_LSTMCell__GNA_HW.ipynb

* Script 2 (infer multi-input LSTM with GNA_SW_FP32):

Issue01_OpenVINO_MultiInput_LSTMCell__GNA_SW_FP32.ipynb

* Script 3 (infer multi-input LSTM with GNA_HW):

Issue01_OpenVINO_MultiInput_LSTMCell__GNA_HW.ipynb

* LSTM( single-input LSTM with Pytorh framwork):

Export_Main00\CPU_TorchModle_LSTM_CellTEST

* LSTM( multi-input LSTM with Pytorh framwork):

Export_Main00\CPU_TorchModle_LSTM_CellTEST_WithState

* LSTM( single-input LSTM with ONNX framwork) :

Export_Main00\CPU_TraceONNX_LSTM_CellTEST.onnx

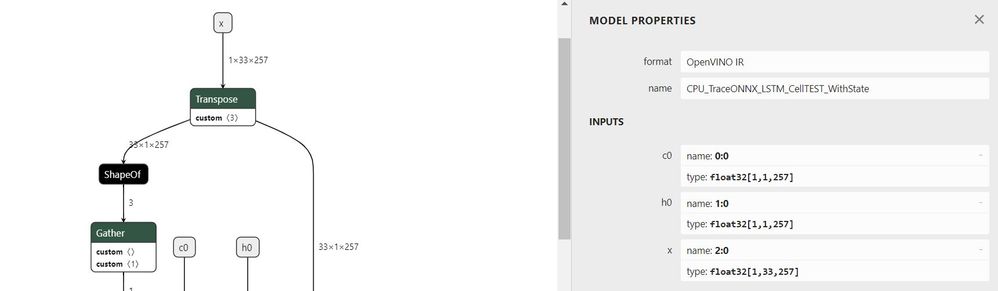

* LSTM( multi-input LSTM with ONNX framwork) :

Export_Main00\CPU_TraceONNX_LSTM_CellTEST_WithState.onnx

* LSTM(single-input LSTM with IR framwork) :

CPU_TraceONNX_LSTM_CellTEST.xml

CPU_TraceONNX_LSTM_CellTEST.bin

* LSTM(multi-input LSTM with IR framwork) :

CPU_TraceONNX_LSTM_CellTEST_WithState.xml

CPU_TraceONNX_LSTM_CellTEST_WithState.bin

+Example & Test:

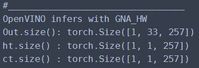

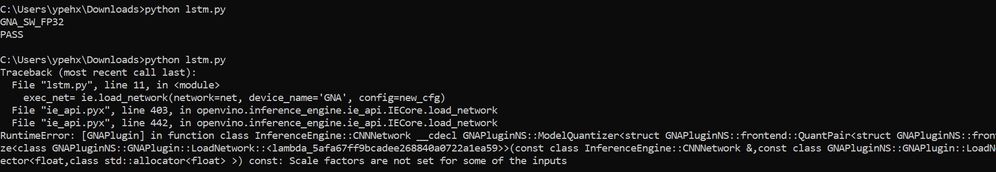

* Test 1: Inferring the single-input LSTM IR in mode ‘GNA_HW’ works.

- Input x: A tensor with the shape [batch size: 1, time step: 33, feature bins: 257].

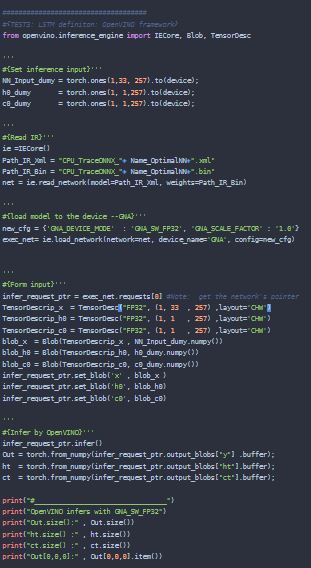

* Test 2: Inferring the multi-input LSTM IR in mode ‘GNA_SW_FP32’ works.

- Input x: A tensor with the shape [batch size: 1, time step: 33, feature bins: 257].

- Input h0: A tensor with the shape [feature bins: 257, batch size: 1, feature bins: 257].

- Input c0: A tensor with the shape [feature bins: 257, batch size: 1, feature bins: 257].

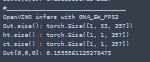

* Test 3: Inferring the multi-input LSTM IR in mode ‘GNA_HW’ does not work.

- Input x: A tensor with the shape [batch size: 1, time step: 33, feature bins: 257].

- Input h0: A tensor with the shape [feature bins: 257, batch size: 1, feature bins: 257].

- Input c0: A tensor with the shape [feature bins: 257, batch size: 1, feature bins: 257].
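For reference, the three input shapes listed for Tests 2 and 3 can be sketched as dummy NumPy tensors. This is only an illustration: the keys `x`, `h0`, and `c0` are labels taken from the list above and must be replaced with the real IR input names, and the random input, zero initial states, and `float32` dtype are assumptions rather than what the attached notebooks do.

```python
import numpy as np

# Shapes copied from the test description above.
# The keys are illustrative labels, not the IR's actual input names.
INPUT_SHAPES = {
    "x": (1, 33, 257),
    "h0": (257, 1, 257),
    "c0": (257, 1, 257),
}


def make_dummy_inputs(shapes, zero_init=("h0", "c0"), seed=0):
    """Build a name -> array dict suitable as a feed dictionary.

    Inputs named in `zero_init` are filled with zeros (assumed initial
    LSTM states); all other inputs get random float32 values.
    """
    rng = np.random.default_rng(seed)
    inputs = {}
    for name, shape in shapes.items():
        if name in zero_init:
            inputs[name] = np.zeros(shape, dtype=np.float32)
        else:
            inputs[name] = rng.standard_normal(shape).astype(np.float32)
    return inputs


dummy_inputs = make_dummy_inputs(INPUT_SHAPES)
```

A dictionary like this, once keyed by the real input names, is the kind of object that would be passed as the inputs of an inference call.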

+Results & source code of tests 1-3:

* Test 1 -- script & result:

* Test 2 -- script & result:

* Test 3 -- script & result:

Hi tea6329714,

Thanks for sharing your findings with us.

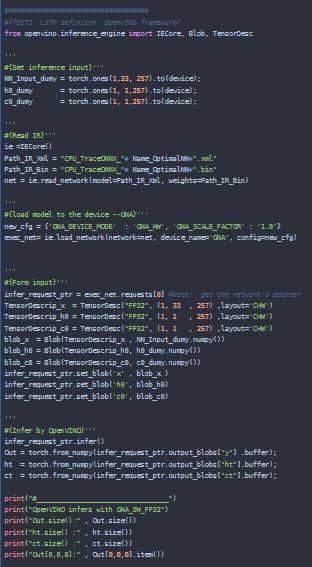

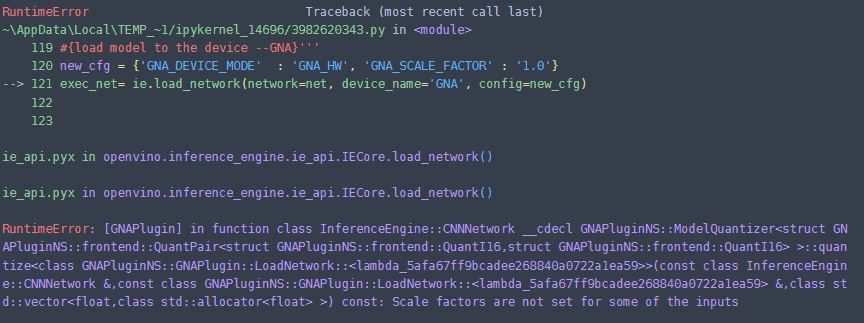

I was able to reproduce the issue with the minimal code below:

from openvino.inference_engine import IECore

ie = IECore()

net = ie.read_network(model=<Path_xml>, weights=<Path_bin>)

new_cfg = {'GNA_DEVICE_MODE': 'GNA_HW', 'GNA_SCALE_FACTOR': '1.0'}

exec_net = ie.load_network(network=net, device_name='GNA', config=new_cfg)

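According to gna_config.hpp, a network with multiple inputs can be given an individual scale factor per input, using a key of the form GNA_SCALE_FACTOR_<input layer name>. After setting an individual scale factor for every input, the network runs with GNA_HW.

Input layer names: 0:0, 1:0, 2:0

Replacement code: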

new_cfg = {'GNA_DEVICE_MODE': 'GNA_HW', 'GNA_SCALE_FACTOR_0:0': '1.0', 'GNA_SCALE_FACTOR_1:0': '1.0', 'GNA_SCALE_FACTOR_2:0': '1.0'}
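For networks with a different number of inputs, the same configuration could be built programmatically. The following is a minimal sketch, not part of the original fix: `build_gna_config` is a hypothetical helper name, and the default scale factor of 1.0 simply mirrors the value used above rather than a tuned one.

```python
def build_gna_config(input_names, device_mode="GNA_HW", scale_factor=1.0):
    """Return a GNA config dict with one scale-factor key per input.

    `build_gna_config` is a hypothetical helper; the key pattern
    GNA_SCALE_FACTOR_<input name> follows the replacement code above.
    """
    config = {"GNA_DEVICE_MODE": device_mode}
    for name in input_names:
        # Config values are passed as strings, e.g. '1.0'.
        config[f"GNA_SCALE_FACTOR_{name}"] = str(scale_factor)
    return config


# Input layer names reported for this IR.
new_cfg = build_gna_config(["0:0", "1:0", "2:0"])

# With OpenVINO installed, the names could instead be read from the
# loaded network (assuming the IECore-based Python API used above):
#   new_cfg = build_gna_config(net.input_info.keys())
#   exec_net = ie.load_network(network=net, device_name="GNA", config=new_cfg)
```

Deriving the keys from the network's input names avoids hard-coding them, which helps if the model is re-exported and the names change.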

Regards,

Peh

Hi tea6329714,

This thread will no longer be monitored since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Regards,

Peh
