
Hi!

I'm trying to quantize my model using OpenVINO.

I require that all model operations be executed with integer arithmetic, and I want the model's inputs and outputs to be int8.

I have an fp32 model in ONNX format. I used the Model Optimizer tool to convert it to an fp32 OpenVINO IR.

Then I used post-training quantization (PTQ) with the DefaultQuantization algorithm to quantize the model to int8.

I also ran the `compress_model_weights` function after PTQ to reduce the size of the .bin file.
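As a rough illustration of what weight compression means for storage, the sketch below quantizes an fp32 weight tensor to int8 with one scale per output channel and converts it back to fp32. The symmetric per-channel scheme and the helper names `quantize_weights_int8` / `dequantize_weights` are assumptions for illustration only; POT's actual scheme and the IR operations it emits are not reproduced here. Note that the restored weights are fp32 again, which is consistent with the rest of the graph still working in fp32.

```python
import numpy as np


def quantize_weights_int8(w: np.ndarray):
    """Hypothetical helper: symmetric per-output-channel int8 quantization.

    Assumption: axis 0 of `w` is the output-channel axis, as in a conv
    weight of shape (out_channels, in_channels, kh, kw).
    """
    # One scale per output channel so that the largest |w| maps to 127.
    max_abs = np.abs(w).reshape(w.shape[0], -1).max(axis=1)
    scale = np.where(max_abs > 0, max_abs / 127.0, 1.0).astype(np.float32)
    scale_b = scale.reshape(-1, *([1] * (w.ndim - 1)))  # broadcastable shape
    q = np.clip(np.round(w / scale_b), -127, 127).astype(np.int8)
    return q, scale


def dequantize_weights(q: np.ndarray, scale: np.ndarray) -> np.ndarray:
    """Hypothetical helper: int8 -> fp32, roughly a Convert followed by a Multiply."""
    scale_b = scale.reshape(-1, *([1] * (q.ndim - 1)))
    return q.astype(np.float32) * scale_b


rng = np.random.default_rng(0)
w = rng.standard_normal((4, 3, 3, 3)).astype(np.float32)
q, scale = quantize_weights_int8(w)
w_restored = dequantize_weights(q, scale)

# int8 storage takes one byte per weight instead of four.
print(q.dtype, q.nbytes, "bytes vs", w.nbytes, "bytes")
# Rounding error per weight is at most half of its channel's scale.
print("max abs error:", np.abs(w - w_restored).max())
```

The storage saving is what shrinks the .bin file; it says nothing by itself about which precision the runtime uses for intermediate tensors.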

My code is based on the example from here.

Then I compiled the model and noticed that its output is fp32. Since all calculations should be done in int8, this was surprising.

I ran the model on some random data, and the outputs were all non-integer values.

Since the arithmetic operations should be done in int8 during inference, I would expect the outputs to be integers, even though the model's output type is fp32.

Why is the output of the model fp32 and not int8 if I quantized it? Is this expected behavior and if so why?

Thank you in advance for the answer!

NOTE:

I'm running this on Intel(R) Core(TM) i9-10900X CPU @ 3.70GHz.

Also, I found the parameter INFERENCE_PRECISION_HINT, which is a "Hint for device to use specified precision for inference." (source here).

The only values this property allows are fp32 and bf16, which is also confusing: it seems to say that I can't use integer precision for inference, but I might be misinterpreting the documentation. Please correct me if I am wrong.


Hi,

Could you share:

- Your relevant model files (ONNX and also IR)

- Your conversion commands

Cordially,

Iffa


Hi!

Thank you for the quick response. I created a dummy model to showcase the problem since I can't share the original one.

I'm attaching the following files:

- dummy.onnx model

- dummy fp32 IR files

- dummy quantized IR files

I used the command

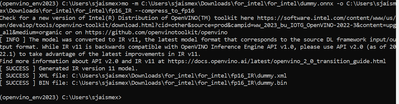

mo --input_model onnx-parts/dummy.onnx --output_dir dummy

to convert the model from ONNX to OpenVINO fp32 IR.

I did PTQ with the following script:

```python
import numpy as np
from addict import Dict

from openvino.tools.pot import IEEngine
from openvino.tools.pot import load_model, save_model
from openvino.tools.pot import compress_model_weights
from openvino.tools.pot import create_pipeline
from openvino.tools.pot import DataLoader

input_shape = (1, 3, 256, 256)


class DummyDataLoader(DataLoader):
    """Returns random tensors of `input_shape` instead of real images."""

    def __init__(self):
        pass

    def __len__(self):
        # A single random sample gives only weak calibration statistics;
        # real, representative data is preferable for accurate quantization.
        return 1

    def __getitem__(self, index):
        # Returns (data, annotation); DefaultQuantization needs no annotation.
        # np.random.rand returns float64, so cast to match the f32 model input.
        return np.random.rand(*input_shape).astype(np.float32), None


model_xml = "./dummy/dummy.xml"
model_bin = "./dummy/dummy.bin"
MODEL_NAME = "dummy"
COMPRESSED_PATH = "dummy"
COMPRESSED_NAME = "q_dummy"

# Step 1: Define the configurations.
# Model config specifies the name of the model and paths to .xml and .bin files of the model.
model_config = {
    "model_name": MODEL_NAME,
    "model": model_xml,
    "weights": model_bin,
}

# Engine config.
engine_config = Dict({"device": "CPU"})

algorithms = [
    {
        "name": "DefaultQuantization",
        "params": {
            "target_device": "CPU",
            "preset": "mixed",
        },
    }
]

data_loader = DummyDataLoader()

# Step 2: Load a model.
print("Loading model...")
model = load_model(model_config=model_config)
print("Loaded model.")

# Step 3: Initialize the engine for metric calculation and statistics collection.
engine = IEEngine(config=engine_config, data_loader=data_loader)

# Step 4: Create a pipeline of compression algorithms and run it.
pipeline = create_pipeline(algorithms, engine)
compressed_model = pipeline.run(model=model)

# Step 5 (Optional): Compress model weights to quantized precision
# to reduce the size of the final .bin file.
compress_model_weights(compressed_model)

# Step 6: Save the compressed model to the desired path.
# Set save_path to the directory where the model should be saved.
compressed_model_paths = save_model(
    model=compressed_model,
    save_path=COMPRESSED_PATH,
    model_name=COMPRESSED_NAME,
)
```

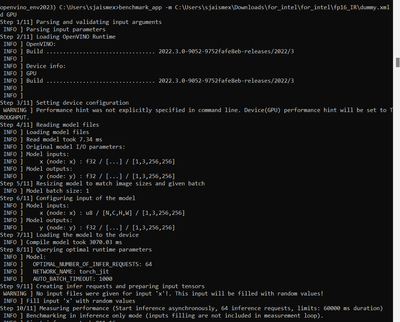

I also used the following script to compile the model.

```python
from openvino.runtime import Core

compressed_model_xml = "dummy/q_dummy.xml"
compressed_model_bin = "dummy/q_dummy.bin"

ie = Core()

# Read and load a quantized model.
quantized_model = ie.read_model(
    model=compressed_model_xml, weights=compressed_model_bin
)
quantized_compiled_model = ie.compile_model(model=quantized_model, device_name="CPU")

# quantized_compiled_model.inputs prints [<ConstOutput: names[x] shape[1,3,256,256] type: f32>]
# quantized_compiled_model.outputs prints [<ConstOutput: names[y] shape[1,3,256,256] type: f32>]
```

Additionally, I tried using the benchmark_app. It converts the input to u8 in a preprocessing step, but it does so only because of the shape of the input. I want my input to be u8 regardless of its shape, and I want to set that myself rather than have the benchmark_app do it.

I also have one new question.

In the zipped file you will also find a file q_dummy_graph.xml in the folder benchmark_app_output. This is the output graph I got by passing the -exec_graph_path option to the benchmark_app. I assume this is the graph of the model after compile_model has optimized it for execution on the device. Could you please point me to the part of the documentation that describes what happens during those steps?

Thank you for your help,

Karlo


Hi,

I checked and verified your model.

I found that the compressed model keeps the FP32 data type for most layers, even after conversion to FP16 or quantization.

OpenVINO compression works as follows: if the original model has FP32 weights or biases, they are compressed to FP16/INT8, but all intermediate data is kept in the original precision. This is why you keep seeing FP32 precision instead of FP16/INT8.

You may refer here for a comparison of an official quantized OpenVINO model with its original format.
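To make the "intermediate data stays FP32" point concrete: POT's DefaultQuantization expresses quantization in the IR by inserting FakeQuantize operations, and FakeQuantize takes and returns floating-point tensors; it only snaps values onto a grid of `levels` points. Below is a NumPy sketch of the formula from the FakeQuantize operation specification, assuming scalar (per-tensor) ranges with `input_low < input_high`; `np.round` stands in for the spec's rounding, and the function name is hypothetical.

```python
import numpy as np


def fake_quantize(x, input_low, input_high, output_low, output_high, levels):
    """NumPy sketch of the FakeQuantize formula (per-tensor ranges only).

    Input and output are both floating point: the element type never changes,
    only the set of values the tensor can hold.
    """
    x = np.asarray(x, dtype=np.float32)
    q = np.round((x - input_low) / (input_high - input_low) * (levels - 1))
    y = q / (levels - 1) * (output_high - output_low) + output_low
    # Values outside the input range saturate to the output limits.
    y = np.where(x <= input_low, output_low, y)
    y = np.where(x > input_high, output_high, y)
    return y.astype(np.float32)


x = np.array([-1.5, -0.3, 0.0, 0.2, 0.6483, 2.0], dtype=np.float32)
# 256 levels over [-1, 1]: an 8-bit grid with a step of 2/255.
y = fake_quantize(x, -1.0, 1.0, -1.0, 1.0, levels=256)
print(y.dtype)  # float32
print(y)        # grid values such as 0.647..., not integers
```

How the runtime turns these FakeQuantize ranges into actual int8 kernels is the job of the low-precision transformations described in the OpenVINO documentation.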

Cordially,

Iffa


Thank you for the answer, but a few things are still unclear.

1. If all the calculations are done in int8 (using integer arithmetic), why are the outputs of the model non-integer values such as 0.6483 rather than integers stored as f32 (for example, 7.0)?

2. How can I make the model inputs int8? Note that in the example I sent you, the inputs become int8 only in the benchmark_app, and only because the input is an image. If I run inference manually, the input is expected to be fp32.

Thank you for your answer.

Best,

Karlo


Hi,

After further clarification, we confirmed that quantization does not convert every operation; only the operations where it benefits performance are quantized.

For example, the Softmax operation is computed in floating-point precision even in a quantized model. In other words, FP32 outputs from the quantized model are expected behavior.
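Even for the operations that do run in int8, the result is rescaled back to floating point, which is why outputs like 0.6483 are expected rather than values like 7.0. A minimal NumPy sketch, assuming symmetric per-tensor int8 quantization without zero points (a simplification; the CPU plugin's actual kernels and where it places the rescale are not shown): the int8 products are accumulated in int32, then one floating-point multiply by the combined scale maps the result back to the original value range. `quantize_symmetric` is a hypothetical helper.

```python
import numpy as np


def quantize_symmetric(t, num_bits=8):
    """Hypothetical helper: per-tensor symmetric quantization, t ≈ scale * q."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    scale = float(np.abs(t).max()) / qmax
    q = np.clip(np.round(t / scale), -qmax, qmax).astype(np.int8)
    return q, scale


rng = np.random.default_rng(42)
x = rng.random((1, 16)).astype(np.float32)           # fp32 activations
w = rng.standard_normal((16, 4)).astype(np.float32)  # fp32 weights

qx, sx = quantize_symmetric(x)
qw, sw = quantize_symmetric(w)

# Integer-only part: int8 x int8 products accumulated in int32.
acc = qx.astype(np.int32) @ qw.astype(np.int32)

# Dequantization: a single float multiply by the combined scale.
y_quant = (acc * (sx * sw)).astype(np.float32)
y_ref = x @ w  # full-precision reference

print(acc.dtype, acc)  # exact integers from the int8 arithmetic
print(y_quant)         # non-integer fp32 values, close to y_ref
print("max abs error vs fp32:", np.abs(y_quant - y_ref).max())
```

The integer accumulator only has meaning together with its scales, so returning it directly as the model output would not give values comparable to the original fp32 model.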

Please look at https://docs.openvino.ai/latest/openvino_docs_OV_UG_lpt.html for more details on how OV runtime deals with quantized models.

Cordially,

Iffa


Hi,

Intel will no longer monitor this thread since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Cordially,

Iffa
