Hello, I have a custom VGG16 model (trained with transfer learning on a custom dataset) that I am trying to run inference with on a Raspberry Pi 4B using the MYRIAD NCS2 device. I have successfully installed OpenVINO for Raspberry Pi and used Windows for the model conversion. Unfortunately, I can't get inference running on my device.

I have tried to apply the suggestions here:

Running classification_demo.py gives me an error. I had a similar problem with the hello classification and async classification demos, until I was informed that those demos were only validated with AlexNet. In this instance, I get the following error from classification_demo.py:

Traceback (most recent call last):

File "classification_demo.py", line 25, in <module>

from openvino.model_zoo.model_api.models import Classification, OutputTransform

ModuleNotFoundError: No module named 'openvino.model_zoo'

I don't know how to install this module; this is one of the things I'd like help with.
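The wording of the error ("No module named 'openvino.model_zoo'" rather than "No module named 'openvino'") indicates that the `openvino` package itself was importable and only the `model_zoo` subpackage was not found. A minimal sketch for checking which level is missing in a given Python environment; `module_available` is a hypothetical helper written for this illustration, not part of OpenVINO:

```python
import importlib


def module_available(name: str) -> bool:
    """Return True if `name` can be imported by the current interpreter."""
    try:
        importlib.import_module(name)
    except ImportError:  # ModuleNotFoundError is a subclass of ImportError
        return False
    return True


if __name__ == "__main__":
    # Walk down the dotted path from the traceback, one level at a time.
    for name in (
        "openvino",
        "openvino.model_zoo",
        "openvino.model_zoo.model_api",
    ):
        status = "found" if module_available(name) else "missing"
        print(f"{name}: {status}")
```

Running this with the same `python3` that runs the demo shows whether the demo's dependency is absent from that interpreter or only from a different one.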

Secondly, the tutorial on how to build your own classifier gives me the following error:

E: [global] [ 121889] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 121889] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 121889] [python3] addEvent:360 Condition failed: event->header.flags.bitField.ack != 1

E: [global] [ 121889] [python3] addEventWithPerf:372 addEvent(event, timeoutMs) method call failed with an error: 3

E: [global] [ 121889] [python3] XLinkReadData:156 Condition failed: (addEventWithPerf(&event, &opTime, 0xFFFFFFFF))

E: [ncAPI] [ 121889] [python3] ncFifoReadElem:3295 Packet reading is failed.

Traceback (most recent call last):

File "inf.py", line 85, in <module>

result = classify(image_new)

File "inf.py", line 79, in classify

result = exec_net.infer(inputs = {input_placeholder_key:image})

File "ie_api.pyx", line 1062, in openvino.inference_engine.ie_api.ExecutableNetwork.infer

File "ie_api.pyx", line 1441, in openvino.inference_engine.ie_api.InferRequest.infer

File "ie_api.pyx", line 1463, in openvino.inference_engine.ie_api.InferRequest.infer

RuntimeError: Failed to read output from FIFO: NC_ERROR

E: [global] [ 121927] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 121928] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 121928] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 121928] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 122377] [python3] addEvent:360 Condition failed: event->header.flags.bitField.ack != 1

E: [global] [ 122377] [python3] addEventWithPerf:372 addEvent(event, timeoutMs) method call failed with an error: 3

E: [global] [ 122377] [python3] XLinkReadData:156 Condition failed: (addEventWithPerf(&event, &opTime, 0xFFFFFFFF))

E: [ncAPI] [ 122377] [python3] getGraphMonitorResponseValue:1902 XLink error, rc: X_LINK_ERROR

E: [global] [ 122377] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 122377] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 122377] [python3] addEvent:360 Condition failed: event->header.flags.bitField.ack != 1

E: [global] [ 122377] [python3] addEventWithPerf:372 addEvent(event, timeoutMs) method call failed with an error: 3

E: [global] [ 122377] [python3] XLinkReadData:156 Condition failed: (addEventWithPerf(&event, &opTime, 0xFFFFFFFF))

E: [ncAPI] [ 122377] [python3] getGraphMonitorResponseValue:1902 XLink error, rc: X_LINK_ERROR

E: [global] [ 122377] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_CLOSE_STREAM_REQ

E: [xLink] [ 122378] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 122378] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 122378] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 122378] [python3] addEvent:360 Condition failed: event->header.flags.bitField.ack != 1

E: [global] [ 122378] [python3] addEventWithPerf:372 addEvent(event, timeoutMs) method call failed with an error: 3

E: [global] [ 122378] [python3] XLinkReadData:156 Condition failed: (addEventWithPerf(&event, &opTime, 0xFFFFFFFF))

E: [ncAPI] [ 122378] [python3] getGraphMonitorResponseValue:1902 XLink error, rc: X_LINK_ERROR

E: [global] [ 122382] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_RESET_REQ

E: [xLink] [ 122382] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [ncAPI] [ 132382] [python3] ncDeviceClose:1852 Device didn't appear after reboot

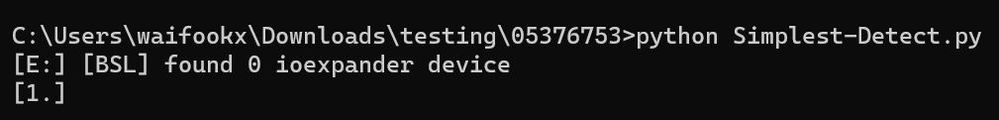
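A long XLink log like the one above is easier to read once the repeated lines are grouped. The following is a rough sketch, assuming every error line follows the `E: [component] [ number] [thread] function:line message` layout seen in this post; `summarize` and `LOG_LINE` are names made up for the illustration:

```python
import re
from collections import Counter

# Matches lines such as:
# E: [xLink] [ 121889] [Scheduler00Thr] sendEvents:1132 Event sending failed
LOG_LINE = re.compile(
    r"^E: \[(?P<component>\w+)\] \[\s*(?P<stamp>\d+)\] \[(?P<thread>\w+)\] "
    r"(?P<function>\w+):(?P<line>\d+)\s+(?P<message>.*)$"
)


def summarize(log_text: str) -> Counter:
    """Count error lines per (component, function) pair; skip other lines."""
    counts = Counter()
    for raw in log_text.splitlines():
        match = LOG_LINE.match(raw.strip())
        if match:
            counts[(match["component"], match["function"])] += 1
    return counts


if __name__ == "__main__":
    sample = (
        "E: [global] [ 121889] [Scheduler00Thr] dispatcherEventSend:54 "
        "Write failed (header) (err -4) | event XLINK_WRITE_REQ\n"
        "E: [xLink] [ 121889] [Scheduler00Thr] sendEvents:1132 "
        "Event sending failed\n"
    )
    for (component, function), n in summarize(sample).most_common():
        print(f"{component:8s} {function:24s} {n}")
```

The grouping only condenses the log; it does not by itself say why the write to the device failed.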

Lastly, the five-line inference example: I am not sure whether it works for image classification, but it gives me [[1.]] for every sample image I test, and I am not sure what is wrong here either.

My model classifies thermal images into two classes, positive and negative, and was trained with binary cross-entropy. For reproducibility of the errors I encountered, I have uploaded the model to Google Drive:

The h5 model has been attached to the question

I'd like to ask for help running my classification model with the NCS2 device on my Raspberry Pi.

Hi Publio,

Thanks for reaching out to us.

We are investigating this issue and we will update you at the earliest.

Regards,

Wan

Hi Wan, thanks for replying to my question.

Some additional information:

- The model was trained using Tensorflow 2.8

- I converted the model on Windows 10 with the following command:

python mo.py --data_type=FP16 -b=1 --reverse_input_channels --model_name custom_model --input_model "C:\Users\Downloads\custom_model.pb" --output_dir "C:\Users\Documents\model_output"
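For anyone repeating the conversion from a script, the same invocation can be assembled as an argument list so that the Windows paths need no manual quoting. This is only a sketch: `build_mo_command` is a hypothetical helper, and it uses the flags and paths from the command above and nothing else.

```python
def build_mo_command(input_model, output_dir, model_name="custom_model"):
    """Return the Model Optimizer call from the post as an argument list."""
    return [
        "python", "mo.py",
        "--data_type=FP16",           # FP16 precision, as in the post
        "-b=1",                       # batch size 1, as in the post
        "--reverse_input_channels",   # swap the input channel order
        "--model_name", model_name,
        "--input_model", input_model,
        "--output_dir", output_dir,
    ]


if __name__ == "__main__":
    cmd = build_mo_command(
        r"C:\Users\Downloads\custom_model.pb",
        r"C:\Users\Documents\model_output",
    )
    print(cmd)
```

The list can be passed to `subprocess.run(cmd, check=True)` from the directory that contains `mo.py`.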

Hi Publio,

Thanks for your information.

Referring to your first post, we noticed that you created your inference script based on this video: Inference in Five Lines of Code. You have also successfully run your classification model with the NCS2 device on your Raspberry Pi. However, you obtained an unexpected inference result ([[1.]]) for every sample image you tested.

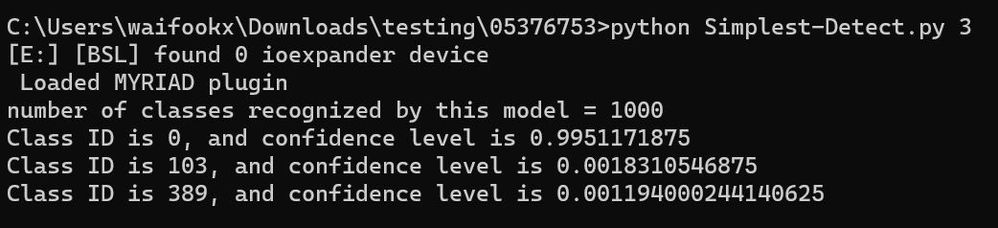

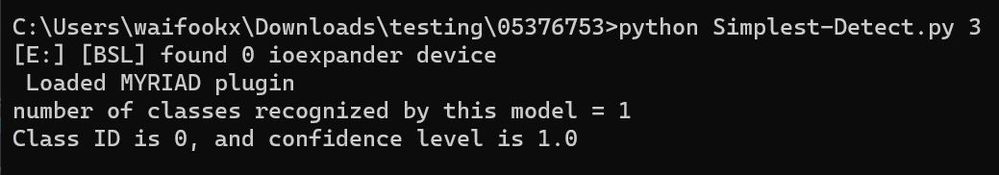

Note that you can run your custom model with the MYRIAD plugin in an OpenCV environment.

In our testing of your model with the MYRIAD plugin in an inference pipeline built on the Inference Engine Python API, we can confirm that execution halts. We suspect this is due to an incompatibility between your model and the Inference Engine Python API.

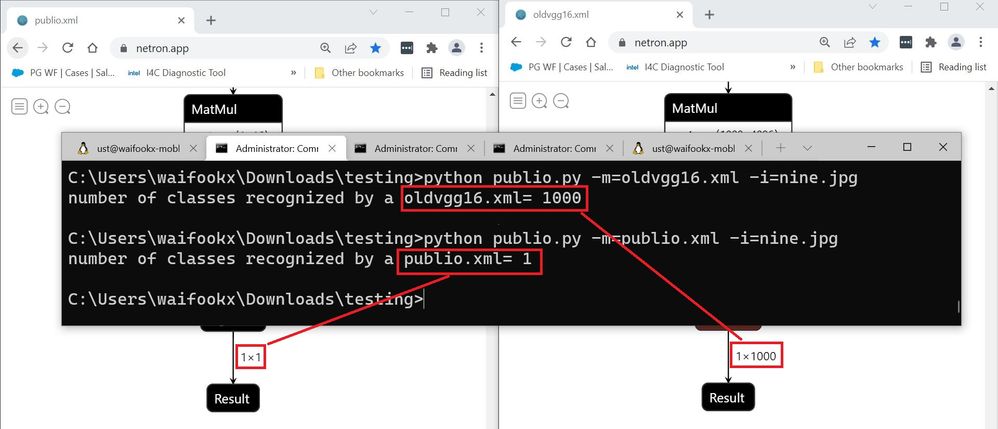

We validated the Open Model Zoo public pre-trained VGG16 model and your custom model using your custom inference script. We visualized the public pre-trained VGG16 Intermediate Representation (IR) and your custom VGG16 IR using Netron, a visualizer for neural network, deep learning, and machine learning models.

The results are as follows:

1. Public Pre-Trained VGG16 model

· We obtained correct results when running your custom inference script with the public pre-trained VGG16 model.

· Explanation: For the public pre-trained VGG16 model, the output shape is 1000, which means there are 1000 classes, matching those in the ImageNet 2012 database.

2. Your custom VGG16 model

· We get the same result as you, a confidence level of 1.0, when running your custom inference script with your custom VGG16 model.

· Explanation: For your custom VGG16 model, the output shape is 1, which means there is only 1 class to be classified.

Therefore, we can conclude that the result you obtained is expected, given your current IR model.
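The thread does not say which activation the custom model's single output passes through, so the following only illustrates a general property and is not a diagnosis of this model. A softmax over a single value always evaluates to 1.0 regardless of the input, whereas a sigmoid over the same value varies between 0 and 1. A minimal sketch in plain Python:

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(logit):
    """Logistic function, the usual pairing with binary cross-entropy."""
    return 1.0 / (1.0 + math.exp(-logit))


if __name__ == "__main__":
    for logit in (-3.0, 0.0, 3.0):
        # With one output unit, softmax normalizes the value against itself.
        print(logit, softmax([logit]), sigmoid(logit))
```

If a one-unit head is being normalized this way somewhere in the pipeline, a constant [[1.]] would follow for every image. Checking the final layer of the IR in Netron is one way to see whether that is the case here.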

Regards,

Wan

Hi Wan, thanks for your efforts in helping me with this issue. Is there any way to verify what in the model is not compatible with the Inference Engine API? Additionally, my model gives the same confidence level for any image that I attempt to classify. Are you able to reproduce the results with these images?

I wonder if there is something wrong with the model itself.

Hi Publio,

Thanks for your information.

We've validated one of your images using your custom code, and this is the result:

You can continue with the rest of the images.

For your second question, we would normally recommend looking at this page for supported configurations and devices.

On another note, we have replicated your issue and provided a solution for running inference with VGG16 and your custom model, using your custom code, on a random image and a thermal image.

We believe that our support so far would enable you to continue and complete your work.

Regards,

Wan

Hi Publio,

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Munesh
