- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

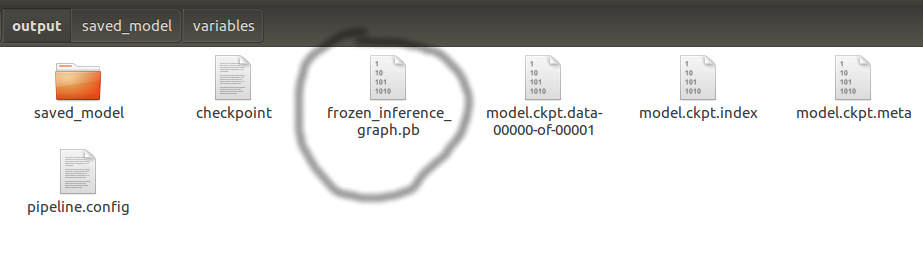

I want to compile a TensorFlow graph into a Movidius graph. I used the model zoo's ssd_mobilenet_v1_coco model and trained it on my own dataset.

This gives me a frozen_inference_graph.pb for object_detection.py.

I don't have anything like the output_node_name shown in your documentation [https://movidius.github.io/ncsdk/tf_modelzoo.html].

After training my model on ssd_mobilenet_v1, I created `frozen_inference_graph.pb`, but I don't have any output node names.

Please help me figure this out. Thanks!

Tags: NCSDK, Tensorflow


So TF-Slim has been discontinued by TensorFlow (https://github.com/tensorflow/models/issues/539#issuecomment-363954823), and the NCS supports the Slim implementation? You should remove the blog post claiming TensorFlow support, because it doesn't work (correct me if I'm wrong).


Hello abhi,

To compile SSD models, you need to use the --tf-ssd-config parameter, which was recently added in version 2.10.

You can follow this guide to create an ssd.config file:

https://movidius.github.io/ncsdk/tools/tf_ssd_config.html

Then you can run the following command to compile your model:

mvNCCompile -s 12 <Path to .pb file> --tf-ssd-config <Path to ssd.config file>
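The flag has a single hyphen between `tf` and `ssd`; several later attempts in this thread fail with `--tf--ssd-config`. As a hedged illustration only, a small pre-flight check in Python can compare long options against the ones listed in the usage text that mvNCCompile prints, and suggest the closest match. `check_flags` is a hypothetical helper, not part of the NCSDK, and it does not model argparse's prefix abbreviations:

```python
import difflib
import shlex

# Long options listed in the mvNCCompile v02.00 usage text quoted in this thread
# ("--help" is argparse's long form of -h).
KNOWN_LONG_FLAGS = [
    "--help", "--nce-slices", "--accuracy_adjust", "--ma2480", "--scheduler",
    "--old-parser", "--force_TF_symmetric_pad", "--input-layout",
    "--output-layout", "--cpp", "--tf-ssd-config",
]


def check_flags(argv):
    """Return (unknown_flag, closest_known_flag or None) for each unrecognized long option."""
    problems = []
    for arg in argv:
        if not arg.startswith("--"):
            continue
        name = arg.split("=", 1)[0]  # also accept the --flag=value form
        if name in KNOWN_LONG_FLAGS:
            continue
        guess = difflib.get_close_matches(name, KNOWN_LONG_FLAGS, n=1)
        problems.append((name, guess[0] if guess else None))
    return problems


if __name__ == "__main__":
    cmd = "mvNCCompile -s 12 frozen_inference_graph.pb --tf--ssd-config mymodel.config"
    for flag, suggestion in check_flags(shlex.split(cmd)):
        print("unrecognized {!r}; did you mean {!r}?".format(flag, suggestion))
```

The helper avoids f-strings so it also runs on the Python 3.5 interpreter seen in the tracebacks.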

Regards,

Aroop


@Aroop_at_Intel Also, once I have the graph from the compile command, how do I use it for inference on my webcam feed? I know how to do object detection using a TensorFlow graph, but I don't know how to use a Movidius graph for object detection. Please suggest something, thanks.


Or does the Caffe inference approach shown here also work? https://www.pyimagesearch.com/2018/02/19/real-time-object-detection-on-the-raspberry-pi-with-the-movidius-ncs/


@Aroop_at_Intel This is my trained model. I have only one class for detection.

ncs@ncs-VirtualBox:~/Downloads$ mvNCCompile -s 12 frozen_inference_graph.pb --tf--ssd-config pipeline.config

/usr/lib/python3/dist-packages/scipy/stats/morestats.py:16: DeprecationWarning: Importing from numpy.testing.decorators is deprecated, import from numpy.testing instead.

from numpy.testing.decorators import setastest

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 96, got 88

return f(*args, **kwds)

/usr/local/bin/ncsdk/Controllers/Parsers/TensorFlowParser/Convolution.py:47: SyntaxWarning: assertion is always true, perhaps remove parentheses?

assert(False, "Layer type not supported by Convolution: " + obj.type)

mvNCCompile v02.00, Copyright @ Intel Corporation 2017

usage: mvNCCompile [-h] [-w WEIGHTS] [-in INPUTNODE] [-on OUTPUTNODE]

[-o OUTFILE] [-s NSHAVES] [--nce-slices NCE_SLICES]

[-is INPUTSIZE INPUTSIZE] [-ec]

[--accuracy_adjust [ACCURACY_ADJUST]] [--ma2480]

[--scheduler SCHEDULER] [--old-parser]

[--force_TF_symmetric_pad] [-i IMAGE] [-S SCALE] [-M MEAN]

[--input-layout ] [--output-layout ] [--cpp]

[--tf-ssd-config [TF_SSD_CONFIG]]

network

mvNCCompile: error: unrecognized arguments: --tf--ssd-config pipeline.config

I get this weird error. I think my command is absolutely correct; I verified it here: https://forums.intel.com/s/question/0D50P00004NM0gISAT/unable-to-compile-tensorflow-mobilenet-ssd-coco-model-from-tf-object-detection-app-zoo-using-ncsdk2

https://forums.intel.com/s/question/0D50P00004NM0glSAD/ncsdk-210-option-tfssdconfig#latest

Also, this person gets the same error I'm getting, and was told that NCSDK support has been dropped and to move to OpenVINO.

Now I have two questions:

1) Can Intel provide better support, with clear steps for the TensorFlow ssd_mobilenet_v1_coco model trained on a custom dataset?

2) Or should I continue with the Caffe implementation that everyone else is using online?

Thanks. It's such a pain to get quick support from Intel even after investing money in the chip. Also, the documentation is extremely unclear :(


Hi abhi,

Your command appears to be incorrect. The correct flag is "--tf-ssd-config" (as shown in my previous reply and in the usage text printed in your error message), and you need to specify the path to your ssd.config file after the flag.

To create the ssd.config file, follow this guide:

https://movidius.github.io/ncsdk/tools/tf_ssd_config.html

Then create an ssd.config file that matches the example shown in the documentation linked above, adjusting the values to match your model if needed.
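Judging from the configs posted in this thread (not from NCSDK documentation), the ssd.config values appear to come from the training pipeline.config: `input_height`/`input_width` from the `fixed_shape_resizer` (300x300, not the raw image size), `num_classes`, the `batch_non_max_suppression` thresholds and limits, the four `faster_rcnn_box_coder` scales as `var` entries, and `score_converter`. Long decimals such as 9.99999993923e-09 look like the float32 value of 1e-8 printed to 12 significant digits. Another example config in this thread uses `var` values of 0.1 and 0.2 (the reciprocals), so treat this mapping as something to verify. A sketch that writes the file under these assumptions; `make_ssd_config` and `f32_text` are hypothetical helpers:

```python
import struct


def f32_text(value):
    """Round to float32 and print 12 significant digits (assumed to mirror the thread's values)."""
    as_f32 = struct.unpack("<f", struct.pack("<f", value))[0]
    return "%.12g" % as_f32


def make_ssd_config(num_classes, height, width, score_threshold, iou_threshold,
                    max_detections_per_class, max_total_detections, box_scales,
                    score_converter="SIGMOID", background_label_id=0):
    """Build ssd.config text.

    box_scales is assumed to be (y_scale, x_scale, height_scale, width_scale)
    from the faster_rcnn_box_coder block of pipeline.config.
    """
    if len(box_scales) != 4:
        raise ValueError("expected four box coder scales")
    lines = [
        "input_height: {}".format(height),
        "input_width: {}".format(width),
        "postprocessing_params {",
        "  num_classes: {}".format(num_classes),
        "  background_label_id: {}".format(background_label_id),
        "  max_detections: {}".format(max_total_detections),
        "  nms_params {",
        "    score_threshold: {}".format(f32_text(score_threshold)),
        "    iou_threshold: {}".format(f32_text(iou_threshold)),
        "    max_detections_per_class: {}".format(max_detections_per_class),
        "  }",
        "  box_params {",
    ]
    lines += ["    var: {}".format(float(scale)) for scale in box_scales]
    lines += ["  }", "}", "score_converter: {}".format(score_converter)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Values from the ssd_mobilenet_v1 pipeline.config posted in this thread.
    print(make_ssd_config(num_classes=1, height=300, width=300,
                          score_threshold=1e-8, iou_threshold=0.6,
                          max_detections_per_class=100, max_total_detections=100,
                          box_scales=(10.0, 10.0, 5.0, 5.0)), end="")
```

Save the printed text as ssd.config and pass its path after --tf-ssd-config. Plain values such as 1e-8 and 0.6 probably parse the same way; the float32 formatting only reproduces the text seen in the thread.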

Lastly, run this command:

mvNCCompile -s 12 <Path to .pb file> --tf-ssd-config <Path to ssd.config file>

Make sure that you replace <Path to ssd.config file> with the path to the ssd.config file you created.

To install version 2.10, you need to specify the branch in your git clone command:

git clone -b ncsdk2 https://github.com/movidius/ncsdk.git

Take a look at the following sample code for running inference with a Movidius graph:

https://github.com/movidius/ncappzoo/tree/ncsdk2/apps/video_objects
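For reference, the Caffe SSD MobileNet examples (the ncappzoo samples and the PyImageSearch article linked earlier in this thread) read the network output as one flat array: the first value is the number of valid detections, the next six are unused, and each detection then takes seven values (image_id, class_id, score, and left/top/right/bottom box coordinates normalized to 0.0-1.0). Assuming a graph compiled with --tf-ssd-config produces the same layout (worth confirming by printing the raw output once), a minimal parser could look like this; `parse_ssd_output` and the 0.5 score cut-off are illustrative choices:

```python
import math
from collections import namedtuple

# One detection; box coordinates are normalized to [0, 1].
Detection = namedtuple("Detection", "class_id score left top right bottom")


def parse_ssd_output(output, min_score=0.5):
    """Parse a flat SSD output array, assuming the layout described above:
    output[0] = number of valid detections, output[1:7] unused, then
    7 values per detection: image_id, class_id, score, left, top, right, bottom.
    """
    detections = []
    num_valid = int(output[0])
    for i in range(num_valid):
        base = 7 + 7 * i
        if base + 7 > len(output):
            break  # truncated output: stop rather than read past the end
        _, class_id, score, left, top, right, bottom = output[base:base + 7]
        values = (class_id, score, left, top, right, bottom)
        # Skip entries containing NaN/inf, then low-confidence ones.
        if not all(math.isfinite(float(v)) for v in values):
            continue
        if float(score) < min_score:
            continue
        detections.append(Detection(int(class_id), float(score), float(left),
                                    float(top), float(right), float(bottom)))
    return detections
```

Pass it the array returned by your inference call (in the NCSDK 2 samples, the result read back from the output FIFO); it accepts a plain list or a NumPy array.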

Regards,

Aroop


I get this error while trying to compile the graph:

ncs@ncs-VirtualBox:~/Downloads$ mvNCCompile -s 12 ~/Downloads/frozen_inference_graph.pb --tf-ssd-config ~/Downloads/ssd_mobilenet_v1_coco.config

/usr/lib/python3/dist-packages/scipy/stats/morestats.py:16: DeprecationWarning: Importing from numpy.testing.decorators is deprecated, import from numpy.testing instead.

from numpy.testing.decorators import setastest

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 96, got 88

return f(*args, **kwds)

/usr/local/bin/ncsdk/Controllers/Parsers/TensorFlowParser/Convolution.py:47: SyntaxWarning: assertion is always true, perhaps remove parentheses?

assert(False, "Layer type not supported by Convolution: " + obj.type)

mvNCCompile v02.00, Copyright @ Intel Corporation 2017

/home/ncs/Downloads/frozen_inference_graph.pb

Traceback (most recent call last):

File "/usr/local/lib/python3.5/dist-packages/tensorflow/python/framework/importer.py", line 418, in import_graph_def

graph._c_graph, serialized, options) # pylint: disable=protected-access

tensorflow.python.framework.errors_impl.InvalidArgumentError: NodeDef mentions attr 'T' not in Op<name=NonMaxSuppressionV3; signature=boxes:float, scores:float, max_output_size:int32, iou_threshold:float, score_threshold:float -> selected_indices:int32>; NodeDef: {{node Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/non_max_suppression/NonMaxSuppressionV3}} = NonMaxSuppressionV3[T=DT_FLOAT](Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/unstack, Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/Reshape, Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/Minimum, Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/non_max_suppression/iou_threshold, Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/non_max_suppression/score_threshold). (Check whether your GraphDef-interpreting binary is up to date with your GraphDef-generating binary.).

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/bin/mvNCCompile", line 208, in <module>

args.old_parser, args.cpp, args)

File "/usr/local/bin/mvNCCompile", line 186, in create_graph

load_ret = load_network(args, parser, myriad_config)

File "/usr/local/bin/ncsdk/Controllers/Scheduler.py", line 67, in load_network

p.loadNetworkObjects(arguments.net_description, arguments.net_weights)

File "/usr/local/bin/ncsdk/Controllers/Parsers/TensorFlow.py", line 562, in loadNetworkObjects

tf.import_graph_def(graph_def, name="")

File "/usr/local/lib/python3.5/dist-packages/tensorflow/python/util/deprecation.py", line 488, in new_func

return func(*args, **kwargs)

File "/usr/local/lib/python3.5/dist-packages/tensorflow/python/framework/importer.py", line 422, in import_graph_def

raise ValueError(str(e))

ValueError: NodeDef mentions attr 'T' not in Op<name=NonMaxSuppressionV3; signature=boxes:float, scores:float, max_output_size:int32, iou_threshold:float, score_threshold:float -> selected_indices:int32>; NodeDef: {{node Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/non_max_suppression/NonMaxSuppressionV3}} = NonMaxSuppressionV3[T=DT_FLOAT](Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/unstack, Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/Reshape, Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/Minimum, Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/non_max_suppression/iou_threshold, Postprocessor/BatchMultiClassNonMaxSuppression/map/while/MultiClassNonMaxSuppression/non_max_suppression/score_threshold). (Check whether your GraphDef-interpreting binary is up to date with your GraphDef-generating binary.).

I'm posting the ssd-mobilenet-v1.config that I used to train the model:

# SSD with Mobilenet v1 configuration for MSCOCO Dataset.

# Users should configure the fine_tune_checkpoint field in the train config as

# well as the label_map_path and input_path fields in the train_input_reader and

# eval_input_reader. Search for "PATH_TO_BE_CONFIGURED" to find the fields that

# should be configured.

model {

ssd {

num_classes: 1

box_coder {

faster_rcnn_box_coder {

y_scale: 10.0

x_scale: 10.0

height_scale: 5.0

width_scale: 5.0

}

}

matcher {

argmax_matcher {

matched_threshold: 0.5

unmatched_threshold: 0.5

ignore_thresholds: false

negatives_lower_than_unmatched: true

force_match_for_each_row: true

}

}

similarity_calculator {

iou_similarity {

}

}

anchor_generator {

ssd_anchor_generator {

num_layers: 6

min_scale: 0.2

max_scale: 0.95

aspect_ratios: 1.0

aspect_ratios: 2.0

aspect_ratios: 0.5

aspect_ratios: 3.0

aspect_ratios: 0.3333

}

}

image_resizer {

fixed_shape_resizer {

height: 300

width: 300

}

}

box_predictor {

convolutional_box_predictor {

min_depth: 0

max_depth: 0

num_layers_before_predictor: 0

use_dropout: false

dropout_keep_probability: 0.8

kernel_size: 1

box_code_size: 4

apply_sigmoid_to_scores: false

conv_hyperparams {

activation: RELU_6,

regularizer {

l2_regularizer {

weight: 0.00004

}

}

initializer {

truncated_normal_initializer {

stddev: 0.03

mean: 0.0

}

}

batch_norm {

train: true,

scale: true,

center: true,

decay: 0.9997,

epsilon: 0.001,

}

}

}

}

feature_extractor {

type: 'ssd_mobilenet_v1'

min_depth: 16

depth_multiplier: 1.0

conv_hyperparams {

activation: RELU_6,

regularizer {

l2_regularizer {

weight: 0.00004

}

}

initializer {

truncated_normal_initializer {

stddev: 0.03

mean: 0.0

}

}

batch_norm {

train: true,

scale: true,

center: true,

decay: 0.9997,

epsilon: 0.001,

}

}

}

loss {

classification_loss {

weighted_sigmoid {

}

}

localization_loss {

weighted_smooth_l1 {

}

}

hard_example_miner {

num_hard_examples: 3000

iou_threshold: 0.99

loss_type: CLASSIFICATION

max_negatives_per_positive: 3

min_negatives_per_image: 0

}

classification_weight: 1.0

localization_weight: 1.0

}

normalize_loss_by_num_matches: true

post_processing {

batch_non_max_suppression {

score_threshold: 1e-8

iou_threshold: 0.6

max_detections_per_class: 100

max_total_detections: 100

}

score_converter: SIGMOID

}

}

}

train_config: {

batch_size: 24

optimizer {

rms_prop_optimizer: {

learning_rate: {

exponential_decay_learning_rate {

initial_learning_rate: 0.004

decay_steps: 800720

decay_factor: 0.95

}

}

momentum_optimizer_value: 0.9

decay: 0.9

epsilon: 1.0

}

}

fine_tune_checkpoint: "/home/redtwo/nsir/ssd_mobilenet_v1_coco_2018_01_28/model.ckpt"

from_detection_checkpoint: true

# Note: The below line limits the training process to 200K steps, which we

# empirically found to be sufficient enough to train the pets dataset. This

# effectively bypasses the learning rate schedule (the learning rate will

# never decay). Remove the below line to train indefinitely.

num_steps: 200000

data_augmentation_options {

random_horizontal_flip {

}

}

data_augmentation_options {

ssd_random_crop {

}

}

}

train_input_reader: {

tf_record_input_reader {

input_path: "/home/redtwo/nsir/pascal.record"

}

label_map_path: "/home/redtwo/nsir/label.pbtxt"

}

eval_config: {

num_examples: 8000

# Note: The below line limits the evaluation process to 10 evaluations.

# Remove the below line to evaluate indefinitely.

max_evals: 10

}

eval_input_reader: {

tf_record_input_reader {

input_path: "/home/redtwo/nsir/pascal.record"

}

label_map_path: "/home/redtwo/nsir/label.pbtxt"

shuffle: false

num_readers: 1

}


@Aroop_at_Intel I'm not sure I understand what you mean by ssd.config. Do I need to write my own custom .config file?

Does the input size refer to the size of the training and validation images? Mine are 640x300.


@Aroop_at_Intel I also tried this mymodel.config (https://drive.google.com/file/d/1w0BEfq0hohJ5I3wxfqYJe9CPyng5cAZI/view), which I later found someone using with the model zoo's SSD MobileNet.

mvNCCompile -s 12 frozen_inference_graph.pb --tf--ssd-config mymodel.config

input_height: 300

input_width: 300

postprocessing_params {

num_classes: 1

background_label_id: 0

max_detections: 100

nms_params {

score_threshold: 9.99999993923e-09

iou_threshold: 0.600000023842

max_detections_per_class: 100

}

box_params {

var: 10.0

var: 10.0

var: 5.0

var: 5.0

}

}

score_converter: SIGMOID

But it gave me an error:

ncs@ncs-VirtualBox:~/Downloads$ mvNCCompile -s 12 frozen_inference_graph.pb --tf--ssd-config mymodel.config

/usr/lib/python3/dist-packages/scipy/stats/morestats.py:16: DeprecationWarning: Importing from numpy.testing.decorators is deprecated, import from numpy.testing instead.

from numpy.testing.decorators import setastest

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 96, got 88

return f(*args, **kwds)

/usr/local/bin/ncsdk/Controllers/Parsers/TensorFlowParser/Convolution.py:47: SyntaxWarning: assertion is always true, perhaps remove parentheses?

assert(False, "Layer type not supported by Convolution: " + obj.type)

mvNCCompile v02.00, Copyright @ Intel Corporation 2017

usage: mvNCCompile [-h] [-w WEIGHTS] [-in INPUTNODE] [-on OUTPUTNODE]

[-o OUTFILE] [-s NSHAVES] [--nce-slices NCE_SLICES]

[-is INPUTSIZE INPUTSIZE] [-ec]

[--accuracy_adjust [ACCURACY_ADJUST]] [--ma2480]

[--scheduler SCHEDULER] [--old-parser]

[--force_TF_symmetric_pad] [-i IMAGE] [-S SCALE] [-M MEAN]

[--input-layout ] [--output-layout ] [--cpp]

[--tf-ssd-config [TF_SSD_CONFIG]]

network

mvNCCompile: error: unrecognized arguments: --tf--ssd-config mymodel.config


However, this .config works with this model, shared by the person who posted it: https://drive.google.com/file/d/17HdXplYI6OYINULMBuaYf_aH-ewt9B0c/view

NOW, AROOP, please tell us what we should do. We ordered 4 sticks, both version 1 and version 2, and the support is extremely bad. The documentation sucks. We are stuck with no solution at all. Also, Caffe is an extremely unpopular framework for Intel to be working with; we can't use Caffe. We can only go forward with TensorFlow, or PyTorch in the worst case.


You can download my model from here: https://drive.google.com/open?id=1U5jp-Wow5alxJ-UjCPwU37QXna3gkKku

The frozen graph is in this folder.


Hi abhi,

I am not able to access your model from the link you provided; please check the share permissions.

I apologize that you are having trouble getting started.

The ssd-mobilenet-v1.config file is used for training the network and will not work here. The ssd.config file should look similar to the following:

input_height: 300

input_width: 300

postprocessing_params {

num_classes: 91

background_label_id: 0

max_detections: 100

nms_params {

score_threshold: 0.300000011921

iou_threshold: 0.600000023842

max_detections_per_class: 100

}

box_params {

var: 0.1

var: 0.1

var: 0.2

var: 0.2

}

}

score_converter: SIGMOID

Your command has an extra "-" between "tf" and "ssd". Please use the following command:

mvNCCompile -s 12 frozen_inference_graph.pb --tf-ssd-config mymodel.config

However, since you purchased both versions of the Intel Neural Compute Sticks I recommend that you use the OpenVINO toolkit as it has support for both sticks. The Intel Movidius Neural Compute SDK only supports the original Neural Compute Stick.

Regards,

Aroop


@Aroop_at_Intel You can have a look at the model; I sent it to you in a PM.

I changed the sharing permissions. I'm in talks with another person from Intel, but sadly that hasn't worked either. Please, let's figure out this process here.


Hi abhi,

The issue seems to be caused by a mismatch between the TensorFlow version used to train the model and the version installed with the NCSDK. Please use the following steps to upgrade TensorFlow and compile your model.

#Remove Tensorflow

pip3 uninstall tensorflow

#Install Tensorflow Version 1.12

pip3 install tensorflow==1.12

#Compile the model

mvNCCompile frozen_inference_graph.pb --tf-ssd-config ssd.config -s 12

The ssd.config file should be exactly like the following:

input_height: 300

input_width: 300

postprocessing_params {

num_classes: 1

background_label_id: 0

max_detections: 100

nms_params {

score_threshold: 9.99999993923e-09

iou_threshold: 0.600000023842

max_detections_per_class: 100

}

box_params {

var: 10.0

var: 10.0

var: 5.0

var: 5.0

}

}

score_converter: SIGMOID

Regards,

Aroop
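The NonMaxSuppressionV3 error earlier in the thread is TensorFlow reporting that the frozen graph was produced by a newer TensorFlow than the one mvNCCompile imports ("Check whether your GraphDef-interpreting binary is up to date with your GraphDef-generating binary"). A hedged sketch of a pre-flight check: it imports TensorFlow in whatever interpreter runs it (run it with the same python3 that mvNCCompile uses) and compares against 1.12, the version recommended above. `version_tuple`, `is_at_least` and `installed_version` are hypothetical helpers, and the right minimum depends on which TensorFlow version exported your graph:

```python
import importlib


def version_tuple(version):
    """'1.12.0rc1' -> (1, 12, 0); missing or non-numeric parts count as 0."""
    parts = []
    for piece in version.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def is_at_least(installed, required):
    """True if the installed version is >= the required one."""
    return version_tuple(installed) >= version_tuple(required)


def installed_version(module_name="tensorflow"):
    """Import the module and return its __version__, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


if __name__ == "__main__":
    required = "1.12"  # version recommended in this thread; match your export version
    found = installed_version()
    if found is None:
        print("TensorFlow is not importable from this interpreter")
    elif not is_at_least(found, required):
        print("TensorFlow {} is older than {}; the frozen graph may fail to import".format(found, required))
    else:
        print("TensorFlow {} looks new enough".format(found))
```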


@Aroop_at_Intel First of all, thanks a lot. It worked, using NCSDK 2 with the first Compute Stick (NCSDK 2 doesn't work with Compute Stick 2). However, I still need OpenVINO working, which @Intel_Jesus has so far not managed to convert. I'll follow up on the other thread for OpenVINO support: https://forums.intel.com/s/question/0D70P000006R8Fb/failed-to-convert-tensorflow-ssd-mobilnetv1-coco-model-with-openvino?language=en_US&s1oid=00DU0000000YT3c&s1nid=0DB0P000000U1Hq&emkind=chatterCommentNotification&s1uid=0050P000008W6MN&emtm=1564009027332&fromEmail=1&s1ext=0

Thanks again. Now I'm using the graph for detection, and the output seems fine and predicts well, but I'm not able to draw the detections (bounding boxes). I think I have messed up some classes and labelling. I'll fix that and update you. Thanks a lot. Great work and support :)
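When boxes don't show up, two usual suspects are the class-to-label mapping and the conversion from normalized to pixel coordinates. With `background_label_id: 0` in the ssd.config, a single-class model most likely reports its class as id 1, so a label list needs a background entry at index 0 (an assumption; print the raw class ids to confirm). Boxes should also be scaled by the size of the frame you draw on, not the 300x300 network input. A sketch with a hypothetical label name `my_object`:

```python
# Hypothetical label list: index 0 is the background slot (background_label_id: 0),
# index 1 the single trained class. Replace "my_object" with your own class name.
LABELS = ("background", "my_object")


def label_for(class_id, labels=LABELS):
    """Map a detected class id to a display name, tolerating unexpected ids."""
    if 0 <= class_id < len(labels):
        return labels[class_id]
    return "unknown({})".format(class_id)


def to_pixel_box(box, width, height):
    """Convert a normalized (left, top, right, bottom) box to integer pixel
    coordinates of a width x height frame, clamping to the frame edges."""
    left, top, right, bottom = (min(max(float(v), 0.0), 1.0) for v in box)
    return (int(round(left * width)), int(round(top * height)),
            int(round(right * width)), int(round(bottom * height)))
```

For example, `to_pixel_box((0.25, 0.5, 0.75, 1.0), 640, 480)` gives `(160, 240, 480, 480)`; the first two and last two values can be passed as the corner points to OpenCV's `cv2.rectangle`.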


Hi abhi,

I am glad that I could help you with your problem. Feel free to follow up if you have any further questions.

Regards,

Aroop
