Hello everyone,

I have a question about converting a TensorFlow 2 SavedModel to an OpenVINO IR model.

Can you please provide some information on this?

I trained the sample code below, which uses keras.layers.LSTM, in TensorFlow 2, saved the trained model in SavedModel format, and tried to convert it with mo, but I got the error shown below.

Does OpenVINO 2022.3 not support Keras LSTM layers?

If there is a workaround, please let me know.

Thank you in advance.

----------------------------------------------

OS Ubuntu20.04

Python 3.9.2

tensorflow 2.9.3

cuda 11.6

cpu intel i9

GPU nvidia RTX2080

openVINO 2022.3

----------------------------------------------

//

////////////////////////////////////////////////

// sample code : lstm_code.py

//

import shutil
from pathlib import Path

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow.keras import Model
from tensorflow.keras.layers import Dense, LSTM, Input

# Start from an empty ./saved_model directory.
cwdPath = Path().cwd()
savedModelPath = cwdPath / "saved_model"
if savedModelPath.exists():
    shutil.rmtree(savedModelPath)
savedModelPath.mkdir()

# time series data: an exponentially damped sine wave
nSteps = 100
num_samples = 1000

wt = np.linspace(0, 20*np.pi, num_samples)
w = np.exp(-wt/wt[-1])
signal = w * np.sin(wt)
print(f'*signal.shape = {signal.shape}')

# Sliding windows: each input holds nSteps samples, the target is the next sample.
X = []
y = []
batchSiz = len(signal) - nSteps - 1
print(f'*Batch L = {batchSiz}')
for i in range(batchSiz):
    X.append(signal[i:i+nSteps])
    y.append(signal[i+nSteps])  # the sample that follows the window
X = np.array(X)
y = np.array(y)
X = X.reshape((X.shape[0], X.shape[1], 1))
y = y.reshape((y.shape[0], 1))

# keras model
hdim = 50
miniBatchSiz = 32
nOutputs = 1
nEpochs = 5

inputs = Input(shape=(nSteps, 1), name="inp")
# The LSTM layer takes its input shape from `inputs`; no input_shape argument is needed.
x = LSTM(hdim)(inputs)
outputs = Dense(nOutputs, activation="linear", name="outp")(x)
model = Model(inputs=inputs, outputs=outputs)
model.compile(loss='mean_squared_error', optimizer='adam')
model.summary()

model.fit(X, y, epochs=nEpochs, batch_size=miniBatchSiz)

tf.saved_model.save(model, str(savedModelPath))

# Predict nSteps samples one at a time, feeding each prediction back as input.
print("-> prediction ... ", end="")
future_signal = signal[-nSteps:]
for i in range(nSteps):
    X_pred = np.reshape(future_signal[-nSteps:], (1, nSteps, 1))
    y_pred = model.predict(X_pred, verbose=0)[0][0]
    future_signal = np.append(future_signal, y_pred)
print("done.")

plt.plot(signal, label='original signal')
plt.plot(range(len(signal)-nSteps, len(signal)), future_signal[nSteps:], label='predicted signal')
plt.legend()
plt.savefig('result.png')

# node name and shape
saved_model = tf.saved_model.load(str(savedModelPath))
print("\n======================================")
print(" node information")
# Print the input and output node names for each signature
for signature_name in saved_model.signatures:
    print(f" signature name : {signature_name}")
    print(f" in : {saved_model.signatures[signature_name].inputs[0]}")
    print(f" out: {saved_model.signatures[signature_name].outputs[0]}")
print("======================================\n")

//

////////////////////////////////////////////////

// tf node information on screen

//

======================================

node information

signature name : serving_default

in : Tensor("inp:0", shape=(None, 100, 1), dtype=float32)

out: Tensor("Identity:0", shape=(None, 1), dtype=float32)

======================================

//

////////////////////////////////////////////////

// mo command

//

mo --use_new_frontend \
   --log_level DEBUG \
   --saved_model_dir ~/Projects/ovConvert/saved_model \
   --input inp \
   --input_shape "[?,100,1]" \
   --output Identity \
   --output_dir ~/Projects/ovConvert/ir_model

//

////////////////////////////////////////////////

// mo output message

//

[ ERROR ] -------------------------------------------------

[ ERROR ] ----------------- INTERNAL ERROR ----------------

[ ERROR ] Unexpected exception happened.

[ ERROR ] Please contact Model Optimizer developers and forward the following information:

[ ERROR ] Check 'translate_map.count(operation_decoder->get_op_type())' failed at src/frontends/tensorflow/src/frontend.cpp:177:

FrontEnd API failed with OpConversionFailure: :

No translator found for TensorListFromTensor node.

[ ERROR ] Traceback (most recent call last):

File "/home/tats/Projects/ovConvert/venv/lib/python3.9/site-packages/openvino/tools/mo/main.py", line 50, in main

ngraph_function = convert_model(**argv)

File "/home/tats/Projects/ovConvert/venv/lib/python3.9/site-packages/openvino/tools/mo/convert.py", line 47, in convert_model

return _convert(**args)

File "/home/tats/Projects/ovConvert/venv/lib/python3.9/site-packages/openvino/tools/mo/convert_impl.py", line 937, in _convert

raise e.with_traceback(None)

openvino._pyopenvino.OpConversionFailure: Check 'translate_map.count(operation_decoder->get_op_type())' failed at src/frontends/tensorflow/src/frontend.cpp:177:

FrontEnd API failed with OpConversionFailure: :

No translator found for TensorListFromTensor node.

[ ERROR ] ---------------- END OF BUG REPORT --------------

[ ERROR ] -------------------------------------------------
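When a conversion log is long, the op type that the frontend could not translate can be pulled out with a regular expression. The following is a minimal sketch, assuming the message keeps the wording shown in the log above; the helper name `unsupported_ops` is hypothetical and not part of OpenVINO.

```python
import re

# Matches the wording seen in the log above; other OpenVINO versions may phrase it differently.
_MISSING_OP = re.compile(r"No translator found for (\w+) node")


def unsupported_ops(log_text):
    """Return the distinct op types reported as untranslatable, in first-seen order."""
    seen = []
    for op in _MISSING_OP.findall(log_text):
        if op not in seen:
            seen.append(op)
    return seen


log = """
FrontEnd API failed with OpConversionFailure: :
No translator found for TensorListFromTensor node.
openvino._pyopenvino.OpConversionFailure: Check failed
No translator found for TensorListFromTensor node.
"""

print(unsupported_ops(log))  # ['TensorListFromTensor']
```

The resulting op names can then be checked against the supported-operations list for the OpenVINO version in use.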

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Tats,

Thank you for your patience.

We are able to convert your LSTM model into Intermediate Representation (IR) format using the latest OpenVINO pre-release. You may install the pre-release version with this command:

pip install openvino-dev[tensorflow2,tensorflow]==2023.0.0.dev20230427

In your conversion with Model Optimizer, please exclude your input and output parameters since we observed there are no such input and output in the model shared.

Model Optimizer command:

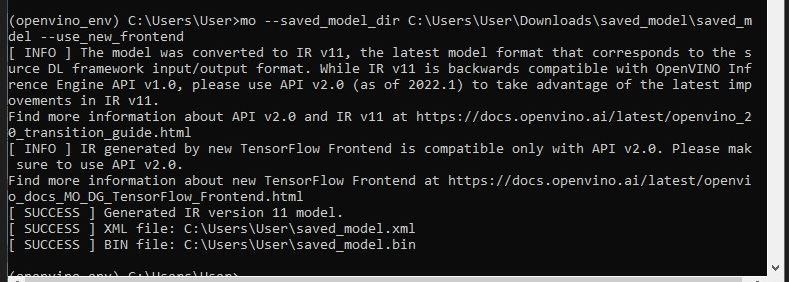

mo --saved_model_dir <PATH_TO_SAVED_MODEL> --use_new_frontend

Regards,

Aznie

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Tats,

Thanks for reaching out.

Could you share your model and any relevant files for us to further investigate this?

You can share it here or privately to my email: noor.aznie.syaarriehaahx.binti.baharuddin@intel.com

Regards,

Aznie

Dear Aznie

There is no additional data or file in particular.

The attached file is the same as the text of the above post.

By executing "python3 lstm_code.py" on terminal window, it generates data internally, trains the model, and saves the trained model in the ./saved_model directory.

It then outputs the comparison of inferred results as waveform comparison images in PNG format.

I asked the question in order to use an LSTM model trained on a PC (Intel i9 + NVIDIA RTX 2080) with TensorFlow + CUDA to perform inference only on a separate small-sized board PC equipped with Intel ATOM using OpenVINO.

Best regards.

tats
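For readers who want to check the data-generation step of lstm_code.py without installing TensorFlow or NumPy, the windowing can be sketched in plain Python. This is an illustrative reimplementation, not the poster's code; `make_windows` is a hypothetical helper name.

```python
import math


def make_windows(signal, n_steps):
    """Split a 1-D sequence into (window, next_sample) training pairs."""
    count = len(signal) - n_steps - 1  # same bound as batchSiz in lstm_code.py
    X = [signal[i:i + n_steps] for i in range(count)]
    y = [signal[i + n_steps] for i in range(count)]
    return X, y


# Damped sine wave, mirroring np.linspace(0, 20*np.pi, 1000) in the script.
num_samples, n_steps = 1000, 100
wt = [20 * math.pi * k / (num_samples - 1) for k in range(num_samples)]
signal = [math.exp(-t / wt[-1]) * math.sin(t) for t in wt]

X, y = make_windows(signal, n_steps)
print(len(X), len(X[0]), len(y))  # 899 100 899
```

With 1000 samples and a window of 100, this gives 899 training pairs, which matches the (None, 100, 1) input shape reported for the saved model once a trailing feature axis is added.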

Hi Tats,

In your previous post, the "FrontEnd API failed with OpConversionFailure: No translator found for TensorListFromTensor node" error from Model Optimizer might be due to an operation in your model that the TensorFlow Frontend does not support.

In order to run an inference with OpenVINO, you need to successfully generate the Intermediate Representation (IR) files. This is why we need your model (.pb) file to further validate.

On the other hand, regarding the Inference Engine, LSTM models are supported with some limitations. You may refer to the Supported Layers documentation for the list of layers that OpenVINO supports.

Regards,

Aznie

Dear Aznie

I apologize for the inconvenience.

I will attach the requested file for your review.

Best regards.

Hi Tats,

Thank you for sharing the model files. We are checking on this and will update you with the information soon.

Regards,

Aznie

Hi Tats,

Thank you for your patience.

We are able to convert your LSTM model into Intermediate Representation (IR) format using the latest OpenVINO pre-release. You may install the pre-release version with this command:

pip install openvino-dev[tensorflow2,tensorflow]==2023.0.0.dev20230427

When converting with Model Optimizer, please omit the --input and --output parameters, since we observed that the shared model has no such input and output.

Model Optimizer command:

mo --saved_model_dir <PATH_TO_SAVED_MODEL> --use_new_frontend

Regards,

Aznie
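To make the difference between the two invocations in this thread explicit, the command line can be assembled in a small script. This is only a sketch: `build_mo_command` is a hypothetical helper, and it uses only the mo flags that already appear in the posts above.

```python
import shlex


def build_mo_command(saved_model_dir, output_dir=None,
                     inputs=None, input_shape=None, outputs=None):
    """Assemble an mo argument list; optional flags are added only when given."""
    cmd = ["mo", "--saved_model_dir", saved_model_dir, "--use_new_frontend"]
    if inputs:
        cmd += ["--input", inputs]
    if input_shape:
        cmd += ["--input_shape", input_shape]
    if outputs:
        cmd += ["--output", outputs]
    if output_dir:
        cmd += ["--output_dir", output_dir]
    return cmd


# Form suggested in the reply: no --input / --output parameters.
print(shlex.join(build_mo_command("saved_model", output_dir="ir_model")))
# mo --saved_model_dir saved_model --use_new_frontend --output_dir ir_model
```

The list returned by the helper can be passed to `subprocess.run` directly, which avoids shell quoting problems with values such as "[?,100,1]".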

Dear Aznie

Thank you for your prompt response. I will try it out.

By the way, does the toolkit support HDDL (Myriad X) in version 2023.0 or later?

Best regards.

Tats

Dear Aznie

Thank you for your support.

I confirmed the generation of the IR model on my PC (i9 cpu) using the pre-release version.

Best regards.

Tats

Hi Tats,

OpenVINO 2023.0 and later will not support Myriad or HDDL.

The last release of OpenVINO with Myriad and HDDL support will be 2022.3.1, which should be released in 2-3 weeks.

Regards,

Aznie
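A deployment script that has to choose between toolkit versions could encode the statement above as a simple version check. This is a sketch based only on what this reply says (Myriad and HDDL end with the 2022.3.x line); the function names are hypothetical and this is not an OpenVINO API.

```python
def release_tuple(version):
    """Reduce a version string such as '2023.0.0.dev20230427' to (2023, 0)."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def has_myriad_hddl(version):
    """True for releases before 2023.0, per the reply above."""
    return release_tuple(version) < (2023, 0)


print(has_myriad_hddl("2022.3.1"))              # True
print(has_myriad_hddl("2023.0.0.dev20230427"))  # False
```

On a real system, querying the available devices from the OpenVINO runtime is the more reliable check; the version comparison is only useful before anything is installed.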

Hi Tats,

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
