
Hello,

I am trying to deploy the Intel Smart Edge Open Developer Experience Kit in a multi-node cluster without ESP (in my case, one master and one worker for testing).

I am deploying it in VMs (in OpenStack), following these instructions:

https://edc.intel.com/content/www/us/en/design/technologies-and-topics/iot/deploy-smart-edge-open-developer-experience-kit-in-a-multi-node-cluster-w/overview/

The instructions work apart from minor hiccups, but when I get to the deploy step, the deployment starts and eventually fails.

Here is what I think is the relevant part of the output:

TASK [validate Intel-secl] **********************************************************************************************************************************************************

task path: /home/ubuntu/dek/tasks/settings_check_ne.yml:62

Thursday 04 May 2023 10:48:15 +0000 (0:00:00.020) 0:00:02.065 **********

included: /home/ubuntu/dek/tasks/../roles/security/isecl/common/tasks/precheck.yml for node01, controller

TASK [Check control plane IP] *******************************************************************************************************************************************************

task path: /home/ubuntu/dek/roles/security/isecl/common/tasks/precheck.yml:7

Thursday 04 May 2023 10:48:15 +0000 (0:00:00.030) 0:00:02.096 **********

fatal: [node01]: FAILED! => {

"changed": false

}

MSG:

Isecl control plane IP not set!

fatal: [controller]: FAILED! => {

"changed": false

}

MSG:

Isecl control plane IP not set!

NO MORE HOSTS LEFT ******************************************************************************************************************************************************************

PLAY RECAP **************************************************************************************************************************************************************************

controller : ok=12 changed=0 unreachable=0 failed=1 skipped=15 rescued=0 ignored=0

node01 : ok=12 changed=0 unreachable=0 failed=1 skipped=15 rescued=0 ignored=0

Thursday 04 May 2023 10:48:15 +0000 (0:00:00.025) 0:00:02.121 **********

===============================================================================

gather_facts ------------------------------------------------------------ 1.15s

shell ------------------------------------------------------------------- 0.36s

fail -------------------------------------------------------------------- 0.25s

include_tasks ----------------------------------------------------------- 0.14s

include_vars ------------------------------------------------------------ 0.05s

set_fact ---------------------------------------------------------------- 0.04s

debug ------------------------------------------------------------------- 0.03s

command ----------------------------------------------------------------- 0.03s

assert ------------------------------------------------------------------ 0.02s

stat -------------------------------------------------------------------- 0.02s

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

total ------------------------------------------------------------------- 2.08s

2023-05-04 10:48:18.622 INFO: ====================

2023-05-04 10:48:18.623 INFO: DEPLOYMENT RECAP:

2023-05-04 10:48:18.623 INFO: ====================

2023-05-04 10:48:18.623 INFO: DEPLOYMENT COUNT: 1

2023-05-04 10:48:18.623 INFO: SUCCESSFUL DEPLOYMENTS: 0

2023-05-04 10:48:18.623 INFO: FAILED DEPLOYMENTS: 1

2023-05-04 10:48:18.623 INFO: DEPLOYMENT "demo_mep": FAILED

2023-05-04 10:48:18.623 INFO: ====================

2023-05-04 10:48:18.623 INFO: Deployment failed, pulling logs

2023_05_04_10_48_18_SmartEdge_experience_kit_archive.tar.gz 100% 795KB 13.1MB/s 00:00

log_collector 100% 15KB 2.1MB/s 00:00

log_collector.json 100% 3928 11.3MB/s 00:00

ubuntu@controlplane:~/dek$

It seems to have failed with the "Isecl control plane IP not set" error described at this link:

https://www.intel.com/content/www/us/en/docs/ei-for-amr/get-started-guide-server-kit/2022-3/troubleshooting.html#ISECL-CONTROL-PLANE-IP-NOT-SET-ERROR

I already have “platform_attestation_node: false” set; that fix is already included in the tutorial instructions.

So do you have any other advice? It looks like the same problem, but probably with a different cause. I am happy to send the relevant config files if needed.

Javier

- Tags:

- Intel Smart Edge Open - Developer Experience Kit

- Intel® Smart Edge Open Developer Experience Kit in a Multi-node Cluster without Edge Software Provisioner (ESP)


Hi Javier,

That was going to be my suggestion. The last time I ran into that problem, I had to modify the 10-default.yml config file located at ~/dek/inventory/default/group_vars/all/10-default.yml with the changes described in the link you shared: https://www.intel.com/content/www/us/en/docs/ei-for-amr/get-started-guide-server-kit/2022-3/troubleshooting.html#ISECL-CONTROL-PLANE-IP-NOT-SET-ERROR

I'm not sure if the issue is related to running this software package in a Virtual Machine. I'll have to try to set them up and try them from my end. In the meantime, if you have another physical machine, try installing the package there and see if you run into the same issue.

Regards,

Jesus


Hola Jesus,

Thanks a lot for your interest.

In the instructions, it says that this should work with VMs too. In fact, I managed to deploy the single-node Intel Smart Edge on a VM, although I wasn't able to see my onboarded services in Harbor, but that may be a separate issue. Unfortunately, I don't have spare machines to try with at the moment.

Let's see if we can make this work; it looks incredibly interesting.

In the meantime, I need to check an idea I had. I was looking at the security groups in OpenStack and thought that maybe I need to explicitly enable TCP and UDP traffic between the VMs in the tenant (it shouldn't be needed, but the network configuration might be filtering some traffic).

I'll keep you posted if I make progress, and I would appreciate it if you could also keep me posted if you discover something.

Thanks a lot,

Javier


Hi,

I have been trying to see if I can make this work with the current setup, but we are having difficulties.

Things that I have tried:

- I wondered whether the configuration files were not being modified properly, and noticed that a folder is created automatically when attempting the deployment, named after the deployment (in my case demo_mep):

ubuntu@controlplane:~/dek/inventory/automated$ ls

demo_mep

And it seems that the group_vars and host_vars are copied there again:

ubuntu@controlplane:~/dek/inventory/automated/demo_mep$ ls

group_vars host_vars inventory_demo_mep.yml

So I tried removing the created folder after changing the config files and before re-deploying, in case the files were not being updated properly, but it did not help. Still the same problem.

- I was also reviewing /dek/inventory/automated/demo_mep/group_vars/all/10-default.yml and there, out of desperation and trying to simplify things, I disabled IOMMU:

# Disable sriov kernel flags (intel_iommu=on iommu=pt)

# iommu_enabled: true

iommu_enabled: false

I was hoping this would disable SR-IOV, in case that is what is causing the problem.

Or should I explicitly disable SR-IOV here:

## SR-IOV Network Operator

sriov_network_operator_enable: true

## SR-IOV Network Operator configuration

sriov_network_operator_configure_enable: true

## SR-IOV Network Nic's Detection Application

sriov_network_detection_application_enable: false

As you can see, I was guessing here. Since it looks like a connectivity problem, I wondered whether SR-IOV was the culprit and tried to simplify things, but I may be totally wrong.

- I looked into the OpenStack connectivity and explicitly enabled TCP and UDP traffic between the instances in the same tenant (even though it shouldn't be necessary). It didn't help either.

- The next thing I will try is to create two individual VMs with VirtualBox or VMware, in case OpenStack is what is preventing the deployment.

- And last but not least, I would like to know whether you have a public Slack or IRC channel where developers meet and help each other.
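For anyone comparing settings between the default inventory and the per-deployment copy described in the first bullets, here is a minimal sketch. It assumes the files are simple `key: value` YAML like the excerpts above. `yaml_value` and `compare_setting` are hypothetical helper names, not part of the kit, and the paths in the commented example are the ones mentioned in this thread.

```shell
#!/bin/sh
# Print the value of the first uncommented "key: value" line in a YAML file.
# Commented lines such as "# iommu_enabled: true" are skipped because their
# first whitespace-separated field is "#", not "iommu_enabled:".
yaml_value() {
    awk -v key="$1" '$1 == (key ":") { print $2; exit }' "$2"
}

# Show the same key as it appears in each of the given files.
compare_setting() {
    key=$1
    shift
    for f in "$@"; do
        echo "$f: $key=$(yaml_value "$key" "$f")"
    done
}

# Example (paths as mentioned in this thread; adjust to your checkout):
# compare_setting platform_attestation_node \
#     "$HOME/dek/inventory/default/group_vars/all/10-default.yml" \
#     "$HOME/dek/inventory/automated/demo_mep/group_vars/all/10-default.yml"
```

If the two files print different values for the same key, that would suggest the deployment is not reading the file that was edited.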

Any help would be much appreciated,

Javier


Hi Javier,

I appreciate the detailed explanation of what you have tried. I've been trying to configure a local environment to test this, but unfortunately I have not been successful. Let me try to set up another system. I would also recommend checking that you can ping both VMs using their hostnames. This should be configured on the DHCP server side and not on each client/VM.
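As a rough way to run the hostname check suggested above, the sketch below reports how each node name resolves on the machine where it runs. It assumes `getent` is available (it is on Ubuntu). The helper names `classify_addr`, `resolve_name` and `check_nodes` are made up for this example.

```shell
#!/bin/sh
# Classify an address string as loopback, routable, or unresolved (empty).
classify_addr() {
    case "$1" in
        127.*|::1) echo "loopback" ;;
        "")        echo "unresolved" ;;
        *)         echo "routable" ;;
    esac
}

# Resolve a name through the system resolver and print the first address.
# getent consults /etc/hosts and DNS in the order set in /etc/nsswitch.conf.
resolve_name() {
    getent hosts "$1" | awk 'NR == 1 { print $1 }'
}

# Print one line per node name: the address found and its classification.
check_nodes() {
    for name in "$@"; do
        addr=$(resolve_name "$name")
        echo "$name -> ${addr:-none} ($(classify_addr "$addr"))"
    done
}

# Example: run on each VM, passing the hostnames of both nodes.
# check_nodes controlplane node01
```

A name that comes back as "unresolved", or as "loopback" when it belongs to the other node, would point to a name resolution problem rather than a problem in the kit itself.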

I will provide another update shortly, appreciate your patience.

Regards,

Jesus


Hi Jesus,

On the contrary, thank you for your efforts.

I also wanted to update you on my work.

We decided to skip the deployment in VMs provided by OpenStack, in case OpenStack Neutron, which takes care of networking, is doing something that prevents the scripts from running properly.

Instead, we created two VMs with VirtualBox, and it seems that we are very close. I still think we have some problems, maybe related to the hostname and DNS.

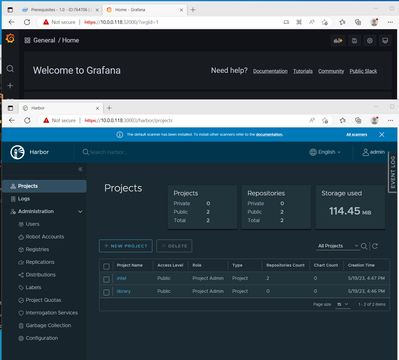

At some point the output shows the URL and even the user and password for Harbor. Using the hostname stored in $host won't work, but if I use the IP address itself instead, I can log in to Harbor and I see that things have been deployed there.

After that, I added the IP and hostname of the control_plane machine to /etc/hosts so that the script can find the right address, but there still seems to be some trouble.

Attached here is the last part of the log file.

I hope this clarifies a little more what the current situation is.

Please let me know if you need any more info to help you understand the situation.

Javier

TASK [kubernetes/harbor_registry/controlplane : Check if intel project exists] ****************************************************************************

task path: /home/javier/dek/roles/kubernetes/harbor_registry/controlplane/tasks/main.yml:24

Tuesday 16 May 2023 16:56:13 +0000 (0:00:00.026) 0:01:23.166 ***********

FAILED - RETRYING: Check if intel project exists (10 retries left).

FAILED - RETRYING: Check if intel project exists (9 retries left).

FAILED - RETRYING: Check if intel project exists (8 retries left).

FAILED - RETRYING: Check if intel project exists (7 retries left).

FAILED - RETRYING: Check if intel project exists (6 retries left).

FAILED - RETRYING: Check if intel project exists (5 retries left).

FAILED - RETRYING: Check if intel project exists (4 retries left).

FAILED - RETRYING: Check if intel project exists (3 retries left).

FAILED - RETRYING: Check if intel project exists (2 retries left).

FAILED - RETRYING: Check if intel project exists (1 retries left).

fatal: [controller]: FAILED! => {

"attempts": 10,

"changed": false,

"connection": "close",

"content": "",

"content_length": "150",

"content_type": "text/html",

"date": "Tue, 16 May 2023 16:58:08 GMT",

"elapsed": 1,

"redirected": false,

"server": "nginx",

"status": 502,

"url": "https://isecontrolplane:30003/api/v2.0/projects?project_name=intel"

}

MSG:

Status code was 502 and not [200, 404]: HTTP Error 502: Bad Gateway

NO MORE HOSTS LEFT ****************************************************************************************************************************************

PLAY RECAP ************************************************************************************************************************************************

controller : ok=173 changed=18 unreachable=0 failed=1 skipped=168 rescued=0 ignored=0

node01 : ok=130 changed=10 unreachable=0 failed=0 skipped=130 rescued=0 ignored=0

Tuesday 16 May 2023 16:58:08 +0000 (0:01:54.596) 0:03:17.762 ***********

===============================================================================

kubernetes/harbor_registry/controlplane ------------------------------- 115.00s

infrastructure/install_dependencies ------------------------------------ 18.76s

infrastructure/docker -------------------------------------------------- 18.35s

baseline_ansible/infrastructure/install_packages ------------------------ 9.01s

gather_facts ------------------------------------------------------------ 5.56s

kubernetes/install ------------------------------------------------------ 4.81s

kubernetes/cni/calico/controlplane -------------------------------------- 4.08s

infrastructure/os_setup ------------------------------------------------- 3.76s

baseline_ansible/infrastructure/os_requirements/disable_swap ------------ 2.19s

infrastructure/firewall_open_ports -------------------------------------- 1.93s

baseline_ansible/infrastructure/os_requirements/dns_stub_listener ------- 1.34s

infrastructure/conditional_reboot --------------------------------------- 1.14s

baseline_ansible/infrastructure/os_proxy -------------------------------- 1.01s

infrastructure/install_hwe_kernel --------------------------------------- 0.96s

kubernetes/cni ---------------------------------------------------------- 0.81s

baseline_ansible/infrastructure/configure_udev -------------------------- 0.74s

baseline_ansible/infrastructure/time_verify_ntp ------------------------- 0.72s

baseline_ansible/infrastructure/install_openssl ------------------------- 0.71s

kubernetes/helm --------------------------------------------------------- 0.71s

fail -------------------------------------------------------------------- 0.49s

kubernetes/customize_kubelet -------------------------------------------- 0.47s

baseline_ansible/infrastructure/install_userspace_drivers --------------- 0.46s

kubernetes/controlplane ------------------------------------------------- 0.45s

shell ------------------------------------------------------------------- 0.42s

kubernetes/cert_manager ------------------------------------------------- 0.40s

infrastructure/build_noproxy -------------------------------------------- 0.35s

baseline_ansible/infrastructure/install_golang -------------------------- 0.33s

infrastructure/check_redeployment --------------------------------------- 0.30s

include_tasks ----------------------------------------------------------- 0.29s

baseline_ansible/infrastructure/os_requirements/enable_ipv4_forwarding --- 0.26s

infrastructure/git_repo_tool -------------------------------------------- 0.23s

debug ------------------------------------------------------------------- 0.19s

infrastructure/e810_driver_update --------------------------------------- 0.18s

baseline_ansible/infrastructure/time_setup_ntp -------------------------- 0.17s

baseline_ansible/infrastructure/configure_cpu_isolation ----------------- 0.17s

baseline_ansible/infrastructure/selinux --------------------------------- 0.14s

infrastructure/provision_sgx_enabled_platform --------------------------- 0.11s

baseline_ansible/infrastructure/configure_cpu_idle_driver --------------- 0.10s

baseline_ansible/infrastructure/configure_additional_grub_parameters ---- 0.10s

baseline_ansible/infrastructure/configure_hugepages --------------------- 0.09s

baseline_ansible/infrastructure/configure_sriov_kernel_flags ------------ 0.09s

baseline_ansible/infrastructure/disable_fingerprint_authentication ------ 0.07s

set_fact ---------------------------------------------------------------- 0.05s

include_vars ------------------------------------------------------------ 0.05s

baseline_ansible/infrastructure/install_rt_package ---------------------- 0.04s

infrastructure/setup_baseline_ansible ----------------------------------- 0.03s

assert ------------------------------------------------------------------ 0.03s

baseline_ansible/infrastructure/install_tuned_rt_profile ---------------- 0.03s

command ----------------------------------------------------------------- 0.02s

stat -------------------------------------------------------------------- 0.02s

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

total ----------------------------------------------------------------- 197.72s

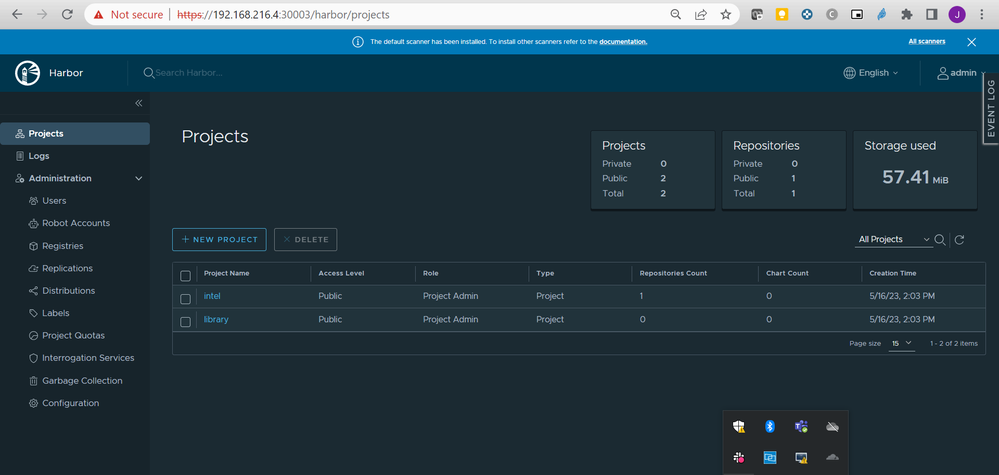

Attached is a screenshot of Harbor as it is right now, reached by replacing the hostname with the IP address.
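To compare the hostname and the IP address from the command line, something like the following sketch could be used. It queries the same endpoint that appears in the log above (port 30003, `/api/v2.0/projects?project_name=intel`) and treats 200 and 404 as acceptable, as the failing task does. The function names are hypothetical. The request is unauthenticated, so a 401 or 403 would not by itself indicate a gateway problem.

```shell
#!/bin/sh
# Succeed if the HTTP status is one the deployment task accepts
# (the log lists 200 and 404 as the accepted codes).
harbor_status_ok() {
    case "$1" in
        200|404) return 0 ;;
        *)       return 1 ;;
    esac
}

# Query the Harbor project endpoint seen in the log and print the status code.
# -k skips certificate verification, -s hides progress output,
# -o /dev/null discards the body, -w prints only the HTTP status.
probe_harbor() {
    curl -k -s -o /dev/null -w '%{http_code}' --max-time 10 \
        "https://$1:30003/api/v2.0/projects?project_name=intel"
}

# Probe each target (hostname or IP) and report whether the code is accepted.
compare_harbor() {
    for target in "$@"; do
        code=$(probe_harbor "$target" || true)
        if harbor_status_ok "$code"; then
            echo "$target: HTTP $code (accepted)"
        else
            echo "$target: HTTP $code (rejected)"
        fi
    done
}

# Example; 192.0.2.10 is a placeholder for the control plane IP:
# compare_harbor isecontrolplane 192.0.2.10
```

If the hostname returns 502 while the IP returns an accepted code, the request made by hostname is probably taking a different path, for example through a proxy or a wrong /etc/hosts entry.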


Hi javierfh,

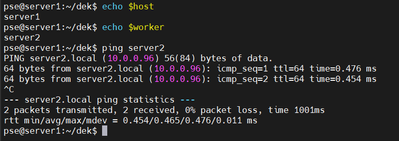

I set up two virtual machines running on the same Windows 10 host system. I used VirtualBox to run both VMs with default settings, with the exception of the network type: I used bridged instead of the default NAT. Once both VMs were up and running, I confirmed that they can ping each other using their hostnames.

I proceeded to install Smart Edge Open using the same guide as you, with no modifications, and had a successful deployment.

I was able to access both the Grafana and Harbor web servers. This leads me to believe that the issue you are experiencing is related to your local network. I also want to mention that I used Ubuntu 20.04 server. I will try to set up two new virtual machines with Ubuntu 20.04 Desktop next week.

Regards,

Jesus


Hi Jesus,

Let me first thank you for your support and patience.

So I will try to start from scratch (in case something from the old deployment causes trouble). I will follow your suggestion and configure the network interface in the VM as bridged instead of NAT.

But please let me ask you a couple of questions:

- How many interfaces did you attach to each of the VMs? In my setup, I set two interfaces for each VM: one with NAT, used as the control/management interface, and a second one, a host-only adapter, which is the one I used for the configurations. Maybe this is what causes trouble at some point.

- Would you be so kind as to share your /etc/hosts files from both nodes? I configured the IP addresses of both nodes there, because I had trouble setting the env variables when following the tutorial.

- I believe in these lines

# set variable for later use

export worker="TBD" # replace with actual value

I have to set the name of the worker node, correct?

- And what exactly do you mean by saying that you used 20.04 server? Do you mean that you deployed Ubuntu 20.04 server on your VMs? If so, that is the same as what I am doing, so if you managed with Ubuntu server, we should be good.

Anyway, thanks for all your help. It is very good to hear that you managed to deploy with VMs; I feel optimistic that I can get it deployed with your help.

Javier


Hi javierfh,

> How many interfaces you attached to each of the VMs? In my setup, I set two interfaces for each of the VMs, one with NAT used as control/management interface and then a second one, host-only adapter, which is the one I used for the configurations. Maybe this is what causes some trouble at some point.

Both VMs only have 1 interface configured as bridge.

> Would you be so kind and share your /etc/hosts files from both nodes? I configured there IP address of both nodes, because I had trouble with setting the env variables, when following the tutorial.

$ cat /etc/hosts #server1

127.0.0.1 localhost server1

127.0.1.1 server1

#The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback server1 server1

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

$ cat /etc/hosts #server2

127.0.0.1 localhost server2

127.0.1.1 server2

#The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback server2

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

The IP addresses are assigned by the router's DHCP server, and the local DNS takes care of hostname resolution in my network. I didn't have to make any additional changes to the hosts file to map the machines' IP addresses to their hostnames.

> I believe in this line # set variable for later use export worker="TBD" # replace with actual value I have to set the name of the worker node, correct?

The following commands were run on server1 (control-plane node):

export host=$(hostname)

export worker=server2

> And then, what do you exactly mean by that you used 20.04 server? Do you mean that you deployed ubuntu 20.04 server on your VMs? If that is the case is the same I am doing from my side, so if you managed with ubuntu server, we should be good

Correct, I was using Ubuntu 20.04 server on my VMs. I thought you were using the desktop version of Ubuntu 20.04. I will keep it the same as yours.

Regards,

Jesus


Hi Jesus,

I finally have good news. I managed to successfully deploy the multi-node Smart Edge in two VirtualBox VMs.

I did as you said and configured only one interface as a bridged adapter. VirtualBox selected my laptop's default connection, which was the Wi-Fi, and I had no connectivity. When I changed it to an Ethernet interface, connectivity returned.

Next, I saved the env variables (the exported hostnames of the master and worker) in the .profile file so that they survive reboots. Then I followed your suggestion and configured /etc/hosts like yours, but in my case it wouldn't work. I had to add the IP of the worker node to the /etc/hosts of the master, because otherwise it wouldn't be able to copy the SSH ID to the worker (# to worker ssh-copy-id $USER@$worker).
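For reference, persisting the exports could look roughly like this. It is only a sketch: `persist_export` is a hypothetical helper, and the variable names `host` and `worker` are the ones used in the tutorial commands quoted earlier in this thread.

```shell
#!/bin/sh
# Append "export NAME=VALUE" to a profile file unless that exact line
# is already present, so running it twice does not duplicate the entry.
persist_export() {
    line="export $1=$2"
    grep -qxF "$line" "$3" 2>/dev/null || printf '%s\n' "$line" >> "$3"
}

# Example, on the control-plane node ("node01" stands in for the worker name):
# persist_export host "$(hostname)" "$HOME/.profile"
# persist_export worker node01 "$HOME/.profile"
```

The new values take effect in the next login shell, or right away after running `. ~/.profile`.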

Once that was clear, I started the deployment, but it got stuck on the ufw configuration.

I rebooted and looked at the logs. The deployment had failed with problems that I assumed were related to the worker node not being able to locate the master node. Once I added the IP of the master to the /etc/hosts file on the worker, the deployment succeeded. Hurray!!

I wonder why in your case you didn't need to add the IPs to the /etc/hosts file, but oh well... now it works.
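In case it helps someone else, the /etc/hosts change can be sketched as below. The helpers `hosts_lookup` and `ensure_hosts_entry` are hypothetical, and the addresses in the commented example are placeholders, not the ones from my setup. The file is passed as an argument so the sketch can be tried on a copy before touching the real /etc/hosts.

```shell
#!/bin/sh
# Print the first non-loopback address that a hosts-format file maps to a name.
hosts_lookup() {
    awk -v name="$1" '
        $1 ~ /^#/ { next }                      # skip comment lines
        $1 ~ /^127\./ || $1 == "::1" { next }   # skip loopback entries
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break            # stop at a trailing comment
                if ($i == name) { print $1; exit }
            }
        }
    ' "$2"
}

# Append "IP NAME" to the file unless the name already has a non-loopback entry.
ensure_hosts_entry() {
    if [ -z "$(hosts_lookup "$2" "$3")" ]; then
        printf '%s %s\n' "$1" "$2" >> "$3"
    fi
}

# Example (placeholder addresses; writing to /etc/hosts itself needs root):
# on the master:  ensure_hosts_entry 192.0.2.11 node01 /etc/hosts
# on the worker:  ensure_hosts_entry 192.0.2.10 controlplane /etc/hosts
```

Loopback lines such as "127.0.1.1 server1" are ignored on purpose, since they only tell a machine about itself and do not help the other node find it.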

Now that the deployment is done, I would like to ask for your help, in case you have pointers to documentation on how to use the deployed edge. In particular, we would like to know whether there is an architecture document and a guide for next steps: how to onboard services, how to configure them, and whether we can control placement for the instantiated services, in other words, whether we can select the node on which services or pods will be instantiated. It would also be helpful if there is some documentation on how to use the different deployed tools. We also want to understand the interfaces or APIs to see if we can integrate it with our MEC orchestrator.

Anyway, all your help has been great. I don't know if it is possible, but maybe it would be good to change the title of the post to something like "Problems (/solutions/lessons learned) on how to deploy Intel Smart Edge Open in virtual machines", in case it could help someone else.

Thanks once more and looking forward to hearing from you,

Javier


Hi Javier,

I'm glad to hear that it is working for you! I believe my local DHCP and DNS server took care of hostname resolution without my needing to update the /etc/hosts files.

Please find additional information about Smart Edge Open, including information about the API, in the documentation below.

Intel® Smart Edge Open | Developer Experience Kit Default Install (intelsmartedge.github.io)

If you have any questions, please start a new discussion as this one will no longer be monitored.

Regards,

Jesus
