- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have modified the basic vector add buffers example from OneAPI to support the execution in two devices at the same time.

I have two problems:

1. I don't know how to achieve concurrent execution in both devices. Can you provide what is the most efficient way to use OneAPI to achieve that? I need to execute multiple kernels in the same devices at the same time, getting the results as soon and sending again to the devices more chunks of work. Should I use host C++ threads to create each queue in an independent thread? I would appreciate some help and example, instead of trial and error per every idea.

2. When I increase the array size from 32 * 1024 * 10 to 32 * 1024 * 20 (in my system), the program segfaults (signal 11), with no errors. Why? Is not even close to the limits per device. What am I doing wrong using OneAPI?

I paste the example code:

//==============================================================

// Copyright © 2020 Intel Corporation

#include <CL/sycl.hpp>

#include <array>

#include <iostream>

#include <iomanip>

#include "dpc_common.hpp"

#define ARRAY_SIZE_SIGNAL11 (32 * 1024 * 20)

#define ARRAY_SIZE_WORKING (32 * 1024 * 10)

#define ARRAY_SIZE ARRAY_SIZE_WORKING

//#define ARRAY_SIZE ARRAY_SIZE_SIGNAL11

#define DEVS_ORDER_CPU_GPU 0

//#define DEVS_ORDER_CPU_GPU 1

using namespace cl::sycl;

enum class CheckpointName {

InitQueue1,

InitQueue2,

Submitted1,

Submitted2,

Waited1,

Waited2,

};

std::string checkpoint_name_to_str(CheckpointName name){

switch(name){

case CheckpointName::InitQueue1: {

return "InitQueue1";

} break;

case CheckpointName::InitQueue2: {

return "InitQueue2";

} break;

case CheckpointName::Submitted1: {

return "Submitted1";

} break;

case CheckpointName::Submitted2: {

return "Submitted2";

} break;

case CheckpointName::Waited1: {

return "Waited1";

} break;

case CheckpointName::Waited2: {

return "Waited2";

} break;

}

}

struct Checkpoint {

CheckpointName name;

std::chrono::time_point<std::chrono::high_resolution_clock> ts;

};

Checkpoint checkpoints[20];

int ncheckpoint = 0;

void ts_checkpoint(CheckpointName name){

auto now = std::chrono::high_resolution_clock::now();

Checkpoint ch{name, now};

checkpoints[ncheckpoint++] = ch;

}

constexpr size_t array_size = ARRAY_SIZE;

typedef std::array<int, array_size> IntArray;

static void ReportTime(const std::string &msg, event e) {

cl_ulong time_start =

e.get_profiling_info<info::event_profiling::command_start>();

cl_ulong time_end =

e.get_profiling_info<info::event_profiling::command_end>();

double elapsed = (time_end - time_start) / 1e6;

std::cout << msg << elapsed << std::endl;

}

auto VectorAddOffset(queue &q, const IntArray &a_array, const IntArray &b_array,

IntArray &sum_parallel, size_t offset, size_t size, int dev) {

size_t part_size = size;

range<1> num_items{part_size};

buffer a_buf(a_array);

buffer b_buf(b_array);

auto *ptr = sum_parallel.data();

auto *ptr_offset = (ptr + (offset * sizeof(int)));

buffer sum_buf(ptr, range<1>(sum_parallel.size())); // i cannot remove part of it

event ev = q.submit([&](handler &h) {

auto a = a_buf.get_access<access::mode::read>(h);

auto b = b_buf.get_access<access::mode::read>(h);

auto sum = sum_buf.get_access<access::mode::write>(h);

h.parallel_for<class offset1>(range<1>(part_size), id<1>(0), [=](id<1> idx) {

auto idx_offset = idx[0] + offset;

int value = a[idx_offset] * b[idx_offset] / (b[idx_offset] + 1) + a[idx_offset] + b[idx_offset] / (b[idx_offset] + 2);

sum[idx_offset] = value + a[idx_offset] + b[idx_offset] / (b[idx_offset] + 2);

});

});

if (dev == 1) {

ts_checkpoint(CheckpointName::Submitted1);

} else {

ts_checkpoint(CheckpointName::Submitted2);

}

return ev;

}

void InitializeArray(IntArray &a) {

for (size_t i = 0; i < a.size(); i++) a[i] = i;

}

void InitializeArray(IntArray &a, int value) {

for (size_t i = 0; i < a.size(); i++) a[i] = value;

}

int main() {

// Create device selector for the device of your interest.

#if DEVS_ORDER_CPU_GPU == 1

cpu_selector d_selector;

gpu_selector d2_selector;

#else

gpu_selector d_selector;

cpu_selector d2_selector;

#endif

IntArray a, b, sum_sequential, sum_parallel;

InitializeArray(a);

InitializeArray(b);

InitializeArray(sum_parallel, -1);

auto devs_str = getenv("DEVS");

auto devs = 2;

if (devs_str != NULL) {

devs = atoi(devs_str);

}

event e1, e2;

auto start = std::chrono::high_resolution_clock::now();

std::chrono::time_point<std::chrono::high_resolution_clock> end1, end2, end3, end4;

auto prop_list = property_list{property::queue::enable_profiling()};

if (devs == 1) {

try {

queue q(d_selector, dpc::exception_handler, prop_list);

std::cout << "Running on device1: "

<< q.get_device().get_info<info::device::name>() << "\n";

std::cout << "Vector size: " << a.size() << "\n";

e1 = VectorAddOffset(q, a, b, sum_parallel, 0, array_size, 1);

end1 = std::chrono::high_resolution_clock::now();

q.wait_and_throw();

ts_checkpoint(CheckpointName::Waited1);

} catch (exception const &e) {

std::cout << "An exception is caught for vector add.\n";

std::terminate();

}

} else if (devs == 2) {

try {

queue q(d2_selector, dpc::exception_handler, prop_list);

std::cout << "Running on device2: "

<< q.get_device().get_info<info::device::name>() << "\n";

std::cout << "Vector size: " << a.size() << "\n";

e1 = VectorAddOffset(q, a, b, sum_parallel, 0, array_size, 1);

end1 = std::chrono::high_resolution_clock::now();

q.wait_and_throw();

ts_checkpoint(CheckpointName::Waited1);

} catch (exception const &e) {

std::cout << "An exception is caught for vector add.\n";

std::terminate();

}

} else {

try {

queue q(d_selector, dpc::exception_handler, prop_list);

queue q2(d2_selector, dpc::exception_handler, prop_list);

std::cout << "Running on device1: "

<< q.get_device().get_info<info::device::name>() << "\n";

std::cout << "Running on device2: "

<< q2.get_device().get_info<info::device::name>() << "\n";

std::cout << "Vector size: " << a.size() << "\n";

e1 = VectorAddOffset(q, a, b, sum_parallel, 0, array_size / 2, 1);

end1 = std::chrono::high_resolution_clock::now();

e2 = VectorAddOffset(q2, a, b, sum_parallel, array_size / 2, array_size / 2, 2);

end2 = std::chrono::high_resolution_clock::now();

q.wait_and_throw();

ts_checkpoint(CheckpointName::Waited1);

q2.wait_and_throw();

ts_checkpoint(CheckpointName::Waited2);

} catch (exception const &e) {

std::cout << "An exception is caught for vector add.\n";

std::terminate();

}

}

end3 = std::chrono::high_resolution_clock::now();

for (size_t i = 0; i < sum_sequential.size(); i++){

int value = a[i] * b[i] / (b[i] + 1) + a[i] + b[i] / (b[i] + 2);

sum_sequential[i] = value + a[i] + b[i] / (b[i] + 2);

}

for (size_t i = 0; i < sum_sequential.size(); i++) {

if (sum_parallel[i] != sum_sequential[i]) {

std::cout << "Vector add failed on device.\n";

}

}

int indices[]{0, 1, 2, (a.size() - 1)};

constexpr size_t indices_size = sizeof(indices) / sizeof(int);

for (int i = 0; i < indices_size; i++) {

int j = indices[i];

if (i == indices_size - 1) std::cout << "...\n";

std::cout << "[" << j << "]: " << a[j] << " + " << b[j] << " = "

<< sum_parallel[j] << "\n";

}

std::cout << "Vector add successfully completed on device.\n";

end4 = std::chrono::high_resolution_clock::now();

std::chrono::duration<double> diff1 = end1 - start;

std::chrono::duration<double> diff2 = end2 - start;

std::chrono::duration<double> diff3 = end3 - start;

std::chrono::duration<double> diff4 = end4 - start;

std::cout << "diff vector add1: " << std::fixed << std::setprecision(5) << diff1.count() << "\n";

if (devs == 3) {

std::cout << "diff vector add2: " << std::fixed << std::setprecision(5) << diff2.count() << "\n";

}

std::cout << "diff trycatch end: " << std::fixed << std::setprecision(5) << diff3.count() << "\n";

std::cout << "diff end: " << std::fixed << std::setprecision(5) << diff3.count() << "\n";

ReportTime("e1: ", e1);

if (devs == 3) {

ReportTime("e2: ", e2);

}

for (int i=0; i<ncheckpoint; ++i){

Checkpoint cp = checkpoints[i];

std::chrono::duration<double> diff = cp.ts - start;

std::cout << i << " " << checkpoint_name_to_str(cp.name) << ": " << std::fixed << std::setprecision(5) << (diff.count()) << "\n";

}

return 0;

}

And how it should be executed:

$ DEVS=1 /usr/bin/time ./vector-add

Running on device1: Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Vector size: 327680

[0]: 0 + 0 = 0

[1]: 1 + 1 = 2

[2]: 2 + 2 = 5

...

[327679]: 327679 + 327679 = 655357

Vector add successfully completed on device.

diff vector add1: 1.00805

diff trycatch end: 1.00807

diff end: 1.00807

e1: 0.70930

0 Submitted1: 1.00693

1 Waited1: 1.00806

1.09user 0.12system 0:01.24elapsed 97%CPU (0avgtext+0avgdata 186668maxresident)k

0inputs+0outputs (0major+19561minor)pagefaults 0swaps

$ DEVS=2 /usr/bin/time ./vector-add

Running on device2: Intel(R) Gen9

Vector size: 327680

[0]: 0 + 0 = 0

[1]: 1 + 1 = 2

[2]: 2 + 2 = 5

...

[327679]: 327679 + 327679 = 655357

Vector add successfully completed on device.

diff vector add1: 0.94280

diff trycatch end: 0.94289

diff end: 0.94289

e1: 0.60930

0 Submitted1: 0.94129

1 Waited1: 0.94281

0.84user 0.16system 0:01.04elapsed 96%CPU (0avgtext+0avgdata 161052maxresident)k

0inputs+0outputs (0major+25701minor)pagefaults 0swaps

$ DEVS=3 /usr/bin/time ./vector-add

Running on device1: Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Running on device2: Intel(R) Gen9

Vector size: 327680

[0]: 0 + 0 = 0

[1]: 1 + 1 = 2

[2]: 2 + 2 = 5

...

[327679]: 327679 + 327679 = 655357

Vector add successfully completed on device.

diff vector add1: 0.82789

diff vector add2: 1.27030

diff trycatch end: 1.27035

diff end: 1.27035

e1: 0.39423

e2: 0.24327

0 Submitted1: 0.82691

1 Submitted2: 1.26955

2 Waited1: 1.27031

3 Waited2: 1.27032

1.26user 0.18system 0:01.47elapsed 97%CPU (0avgtext+0avgdata 261140maxresident)k

0inputs+0outputs (0major+36073minor)pagefaults 0swaps

With `DEVS=1` we select the CPU, with `DEVS=2` the iGPU and with `DEVS=3` both devices. As we can see, the kernel time execution (report time) is less because the workload is splitted, but the total times in submitting and waiting are accumulative, not splitted (not parallel). So, the execution is not parallel between both devices.

If I swap the queues execution (VectorAddOffset, first gpu then cpu with DEVS_ORDER_CPU_GPU to 0):

$ DEVS=3 /usr/bin/time ./vector-add

Running on device1: Intel(R) Gen9

Running on device2: Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Vector size: 327680

[0]: 0 + 0 = 0

[1]: 1 + 1 = 2

[2]: 2 + 2 = 5

...

[327679]: 327679 + 327679 = 655357

Vector add successfully completed on device.

diff vector add1: 1.18999

diff vector add2: 1.58355

diff trycatch end: 1.58394

diff end: 1.58394

e1: 0.23140

e2: 0.82824

0 Submitted1: 1.18879

1 Submitted2: 1.58198

2 Waited1: 1.58358

3 Waited2: 1.58359

1.57user 0.17system 0:01.77elapsed 98%CPU (0avgtext+0avgdata 252960maxresident)k

0inputs+0outputs (0major+34734minor)pagefaults 0swaps

Not really different results from the previous order.

It is really important to know the "official" way to do this by OneAPI, what is the most performant way. Since I will compare with pure OpenCL. How to do parallel offload appropriately?

I thought about using C++ threads and each thread with an independent queue, but better to know directly from Intel.

Finally, just change the preprocessor var to ARRAY_SIZE_SIGNAL11 to see the segfault (using a intel cpu i5 +gpu intel graphics).

$ DEVS=1 /usr/bin/time ./vector-add

Command terminated by signal 11

0.01user 0.00system 0:00.96elapsed 1%CPU (0avgtext+0avgdata 7560maxresident)k

0inputs+0outputs (0major+491minor)pagefaults 0swaps

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Possible explanation for your questions:

- For the kernels to run concurrently on multiple devices (or even on the same device for that matter), there must be no data dependency amongst them. Accessors are responsible for creating Directed Acyclic Graph(DAG) to represent data dependencies across various kernels(within the queue as well as across queues). If there is no data dependency found, the execution will take place concurrently across multiple devices(or within the same device as well). In your case, concurrent kernel execution is limited by host side(since the host execution is serial). I'd suggest you to modify the code in this way, in order to achieve concurrency.

#include <CL/sycl.hpp> #include <iostream> int main() { const int N = 1024; auto R = sycl::range<1>(N/2); //Half Range for split std::vector<int> v1(N); //Input Vector 1 std::vector<int> v2(N); //Input Vector 2 for(int i=0;i<N;i++) { v1[i] = i; v2[i] = N-i; } sycl::queue cpuQ(sycl::cpu_selector{}); //CPU Queue sycl::queue gpuQ(sycl::gpu_selector{}); //GPU Queue { //Splitting input data into 2 halves for CPU and iGPU sycl::buffer<int,1> bufgpuv1(v1.data(), R); sycl::buffer<int,1> bufgpuv2(v2.data(), R); sycl::buffer<int,1> bufcpuv1((v1.data()+(N/2)), R); sycl::buffer<int,1> bufcpuv2((v2.data()+(N/2)), R); std::cout<<"Running on: "<<gpuQ.get_device().get_info<sycl::info::device::name>()<<" and " <<cpuQ.get_device().get_info<sycl::info::device::name>()<<"\n"; gpuQ.submit([&](sycl::handler &h){ auto agpuv1 = bufgpuv1.get_access<sycl::access::mode::read_write>(h); auto agpuv2 = bufgpuv2.get_access<sycl::access::mode::read_write>(h); h.parallel_for (R, [=](sycl::id<1> i){ agpuv1[i]+=agpuv2[i]; //Stpring output in Input 1 }); }); cpuQ.submit([&](sycl::handler &h){ auto acpuv1 = bufcpuv1.get_access<sycl::access::mode::read_write>(h); auto acpuv2 = bufcpuv2.get_access<sycl::access::mode::read_write>(h); h.parallel_for(R, [=](sycl::id<1> i){ acpuv1[i]+=acpuv2[i]; //Storing output in Input 1 }); }); } /*std::cout<<"Sample values on GPU and CPU\n"; for(int i =0; i < 10; i++) std::cout<<v1[i]<<" "<<v1[N/2+i]<<"\n";*/ std::vector<int> validate_vector(N,N); //Validating validate_vector==v1?std::cout<<"Vector addition: Success\n":std::cout<<"Vector addition: Failure\n"; return 0; } - Every device comes with its own maximum allocatable memory, which is fixed by OpenCL/SYCL standards. Essentially, it is the maximum memory that can be allocated on a single data structure(array in your case). To know about the limits, run "clinfo" command on the terminal. For instance on Intel iGPU(Gen 9), you should see something like:

Max memory allocation 4294959104 (4GiB)

In your case, its probably exceeding this limit(4 GB)

Let me know if you face any issues with the code.

Regards,

Rahul

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, Rahul.

Thanks for the code example, really. I wrote the answer but it was suddenly authenticated and I lost everything... I was writing for 2h, carefully, so I will summarize:

1.1. I modified the example to study the performance.

#include <CL/sycl.hpp>

#include <iostream>

void cpu(int N, bool check) {

std::cout << "CPU N: " << N << " size: " << sizeof(N) * N << " B\n";

auto R = sycl::range<1>(N);

std::vector<int> v1(N); //Input Vector 1

std::vector<int> v2(N); //Input Vector 2

for (int i = 0; i < N; i++) {

v1[i] = i;

v2[i] = N - i;

}

sycl::queue cpuQ(sycl::cpu_selector{});

{

sycl::buffer<int, 1> bufcpuv1(v1.data(), R);

sycl::buffer<int, 1> bufcpuv2(v2.data(), R);

std::cout << "Running on: " << cpuQ.get_device().get_info<sycl::info::device::name>() << "\n";

cpuQ.submit([&](sycl::handler &h) {

auto acpuv1 = bufcpuv1.get_access<sycl::access::mode::read_write>(h);

auto acpuv2 = bufcpuv2.get_access<sycl::access::mode::read_write>(h);

h.parallel_for(R, [=](sycl::id<1> i) {

acpuv1[i] += acpuv2[i]; //Storing output in Input 1

});

});

}

/*std::cout<<"Sample values on GPU and CPU\n";

for(int i =0; i < 10; i++)

std::cout<<v1[i]<<" "<<v1[N/2+i]<<"\n";*/

if (check) {

std::vector<int> validate_vector(N, N); //Validating

validate_vector == v1 ? std::cout << "Vector addition: Success\n" : std::cout << "Vector addition: Failure\n";

}

}

void gpu(int N, bool check) {

std::cout << "GPU N: " << N << " size: " << sizeof(N) * N << " B\n";

auto R = sycl::range<1>(N);

std::vector<int> v1(N); //Input Vector 1

std::vector<int> v2(N); //Input Vector 2

for (int i = 0; i < N; i++) {

v1[i] = i;

v2[i] = N - i;

}

sycl::queue gpuQ(sycl::gpu_selector{});

{

sycl::buffer<int, 1> bufgpuv1(v1.data(), R);

sycl::buffer<int, 1> bufgpuv2(v2.data(), R);

std::cout << "Running on: " << gpuQ.get_device().get_info<sycl::info::device::name>() << "\n";

gpuQ.submit([&](sycl::handler &h) {

auto agpuv1 = bufgpuv1.get_access<sycl::access::mode::read_write>(h);

auto agpuv2 = bufgpuv2.get_access<sycl::access::mode::read_write>(h);

h.parallel_for(R, [=](sycl::id<1> i) {

agpuv1[i] += agpuv2[i]; //Storing output in Input 1

});

});

}

/*std::cout<<"Sample values on GPU and CPU\n";

for(int i =0; i < 10; i++)

std::cout<<v1[i]<<" "<<v1[N/2+i]<<"\n";*/

if (check) {

std::vector<int> validate_vector(N, N); //Validating

validate_vector == v1 ? std::cout << "Vector addition: Success\n" : std::cout << "Vector addition: Failure\n";

}

}

void cpugpu(int N, float prop_cpu, bool check) {

std::cout << "CPUGPU N: " << N << " size: " << sizeof(N) * N << " B\n";

int part_cpu = N * prop_cpu; // 0.0 > prop_cpu < 1.0

int part_gpu = N - part_cpu;

std::cout << " CPU with N: " << part_cpu << " prop: " << 100 * (prop_cpu) << " %\n";

std::cout << " GPU with N: " << part_gpu << " prop: " << 100 * (1 - prop_cpu) << " %\n";

auto Rcpu = sycl::range<1>(part_cpu);

auto Rgpu = sycl::range<1>(part_gpu);

std::vector<int> v1(N); //Input Vector 1

std::vector<int> v2(N); //Input Vector 2

for (int i = 0; i < N; i++) {

v1[i] = i;

v2[i] = N - i;

}

sycl::queue cpuQ(sycl::cpu_selector{}); //CPU Queue

sycl::queue gpuQ(sycl::gpu_selector{}); //GPU Queue

{

sycl::buffer<int, 1> bufgpuv1(v1.data(), Rgpu);

sycl::buffer<int, 1> bufgpuv2(v2.data(), Rgpu);

sycl::buffer<int, 1> bufcpuv1((v1.data() + (part_gpu)), Rcpu);

sycl::buffer<int, 1> bufcpuv2((v2.data() + (part_gpu)), Rcpu);

std::cout << "Running on: " << gpuQ.get_device().get_info<sycl::info::device::name>() << " and "

<< cpuQ.get_device().get_info<sycl::info::device::name>() << "\n";

gpuQ.submit([&](sycl::handler &h) {

auto agpuv1 = bufgpuv1.get_access<sycl::access::mode::read_write>(h);

auto agpuv2 = bufgpuv2.get_access<sycl::access::mode::read_write>(h);

h.parallel_for(Rgpu, [=](sycl::id<1> i) {

agpuv1[i] += agpuv2[i]; //Stpring output in Input 1

});

});

cpuQ.submit([&](sycl::handler &h) {

auto acpuv1 = bufcpuv1.get_access<sycl::access::mode::read_write>(h);

auto acpuv2 = bufcpuv2.get_access<sycl::access::mode::read_write>(h);

h.parallel_for(Rcpu, [=](sycl::id<1> i) {

acpuv1[i] += acpuv2[i]; //Storing output in Input 1

});

});

}

/*std::cout<<"Sample values on GPU and CPU\n";

for(int i =0; i < 10; i++)

std::cout<<v1[i]<<" "<<v1[N/2+i]<<"\n";*/

if (check) {

std::vector<int> validate_vector(N, N); //Validating

validate_vector == v1 ? std::cout << "Vector addition: Success\n" : std::cout << "Vector addition: Failure\n";

}

}

int main(int argc, char *argv[]) {

std::string type;

std::string n;

bool check = false;

float prop = 0.5;

int N = 0;

if (argc > 1) {

type = argv[1];

}

if (argc > 2) {

N = std::stoi(argv[2]);

}

if (argc > 3) {

prop = std::stof(argv[3]);

if (prop <= 0.0 || prop >= 1.0){

std::cout << "prop cpu should be between 0.0 and 1.0 (both exclusive)\n";

return 1;

}

}

if (argc > 4) {

check = std::string(argv[4]) == "check";

}

bool valid = false;

if (N > 0) {

if (type == "cpugpu") {

cpugpu(N, prop, check);

valid = true;

} else if (type == "cpu") {

cpu(N, check);

valid = true;

} else if (type == "gpu") {

gpu(N, check);

valid = true;

}

}

if (!valid) {

std::cout << "usage: (cpu|gpu|cpugpu) <N> [<prop float> (check)]\n";

std::cout << " prop cpu: 0.5 (default)\n";

return 1;

}

return 0;

}

I run some experiments, first, comparing cpu with gpu and cpugpu:

$ for dev in cpu gpu cpugpu; do /usr/bin/time ./vector-add $dev $(( 1024 * 1024 * 200 )); done

CPU N: 209715200 size: 838860800 B

Running on: Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

3.92user 0.60system 0:03.99elapsed 113%CPU (0avgtext+0avgdata 1815676maxresident)k

0inputs+0outputs (0major+426952minor)pagefaults 0swaps

GPU N: 209715200 size: 838860800 B

Running on: Intel(R) Gen9

3.53user 1.35system 0:05.02elapsed 97%CPU (0avgtext+0avgdata 1793872maxresident)k

57480inputs+0outputs (112major+433506minor)pagefaults 0swaps

CPUGPU N: 209715200 size: 838860800 B

CPU with N: 104857600 prop: 50 %

GPU with N: 104857600 prop: 50 %

Running on: Intel(R) Gen9 and Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

4.12user 0.97system 0:04.83elapsed 105%CPU (0avgtext+0avgdata 1885656maxresident)k

0inputs+0outputs (0major+441738minor)pagefaults 0swaps

As we can see, the CPU < CPUGPU < GPU. Since the CPUGPU is far from optimal, I try to search better proportions than 50%50%. New experiment:

$ for cpuprop in 0.3 0.4 0.5 0.6 0.7 0.8; do /usr/bin/time ./vector-add cpugpu $(( 1024 * 1024 * 200 )) $cpuprop check; done

CPUGPU N: 209715200 size: 838860800 B

CPU with N: 62914564 prop: 30 %

GPU with N: 146800636 prop: 70 %

Running on: Intel(R) Gen9 and Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Vector addition: Success

4.79user 1.43system 0:05.99elapsed 103%CPU (0avgtext+0avgdata 2705084maxresident)k

0inputs+0outputs (0major+646545minor)pagefaults 0swaps

CPUGPU N: 209715200 size: 838860800 B

CPU with N: 83886080 prop: 40 %

GPU with N: 125829120 prop: 60 %

Running on: Intel(R) Gen9 and Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Vector addition: Success

4.74user 1.34system 0:05.90elapsed 103%CPU (0avgtext+0avgdata 2704356maxresident)k

0inputs+0outputs (0major+646538minor)pagefaults 0swaps

CPUGPU N: 209715200 size: 838860800 B

CPU with N: 104857600 prop: 50 %

GPU with N: 104857600 prop: 50 %

Running on: Intel(R) Gen9 and Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Vector addition: Success

4.82user 1.22system 0:05.78elapsed 104%CPU (0avgtext+0avgdata 2704516maxresident)k

0inputs+0outputs (0major+646539minor)pagefaults 0swaps

CPUGPU N: 209715200 size: 838860800 B

CPU with N: 125829128 prop: 60 %

GPU with N: 83886072 prop: 40 %

Running on: Intel(R) Gen9 and Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Vector addition: Success

4.89user 1.19system 0:05.77elapsed 105%CPU (0avgtext+0avgdata 2704872maxresident)k

0inputs+0outputs (0major+646546minor)pagefaults 0swaps

CPUGPU N: 209715200 size: 838860800 B

CPU with N: 146800640 prop: 70 %

GPU with N: 62914560 prop: 30 %

Running on: Intel(R) Gen9 and Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Vector addition: Success

4.85user 1.13system 0:05.62elapsed 106%CPU (0avgtext+0avgdata 2704576maxresident)k

0inputs+0outputs (0major+646542minor)pagefaults 0swaps

CPUGPU N: 209715200 size: 838860800 B

CPU with N: 167772160 prop: 80 %

GPU with N: 41943040 prop: 20 %

Running on: Intel(R) Gen9 and Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Vector addition: Success

4.87user 1.10system 0:05.52elapsed 108%CPU (0avgtext+0avgdata 2705004maxresident)k

0inputs+0outputs (0major+646544minor)pagefaults 0swaps

Now, the more proportion for the CPU, the better, but still far from the good results in a single device. Also, it is quite interesting that the time is higher than in the previous experiment (why?)

What is really important, is to know why doing CPU+GPU has bad results.

You mentioned the DAG, and if it finds no dependencies, it will run in parallel. Is there any option to see if was or not detected any dependencies? (maybe compilation metadata/logs).

Because, if these are the expected results, I would say that there are two options:

- They are not running in parallel. First the chunk for the GPU, then the rest of the vector for the CPU. The time has been increased due to overheads using 2 queues, sync, submits, etc.

- They are running in parallel in both devices, but the synchronizations are so bad that the overheads are higher than running in a single device.

Am I missing anything?

1.2. Since you provided another code to show the real parallelism (DAG not detecting dependencies), I would like to know what was wrong in my original example (to learn how to avoid buffer dependencies), since I have also different buffer regions (ptr + offset) and ranges?

auto VectorAddOffset(queue &q, const IntArray &a_array, const IntArray &b_array,

IntArray &sum_parallel, size_t offset, size_t size, int dev) {

size_t part_size = size;

range<1> num_items{part_size};

buffer a_buf(a_array);

buffer b_buf(b_array);

auto *ptr = sum_parallel.data();

auto *ptr_offset = (ptr + (offset * sizeof(int)));

buffer sum_buf(ptr, range<1>(sum_parallel.size()));

event ev = q.submit([&](handler &h) {

auto a = a_buf.get_access<access::mode::read>(h);

auto b = b_buf.get_access<access::mode::read>(h);

auto sum = sum_buf.get_access<access::mode::write>(h);

h.parallel_for<class offset1>(range<1>(part_size), id<1>(0), [=](id<1> idx) {

auto idx_offset = idx[0] + offset;

int value = a[idx_offset] * b[idx_offset] / (b[idx_offset] + 1) + a[idx_offset] + b[idx_offset] / (b[idx_offset] + 2);

sum[idx_offset] = value + a[idx_offset] + b[idx_offset] / (b[idx_offset] + 2);

});

});

if (dev == 1) {

ts_checkpoint(CheckpointName::Submitted1);

} else {

ts_checkpoint(CheckpointName::Submitted2);

}

return ev;

}

// ....

void main(){

// ...

try {

queue q(d_selector, dpc::exception_handler, prop_list);

queue q2(d2_selector, dpc::exception_handler, prop_list);

std::cout << "Running on device1: "

<< q.get_device().get_info<info::device::name>() << "\n";

std::cout << "Running on device2: "

<< q2.get_device().get_info<info::device::name>() << "\n";

std::cout << "Vector size: " << a.size() << "\n";

e1 = VectorAddOffset(q, a, b, sum_parallel, 0, array_size / 2, 1);

end1 = std::chrono::high_resolution_clock::now();

e2 = VectorAddOffset(q2, a, b, sum_parallel, array_size / 2, array_size / 2, 2);

end2 = std::chrono::high_resolution_clock::now();

q.wait_and_throw();

ts_checkpoint(CheckpointName::Waited1);

q2.wait_and_throw();

ts_checkpoint(CheckpointName::Waited2);

} catch (exception const &e) {

std::cout << "An exception is caught for vector add.\n";

std::terminate();

}

// ...

}

2. Regarding the max buffer allocation, clinfo gives 1.596GiB for GPU and 1.915GiB for CPU. If I run the 1.1 example directly in GPU or CPU independently, the sizes are near to the maximum buffer allocation. That is good. But if I run in the CPUGPU, with 50% proportion (0.5) but doubling the size (or even less than twice), I cannot run the same amount per device:

$ for dev in cpu; do /usr/bin/time ./vector-add $dev $(( 1024 * 1024 * 490 )); done

CPU N: 513802240 size: 2055208960 B

Running on: Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

9.00user 1.40system 0:09.07elapsed 114%CPU (0avgtext+0avgdata 4191944maxresident)k

0inputs+0outputs (0major+1020859minor)pagefaults 0swaps

$ for dev in gpu; do /usr/bin/time ./vector-add $dev $(( 1024 * 1024 * 396 )); done

GPU N: 415236096 size: 1660944384 B

Running on: Intel(R) Gen9

6.95user 3.07system 0:10.84elapsed 92%CPU (0avgtext+0avgdata 3380752maxresident)k

429968inputs+0outputs (1270major+835467minor)pagefaults 0swaps

$ for dev in cpugpu; do /usr/bin/time ./vector-add $dev $(( 1024 * 1024 * 600 )); done

CPUGPU N: 629145600 size: 2516582400 B

CPU with N: 314572800 prop: 50 %

GPU with N: 314572800 prop: 50 %

Running on: Intel(R) Gen9 and Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

Command terminated by signal 9

8.65user 2.64system 0:11.37elapsed 99%CPU (0avgtext+0avgdata 5100868maxresident)k

0inputs+0outputs (0major+1253569minor)pagefaults 0swaps

$ for dev in cpugpu; do /usr/bin/time ./vector-add $dev $(( 1024 * 1024 * 520 )); done

CPUGPU N: 545259520 size: 2181038080 B

CPU with N: 272629760 prop: 50 %

GPU with N: 272629760 prop: 50 %

Running on: Intel(R) Gen9 and Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz

9.43user 2.87system 0:12.70elapsed 96%CPU (0avgtext+0avgdata 4445584maxresident)k

586392inputs+0outputs (1758major+1097574minor)pagefaults 0swaps

Host memory used: v1 and v2 buffers, N * 4.

Device memory: sycl buffers v1 and v2, 50% each => N * 4 per device

So, if using the GPU only, with N = 1024 * 1024 * 396 => 1.54GiB (of 1.59). In CPU, N = 1024 * 1024 * 490 => 1.914 GiB (of 1.915).

But when using CPU and GPU, using N = 520 => CPU and GPU uses the same N part of 260 each, 1.01GiB. And it works, but when we write N = 600, the part is 300, 1.17GiB each and it explodes. Am I missing something?

Thank you for your time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thanks for providing your findings. There is no data dependency in your code and ideally the kernel execution should be concurrent. Let me investigate and get back to you.

Thanks,

Rahul

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @RN1 ,

Apologies for the late response.

Under the current implementation, CPU and iGPU devices have different contexts (due to different OpenCL platforms). As a result, the context creation/context sync overhead could be quite significant, considering the workload of your application.

The vector add application which you are trying to run does not utilize much of the iGPU (even if you try to increase it's size). In other words, the computation which you have been trying to perform is insignificant when compared to the context creation/context sync overhead.

You may try to increase your workload to mitigate the effect of context creation/context sync overheads and check for concurrency on Vtune profiler with the help of the following command.

vtune -collect-with runsa -knob collectMemBandwidth=true -knob collectPCIeBandwidth=true -knob enable-system-cswitch=true -knob dram-bandwidth-limits=true -knob event-config=CPU_CLK_UNHALTED.THREAD:sa=3500000,CPU_CLK_UNHALTED.REF_TSC:sample:sa=3500000,INST_RETIRED.ANY:sample:sa=3500000,CPU_CLK_UNHALTED.REF_XCLK:sa=100003,CPU_CLK_UNHALTED.ONE_THREAD_ACTIVE:sa=100003 -knob enable-gpu-usage=true -knob gpu-counters-mode=global-local-accesses -knob collect-programming-api=true -knob enable-gpu-level-zero=true -knob enable-driverless-collection=false -- ./conc_chk

Here ./conc_chk is your executable.

I'm also a attaching a sample program with compute intensive kernel. Please note that I've split the input vectors for buffer creation but this shouldn't matter. The same input vector can also be used for creating multiple buffers with half range (like in the above code snippet which I had provided).

To compile:

dpcpp -g conc_chk.cpp -o conc_chk

Pass this executable to the above Vtune command to generate the reports.

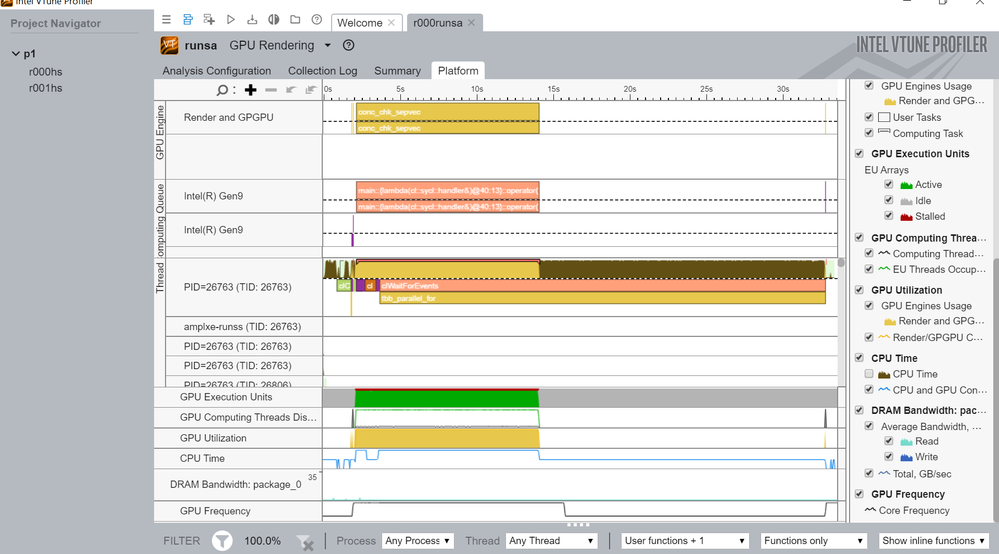

As you can see in the above screenshot, for almost close to 12 sec, the CPU and GPU ran concurrently for 50-50 split. (GPU execution units and CPU time graph's concurrency)

In your earlier code, the reason could be that, when you are trying to call VectorAddOffset function, the buffers are getting created within the function scope. So, as a result the host thread will wait until the buffer destructor gets called (Your host code is single threaded I see). Once the kernel execution completes, the data gets copied back to the host before buffer destructor is called. You can try increasing your workload and see if you are able to notice concurrency using Vtune profiler.

Coming to your 2nd question regarding memory allocation:

A point to note here is that iGPU doesn't have its own dedicated memory. The memory allocation of iGPU happens on the host. I do not know the exact reason for this kind of behavior. I will investigate further and get back to you on this.

Hope this helps.

--Rahul

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @RN1 ,

I don't see any issue with max memory allocation on CPU as well as iGPU. Kindly refer to the sample code that I have attached.

I have used the max limit on both CPU as well as iGPU. Created 2 different queues. Multiplied each element by 10 and compared the results towards the end. The results are correct.

Let me know if it helps.

Regards,

Rahul

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I have not heard back from you, so I will go ahead and close this thread. Intel will no longer monitor this thread. Feel free to post a new question if you still have any issues.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

Sorry for my late response, but I will try. Something quick, I tried to reproduce these results and I got some problems regarding vtune and DPC++/OpenCL.

I have all the permissions and the sepdk driver loaded, also the TRACEPOINTS=y in the kernel. If I run vtune as an user, it is freezed here:

$ vtune -collect-with runsa -knob collectMemBandwidth=true -knob collectPCIeBandwidth=true -knob enable-system-cswitch=true -knob dram-bandwidth-limits=true -knob event-config=CPU_CLK_UNHALT

ED.THREAD:sa=3500000,CPU_CLK_UNHALTED.REF_TSC:sample:sa=3500000,INST_RETIRED.ANY:sample:sa=3500000,CPU_CLK_UNHALTED.REF_XCLK:sa=100003,CPU_CLK_UNHALTED.ONE_THREAD_ACTIVE:sa=100003 -knob enable-gpu-usage=true -kno

b gpu-counters-mode=global-local-accesses -knob collect-programming-api=true -knob enable-gpu-level-zero=true -knob enable-driverless-collection=false -- ./build/vector-add cpugpu $(( 20480000 )) 1024000 0.9 che

ck;

vtune: Warning: Can't find 32-bit pin tool. 32-bit processes will not be profiled.

vtune: Peak bandwidth measurement started.

vtune: Peak bandwidth measurement finished.

vtune: Collection started. To stop the collection, either press CTRL-C or enter from another console window: vtune -r /home/radon/vector-add/r006runsa -command stop.

vtune: Warning: [2020.09.17 10:37:21] /usr/lib/libc.so.6 _init() instrumentation failed. Profiling data may be missing.

vcs/tpss2/tpss/src/tpss/runtime/linux/exe/tpss_deepbind.c:235 tpss_deepbind_notify_on_pthread_loaded: Assertion '((tpss_pthread_key_create_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_key_create)]))->trampoline)) != ((void *)0) && ((tpss_pthread_setspecific_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_setspecific)]))->trampoline)) != ((void *)0) && ((tpss_pthread_getspecific_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_getspecific)]))->trampoline)) != ((void *)0) && ((tpss_pthread_getattr_np_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_getattr_np)]))->trampoline)) != ((void *)0) && ((tpss_pthread_attr_getstack_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_attr_getstack)]))->trampoline)) != ((void *)0) && ((tpss_pthread_attr_getstacksize_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_attr_getstacksize)]))->trampoline)) != ((void *)0) && ((tpss_pthread_attr_setstack_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_attr_setstack)]))->trampoline)) != ((void *)0) && ((tpss_pthread_attr_setstacksize_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_attr_setstacksize)]))->trampoline)) != ((void *)0) && ((tpss__pthread_cleanup_push_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi__pthread_cleanup_push)]))->trampoline)) != ((void *)0) && ((tpss__pthread_cleanup_pop_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi__pthread_cleanup_pop)]))->trampoline)) != ((void *)0)' failed.

On the other side, if I run with sudo:

$ sudo vtune -collect-with runsa -knob collectMemBandwidth=true -knob collectPCIeBandwidth=true -knob enable-system-cswitch=true -knob dram-bandwidth-limits=true -knob event-config=CPU_CLK_UNHALTED.THREAD:sa=3500000,CPU_CLK_UNHALTED.REF_TSC:sample:sa=3500000,INST_RETIRED.ANY:sample:sa=3500000,CPU_CLK_UNHALTED.REF_XCLK:sa=100003,CPU_CLK_UNHALTED.ONE_THREAD_ACTIVE:sa=100003 -knob enable-gpu-usage=true -knob gpu-counters-mode=global-local-accesses -knob collect-programming-api=true -knob enable-gpu-level-zero=true -knob enable-driverless-collection=false -- ./build/vector-add cpugpu $(( 20480000 )) 1024000 0.9 check; time

real 0m0.000s

user 0m0.000s

sys 0m0.000s

[sudo] password for radon:

vtune: Warning: Can't find 32-bit pin tool. 32-bit processes will not be profiled.

vtune: Peak bandwidth measurement started.

vtune: Peak bandwidth measurement finished.

vtune: Collection started. To stop the collection, either press CTRL-C or enter from another console window: vtune -r /home/user/vector-add/r005runsa -command stop.

./build/vector-add: error while loading shared libraries: libsycl.so.1: cannot open shared object file: No such file or directory

vtune: Collection stopped.

vtune: Using result path `/home/user/vector-add/r005runsa'

vtune: Executing actions 20 % Resolving information for `ld-2.32.so'

vtune: Warning: Cannot locate debugging information for file `/usr/lib/ld-2.32.so'.

vtune: Warning: Cannot locate file `vmlinux'.

vtune: Executing actions 21 % Resolving information for `libtpsstool.so'

vtune: Warning: Cannot locate debugging information for file `/opt/intel/vtune_profiler_2020.2.0.610396/lib64/libtpsstool.so'.

vtune: Warning: Cannot locate debugging information for the Linux kernel. Source-level analysis will not be possible. Function-level analysis will be limited to kernel symbol tables. See the Enabling Linux Kernel Analysis topic in the product online help for instructions.

vtune: Executing actions 75 % Generating a report

Collection and Platform Info

----------------------------

Parameter r005runsa

------------------------ ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Application Command Line ./build/vector-add "cpugpu" "20480000" "1024000" "0.9" "check"

Computer Name lhp

Result Size 11858123

Collection start time 08:34:20 17/09/2020 UTC

Collection stop time 08:34:20 17/09/2020 UTC

Collector Type Event-based sampling driver

CPU

---

Parameter r005runsa

--------------------------------- -----------------------------------------

Name Intel(R) Processor code named Skylake ULT

Frequency 2399997738

Logical CPU Count 4

Max DRAM Single-Package Bandwidth 16000000000.000

GPU

---

Parameter r005runsa

------------------- -----------------

Name

Vendor Intel Corporation

EU Count 24

Max EU Thread Count 7

Max Core Frequency 1000000000

GPU OpenCL Info

---------------

Parameter r005runsa

------------------- ---------

Version

Max Compute Units

Max Work Group Size

Local Memory

SVM Capabilities

Summary

-------

Elapsed Time: 0.097

Paused Time: 0.0

CPU Time: 0.098

Average CPU Utilization: 0.950

CPI Rate: 1.261

Average DRAM Bandwidth

----------------------

Package Total, GB/sec:Self

--------- ------------------

package_0 4.444

Event summary

-------------

Hardware Event Type Hardware Event Count:Self Hardware Event Sample Count:Self Events Per Sample Precise:Self

---------------------------------- ------------------------- -------------------------------- ----------------- ------------

INST_RETIRED.ANY 161000000 46 3500000 False

CPU_CLK_UNHALTED.THREAD 203000000 58 3500000 False

CPU_CLK_UNHALTED.REF_TSC 234500000 67 3500000 False

CPU_CLK_UNHALTED.REF_XCLK 2400072 24 100003 False

CPU_CLK_UNHALTED.ONE_THREAD_ACTIVE 1800054 18 100003 False

Uncore Event summary

--------------------

Uncore Event Type Uncore Event Count:Self

------------------------ -----------------------

GpuTime 0

GpuCoreClocks 0

AvgGpuCoreFrequencyMHz 0

GpuBusy 0

VsThreads 0

HsThreads 0

DsThreads 0

GsThreads 0

PsThreads 0

CsThreads 0

EuActive 0

EuStall 0

EuAvgIpcRate 0

EuFpuBothActive 0

Fpu0Active 0

Fpu1Active 0

EuSendActive 0

EuThreadOccupancy 0

RasterizedPixels 0

HiDepthTestFails 0

EarlyDepthTestFails 0

SamplesKilledInPs 0

PixelsFailingPostPsTests 0

SamplesWritten 0

SamplesBlended 0

SamplerTexels 0

SamplerTexelMisses 0

SlmBytesRead 0

SlmBytesWritten 0

ShaderMemoryAccesses 0

ShaderAtomics 0

L3ShaderThroughput 0

ShaderBarriers 0

TypedBytesRead 0

TypedBytesWritten 0

UntypedBytesRead 0

UntypedBytesWritten 0

GtiReadThroughput 0

GtiWriteThroughput 0

UNC_IMC_DRAM_DATA_READS 4371981

UNC_IMC_DRAM_DATA_WRITES 2376939

GPU Utilization

---------------

GPU Engine GPU Time:Self

----------------- -------------

Render and GPGPU 0.027

Blitter 0.0

Video Codec 0.0

Video Enhancement 0.0

vtune: Executing actions 100 % done

So, without sudo, it never finishes, ftrace is kept in use and I need to reboot the machine. But with sudo it gives that 'sycl.so' message, and later, although I can see the vtune panels and windows, I cannot see more than 97-100ms of execution, and there are no Command Queue horizontal graphs (it is not listed).

It happens the same if I execute directly with vtune-gui and execute different analysis (eg. GPU Compute/Media Hotspots).

Example of GPU Rendering > Platform for this 1s execution (only listed 97ms):

Do you know what could be wrong?

If I try to execute directly from a root account:

# vtune -collect-with runsa -knob collectMemBandwidth=true -knob collectPCIeBandwidth=true -knob enable-system-cswitch=true -knob dram-bandwidth-limits=true -knob event-config=CPU_CLK_UNHALTED.THREAD:sa=3500000,CPU_CLK_UNHALTED.REF_TSC:sample:sa=3500000,INST_RETIRED.ANY:sample:sa=3500000,CPU_CLK_UNHALTED.REF_XCLK:sa=100003,CPU_CLK_UNHALTED.ONE_THREAD_ACTIVE:sa=100003 -knob enable-gpu-usage=true -knob gpu-counters-mode=global-local-accesses -knob collect-programming-api=true -knob enable-gpu-level-zero=true -knob enable-driverless-collection=false -- ./build/vector-add cpugpu $(( 20480000 )) 1024000 0.9 check

vtune: Warning: Can't find 32-bit pin tool. 32-bit processes will not be profiled.

vtune: Peak bandwidth measurement started.

vtune: Peak bandwidth measurement finished.

vtune: Collection started. To stop the collection, either press CTRL-C or enter from another console window: vtune -r /home/radon/vector-add/r010runsa -command stop.

vtune: Warning: [2020.09.17 12:23:41] /usr/lib/libc.so.6 _init() instrumentation failed. Profiling data may be missing.

vcs/tpss2/tpss/src/tpss/runtime/linux/exe/tpss_deepbind.c:235 tpss_deepbind_notify_on_pthread_loaded: Assertion '((tpss_pthread_key_create_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_key_create)]))->trampoline)) != ((void *)0) && ((tpss_pthread_setspecific_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_setspecific)]))->trampoline)) != ((void *)0) && ((tpss_pthread_getspecific_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_getspecific)]))->trampoline)) != ((void *)0) && ((tpss_pthread_getattr_np_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_getattr_np)]))->trampoline)) != ((void *)0) && ((tpss_pthread_attr_getstack_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_attr_getstack)]))->trampoline)) != ((void *)0) && ((tpss_pthread_attr_getstacksize_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_attr_getstacksize)]))->trampoline)) != ((void *)0) && ((tpss_pthread_attr_setstack_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_attr_setstack)]))->trampoline)) != ((void *)0) && ((tpss_pthread_attr_setstacksize_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi_pthread_attr_setstacksize)]))->trampoline)) != ((void *)0) && ((tpss__pthread_cleanup_push_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi__pthread_cleanup_push)]))->trampoline)) != ((void *)0) && ((tpss__pthread_cleanup_pop_call_t)(((((tpss_probe_t*)g_tpss_probes_table) + g_tpss_pt_id[(tpss_pi__pthread_cleanup_pop)]))->trampoline)) != ((void *)0)' failed.

So, I am a bit lost here.

I am running VTune 2020 Update 2 but this is appearing...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page