Is there any specific oneAPI macro (or other compile-time construct, since macros seem to be interpreted at the dpcpp compilation step) to target a given backend/platform (GPU, CPU) within a JIT-compiled kernel?

Something like:

#ifdef ON_CPU
// some code that does not compile on GPU (or is optimized for CPU)
#elif ON_GPU
// an alternative for the GPU
#else
// throw?
#endif

Hi,

Thanks for reaching out to us.

>>Is there any specific oneAPI macro..

Please find below the sample code and the steps we followed to target specific devices.

vec_add.cpp:

#include <CL/sycl.hpp>
#include <iostream>
#include <vector>

using namespace sycl;

// Named constant instead of "#define size", which would clash with any later use of the identifier size.
constexpr int N = 1024;

int main() {
  std::vector<int> A(N, N), B(N, N), C(N, 0);

  {
    range<1> R(N);
    buffer<int, 1> buffA(A.data(), R);
    buffer<int, 1> buffB(B.data(), R);
    buffer<int, 1> buffC(C.data(), R);

#if defined(CPU)
    // Create only the queue that is used, so the CPU build also runs on machines without a GPU.
    queue cpuQ(cpu_selector{});
    std::cout << "Running on: " << cpuQ.get_device().get_info<sycl::info::device::name>() << "\n";
    cpuQ.submit([&](handler &cgh) {
      auto acc_buffA = buffA.get_access<access::mode::read>(cgh);
      auto acc_buffB = buffB.get_access<access::mode::read>(cgh);
      auto acc_buffC = buffC.get_access<access::mode::write>(cgh);
      cgh.parallel_for(R, [=](id<1> i) {
        acc_buffC[i] = acc_buffA[i] + acc_buffB[i];
      });
    });
#elif defined(GPU)
    queue gpuQ(gpu_selector{});
    std::cout << "Running on: " << gpuQ.get_device().get_info<sycl::info::device::name>() << "\n";
    gpuQ.submit([&](handler &cgh) {
      auto acc_buffA = buffA.get_access<access::mode::read>(cgh);
      auto acc_buffB = buffB.get_access<access::mode::read>(cgh);
      auto acc_buffC = buffC.get_access<access::mode::write>(cgh);
      cgh.parallel_for(R, [=](id<1> i) {
        acc_buffC[i] = acc_buffA[i] + acc_buffB[i];
      });
    });
#else
#error "Build with -DCPU or -DGPU"
#endif
  } // buffers are destroyed here, which copies the results back into C

  std::vector<int> vecValidate(N, 2 * N);
  std::cout << (C == vecValidate ? "Success\n" : "Failure\n");
  return 0;
}

Command to execute:

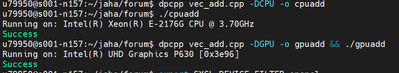

dpcpp vec_add.cpp -DCPU -o cpuadd && ./cpuadd

dpcpp vec_add.cpp -DGPU -o gpuadd && ./gpuadd

Please find the attached screenshot for more details:

We use the -D option to define a macro name while compiling the source code.

Please refer to the below link for more details:

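Alternatively, a specific backend and device can be targeted at run time, without recompiling, using the SYCL_DEVICE_FILTER environment variable (in later oneAPI releases it was deprecated in favor of ONEAPI_DEVICE_SELECTOR).

Syntax:

export SYCL_DEVICE_FILTER=backend:device_type:device_num

Possible values of backend are: host, level_zero, opencl

Possible values of device_type are: host, cpu, gpu, acc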

device_num is an integer index into the device enumeration shown by the sycl-ls utility, which lists the devices from all backends.


Thanks & Regards,

Noorjahan

Hi,

Has the information provided above helped? If so, could you please confirm whether we can close this thread from our end?

Thanks & Regards,

Noorjahan.

I understand that what I had in mind cannot be done; I will do it another way.

Thanks for the help.

Please close the thread.

Hi,

>>I understood that what I had in mind cannot be done

Could you please elaborate on your issue so that we can try to help you in another way?

Thanks & Regards,

Noorjahan.

Say I have a kernel that I want to run on both GPU and CPU, but for a small part of it I wish to use an optimized routine that will NOT compile on the other target. I do not want to duplicate all the code (as in your example), and I do not want to split my kernel.

This will not work:

auto kernel = [=](auto i,....) {
  //
  // a lot of code
  //
#ifdef ONGPU
  optimizedForGPU // may not parse on HOST
#else
  optimizedForCPU
#endif
  //
  // more code
  //
};

In any case my questions were:

1) Can macros be used in SYCL kernels to drive JIT compilation?

My understanding is: NO, they are resolved when the source is compiled, ahead of time (as in your example).

2) Is there any other mechanism in SYCL to compile part of a kernel for a specific target?

My understanding is: NO, there is no "special" mechanism in SYCL to perform conditional compilation at JIT time.

Thanks again for the support and please close the thread.

Hi,

>>Thanks again for the support. please close the thread

We have discussed this case with the concerned team, and they would like some more details. Could you please let us know whether there is a particular use case behind compiling part of the kernel for a specific target, so that we can check the possibilities internally and try to address your questions specifically?

Before closing this thread as per your request, we think your use case would also be a good reference for anybody looking at this topic with similar thoughts.

Thanks & Regards,

Noorjahan.

For instance, in a kernel that I run on either GPU or CPU under CUDA/nvcc, at some point I need to perform a sort, and I use __CUDA_ARCH__ to select either a bucket sort on the GPU (single block) or a quick sort on the CPU (single thread).

Hi,

Could you please provide a sample reproducer using __CUDA_ARCH__ so that we can understand it better?

Thanks & regards,

Noorjahan.

I am surprised this kind of issue has not been raised or encountered before.

Anyhow, please find examples here:

CUDA

Alpaka

Hi,

We are working on your issue. We will get back to you soon.

Could you please confirm whether you are using the NVRTC (CUDA runtime compilation) library in your application?

Thanks & Regards,

Noorjahan.

Hi,

We have reported this issue to the development team, and they are looking into it.

Thanks & Regards,

Noorjahan.
