Hi,

I am reading the guide Optimizing Memory Movement between Host and Accelerator (intel.com), which uses the "use_host_ptr" property to avoid data transfers between host and device. This seems to be exactly what I need for my application.

I copied the example code (and the other file) from oneAPI-samples/vec-buffer-host.cpp at master · oneapi-src/oneAPI-samples (github.com) and ran it on my machine with an Intel GPU. According to the post, all three addresses (on the host, in the buffer, and on the accelerator) should be the same when the use_host_ptr property is set.

Platform Name: Intel(R) Level-Zero

Platform Version: 1.3

Device Name: Intel(R) UHD Graphics [0x9b41]

Max Work Group: 256

Max Compute Units: 24

add1: buff memory address =0x7fef3450c000

add1: address of vector aa = 0x7fef3450c000

add1: dev addr = 0x7fef344a6000

However, only the buffer memory address and the address of the vector are the same; the dev addr is different.

Am I missing some settings? Thank you

Hi,

Thanks for reaching out to us.

Could you please try switching the backend to OpenCL with the command below? With OpenCL, the memory address should be the same in all three cases (on the host, in the buffer, and on the accelerator).

Linux: export SYCL_DEVICE_FILTER=opencl

Windows: set SYCL_DEVICE_FILTER=opencl

We have tried this from our end and it worked as expected.
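As a minimal sketch of the Linux step above, assuming a bash or sh session: the variable only affects programs started from the same shell after it is exported, so it can help to confirm the value before running the sample. The binary name `./vec-buffer-host` is a hypothetical name for the compiled sample, and the `sycl-ls` line assumes the oneAPI environment is already set up; both are left commented out.

```shell
# Select the OpenCL backend for programs launched from this shell session.
export SYCL_DEVICE_FILTER=opencl

# Confirm the value that child processes will inherit.
echo "SYCL_DEVICE_FILTER=${SYCL_DEVICE_FILTER}"

# Optional, if the oneAPI tools are on PATH: list the devices that are
# visible under the current filter.
# sycl-ls

# Run the sample from the same shell (hypothetical binary name).
# ./vec-buffer-host
```

If the echoed value is empty or differs from `opencl`, the export was likely made in a different shell than the one launching the program.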

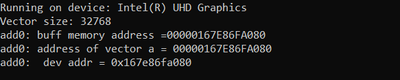

Please refer to the below screenshot for more details

Thanks & Regards,

Noorjahan

Hi,

Thanks for the confirmation.

As this issue has been resolved, we will no longer respond to this thread. If you need any additional information, please post a new question.

Thanks & Regards,

Noorjahan.
