Hello,

I have compiled my code using intel-oneapi-mpi@2021.5.0 on our institute supercomputer.

When I submit the job that runs the MPI executable, it gets killed if I set "export I_MPI_ASYNC_PROGRESS=1" to enable asynchronous progress control. I need this environment variable for the non-blocking allreduce (MPI_Iallreduce) that my code uses.

My code has been compiled using the following packages:

- module load spack/0.17

- . /home-ext/apps/spack/share/spack/setup-env.sh

- spack load intel-oneapi-compilers@2022.0.1

- spack load intel-oneapi-tbb

- spack load intel-oneapi-mkl

- spack load intel-oneapi-mpi@2021.5.0

As you can see, I am using intel-oneapi-mpi@2021.5.0, for which release and release_mt have been merged (https://www.intel.com/content/www/us/en/developer/articles/release-notes/mpi-library-release-notes-linux.html), so I don't need to source any other file. Why does my job get killed, then? My job runs fine with "export I_MPI_ASYNC_PROGRESS=0".

I am attaching the error and output files of the job. My job script follows:

#!/bin/bash

#SBATCH -N 6

#SBATCH --ntasks-per-node=48

#SBATCH --exclusive

#SBATCH --time=00:30:00

#SBATCH --job-name=ex2

#SBATCH --error=ex2.e%J

#SBATCH --output=ex2.o%J

##SBATCH --partition=small

module load spack/0.17

. /home-ext/apps/spack/share/spack/setup-env.sh

spack load intel-oneapi-compilers@2022.0.1

spack load intel-oneapi-tbb

spack load intel-oneapi-mkl

spack load intel-oneapi-mpi@2021.5.0

#export MCA param routed=direct

# // Below are Intel MPI specific settings //

export I_MPI_FALLBACK=disable

export I_MPI_FABRICS=ofi:ofi

export I_MPI_DEBUG=9

export I_MPI_ASYNC_PROGRESS=true

#export I_MPI_ASYNC_PROGRESS_THREADS=1

cd $SLURM_SUBMIT_DIR

nprocs=288

ulimit -aH

ulimit -c unlimited

ulimit -s unlimited

mpiexec.hydra -n $nprocs ./ex2 -m 80 -n 80 -ksp_monitor_short -ksp_type pipecg2 -pc_type jacobi

***************end of script*****************

Regards,

Manasi

Hi,

Thanks for reaching out to us.

Could you please provide the following details:

1. The operating system in use and its version.

2. The output of the following command, which reports CPU information:

lscpu

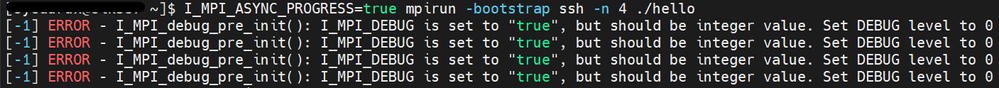

>>"export I_MPI_ASYNC_PROGRESS=true"

Your job script passes "true" as the value of I_MPI_ASYNC_PROGRESS, which may produce an error.

We recommend setting it as follows instead:

export I_MPI_ASYNC_PROGRESS=1
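A job script could catch a value such as "true" before the launch line is reached. The following is only a minimal sketch: `check_async_progress` is a hypothetical helper name, not part of Intel MPI, and it assumes that 0 and 1 (the two values used in this thread) are the only values you want to allow.

```shell
# Hypothetical guard: allow only the numeric values used in this thread.
# Returns 0 for "0" or "1"; prints a message and returns 1 for anything else.
check_async_progress() {
    case "$1" in
        0|1)
            return 0
            ;;
        *)
            echo "I_MPI_ASYNC_PROGRESS='$1' is not 0 or 1" >&2
            return 1
            ;;
    esac
}

# Possible use in a job script, before mpiexec.hydra is called:
#   check_async_progress "${I_MPI_ASYNC_PROGRESS:-0}" || exit 1
```

With the value from the original job script ("true"), the guard would stop the job early with a readable message instead of letting it reach the MPI launch.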

>>"Why does my job get killed, then? My job runs fine with "export I_MPI_ASYNC_PROGRESS=0"."

Could you please provide a sample reproducer so that we can investigate the issue on our end?

Thanks & Regards,

Santosh

Hi,

Thanks for the reply.

Previously I submitted the job to the compute nodes from a login node through the batch scheduler, as described in my earlier post. Since you asked for the OS version and lscpu output, I ran the same code directly on the login node from the command line, and I see the same problem there as on the compute nodes. The details below are from the login-node runs.

OS and its version:

[cdsmanas@login10 tutorials]$ cat /etc/os-release

NAME="CentOS Linux"

VERSION="7 (Core)"

ID="centos"

ID_LIKE="rhel fedora"

VERSION_ID="7"

PRETTY_NAME="CentOS Linux 7 (Core)"

ANSI_COLOR="0;31"

CPE_NAME="cpe:/o:centos:centos:7"

HOME_URL="https://www.centos.org/"

BUG_REPORT_URL="https://bugs.centos.org/"

CENTOS_MANTISBT_PROJECT="CentOS-7"

CENTOS_MANTISBT_PROJECT_VERSION="7"

REDHAT_SUPPORT_PRODUCT="centos"

REDHAT_SUPPORT_PRODUCT_VERSION="7"

lscpu:

[cdsmanas@login10 tutorials]$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 80

On-line CPU(s) list: 0-79

Thread(s) per core: 2

Core(s) per socket: 20

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz

Stepping: 7

CPU MHz: 1000.061

CPU max MHz: 3900.0000

CPU min MHz: 1000.0000

BogoMIPS: 5000.00

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 1024K

L3 cache: 28160K

NUMA node0 CPU(s): 0-19,40-59

NUMA node1 CPU(s): 20-39,60-79

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch epb cat_l3 cdp_l3 invpcid_single intel_ppin intel_pt ssbd mba ibrs ibpb stibp ibrs_enhanced tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm mpx rdt_a avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts pku ospke avx512_vnni md_clear spec_ctrl intel_stibp flush_l1d arch_capabilities

Modules loaded (we are using spack package manager):

module load spack/0.17

. /home-ext/apps/spack/share/spack/setup-env.sh

spack load intel-oneapi-compilers@2022.0.1

spack load intel-oneapi-tbb

spack load intel-oneapi-mkl

spack load intel-oneapi-mpi@2021.5.0

Sample reproducer code:

I am running a PETSc code, so it is written against the PETSc API rather than in plain C. The short reproducer below (test.c) shows what happens inside it: the PETSc code calls MPI_Bcast shortly after MPI_Init, and the program freezes there.

#include <stdio.h>

#include "mpi.h"

int main( int argc, char *argv[])

{

int myrank, size;

MPI_Init(&argc, &argv);

MPI_Comm_rank( MPI_COMM_WORLD, &myrank );

MPI_Comm_size( MPI_COMM_WORLD, &size );

MPI_Status status;

MPI_Request request;

int x;

if(myrank==0)

x=5;

MPI_Bcast(&x,1,MPI_INT,0,MPI_COMM_WORLD);

printf("[%d]: After Bcast, x is %d\n", myrank, x);

/*MPI_Ibcast(&x,1,MPI_INT,0,MPI_COMM_WORLD,&request);

MPI_Wait(&request,&status);

printf("[%d]: After Bcast, x is %d\n", myrank, x);

*/

/*int number;

if (myrank == 0) {

number = -1;

MPI_Send(&number, 1, MPI_INT, 1, 0, MPI_COMM_WORLD);

} else if (myrank == 1) {

MPI_Recv(&number, 1, MPI_INT, 0, 0, MPI_COMM_WORLD,MPI_STATUS_IGNORE);

printf("Process 1 received number %d from process 0\n", number);

}*/

MPI_Finalize();

return 0;

}

Compiling this as: mpicc test.c -o test.out

Running this as: I_MPI_DEBUG=10 mpirun -n 4 ./test.out

Output:

[0] MPI startup(): Intel(R) MPI Library, Version 2021.5 Build 20211102 (id: 9279b7d62)

[0] MPI startup(): Copyright (C) 2003-2021 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): shm segment size (801 MB per rank) * (4 local ranks) = 3207 MB total

[0] MPI startup(): libfabric version: 1.13.2rc1-impi

[0] MPI startup(): libfabric provider: mlx

[0] MPI startup(): File "/home-ext/apps/spack/opt/spack/linux-centos7-cascadelake/oneapi-2022.0.0/intel-oneapi-mpi-2021.5.0-mrtwwdh34kzg5sfihrlcvlsduomngfwr/mpi/2021.5.0/etc/tuning_skx_shm-ofi_mlx_100.dat" not found

[0] MPI startup(): Load tuning file: "/home-ext/apps/spack/opt/spack/linux-centos7-cascadelake/oneapi-2022.0.0/intel-oneapi-mpi-2021.5.0-mrtwwdh34kzg5sfihrlcvlsduomngfwr/mpi/2021.5.0/etc/tuning_skx_shm-ofi.dat"

[0] MPI startup(): Rank Pid Node name Pin cpu

[0] MPI startup(): 0 190035 login10 {0,1,2,3,4,5,6,7,8,9,40,41,42,43,44,45,46,47,48,49}

[0] MPI startup(): 1 190036 login10 {10,11,12,13,14,15,16,17,18,19,50,51,52,53,54,55,56,57,58,59}

[0] MPI startup(): 2 190037 login10 {20,21,22,23,24,25,26,27,28,29,60,61,62,63,64,65,66,67,68,69}

[0] MPI startup(): 3 190038 login10 {30,31,32,33,34,35,36,37,38,39,70,71,72,73,74,75,76,77,78,79}

[0] MPI startup(): I_MPI_ROOT=/home-ext/apps/spack/opt/spack/linux-centos7-cascadelake/oneapi-2022.0.0/intel-oneapi-mpi-2021.5.0-mrtwwdh34kzg5sfihrlcvlsduomngfwr/mpi/2021.5.0

[0] MPI startup(): I_MPI_MPIRUN=mpirun

[0] MPI startup(): I_MPI_HYDRA_TOPOLIB=hwloc

[0] MPI startup(): I_MPI_INTERNAL_MEM_POLICY=default

[0] MPI startup(): I_MPI_DEBUG=10

[0] MPI startup(): threading: mode: direct

[0] MPI startup(): threading: vcis: 1

[0] MPI startup(): threading: app_threads: 1

[0] MPI startup(): threading: runtime: generic

[0] MPI startup(): threading: is_threaded: 0

[0] MPI startup(): threading: async_progress: 0

[0] MPI startup(): threading: num_pools: 64

[0] MPI startup(): threading: lock_level: global

[0] MPI startup(): threading: enable_sep: 0

[0] MPI startup(): threading: direct_recv: 1

[0] MPI startup(): threading: zero_op_flags: 1

[0] MPI startup(): threading: num_am_buffers: 1

[0] MPI startup(): threading: library is built with per-vci thread granularity

[0]: After Bcast, x is 5

[1]: After Bcast, x is 5

[2]: After Bcast, x is 5

[3]: After Bcast, x is 5

Running this as: I_MPI_DEBUG=10 I_MPI_ASYNC_PROGRESS=1 mpiexec.hydra -n 4 ./test.out

Output:

[0] MPI startup(): Intel(R) MPI Library, Version 2021.5 Build 20211102 (id: 9279b7d62)

[0] MPI startup(): Copyright (C) 2003-2021 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): shm segment size (801 MB per rank) * (4 local ranks) = 3207 MB total

[0] MPI startup(): libfabric version: 1.13.2rc1-impi

[0] MPI startup(): libfabric provider: mlx

[0] MPI startup(): File "/home-ext/apps/spack/opt/spack/linux-centos7-cascadelake/oneapi-2022.0.0/intel-oneapi-mpi-2021.5.0-mrtwwdh34kzg5sfihrlcvlsduomngfwr/mpi/2021.5.0/etc/tuning_skx_shm-ofi_mlx_100.dat" not found

[0] MPI startup(): Load tuning file: "/home-ext/apps/spack/opt/spack/linux-centos7-cascadelake/oneapi-2022.0.0/intel-oneapi-mpi-2021.5.0-mrtwwdh34kzg5sfihrlcvlsduomngfwr/mpi/2021.5.0/etc/tuning_skx_shm-ofi.dat"

[1] MPI startup(): global_rank 1, local_rank 1, local_size 4, threads_per_node 4

[3] MPI startup(): global_rank 3, local_rank 3, local_size 4, threads_per_node 4

[2] MPI startup(): global_rank 2, local_rank 2, local_size 4, threads_per_node 4

[0] MPI startup(): Rank Pid Node name Pin cpu

[0] MPI startup(): 0 190146 login10 {0,1,2,3,4,5,6,7,8,9,40,41,42,43,44,45,46,47,48,49}

[0] MPI startup(): 1 190147 login10 {10,11,12,13,14,15,16,17,18,19,50,51,52,53,54,55,56,57,58,59}

[0] MPI startup(): 2 190148 login10 {20,21,22,23,24,25,26,27,28,29,60,61,62,63,64,65,66,67,68,69}

[0] MPI startup(): 3 190149 login10 {30,31,32,33,34,35,36,37,38,39,70,71,72,73,74,75,76,77,78,79}

[0] MPI startup(): I_MPI_ROOT=/home-ext/apps/spack/opt/spack/linux-centos7-cascadelake/oneapi-2022.0.0/intel-oneapi-mpi-2021.5.0-mrtwwdh34kzg5sfihrlcvlsduomngfwr/mpi/2021.5.0

[0] MPI startup(): I_MPI_HYDRA_TOPOLIB=hwloc

[0] MPI startup(): I_MPI_INTERNAL_MEM_POLICY=default

[0] MPI startup(): I_MPI_ASYNC_PROGRESS=1

[0] MPI startup(): I_MPI_DEBUG=10

[0] MPI startup(): threading: mode: handoff

[0] MPI startup(): threading: vcis: 1

[0] MPI startup(): threading: progress_threads: 0

[0] MPI startup(): threading: is_threaded: 1

[0] MPI startup(): threading: async_progress: 1

[0] MPI startup(): threading: num_pools: 64

[0] MPI startup(): threading: lock_level: nolock

[0] MPI startup(): threading: enable_sep: 0

[0] MPI startup(): threading: direct_recv: 0

[0] MPI startup(): threading: zero_op_flags: 1

[0] MPI startup(): threading: num_am_buffers: 1

[0] MPI startup(): threading: library is built with per-vci thread granularity

[0] MPI startup(): global_rank 0, local_rank 0, local_size 4, threads_per_node 4

[0] MPI startup(): threading: thread: 0, processor: 79

[0] MPI startup(): threading: thread: 1, processor: 78

[0] MPI startup(): threading: thread: 2, processor: 77

[0] MPI startup(): threading: thread: 3, processor: 76

********************************the program freezes over here and has to be killed with keyboard interrupt*************************

If you uncomment the other calls in the code (MPI_Ibcast, or even plain MPI_Send and MPI_Recv) and then compile and run, the code also freezes when I_MPI_ASYNC_PROGRESS=1; otherwise it runs fine.

Why is this happening?
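While testing a hang like this, it can help to bound the run time so the reproducer does not have to be killed by hand. The sketch below wraps a command in `timeout` from GNU coreutils, which exits with status 124 when the limit is reached. `run_with_limit` is a hypothetical helper name, and whether terminating mpiexec.hydra this way also cleans up every rank is an assumption that has not been verified here.

```shell
# Hypothetical helper: run a command with a time limit given in seconds.
# GNU coreutils `timeout` returns 124 when it had to terminate the command.
run_with_limit() {
    local limit="$1" rc=0
    shift
    timeout "$limit" "$@" || rc=$?
    if [ "$rc" -eq 124 ]; then
        echo "command still running after ${limit} s; terminated (possible hang)" >&2
    fi
    return "$rc"
}

# Possible use with the reproducer from this post:
#   I_MPI_DEBUG=10 I_MPI_ASYNC_PROGRESS=1 run_with_limit 120 mpiexec.hydra -n 4 ./test.out
```

An exit status of 124 then distinguishes a run that froze from one that finished or failed for another reason.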

Regards,

Manasi

Hi,

We were able to reproduce your issue on our end using the latest Intel MPI Library 2021.5 on a Linux machine.

We have reported it to the relevant development team, who are looking into it.

Thanks & Regards,

Santosh

Hi Santosh. Thanks for the response.

With the help of my system administrator, I was able to compile and run my code with a different version of the Intel compiler (2020.4.0), which does not have the issues that I am facing with Intel MPI 2021.5.0. Now my code runs successfully without any errors or freezing. However, I am now facing a new problem, for which I have opened a new ticket at https://community.intel.com/t5/Intel-oneAPI-HPC-Toolkit/Asynchronous-progress-slows-down-my-program/m-p/1367494#M9284 .

My code runs very slowly when I use the asynchronous progress environment variables. Can you please look into this issue instead? It has also been reported by another user at https://community.intel.com/t5/Intel-oneAPI-HPC-Toolkit/Intel-MPI-Compiler-2018-5-274/td-p/1364990 . I think it is a problem faced by everyone trying to use asynchronous progress control with Intel MPI.

Regards,

Manasi

Hi,

>>>"With the help of my system administrator, I was able to compile and run my code with a different version of the Intel compiler (2020.4.0), which does not have the issues that I am facing with Intel MPI 2021.5.0."

Could you please try with Intel MPI 2021.6 and let us know if the issue persists?

Thanks & Regards,

Santosh

Hi,

We haven't heard back from you. Could you please try Intel MPI 2021.6 (or higher) and let us know if the issue persists?

Thanks & Regards,

Santosh

Hi,

We haven't heard back from you. This issue has been fixed in Intel MPI 2021.6, so using Intel MPI 2021.6 or a later version should resolve it. We tested with the latest Intel MPI 2021.8 on our end and were able to run your code successfully, as shown in the attachment.
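To confirm which library version a job is actually running, the version can be read from the first line of the I_MPI_DEBUG startup banner shown earlier in this thread and compared against 2021.6. The following is a rough sketch: `banner_version` and `version_ge` are hypothetical helper names, the banner format is assumed to match the lines posted above, and the comparison relies on the `-V` (version sort) option of GNU `sort`.

```shell
# Hypothetical helper: extract the version from a startup banner line such as
# "[0] MPI startup(): Intel(R) MPI Library, Version 2021.5 Build 20211102 (...)".
banner_version() {
    printf '%s\n' "$1" | sed -n 's/.*Intel(R) MPI Library, Version \([0-9.]*\).*/\1/p'
}

# Hypothetical helper: succeed when version $1 is greater than or equal to $2.
# Uses GNU sort -V; the smaller of the two versions is printed first.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Possible use:
#   v=$(banner_version "$first_banner_line")
#   version_ge "$v" 2021.6 || echo "Intel MPI $v predates the fix in 2021.6" >&2
```

For the banner posted in this thread, `banner_version` yields 2021.5, which `version_ge` reports as older than 2021.6.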

This thread will no longer be monitored by Intel. If you need further assistance, please post a new question.

Thanks & Regards,

Santosh
