Hello all,

We usually run some parallel applications with Intel MPI. These applications are fully packaged, so I do not see all the options and settings. However, among the running processes I see "hydra_pmi_proxy" processes with several arguments, including "--control-code", which is very useful for mapping the processes that belong to the same HPC job across several nodes.

For another application, I want to use Intel MPI 2021.8 "manually", i.e. write the complete MPI command line myself (mpirun -n ...). The job starts and I can still see the "hydra_pmi_proxy" processes, yet to my surprise there is no "--control-code" parameter:

$ pgrep -a hydra_pmi_proxy

21632 /intel-2021.8.0/mpi/2021.8.0//bin//hydra_pmi_proxy --usize -1 --auto-cleanup 1 --abort-signal 9

Hence my question: is "--control-code" something that has to be configured or tuned? Is there a way to "activate" it from a manual mpirun execution?

I did not find any hint in the documentation on this topic...

Thanks!

- Tags:

- control-code

- mpi

Hi,

Thanks for posting in the Intel communities.

What is the previous version of Intel MPI in which you observed the option "--control-code"?

>>>" I see "hydra_pmi_proxy" processes running, with several arguments among which "--control-code""

Could you please let us know how you could find all the arguments while running the program? Please provide us with the command to try from our end.

Also, please provide us with your environment details such as:

- Operating system & its version

- Interconnect hardware

- CPU details

- Job scheduler

Thanks & Regards,

Santosh

Hello Santosh,

Actually I did not realize my simulation environment was that exotic...

So here is the "old" Intel mpi version used:

$ mpirun --version

Intel(R) MPI Library for Linux* OS, Version 2018 Update 1 Build 20171011 (id: 17941)

Copyright (C) 2003-2017, Intel Corporation. All rights reserved.

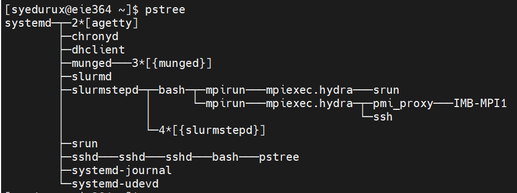

When I run a small test case on 4 CPUs with mpirun, pstree returns something like this:

mpirun(149889)───mpiexec.hydra(149894)───pmi_proxy(149895)─┬─ls-dyna_mpp_s_R(149899)───lstc_client(149910)

├─ls-dyna_mpp_s_R(149900)

├─ls-dyna_mpp_s_R(149901)

└─ls-dyna_mpp_s_R(149902)

Among which the pmi_proxy process looks like this:

$ pgrep -a pmi_proxy

149895 /test/mpi/intel/intel64/bin/pmi_proxy --control-port compute2:38419 --pmi-connect alltoall --pmi-aggregate -s 0 --rmk lsf --demux poll --pgid 0 --enable-stdin 1 --retries 10 --control-code 1925763116 --usize -2 --proxy-id 0
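As a rough illustration of how such a line can be picked apart, here is a minimal Python sketch that splits one line of `pgrep -a` output into a PID, an executable path, and a dictionary of options. The function names `parse_proxy_line` and `_is_flag` are hypothetical, and the rule "a flag takes the next token as its value unless that token is itself a flag" is an assumption inferred from the output shown above, not documented behavior of the proxy.

```python
import shlex


def _is_flag(token):
    """Treat a token as a flag if it starts with '-' and is not a number such as -2."""
    if not token.startswith("-"):
        return False
    try:
        float(token)
        return False
    except ValueError:
        return True


def parse_proxy_line(line):
    """Split one `pgrep -a` line into (pid, executable, options).

    Assumption: each flag is followed by at most one value.
    Flags without a value are stored with None.
    """
    tokens = shlex.split(line)
    pid, exe, args = int(tokens[0]), tokens[1], tokens[2:]
    options = {}
    i = 0
    while i < len(args):
        if _is_flag(args[i]):
            has_value = i + 1 < len(args) and not _is_flag(args[i + 1])
            options[args[i]] = args[i + 1] if has_value else None
            i += 2 if has_value else 1
        else:
            i += 1  # stray value without a preceding flag: skip it
    return pid, exe, options


if __name__ == "__main__":
    line = ("149895 /test/mpi/intel/intel64/bin/pmi_proxy --control-port compute2:38419 "
            "--pmi-connect alltoall --pmi-aggregate -s 0 --rmk lsf --demux poll --pgid 0 "
            "--enable-stdin 1 --retries 10 --control-code 1925763116 --usize -2 --proxy-id 0")
    pid, exe, options = parse_proxy_line(line)
    print(pid, options.get("--control-code"), options.get("--control-port"))
```

For a line without the option, such as the 2021 "hydra_pmi_proxy" line from the first post, `options.get("--control-code")` simply returns `None`.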

Then maybe we could approach the question the other way around: in the case of a multi-node computation, is there a way to find out which mpirun a given remote process is attached to? To take another example, with Platform-MPI the mpirun process ID is passed as an argument to all remote "mpid" processes:

[host 1] $ pgrep mpirun

151502

[host 2] $ pgrep -a mpid

15386 /test/mpi/platform/bin/mpid 1 0 151061507 172.16.0.205 40352 151502 /test/mpi/platform

Again, this is very convenient for cleaning up processes with applications that are prone to leaving "orphan" processes behind after computation errors. Meanwhile, I wonder how we actually inherited such an exclusive feature in version 2018...

Thanks for your support!

Hello Santosh, and thanks for your feedback,

We are using RedHat/CentOS 7 servers with Intel Xeon processors and an Ethernet or InfiniBand interconnect, operated by the LSF job scheduler. The previous Intel MPI version used was 2018.1.163.

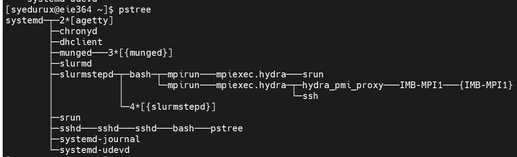

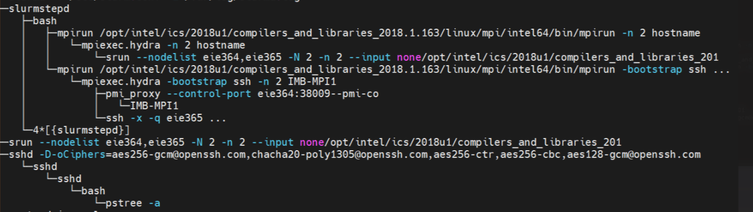

I have performed some additional tests at my end. If I understand correctly, Intel MPI 2018 mpirun executes a "pmi_proxy" process, and Intel MPI 2021 mpirun executes a "hydra_pmi_proxy" process (I am not sure my understanding is right at this stage...).

It seems that I am able to reproduce this behavior both with an "embedded MPI" application and with a "manual mpirun" execution:

With Intel-MPI 2018, "pstree" will show a process of this type:

intel/2018.1.163/linux-x86_64/rto/intel64/bin/pmi_proxy --control-port host2:46487 --pmi-connect alltoall --pmi-aggregate -s 0 --cleanup --rmk user --launcher rsh --demux poll --pgid 0 --enable-stdin 1 --retries 10 --control-code 417151781 --usize -2 --proxy-id 1

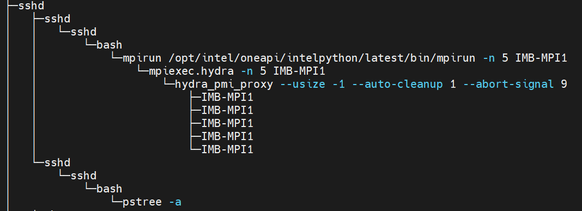

With Intel-MPI 2021, it would simply be of the form:

intel/2021.2/linux-x86_64/bin//hydra_pmi_proxy --usize -1 --auto-cleanup 1 --abort-signal 9

I am not sure whether this is fully clear at this stage.

The use case is the following: with multi-node computations and occasional job crashes, this control code is very useful for identifying possible "orphan" processes among those running on the cluster, without cleaning up "regular" processes by mistake, i.e. processes associated with a pmi_proxy process on another cluster node that has the same control code.

Does this mean that these control codes are indeed no longer used in recent versions of Intel MPI? If so, is there a way to identify which "master process" a given remote execution process is attached to?
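To make the use case concrete, here is a minimal Python sketch of the matching step. It assumes the output of `pgrep -a pmi_proxy` has already been collected per host into a dictionary (how it is collected is left open), and it uses one simplifying assumption: a control code seen on only one host is treated as a candidate for closer inspection. The names `hosts_by_control_code` and `single_host_codes` are hypothetical, and the second control code in the sample data is invented for illustration. Note that this heuristic would also flag legitimate single-node jobs, so it is only a starting point.

```python
import re
from collections import defaultdict

# Matches the "--control-code <number>" argument seen in the 2018 pmi_proxy command line.
CONTROL_CODE = re.compile(r"--control-code\s+(\d+)")


def hosts_by_control_code(listings):
    """Map each control code to the set of hosts on which it was seen.

    listings: dict of host name -> text output of `pgrep -a pmi_proxy` on that host.
    """
    seen = defaultdict(set)
    for host, text in listings.items():
        for line in text.splitlines():
            match = CONTROL_CODE.search(line)
            if match:
                seen[match.group(1)].add(host)
    return dict(seen)


def single_host_codes(listings):
    """Return control codes that appear on exactly one host (candidates to inspect)."""
    by_code = hosts_by_control_code(listings)
    return sorted(code for code, hosts in by_code.items() if len(hosts) == 1)


if __name__ == "__main__":
    # Sample data: the first control code is taken from the thread, the second is made up.
    listings = {
        "host1": "1001 /path/pmi_proxy --control-port host1:46487 --control-code 417151781 --proxy-id 0",
        "host2": ("2001 /path/pmi_proxy --control-port host1:46487 --control-code 417151781 --proxy-id 1\n"
                  "2002 /path/pmi_proxy --control-port host9:40000 --control-code 123456789 --proxy-id 3"),
    }
    print(single_host_codes(listings))
```

This only works where the proxy command line actually carries "--control-code", as with the 2018 "pmi_proxy" shown above.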

Thanks - Have a nice day

Hi,

1. Intel-MPI 2018 mpirun would execute a "pmi_proxy" process:

2. Intel-MPI 2021 mpirun would execute a "hydra_pmi_proxy" as shown below:

3. With Intel-MPI 2018, "pstree -a" provided the following results on the RHEL8.6 machine:

4. With Intel-MPI 2021, below is the result for "pstree -a":

However, we couldn't find --control-code as an argument in the IMPI 2018 update1.

Thanks for providing the use case of the --control-code option. Could you please provide us with any referral link for the same?

Thanks & Regards,

Santosh

Hi,

>>>" is there a way to find to which mpirun any remote processes are attached?"

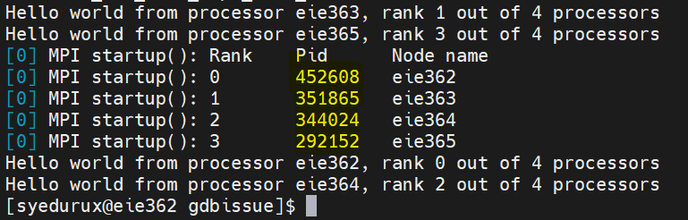

I assume you are looking for the process IDs assigned to the corresponding nodes which we can fetch using the I_MPI_DEBUG environment variable.

Example:

I_MPI_DEBUG=3 mpirun -n 4 ./hello

The above command will give the below debug information, in which we can get the processes attached to each node.

Could you please let us know if this is what you are looking for?

Thanks & Regards,

Santosh

Hello Santosh,

Thanks for your feedback - I will investigate this way and check how it could apply in our case.

Thanks!

Hi,

Thanks for accepting our solution. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Thanks & Regards,

Santosh
