Hello,

We have a set of regression tests and some of them end up terminating with MPI_Abort intentionally. After switching to IMPI 2021.6 those tests on Windows now reproducibly hang inside MPI_Abort when running inside a task scheduler job. This occurs on both Windows test bots (one Intel and the other AMD based).

All ranks have the same stack trace (obtained using debug/impi.dll and the corresponding pdb file):

[0x3] WS2_32!recv + 0x170

[0x4] impi!PMIU_readline + 0x115

[0x5] impi!GetResponse + 0x106

[0x6] impi!VPMI_Abort + 0xde

[0x7] impi!PMI_Abort + 0x71

[0x8] impi!MPIR_pmi_abort + 0x59

[0x9] impi!MPID_Abort + 0x3f4

[0xa] impi!PMPI_Abort + 0x825

[0xb] impi!PMPI_ABORT + 0x58

It does not hang when running the same test on the same machine from the command line or from our GUI. The GUI does start an associated conhost process but I'm not sure about task scheduler jobs. Anyway, I'd appreciate it if someone could help me find a solution to this problem.

Alexei

EDIT: I've run the same program with exactly the same environment from command line and from the Windows Task Scheduler on my development laptop and the latter hangs exactly as on the Windows test bots. Changing various Scheduler job options did not change anything.

Hi,

Thank you for posting in Intel Communities.

Could you please provide the windows version and CPU details?

Are you using a single node or cluster of nodes?

Could you please provide us with a sample reproducer code?

What are the different job schedulers being used for launching MPI jobs? How do you change the job schedulers while launching the MPI application?

How are you launching the MPI job in a test bot? Could you please provide the steps to reproduce your issue along with the commands you used?

Could you please provide the complete debug log by using the below command:

example command: mpiexec -n <number of processes> -genv I_MPI_DEBUG=30 application.exe

Thanks & Regards,

Hemanth

Hi Hemanth,

Thank you for getting back to me. I was able to reproduce the problem without involving the Windows Task Scheduler.

> Could you please provide the windows version and CPU details?

Windows 10 Pro 19044.1766 on Intel(R) Core(TM) i7-8850H CPU @ 2.60GHz 2.59 GHz

> Are you using a single node or cluster of nodes?

On a single Windows laptop.

> What are the different job schedulers being used for launching MPI jobs? How do you change the job schedulers while launching the MPI application?

No MPI job schedulers involved. Jobs were started from the command line.

> How are you launching the MPI job in a test bot? Could you please provide the steps to reproduce your issue along with the commands you used?

See below.

I've changed the test.f90 file from the Intel MPI test folder, replacing

call MPI_FINALIZE (ierr)

with

call MPI_Abort(MPI_COMM_WORLD, 666, ierr)

(the complete file is attached). Then I compiled it with

ifort test.f90 impi.lib

and ran it in a Windows Command Prompt window as

"c:\Program Files (x86)\Intel\oneAPI\mpi\2021.6.0\bin\mpiexec" -n 2 -genv I_MPI_DEBUG=30 test.exe

The output is attached as working.txt

Then I started another cmd.exe window from Far Manager and made sure that the environment variables in both windows are exactly the same. The above command, run in this second window, hangs after printing the Hello World messages (attached as hanging.txt).

The only difference between the two outputs (besides PIDs) seems to be the Hello World part that's missing in working.txt. I guess that's because it wasn't flushed before the job got killed.

Kind regards,

Alexei
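
The comparison of working.txt and hanging.txt described above can also be done mechanically. The following is only a sketch: the function names are hypothetical, and the PID-masking patterns are assumptions derived from the log lines quoted in this thread, not from any documented log format.

```python
import re
from collections import Counter

# Assumed patterns, based only on the I_MPI_DEBUG output quoted in this thread.
_LIBFABRIC_PID = re.compile(r"^libfabric:\d+:")
_RANK_TABLE_ROW = re.compile(
    r"^(\[\d+\] MPI startup\(\): \d+\s+)\d+(\s+\S+\s+[\d,]+)$"
)


def normalize(line: str) -> str:
    """Mask process IDs so that two runs can be compared line by line."""
    line = line.strip()
    line = _LIBFABRIC_PID.sub("libfabric:<pid>:", line)
    line = _RANK_TABLE_ROW.sub(r"\1<pid>\2", line)
    return line


def log_differences(working: str, hanging: str):
    """Return (only_in_working, only_in_hanging) as sorted lists of lines."""
    a = Counter(normalize(l) for l in working.splitlines() if l.strip())
    b = Counter(normalize(l) for l in hanging.splitlines() if l.strip())
    return sorted((a - b).elements()), sorted((b - a).elements())
```

Line order is deliberately ignored, because output from different ranks can interleave differently between runs. Differences in drive-letter case in paths are not masked and will show up in the result.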

Hi,

Thanks for providing the details.

>>"I started another cmd.exe window from Far Manager and made sure that the environment variables in both windows are exactly the same"

Could you please provide the steps to create the cmd.exe using the Far Manager?

Thanks & Regards,

Hemanth

Hi Hemanth,

I don't know what you mean by "create the cmd.exe using the Far Manager", but you can start cmd.exe (that's the Windows command-line tool) in a separate window from Far if you type cmd on Far's command line and press Shift-Enter. It will pop up a new terminal window with a command prompt in it.

Alexei

Hi,

Thanks for the information.

Unfortunately, Far Manager is not supported by the Intel MPI Library. Could you please try with any one of the supported job schedulers on Windows from the below link:

Thanks & Regards,

Hemanth

Hi Hemanth,

Far Manager is NOT a job scheduler. It's just a file manager/command-line tool/terminal like Norton Commander, its ancient grandfather. Does Intel MPI support running from the command line on Windows?

Hi,

We have exactly the same problem on Windows 10 64bit (LTSB, LTSC, Enterprise etc.)

MPI_Abort hangs sometimes for no reason.

Intel MPI 2021.6

A workaround, solution or fix would be helpful

Best regards

Frank

Hi @Frank_R_1 @Alexei_Yakovlev ,

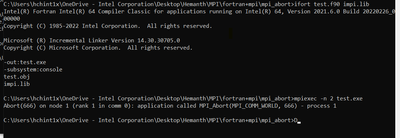

We can successfully run test.f90 in the Windows command prompt (Enterprise edition), as shown in the screenshot above.

Could you please try the below steps:

1. Open the Intel oneAPI command prompt

2. Compile the code using the below command:

ifort test.f90 impi.lib

3. Run the executable binary using the below command:

mpiexec -n 2 -genv I_MPI_DEBUG=30 test.exe

It might be an environment/integration issue with Far Manager.

Thanks & Regards,

Hemanth

Hi,

We haven't heard back from you. Could you please provide an update on your issue?

Thanks & Regards,

Hemanth

It's clearly something related to the terminal settings, for example open handles passed from the parent process to mpiexec, or something similar. As I wrote in the original post, I can also run it successfully in some terminal windows but not in others, depending on how the terminal was launched. So the test you asked me to run would not add anything to what I already did.

Anyway, here's the output when it does not hang:

[0] MPI startup(): Intel(R) MPI Library, Version 2021.6 Build 20220227

[0] MPI startup(): Copyright (C) 2003-2022 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): shm segment size (1068 MB per rank) * (2 local ranks) = 2136 MB total

[0] MPI startup(): libfabric version: 1.13.2-impi

[0] MPI startup(): max_ch4_vnis: 1, max_reg_eps 64, enable_sep 0, enable_shared_ctxs 0, do_av_insert 0

[0] MPI startup(): max number of MPI_Request per vci: 67108864 (pools: 1)

libfabric:26740:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:26740:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:26740:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:26740:core:core:ofi_register_provider():474<info> registering provider: netdir (113.20)

libfabric:26740:core:core:ofi_register_provider():474<info> registering provider: ofi_rxm (113.20)

libfabric:26740:core:core:ofi_register_provider():474<info> registering provider: sockets (113.20)

libfabric:26740:core:core:ofi_register_provider():474<info> registering provider: tcp (113.20)

libfabric:26740:core:core:ofi_register_provider():474<info> registering provider: ofi_hook_perf (113.20)

libfabric:26740:core:core:ofi_register_provider():474<info> registering provider: ofi_hook_noop (113.20)

libfabric:26740:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:26740:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:26740:core:core:ofi_layering_ok():1001<info> Need core provider, skipping ofi_rxm

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:26740:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:26740:core:core:ofi_layering_ok():1001<info> Need core provider, skipping ofi_rxm

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:26740:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:26740:core:core:ofi_layering_ok():1001<info> Need core provider, skipping ofi_rxm

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:26740:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:26740:core:core:ofi_layering_ok():1001<info> Need core provider, skipping ofi_rxm

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:26740:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:26740:core:core:fi_getinfo():1123<warn> Can't find provider with the highest priority

libfabric:26740:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:26740:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:26740:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:26740:core:core:ofi_layering_ok():1001<info> Need core [0] MPI startup(): libfabric provider: tcp;ofi_rxm

[0] MPI startup(): detected tcp;ofi_rxm provider, set device name to "tcp-ofi-rxm"

[0] MPI startup(): addrnamelen: zu

[0] MPI startup(): File "C:\Program Files (x86)\Intel\oneAPI\mpi\2021.6.0\env\../etc/tuning_skx_shm-ofi_tcp-ofi-rxm.dat" not found

[0] MPI startup(): Load tuning file: "C:\Program Files (x86)\Intel\oneAPI\mpi\2021.6.0\env\../etc/tuning_skx_shm-ofi.dat"

[0] MPI startup(): threading: mode: direct

[0] MPI startup(): threading: vcis: 1

[0] MPI startup(): threading: app_threads: -1

[0] MPI startup(): threading: runtime: generic

[0] MPI startup(): threading: progress_threads: 0

[0] MPI startup(): threading: async_progress: 0

[0] MPI startup(): threading: lock_level: global

[0] MPI startup(): threading: num_pools: 1

[0] MPI startup(): threading: enable_sep: 0

[0] MPI startup(): threading: direct_recv: 1

[0] MPI startup(): threading: zero_op_flags: 0

[0] MPI startup(): threading: num_am_buffers: 8

[0] MPI startup(): tag bits available: 19 (TAG_UB value: 524287)

[0] MPI startup(): source bits available: 20 (Maximal number of rank: 1048575)

[0] MPI startup(): Imported environment partly inaccesible. Map=0 Info=0

[1] MPI startup(): Imported environment partly inaccesible. Map=0 Info=0

[0] MPI startup(): Rank Pid Node name Pin cpu

[0] MPI startup(): 0 26740 alexei-laptop 0,1,2,3,4,5

[0] MPI startup(): 1 12272 alexei-laptop 6,7,8,9,10,11

[0] MPI startup(): I_MPI_ROOT=C:\Program Files (x86)\Intel\oneAPI\mpi\2021.6.0\env\..

[0] MPI startup(): I_MPI_ONEAPI_ROOT=C:\Program Files (x86)\Intel\oneAPI\mpi\2021.4.0

[0] MPI startup(): I_MPI_HYDRA_TOPOLIB=hwloc

[0] MPI startup(): I_MPI_DEBUG=30

Abort(666) on node 1 (rank 1 in comm 0): application called MPI_Abort(MPI_COMM_WORLD, 666) - process 1

And here's when it does hang.

[0] MPI startup(): Intel(R) MPI Library, Version 2021.6 Build 20220227

[0] MPI startup(): Copyright (C) 2003-2022 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): shm segment size (1068 MB per rank) * (2 local ranks) = 2136 MB total

[0] MPI startup(): libfabric version: 1.13.2-impi

[0] MPI startup(): max_ch4_vnis: 1, max_reg_eps 64, enable_sep 0, enable_shared_ctxs 0, do_av_insert 0

[0] MPI startup(): max number of MPI_Request per vci: 67108864 (pools: 1)

libfabric:23896:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:23896:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:23896:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:23896:core:core:ofi_register_provider():474<info> registering provider: netdir (113.20)

libfabric:23896:core:core:ofi_register_provider():474<info> registering provider: ofi_rxm (113.20)

libfabric:23896:core:core:ofi_register_provider():474<info> registering provider: sockets (113.20)

libfabric:23896:core:core:ofi_register_provider():474<info> registering provider: tcp (113.20)

libfabric:23896:core:core:ofi_register_provider():474<info> registering provider: ofi_hook_perf (113.20)

libfabric:23896:core:core:ofi_register_provider():474<info> registering provider: ofi_hook_noop (113.20)

libfabric:23896:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:23896:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:23896:core:core:ofi_layering_ok():1001<info> Need core provider, skipping ofi_rxm

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:23896:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:23896:core:core:ofi_layering_ok():1001<info> Need core provider, skipping ofi_rxm

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:23896:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:23896:core:core:ofi_layering_ok():1001<info> Need core provider, skipping ofi_rxm

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:23896:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:23896:core:core:ofi_layering_ok():1001<info> Need core provider, skipping ofi_rxm

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:23896:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:23896:core:core:fi_getinfo():1123<warn> Can't find provider with the highest priority

libfabric:23896:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, netdir has been skipped. To use netdir, please, set FI_PROVIDER=netdir

libfabric:23896:core:core:fi_getinfo():1161<info> Since tcp can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:23896:core:core:fi_getinfo():1138<info> Found provider with the highest priority tcp, must_use_util_prov = 1

libfabric:23896:core:core:ofi_layering_ok():1001<info> Need core [0] MPI startup(): libfabric provider: tcp;ofi_rxm

[0] MPI startup(): detected tcp;ofi_rxm provider, set device name to "tcp-ofi-rxm"

[0] MPI startup(): addrnamelen: zu

[0] MPI startup(): File "c:\Program Files (x86)\Intel\oneAPI\mpi\2021.6.0\env\../etc/tuning_skx_shm-ofi_tcp-ofi-rxm.dat" not found

[0] MPI startup(): Load tuning file: "c:\Program Files (x86)\Intel\oneAPI\mpi\2021.6.0\env\../etc/tuning_skx_shm-ofi.dat"

[0] MPI startup(): threading: mode: direct

[0] MPI startup(): threading: vcis: 1

[0] MPI startup(): threading: app_threads: -1

[0] MPI startup(): threading: runtime: generic

[0] MPI startup(): threading: progress_threads: 0

[0] MPI startup(): threading: async_progress: 0

[0] MPI startup(): threading: lock_level: global

[0] MPI startup(): threading: num_pools: 1

[0] MPI startup(): threading: enable_sep: 0

[0] MPI startup(): threading: direct_recv: 1

[0] MPI startup(): threading: zero_op_flags: 0

[0] MPI startup(): threading: num_am_buffers: 8

[0] MPI startup(): tag bits available: 19 (TAG_UB value: 524287)

[0] MPI startup(): source bits available: 20 (Maximal number of rank: 1048575)

[1] MPI startup(): Imported environment partly inaccesible. Map=0 Info=0

[0] MPI startup(): Imported environment partly inaccesible. Map=0 Info=0

[0] MPI startup(): Rank Pid Node name Pin cpu

[0] MPI startup(): 0 23896 alexei-laptop 0,1,2,3,4,5

[0] MPI startup(): 1 13868 alexei-laptop 6,7,8,9,10,11

Abort(666) on node 1 (rank 1 in comm 0): application called MPI_Abort(MPI_COMM_WORLD, 666) - process 1

[0] MPI startup(): I_MPI_ROOT=c:\Program Files (x86)\Intel\oneAPI\mpi\2021.6.0\env\..

[0] MPI startup(): I_MPI_ONEAPI_ROOT=C:\Program Files (x86)\Intel\oneAPI\mpi\2021.4.0

[0] MPI startup(): I_MPI_HYDRA_TOPOLIB=hwloc

[0] MPI startup(): I_MPI_DEBUG=30

Hello world: rank 0 of 2 running on

alexei-laptop

Hello world: rank 1 of 2 running on

alexei-laptop

Abort(666) on node 0 (rank 0 in comm 0): application called MPI_Abort(MPI_COMM_WORLD, 666) - process 0

Kind regards,

Alexei

Hi,

Far Manager is not supported by the Intel MPI Library, but we can run the program successfully in the Intel oneAPI command prompt. Could you please let us know whether you are facing any issues other than with Far Manager? Otherwise, can we go ahead and close the thread?

Thanks & Regards,

Hemanth

Hi,

If you read the original post, you'll see that it started with IMPI hanging inside Windows task scheduler jobs. Do you also not support Windows task scheduler? I guess you can say the same about any problem.

I would appreciate it if you could escalate this problem to the developers so that we could have a more to-the-point discussion.

Alexei

Hi Alexei,

We have encountered a similar behavior.

Our application.exe starts mpiexec -localroot -delegate -np #cpu path/to/solver arguments.

When we start our application from Windows Explorer or by clicking its icon, the MPI job (which calls abort) hangs exactly as you described. When we start application.exe from a cmd.exe or msys.exe shell, it does not hang!

We also did the same as you and used identical environment variables, but this has no influence.

It might be a problem with how Windows starts applications from Explorer or an icon as opposed to from cmd.exe.

@intel support

Build a program that spawns mpiexec with the program Alexei provided, start it from Explorer and from cmd.exe, and investigate the different behavior, please!

Best regards

Frank
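
The kind of launcher Frank describes above could be sketched as follows. This is only an illustration, not a confirmed reproducer: the function name `launch` and its `console` parameter are hypothetical, and which console setting (if any) corresponds to a start from Explorer or from the Task Scheduler is an assumption that would have to be checked by experiment.

```python
import subprocess
import sys


def launch(cmd, console="inherit", timeout=None):
    """Run cmd with a chosen console setup.

    console selects the Windows process creation flag:
      "inherit" - share the parent's console (no extra flag)
      "new"     - CREATE_NEW_CONSOLE
      "none"    - CREATE_NO_WINDOW
    The setting is ignored on non-Windows systems.

    Returns the exit code, or None if the child did not finish in time.
    """
    flags = 0
    if sys.platform == "win32":
        flags = {
            "inherit": 0,
            "new": subprocess.CREATE_NEW_CONSOLE,
            "none": subprocess.CREATE_NO_WINDOW,
        }[console]
    proc = subprocess.Popen(cmd, stdin=subprocess.DEVNULL, creationflags=flags)
    try:
        return proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        # Only the direct child is killed here; its children may survive.
        proc.kill()
        proc.wait()
        return None
```

Calling, for example, `launch(["mpiexec", "-n", "2", "test.exe"], console="new", timeout=60)` once per console setting, and once each from cmd.exe and from Explorer, would show whether the hang follows the console setup or something else about the parent process.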

Hi Frank,

Thank you for your comments. Our problem is that our test set is started by the Windows task scheduler at a certain time once a day. The task first starts a bat file that starts an MSYS bash script. The latter downloads an MSI package (the day's snapshot) of our software, installs it and then runs all the tests. There are about a thousand such tests, which run for 10-12 hours. Some of them may call MPI_Abort and then, if we use the IMPI 2021.6 RTE, the whole thing gets stuck.

We currently use a workaround, a legacy RTE from IMPI 2018.1, which appears to work just fine with applications built with IMPI 2021.6, but we would of course prefer to not have to resort to such hacky means.

Kind regards,

Alexei

P.S. By RTE I mean the mpiexec/pmi_proxy or mpiexec/hydra_pmi_proxy pair.
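
Independently of which RTE is used, a test harness can protect itself against one stuck test blocking the whole run. The sketch below is an assumption about how that might look, not what our harness does: `run_test` and `kill_tree` are hypothetical names, and the timeout value would have to be chosen per test.

```python
import subprocess
import sys


def kill_tree(proc):
    """Terminate proc and, on Windows, its descendants."""
    if sys.platform == "win32":
        # taskkill /T also terminates child processes, /F forces termination.
        subprocess.run(
            ["taskkill", "/T", "/F", "/PID", str(proc.pid)],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            check=False,
        )
    else:
        proc.kill()
    proc.wait()


def run_test(cmd, timeout_s):
    """Run one test command; return (exit_code, timed_out)."""
    proc = subprocess.Popen(cmd, stdin=subprocess.DEVNULL)
    try:
        return proc.wait(timeout=timeout_s), False
    except subprocess.TimeoutExpired:
        kill_tree(proc)
        return proc.returncode, True
```

This does not fix the hang itself. It only turns a hung mpiexec into a test that is reported as timed out, so the remaining tests still run.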

Hi @Alexei_Yakovlev ,

Could you please confirm whether this is an accurate summary of the above posts:

1) Using the Intel oneAPI command prompt, you can run the program as expected without hanging.

2) Using Far Manager (not supported by Intel), the sample program hangs when you run it.

3) Using Task Scheduler, the sample program hangs only when you start a bat file that runs the MSYS bash script and downloads the MSI package.

>>"This occurs on both Windows test bots (one Intel and the other AMD based)."

Are you using both CPUs together as a multi-node setup? Or, if you are running on a single node, were you able to execute the program successfully on the Intel CPU using Task Scheduler?

>>"The GUI does start an associated conhost process but I'm not sure about task scheduler jobs"

Could you please elaborate on the above statement? Is the conhost process involved in running the MPI application?

It is recommended to call MPI_Abort within a conditional statement in the code you provided.

Can we run the MPI application using Task Scheduler without loading the MSYS bash script? If yes, please provide the steps and the debug log using Task Scheduler.

>>"Some of them may call MPI_Abort and then if we use the IMPI 2021.6 RTE, the whole thing gets stuck."

Could you please confirm whether the process succeeds or hangs when using Task Scheduler?

Thanks & Regards,

Hemanth

Hi @Frank_R_1 ,

As you are facing a different issue, could you please open a new thread from the below link:

https://community.intel.com/t5/Intel-oneAPI-HPC-Toolkit/bd-p/oneapi-hpc-toolkit

Thanks & Regards

Hemanth

Hi,

We haven't heard back from you. Could you please provide an update on your issue?

Thanks & Regards

Hemanth

Hi,

We have not heard back from you. This thread will no longer be monitored by Intel. If you need further assistance, please post a new question.

Thanks & Regards,

Hemanth

Hi Hemanth,

Sorry, I was on holiday for a few weeks.

I just checked, and the following command

"c:\Program Files (x86)\Intel\oneAPI\mpi\2021.6.0\bin\mpiexec" -n 2 -genv I_MPI_DEBUG=30 test.exe

executed directly from the MS Task Scheduler (i.e. without an intermediate MSYS2 shell) hangs the same way as it does in our regression tests.

Kind regards,

Alexei
