
Sorry, I cannot reply to any comments, so I am posting a new one.

I am using macOS Big Sur 11.1 (20C69) and Google Chrome 100.0.4896.75. I have tried other browsers, such as Safari (on macOS) and Microsoft Edge, but I still cannot reply to comments.

```c
#include "mpi.h"
#include <stdio.h>
#include <string.h>

int main(int argc, char *argv[])
{
    int i, rank, size, namelen;
    char name[MPI_MAX_PROCESSOR_NAME];
    MPI_Status stat;

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &size);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Get_processor_name(name, &namelen);

    if (rank == 0) {
        printf("Hello world: rank %d of %d running on %s\n", rank, size, name);

        /* Collect rank, size and host name from every other process.
           Separate variables keep rank 0's own rank/size (the loop bound)
           from being overwritten by the received values. */
        for (i = 1; i < size; i++) {
            int peer_rank, peer_size, peer_namelen;
            char peer_name[MPI_MAX_PROCESSOR_NAME];

            MPI_Recv(&peer_rank, 1, MPI_INT, i, 1, MPI_COMM_WORLD, &stat);
            MPI_Recv(&peer_size, 1, MPI_INT, i, 1, MPI_COMM_WORLD, &stat);
            MPI_Recv(&peer_namelen, 1, MPI_INT, i, 1, MPI_COMM_WORLD, &stat);
            MPI_Recv(peer_name, peer_namelen + 1, MPI_CHAR, i, 1, MPI_COMM_WORLD, &stat);
            printf("Hello world: rank %d of %d running on %s\n", peer_rank, peer_size, peer_name);
        }
    } else {
        /* namelen excludes the terminating '\0', so send namelen + 1 chars. */
        MPI_Send(&rank, 1, MPI_INT, 0, 1, MPI_COMM_WORLD);
        MPI_Send(&size, 1, MPI_INT, 0, 1, MPI_COMM_WORLD);
        MPI_Send(&namelen, 1, MPI_INT, 0, 1, MPI_COMM_WORLD);
        MPI_Send(name, namelen + 1, MPI_CHAR, 0, 1, MPI_COMM_WORLD);
    }

    MPI_Finalize();
    return 0;
}
```

I use the following command. The `envfile` contains the MPI environment used inside the container.

```
mpirun -np 1 podman run --env-host --env-file envfile --userns=keep-id --network=host --pid=host --ipc=host -w /exports -v .:/exports:z dptech/vasp:5.4.4 /exports/mpitest
```

The following is the envfile:

```
MKLROOT=/opt/intel/oneapi/mkl/2022.0.2
MANPATH=/opt/intel/oneapi/mpi/2021.5.1/man:/opt/intel/oneapi/debugger/2021.5.0/documentation/man:/opt/intel/oneapi/compiler/2022.0.2/documentation/en/man/common::
LIBRARY_PATH=/opt/intel/oneapi/tbb/2021.5.1/env/../lib/intel64/gcc4.8:/opt/intel/oneapi/mpi/2021.5.1//libfabric/lib:/opt/intel/oneapi/mpi/2021.5.1//lib/release:/opt/intel/oneapi/mpi/2021.5.1//lib:/opt/intel/oneapi/mkl/2022.0.2/lib/intel64:/opt/intel/oneapi/compiler/2022.0.2/linux/compiler/lib/intel64_lin:/opt/intel/oneapi/compiler/2022.0.2/linux/lib
OCL_ICD_FILENAMES=libintelocl_emu.so:libalteracl.so:/opt/intel/oneapi/compiler/2022.0.2/linux/lib/x64/libintelocl.so
LD_LIBRARY_PATH=/home/rsync/.local/container/lib64:/home/rsync/.local/container/lib:/opt/intel/oneapi/tbb/2021.5.1/env/../lib/intel64/gcc4.8:/opt/intel/oneapi/mpi/2021.5.1//libfabric/lib:/opt/intel/oneapi/mpi/2021.5.1//lib/release:/opt/intel/oneapi/mpi/2021.5.1//lib:/opt/intel/oneapi/mkl/2022.0.2/lib/intel64:/opt/intel/oneapi/debugger/2021.5.0/gdb/intel64/lib:/opt/intel/oneapi/debugger/2021.5.0/libipt/intel64/lib:/opt/intel/oneapi/debugger/2021.5.0/dep/lib:/opt/intel/oneapi/compiler/2022.0.2/linux/lib:/opt/intel/oneapi/compiler/2022.0.2/linux/lib/x64:/opt/intel/oneapi/compiler/2022.0.2/linux/lib/oclfpga/host/linux64/lib:/opt/intel/oneapi/compiler/2022.0.2/linux/compiler/lib/intel64_lin
FPGA_VARS_DIR=/opt/intel/oneapi/compiler/2022.0.2/linux/lib/oclfpga
FI_PROVIDER_PATH=/opt/intel/oneapi/mpi/2021.5.1//libfabric/lib/prov:/usr/lib64/libfabric
CMPLR_ROOT=/opt/intel/oneapi/compiler/2022.0.2
CPATH=/opt/intel/oneapi/tbb/2021.5.1/env/../include:/opt/intel/oneapi/mpi/2021.5.1//include:/opt/intel/oneapi/mkl/2022.0.2/include:/opt/intel/oneapi/dev-utilities/2021.5.2/include
INTELFPGAOCLSDKROOT=/opt/intel/oneapi/compiler/2022.0.2/linux/lib/oclfpga
NLSPATH=/opt/intel/oneapi/mkl/2022.0.2/lib/intel64/locale/%l_%t/%N:/opt/intel/oneapi/compiler/2022.0.2/linux/compiler/lib/intel64_lin/locale/%l_%t/%N
PATH=/home/rsync/.local/container/bin:/home/rsync/.local/container/sbin:/opt/intel/oneapi/mpi/2021.5.1//libfabric/bin:/opt/intel/oneapi/mpi/2021.5.1//bin:/opt/intel/oneapi/mkl/2022.0.2/bin/intel64:/opt/intel/oneapi/dev-utilities/2021.5.2/bin:/opt/intel/oneapi/debugger/2021.5.0/gdb/intel64/bin:/opt/intel/oneapi/compiler/2022.0.2/linux/lib/oclfpga/bin:/opt/intel/oneapi/compiler/2022.0.2/linux/bin/intel64:/opt/intel/oneapi/compiler/2022.0.2/linux/bin:/home/rsync/.local/bin:/public1/clurm/bin:/usr/lib64/qt-3.3/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/ibutils/bin:/home/rsync/.local/bin:/home/rsync/bin:/opt/vasp.5.4.4/bin
TBBROOT=/opt/intel/oneapi/tbb/2021.5.1/env/..
INTEL_PYTHONHOME=/opt/intel/oneapi/debugger/2021.5.0/dep
CLASSPATH=/opt/intel/oneapi/mpi/2021.5.1//lib/mpi.jar
PKG_CONFIG_PATH=/opt/intel/oneapi/tbb/2021.5.1/env/../lib/pkgconfig:/opt/intel/oneapi/mpi/2021.5.1/lib/pkgconfig:/opt/intel/oneapi/mkl/2022.0.2/lib/pkgconfig:/opt/intel/oneapi/compiler/2022.0.2/lib/pkgconfig
GDB_INFO=/opt/intel/oneapi/debugger/2021.5.0/documentation/info/
INFOPATH=/opt/intel/oneapi/debugger/2021.5.0/gdb/intel64/lib
CMAKE_PREFIX_PATH=/opt/intel/oneapi/tbb/2021.5.1/env/..:/opt/intel/oneapi/compiler/2022.0.2/linux/IntelDPCPP
ONEAPI_ROOT=/opt/intel/oneapi
I_MPI_ROOT=/opt/intel/oneapi/mpi/2021.5.1
_=/opt/intel/oneapi/mpi/2021.5.1/bin/mpiexec.hydra
```


Hi,

Thanks for posting in Intel communities.

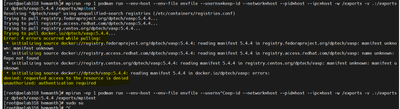

We tried to run the MPI program using Podman, but we get a permission-denied error from the Docker registry, as shown in the screenshot above.

Is it a private registry? If so, could you give us access to it?

Thanks & Regards,

Hemanth


Hi,

As you are having trouble replying to the existing post in the Intel communities, we have contacted you privately. Please check your inbox and respond to us.

Thanks & Regards,

Hemanth.


Hi,

Since we are communicating by email, and this is a duplicate of https://community.intel.com/t5/Intel-oneAPI-HPC-Toolkit/Re-Intel-oneAPI-with-Podman/m-p/1394023#M9611, we will no longer monitor this thread. We will continue addressing this issue in the other thread.

Thanks & Regards,

Hemanth
