
Hello, I've been having issues with some test programs failing with segmentation faults.

It occurs while using the Intel-provided oneAPI HPC Kit container (https://hub.docker.com/r/intel/oneapi-hpckit/) with I_MPI_FABRICS='shm' (which seems to be required when running in a container). The failures only occur on jobs running on a single node, in a call to MPI_IRECV.

I noticed this thread had a similar issue:

https://community.intel.com/t5/Intel-oneAPI-HPC-Toolkit/Intel-oneAPI-2021-4-SHM-Issue/m-p/1324805

That thread says the issue is fixed in the new version, but both in the container and when run directly, the newest versions give these errors whenever 'shm' is set. Switching to 'ofi' fixes the issue when run directly, but that's not an option in the container. Here are my ifort and MPI versions:

```text
ifort (IFORT) 2021.6.0 20220226
Intel(R) MPI Library for Linux* OS, Version 2021.6 Build 20220227 (id: 28877f3f32)
```
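As a side note (not part of the original post), a test program can print these two version strings itself, so every failing run carries them. A minimal sketch, assuming an MPI-3 library that provides the `mpi_f08` module and a Fortran 2008 compiler for `compiler_version()`; the program name is hypothetical.

```fortran
! Hypothetical helper: print the compiler and MPI library versions at run time.
program print_versions
  use, intrinsic :: iso_fortran_env, only: compiler_version
  use mpi_f08
  implicit none

  character(len=MPI_MAX_LIBRARY_VERSION_STRING) :: lib_version
  integer :: resultlen

  call MPI_Init()

  ! MPI-3 call returning the library's own version string and its length
  call MPI_Get_library_version(lib_version, resultlen)

  print '(a)', 'Compiler: ' // compiler_version()
  print '(a)', 'MPI:      ' // lib_version(1:resultlen)

  call MPI_Finalize()
end program print_versions
```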

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

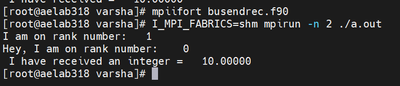

We can observe in your code that there are no wait calls for non-blocking send and receive calls. It is recommended to use the MPI_Wait calls after the ISend and IReceive calls.

And also, we recommend you use 2 or more ranks for launching your MPI Applications.

I_MPI_FABRICS=shm mpirun -n 2 ./a.out

Please try using the attached sample code where we were able to run the code successfully with the FI Provider as shm/ofi . Please find the below screenshot for more details:

Thanks & Regards,

Varsha

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Since this is an MPI question, moving this to the oneAPI HPC Toolkit forum.

---

Hi,

Thanks for posting in Intel Communities.

Could you please provide us with the OS details and CPU details you are using?

And also, could you please provide us with the complete sample reproducer code along with steps to reproduce the issue at our end?

Thanks & Regards,

Varsha

---

Hi,

We have not heard back from you. Could you please provide us with all the details mentioned in the previous post?

Thanks & Regards,

Varsha

---

```fortran
program shmbugtest
  use mpi
  implicit none

  integer, allocatable :: get_data(:), put_data(:)
  integer :: ierr
  type(MPI_REQUEST) :: req

  call MPI_init(ierr)
  allocate(get_data(1), put_data(1))
  put_data(1) = -1

  call MPI_ISEND( put_data, 1, MPI_INT, 0, 1, MPI_COMM_WORLD, req, ierr)
  call MPI_IRECV( get_data, 1, MPI_INT, 0, 1, MPI_COMM_WORLD, req, ierr)

  call MPI_finalize(ierr)
  print *, get_data(1)
end program
```

Here's a simple program that shows the bug. It prints '-1' if I_MPI_FABRICS is set to 'ofi', but crashes with the following error when it is set to 'shm':

```text
forrtl: severe (71): integer divide by zero
Image              PC                Routine            Line        Source
a.out              00000000004044BB  Unknown            Unknown     Unknown
libpthread-2.28.s  00007F0445C3FCE0  Unknown            Unknown     Unknown
libmpi.so.12.0.0   00007F04468790A4  Unknown            Unknown     Unknown
libmpi.so.12.0.0   00007F0446752035  MPI_Isend          Unknown     Unknown
libmpifort.so.12.  00007F0447B8E9F0  PMPI_ISEND         Unknown     Unknown
a.out              0000000000403511  MAIN__             16          shm.F90
a.out              0000000000403162  Unknown            Unknown     Unknown
libc-2.28.so       00007F044551FCF3  __libc_start_main  Unknown     Unknown
a.out              000000000040306E  Unknown            Unknown     Unknown
```

This happened on both machines I tested on: CentOS 8 (Stream) with an AMD EPYC 7742, and CentOS 7 with an Intel Xeon Platinum 8160. It also happens in the Docker Hub containers (shm seems to be required to use mpirun in the container; that's the main reason this is an issue).

---

Sorry, I thought I sent a reply earlier; it looks like it didn't go through.

Here's a short example I wrote up (the same `shmbugtest` program as in the post above).

It prints and exits successfully when I_MPI_FABRICS is set to 'ofi', but crashes when it is set to 'shm'. This happens with the newest compiler and MPI (oneAPI 2022.2) on two systems: CentOS 8 with an AMD EPYC 7742 and CentOS 7 with a Xeon Platinum 8160. My main issue is running in the container, since shm is required there to use mpirun.

---

Hi,

Thanks for the details and information.

Could you please let us know what steps you have followed after downloading the image?

Thanks & Regards,

Varsha

---

To cause the error? Just compile the code above and run:

```shell
export I_MPI_FABRICS=shm
mpirun -n 1 ./a.out
```

The issue is not container-specific, so maybe I shouldn't have mentioned it; the container is just where I first encountered it.
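When comparing shm and ofi runs like these, it can help if the test reports which `I_MPI_FABRICS` value it actually saw. A minimal sketch, assuming the `mpi_f08` module; the program name and the 64-character buffer are illustrative choices, not from the thread. It uses the standard Fortran intrinsic `get_environment_variable`.

```fortran
! Hypothetical helper: report the I_MPI_FABRICS setting seen by rank 0.
program show_fabric
  use mpi_f08
  implicit none

  character(len=64) :: fabric
  integer :: flen, status, rank

  call MPI_Init()
  call MPI_Comm_rank(MPI_COMM_WORLD, rank)

  ! status: 0 = found, -1 = found but truncated, 1 = not set, >= 2 = unavailable/error
  call get_environment_variable('I_MPI_FABRICS', fabric, flen, status)

  if (rank == 0) then
    select case (status)
    case (0, -1)
      ! flen is the full length, so clamp it to the buffer size when truncated
      print '(2a)', 'I_MPI_FABRICS=', fabric(1:min(flen, len(fabric)))
    case default
      print '(a)', 'I_MPI_FABRICS is not set'
    end select
  end if

  call MPI_Finalize()
end program show_fabric
```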

---

Hi,

We are working on your issue. We will get back to you soon.

Thanks & Regards,

Varsha

---

Hi,

We can see that your code has no wait calls for the non-blocking send and receive. We recommend calling MPI_Wait after the MPI_Isend and MPI_Irecv calls.
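A minimal sketch of what that change could look like for the reproducer in this thread. It is not Intel's attached sample code, and it makes a few assumptions beyond the original: it uses the `mpi_f08` module (where `type(MPI_Request)` is defined; the classic `mpi` module uses integer handles), the Fortran datatype `MPI_INTEGER` instead of the C datatype `MPI_INT`, and a separate request per call, because the original passes the same `req` to both calls, so the `MPI_ISEND` request is overwritten and can no longer be waited on.

```fortran
! Sketch: the self-send reproducer with both non-blocking requests completed.
program shmbugtest_wait
  use mpi_f08
  implicit none

  ! asynchronous: the buffers belong to MPI until the requests complete
  integer, asynchronous :: get_data(1), put_data(1)
  type(MPI_Request) :: reqs(2)
  integer :: rank

  call MPI_Init()
  call MPI_Comm_rank(MPI_COMM_WORLD, rank)

  put_data(1) = -1
  get_data(1) = 0

  ! Each rank sends one integer to itself with tag 1, as in the original test
  call MPI_Isend(put_data, 1, MPI_INTEGER, rank, 1, MPI_COMM_WORLD, reqs(1))
  call MPI_Irecv(get_data, 1, MPI_INTEGER, rank, 1, MPI_COMM_WORLD, reqs(2))

  ! Complete both requests before reading get_data or finalizing
  call MPI_Waitall(2, reqs, MPI_STATUSES_IGNORE)

  print *, get_data(1)
  if (get_data(1) /= -1) error stop 'unexpected value received'

  call MPI_Finalize()
end program shmbugtest_wait
```

It can be built with the Intel MPI Fortran wrapper (for example `mpiifort shmbugtest_wait.f90`) and launched with the same `mpirun` commands used in this thread.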

We also recommend using 2 or more ranks when launching your MPI applications:

```shell
I_MPI_FABRICS=shm mpirun -n 2 ./a.out
```
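One way to exercise two or more ranks is a ring exchange, where each rank sends to the next rank and receives from the previous one. This is a hypothetical sketch (not the attached sample), again assuming the `mpi_f08` module; it stops with an error if any rank receives something other than its predecessor's rank number.

```fortran
! Hypothetical ring exchange: rank r sends r to rank r+1 (mod nprocs).
program ring_exchange
  use mpi_f08
  implicit none

  integer, asynchronous :: sendbuf(1), recvbuf(1)
  type(MPI_Request) :: reqs(2)
  integer :: rank, nprocs, next, prev

  call MPI_Init()
  call MPI_Comm_rank(MPI_COMM_WORLD, rank)
  call MPI_Comm_size(MPI_COMM_WORLD, nprocs)

  next = mod(rank + 1, nprocs)
  prev = mod(rank - 1 + nprocs, nprocs)

  sendbuf(1) = rank
  recvbuf(1) = -1

  call MPI_Irecv(recvbuf, 1, MPI_INTEGER, prev, 1, MPI_COMM_WORLD, reqs(1))
  call MPI_Isend(sendbuf, 1, MPI_INTEGER, next, 1, MPI_COMM_WORLD, reqs(2))
  call MPI_Waitall(2, reqs, MPI_STATUSES_IGNORE)

  ! Every rank should now hold its predecessor's rank number
  if (recvbuf(1) /= prev) error stop 'ring exchange received the wrong value'
  print '(a,i0,a,i0)', 'rank ', rank, ' received ', recvbuf(1)

  call MPI_Finalize()
end program ring_exchange
```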

Please try the attached sample code, which we were able to run successfully with the FI provider set to both shm and ofi. Please see the screenshot below for more details.

Thanks & Regards,

Varsha

---

Hi,

We have not heard back from you. Could you please provide an update on your issue?

Thanks & Regards,

Varsha

---

Hi,

We have not heard back from you. This thread will no longer be monitored by Intel. If you need any additional information, please post a new question.

Thanks & Regards,

Varsha
