
I get a segmentation fault when running the test suite for parallel HDF5 (1.10.8) built with oneAPI 2021 update 4. The tests pass with the GNU compiler (4.8.5 or 9.1), with an older Intel compiler (2019 update 5 with 2019 update 8 MPI), or when using OpenMPI 4.1.2 compiled with the oneAPI compiler. The system specs are:

% cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.9 (Maipo)

% ucx_info -v

# UCT version=1.8.1 revision 6b29558

# configured with: --build=x86_64-redhat-linux-gnu --host=x86_64-redhat-linux-gnu --program-prefix= --disable-dependency-tracking --prefix=/usr --exec-prefix=/usr --bindir=/usr/bin --sbindir=/usr/sbin --sysconfdir=/etc --datadir=/usr/share --includedir=/usr/include --libdir=/usr/lib64 --libexecdir=/usr/libexec --localstatedir=/var --sharedstatedir=/var/lib --mandir=/usr/share/man --infodir=/usr/share/info --disable-optimizations --disable-logging --disable-debug --disable-assertions --disable-params-check --enable-examples --without-java --enable-cma --without-cuda --without-gdrcopy --with-verbs --without-cm --without-knem --with-rdmacm --without-rocm --without-xpmem --without-ugni

From the hdf5 directory, the steps to produce the error are:

setenv CC icc

setenv CXX icpc

setenv FC ifort

setenv F77 ifort

setenv F90 ifort

setenv CFLAGS "-O3 -fp-model precise"

setenv CXXFLAGS "-O3 -fp-model precise"

setenv FFLAGS "-O3 -fp-model precise"

setenv FCFLAGS "-O3 -fp-model precise"

setenv RUNPARALLEL "mpirun -np 4"

./configure --prefix=/usr/local/apps/phdf5-1.10.8/intel-21.4 --enable-fortran --enable-shared --enable-parallel --with-pic CC=mpiicc FC=mpiifort

make >& make.pintelmpi.log

make check >& make.pintelmpi.check

All tests pass until t_bigio, which can also be run standalone from the testpar directory:

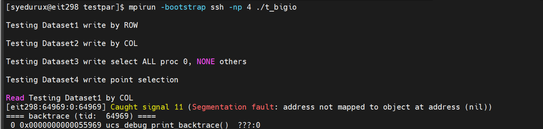

% mpirun -np 4 ./t_bigio

Testing Dataset1 write by ROW

Testing Dataset2 write by COL

Testing Dataset3 write select ALL proc 0, NONE others

Testing Dataset4 write point selection

Read Testing Dataset1 by COL

[atmos5:24597:0:24597] Caught signal 11 (Segmentation fault: address not mapped to object at address (nil))

==== backtrace (tid: 24597) ====

0 0x000000000004d445 ucs_debug_print_backtrace() ???:0

1 0x00000000006e5850 MPII_Dataloop_stackelm_offset() /build/impi/_buildspace/release/../../src/mpi/datatype/typerep/dataloop/segment.c:1026

2 0x00000000005216b9 segment_init() /build/impi/_buildspace/release/../../src/mpi/datatype/typerep/dataloop/looputil.c:318

3 0x00000000005216b9 MPIR_Segment_alloc() /build/impi/_buildspace/release/../../src/mpi/datatype/typerep/dataloop/looputil.c:369

4 0x000000000078fbef MPIR_Typerep_pack() /build/impi/_buildspace/release/../../src/mpi/datatype/typerep/src/typerep_pack.c:64

5 0x000000000081d215 pack_frame() /build/impi/_buildspace/release/../../src/mpid/ch4/shm/posix/eager/include/intel_transport_send.h:560

6 0x000000000081d215 progress_serialization_frame() /build/impi/_buildspace/release/../../src/mpid/ch4/shm/posix/eager/include/intel_transport_send.h:1235

7 0x000000000081603d impi_shm_progress() /build/impi/_buildspace/release/../../src/mpid/ch4/shm/posix/eager/include/intel_transport_progress.h:198

8 0x00000000001de023 MPIDI_SHM_progress() /build/impi/_buildspace/release/../../src/mpid/ch4/shm/src/../src/shm_noinline.h:106

9 0x00000000001de023 MPIDI_Progress_test() /build/impi/_buildspace/release/../../src/mpid/ch4/src/ch4_progress.c:152

10 0x00000000001de023 MPID_Progress_test() /build/impi/_buildspace/release/../../src/mpid/ch4/src/ch4_progress.c:218

11 0x00000000001de023 MPID_Progress_wait() /build/impi/_buildspace/release/../../src/mpid/ch4/src/ch4_progress.c:279

12 0x000000000079c74a MPIR_Waitall_impl() /build/impi/_buildspace/release/../../src/mpi/request/waitall.c:46

13 0x000000000079c74a MPID_Waitall() /build/impi/_buildspace/release/../../src/mpid/ch4/include/mpidpost.h:186

14 0x000000000079c74a MPIR_Waitall() /build/impi/_buildspace/release/../../src/mpi/request/waitall.c:173

15 0x000000000079c74a PMPI_Waitall() /build/impi/_buildspace/release/../../src/mpi/request/waitall.c:331

16 0x0000000000088824 ADIOI_R_Exchange_data() /build/impi/_buildspace/release/src/mpi/romio/../../../../../src/mpi/romio/adio/common/ad_read_coll.c:991

17 0x000000000008743d ADIOI_Read_and_exch() /build/impi/_buildspace/release/src/mpi/romio/../../../../../src/mpi/romio/adio/common/ad_read_coll.c:802

18 0x000000000008743d ADIOI_GEN_ReadStridedColl() /build/impi/_buildspace/release/src/mpi/romio/../../../../../src/mpi/romio/adio/common/ad_read_coll.c:247

19 0x0000000000adc6d9 MPIOI_File_read_all() /build/impi/_buildspace/release/src/mpi/romio/../../../../../src/mpi/romio/mpi-io/read_all.c:115

20 0x0000000000adc992 PMPI_File_read_at_all() /build/impi/_buildspace/release/src/mpi/romio/../../../../../src/mpi/romio/mpi-io/read_atall.c:58

21 0x00000000003b222a H5FD_get_mpio_atomicity() ???:0

22 0x000000000014ef20 H5FD_read() ???:0

23 0x0000000000126725 H5F__accum_read() ???:0

24 0x00000000002613e9 H5PB_read() ???:0

25 0x0000000000134959 H5F_block_read() ???:0

26 0x00000000003a3bd2 H5D__mpio_select_read() ???:0

27 0x00000000003a3e32 H5D__contig_collective_read() ???:0

28 0x00000000000eea22 H5D__read() ???:0

29 0x00000000000ee1cf H5Dread() ???:0

30 0x000000000040a86c ???() /work/STAFF/eanderso/hdf5-1.10.8/testpar/.libs/t_bigio:0

31 0x0000000000404436 ???() /work/STAFF/eanderso/hdf5-1.10.8/testpar/.libs/t_bigio:0

32 0x0000000000022555 __libc_start_main() ???:0

33 0x0000000000404269 ???() /work/STAFF/eanderso/hdf5-1.10.8/testpar/.libs/t_bigio:0

=================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 0 PID 24597 RUNNING AT atmos5

= KILLED BY SIGNAL: 11 (Segmentation fault)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 1 PID 24598 RUNNING AT atmos5

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 2 PID 24599 RUNNING AT atmos5

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 3 PID 24600 RUNNING AT atmos5

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================


Hi,

Thanks for reaching out to us.

Thanks for providing the steps to reproduce your issue.

We tried to reproduce it on both Ubuntu 18.04 and Rocky Linux 8.5 machines, using oneAPI 2021.3 and oneAPI 2021.4, with the following steps:

1. git clone https://github.com/HDFGroup/hdf5.git

2. cd hdf5

3. source /opt/intel/oneapi/setvars.sh

4. CC=icc CXX=icpc FC=ifort F77=ifort F90=ifort CFLAGS="-O3 -fp-model precise" CXXFLAGS="-O3 -fp-model precise" FFLAGS="-O3 -fp-model precise" FCFLAGS="-O3 -fp-model precise" RUNPARALLEL="mpirun -np 4" ./configure --prefix=/usr/local/apps/phdf5-1.10.8/intel-21.4 --enable-fortran --enable-shared --enable-parallel --with-pic CC=mpiicc FC=mpiifort

5. make >& make.pintelmpi.log

6. make check >& make.pintelmpi.check

(or)

cd testpar/ && mpirun -np 4 ./t_bigio

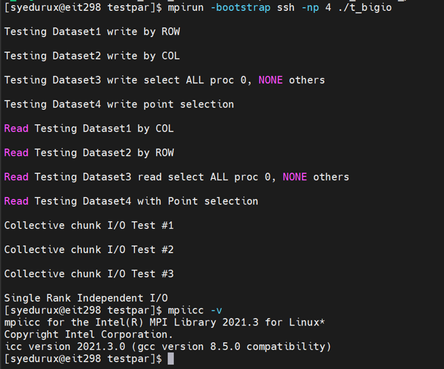

Using oneAPI 2021.3, the test runs fine on both Ubuntu 18.04 and Rocky Linux 8.5, as shown in the screenshot.

With oneAPI 2021.4, however, we get the same segmentation fault on both Ubuntu 18.04 and Rocky Linux 8.5, as shown in the screenshot.

We are working on your issue internally and will get back to you soon.

Thanks & Regards,

Santosh


Hello!

As a workaround for this issue, you can set I_MPI_SHM=off, which disables Intel MPI's shared-memory transport (the code path where the crash occurs in your backtrace). We are working on a fix.
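A minimal sketch of applying this workaround in the tcsh session from the original report. It assumes the variable is set in the shell that launches `mpirun`; because `RUNPARALLEL` invokes `mpirun`, setting it this way should also apply to `make check`. The `-genv` form is an alternative that scopes the setting to a single run. Expect some intra-node performance cost while shared memory is disabled.

```csh
# Disable Intel MPI's shared-memory transport for this shell session
setenv I_MPI_SHM off

# Rerun the previously failing test from the testpar directory
cd testpar
mpirun -np 4 ./t_bigio

# Alternative: pass the setting to all ranks for one run only,
# using the Hydra launcher's -genv <name> <value> option
mpirun -genv I_MPI_SHM off -np 4 ./t_bigio
```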

Thanks,

Vadim.


Hi,

Thank you for your patience. The issue you reported has been fixed in Intel MPI 2021.6 (oneAPI HPC Toolkit 2022.2). Please download it and let us know whether it resolves your issue.
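One way to check the fix after installing the newer toolkit, sketched in the same tcsh style. It assumes the 2021.6 environment is already active in the shell and that the Hydra launcher's `-V` (version) option is available. Because the fix is in the MPI library rather than in HDF5, rerunning against the new runtime may be enough; rebuilding HDF5 with the new `mpiicc` is the conservative option.

```csh
# Confirm which Intel MPI the launcher belongs to (expect 2021.6 or later)
mpirun -V

# Make sure the I_MPI_SHM=off workaround is not masking the result
unsetenv I_MPI_SHM

# Rerun the test that previously crashed, from the hdf5 directory
cd testpar
mpirun -np 4 ./t_bigio
```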

Thanks & Regards,

Santosh


Hi,

We assume that your issue is resolved. If you need any additional information, please post a new question, as this thread will no longer be monitored by Intel.

Thanks & Regards,

Santosh
