- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello!

I was wondering if anybody had suggestions on how to implement a summed area table with Intel TBB. The general idea of the algorithm:

1. Given an input, do an independent (inclusive) prefix scan on every row. Call this Intermediate.

2. Transpose Intermediate, call this IntermediateTranspose.

3. Do step (1) again, only do an inclusive prefix scan on every row of IntermediateTranspose. Call this OutputTranspose.

4. Transpose from (3) OutputTranspose -> Output.

To be clear, I'm *NOT* asking for anybody to code it for me! I'm very new to TBB and am struggling to find a way to do a prefix scan on independent rows. I am curious if there are any kinds of fancy iterators that I have not found yet. For example, a really dumb way to implement step (1):

for (row : input)

tbb:prefix_scan(/* just this row */);

Are there any ways to basically unfold the outer "for each row in the input" into a prefix_scan? I can't really seem to figure out how to approach the 2D case here...

In some senses you could think of it as a matrix multiplication (for just step (1)), so maybe doing that instead of prefix_scan would be better? I don't know how I would approach that with TBB either, but the concept is multiplying with an (excuse my bad math nomenclature) "upper right triangular matrix of 1s".

# +- -+ +- -+ +- -+ # | 1 2 3 | | 1 1 1 | | 1 3 6 | # | 4 5 6 | * | 0 1 1 | = | 4 9 15 | # | 7 8 9 | | 0 0 1 | | 7 15 24 | # +- -+ +- -+ +- -+

I wouldn't want to explicitly store the matrix though, and don't think doing an implicit matrix multiply is something that would work with TBB? Also, I'm working with images (non-square), so I don't think I can use this approach anyway...

Thank you for any suggestions!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Stephen,

I would parallelize the outer loop (over all rows) with parallel_for, using serial prefix sum for each row - unless the amount of rows is too small to feed all CPU cores with work. The implementation of parallel_scan needs to do almost twice as much work as the serial one, so if you have enough outer-level parallelism, you will save CPU cycles. Possibly you can merge it with Transpose step, filling columns of the intermediate storage as you process rows of the initial image; though this might potentially result in bad cache locality, so you should check which way gives better performance. Then you repeat the same procedure for the intermediate storage.

If you see that the outer-level parallelism leaves some spare CPU cycles, try using tbb::parallel_scan for each row and see if it will help.

Another way to think of this problem is the so-called wavefront pattern in 2D. Essentially, computation for each point should only be done when you know the results for the neighbor points above and at the left. These dependencies form a graph which can be used as a guide to explore parallelism. For efficiency, you would want to group points into blocks (rectangles) for sequential processing with good locality; processing of blocks will also form the same dependency pattern. There are several ways to express wavefront in TBB, see e.g. https://www.threadingbuildingblocks.org/docs/help/tbb_userguide/Design_Patterns/Wavefront.html for a parallel_do based example, and you can also use flow graph similarly to https://www.threadingbuildingblocks.org/docs/help/tbb_userguide/Dependence_Graph.html; and googling for "parallel wavefront tbb" might reveal some other useful information.

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Stephen,

I would parallelize the outer loop (over all rows) with parallel_for, using serial prefix sum for each row - unless the amount of rows is too small to feed all CPU cores with work. The implementation of parallel_scan needs to do almost twice as much work as the serial one, so if you have enough outer-level parallelism, you will save CPU cycles. Possibly you can merge it with Transpose step, filling columns of the intermediate storage as you process rows of the initial image; though this might potentially result in bad cache locality, so you should check which way gives better performance. Then you repeat the same procedure for the intermediate storage.

If you see that the outer-level parallelism leaves some spare CPU cycles, try using tbb::parallel_scan for each row and see if it will help.

Another way to think of this problem is the so-called wavefront pattern in 2D. Essentially, computation for each point should only be done when you know the results for the neighbor points above and at the left. These dependencies form a graph which can be used as a guide to explore parallelism. For efficiency, you would want to group points into blocks (rectangles) for sequential processing with good locality; processing of blocks will also form the same dependency pattern. There are several ways to express wavefront in TBB, see e.g. https://www.threadingbuildingblocks.org/docs/help/tbb_userguide/Design_Patterns/Wavefront.html for a parallel_do based example, and you can also use flow graph similarly to https://www.threadingbuildingblocks.org/docs/help/tbb_userguide/Dependence_Graph.html; and googling for "parallel wavefront tbb" might reveal some other useful information.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Alexey,

Thank you for such a thoughtful response! I originally ended up doing one of those

tbb::parallel_for(

tbb::blocked_range<unsigned>(0, Height),

[=] (const tbb::blocked_range<unsigned> &range) {

for (unsigned idy = range.begin(); idy != range.end(); ++idy) {

Body<Input, Output> body(input + (idy * Width), output + (idy * Width));

tbb::parallel_scan(tbb::blocked_range<unsigned>(0, Width), body);

}

}

);

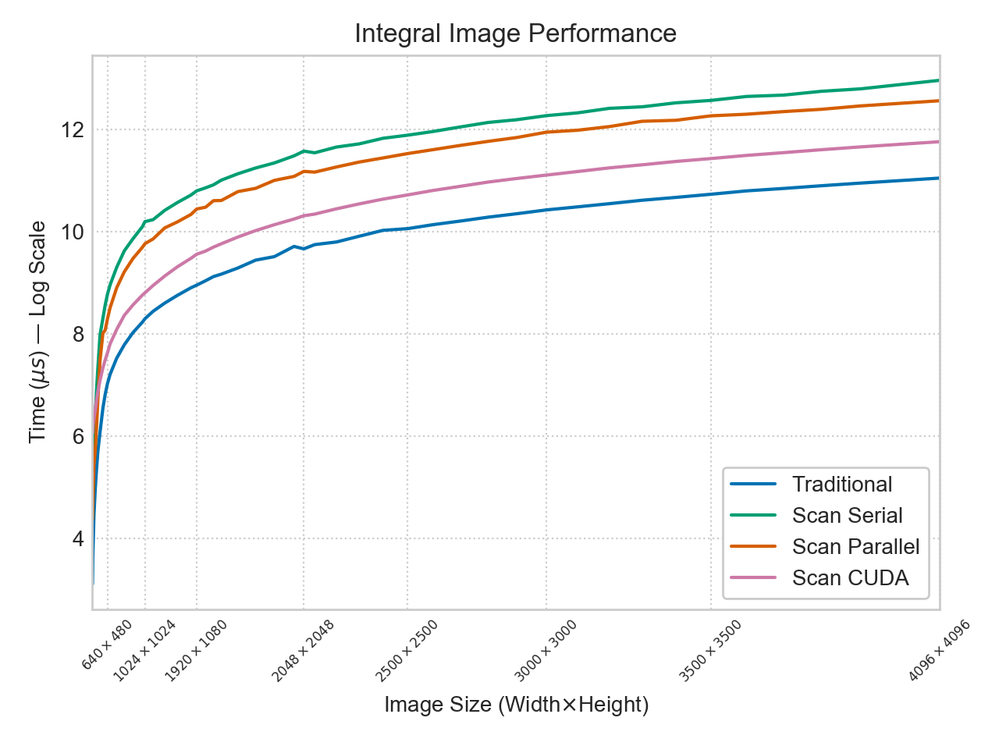

But I think your suspicions are correct, I should get rid of the scan on the inner loop. I expect that to do better than the scan. In the image below, "Traditional" just means do a serial loop for both, noting it does so well in part because Width and Height are compile time constants.

I'll definitely need to give the wavefront approach a go too, sounds fun :)

Out of curiosity, when you say use rectangular blocks, is there a reason why? I tuned the transpose part as best I could using square blocks and it does reasonably well, but maybe rectangular blocks are better?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Forgive me if it is a little off topic but the wavefront pattern (and its TBB implementation with parallel_do) has shown to be very efficient in this case:

It would be great to have a similar way to express this pattern on GPUs.

Laurent

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Very interesting paper, Laurent -- thanks for sharing :) It definitely confirms for me that I'm doing something wrong here.

Do you have any suggestions on choosing block sizes? Right now if I do anything smaller than (Width / 4) it's taking extremely long in the parallel_do. But that's a HUGE block size. Just looking for high level thoughts on block size choice so that I can keep investigating why my code is so slow.

At this point I'm pretty sure i've done this wrong, it gets the right results but I had to use an extra buffer to represent just the row sum. Doing this makes it even more I/O bound. I need to sleep on it and come back to it

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Well, I have not measured the latency of the TBB parallel_do construct. This latency determines the minimal task duration i.e. the block size in your case. Obviously the task duration grows faster with the block size in our 3D case. Actually I think that there is two kind latencies to be considered: the latency for task insertion and the latency for its scheduling/launch. We have noticed that the ParSec framework may exhibit less overhead than TBB.

In your case, if you consider that all blocks consume the same time (which is not clear because of memory traffic), you may improve the efficiency by performing a sequence of parallel_for on each diagonal fronts indexed by f=i+j with f \in [0,nx+ny+1].

The most important characteristic of your operation is its low arithmetic intensity. Ideally one should merge such operation with others that operates on the same data. If there is no possibility for such kernel merge, the priority is to minimize the data movements by avoiding multiple cross through the 2D Data. For example, the proposed global transposition is usually quite expensive if you data spills out of the cache. Each blocks should fit in the cache.

Of course the block size should be known statically for the complete unrolling of the block operations. In addition, the block data should be properly aligned for efficient vectorization. This impose some constraints on the block size (multiple of the width of the considered SIMD registers).

As a final remark, it can be interesting to evaluate your implementation by noticing that it can't be faster that the time required to read you N^2 elements on a given architecture. It may provide a useful upper bound for the reachable performance (classical roof-line analysis). The latest versions of Intel's vtune software is a very convenient tool for this kind of parallel performance analysis analysis.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

BTW I like the idea of a multidimensional prefix_scan as a standard parallel pattern.

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page