A maximum work-group size of 512 items is too small for Haswell: a 512-item work group cannot cover a Haswell half-slice.

The maximum work-group size for Haswell should be at least 10 EUs x 7 hardware threads x 8 work items (SIMD8) = 560.

A 512-item SIMD8 work group needs only 64 hardware threads, so it can fully occupy only 9 EUs.
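As a quick check of the arithmetic above, here is a small Python sketch. It assumes the figures quoted in this post (10 EUs per sub-slice, 7 hardware threads per EU, 8 work items per thread for a SIMD8 kernel); the constants and helper names are illustrative, not values queried from a driver.

```python
# Sub-slice figures as quoted in this post (assumed, not queried from hardware)
EUS_PER_SUBSLICE = 10
THREADS_PER_EU = 7
SIMD_WIDTH = 8  # SIMD8: 8 work items per hardware thread


def subslice_capacity(eus=EUS_PER_SUBSLICE, threads=THREADS_PER_EU, simd=SIMD_WIDTH):
    """Work items needed to give every hardware thread on a sub-slice a full SIMD8 slot."""
    return eus * threads * simd


def eus_fully_occupied(work_items, threads=THREADS_PER_EU, simd=SIMD_WIDTH):
    """Return (hardware threads used, EUs filled completely, threads on a partial EU)."""
    hw_threads = -(-work_items // simd)  # ceiling division
    full_eus, leftover = divmod(hw_threads, threads)
    return hw_threads, full_eus, leftover


print(subslice_capacity())      # 560
print(eus_fully_occupied(512))  # (64, 9, 1)
```

The 512-item case comes out as 64 hardware threads: 9 EUs filled completely plus a single thread on a tenth EU.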

What am I missing?

---

What you are missing is that a kernel launch usually has many work groups for Haswell to schedule, not just one.

So your conclusion is wrong: for kernels that use shared memory, the work-group size should not be too large, in order to keep synchronization overhead low.

In fact, the optimization guide recommends 64 work items per work group.

One last comment: please also state your target platform. I gather you are talking about Haswell's integrated graphics.

However, the title is confusing, as it reads as if you are targeting the Haswell CPU.

---

I understand that there could be many work groups, but it's often advantageous to coordinate as many work items as possible through shared local memory. The synchronization overhead is a far smaller cost than launching a second, coordinating kernel.

For example, on an HD5x00, many algorithms would benefit from launching 4 work groups of 560 work items rather than 8 work groups of 280 work items plus additional kernel launches.

I've already tested launching a 4 x 280 work item grid and it appears to fully occupy the IGP on an HD4600.
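The device-level numbers in this post follow from the same per-sub-slice figure of 560 SIMD8 work items. The sketch below assumes 2 sub-slices on the HD4600 and 4 on the HD5x00, which is what the group counts quoted in this thread imply; the dictionary and helper names are hypothetical.

```python
# Per-sub-slice figures used in this thread (assumed)
EUS_PER_SUBSLICE = 10
THREADS_PER_EU = 7
SIMD_WIDTH = 8

# Sub-slice counts implied by the posts in this thread (assumed)
SUBSLICES = {"HD4600": 2, "HD5x00": 4}


def device_capacity(gpu):
    """SIMD8 work items needed to give every hardware thread on the IGP one slot."""
    return SUBSLICES[gpu] * EUS_PER_SUBSLICE * THREADS_PER_EU * SIMD_WIDTH


def groups_to_fill(gpu, group_size):
    """Work groups of group_size needed to cover the whole IGP (rounded up)."""
    return -(-device_capacity(gpu) // group_size)


for gpu in SUBSLICES:
    print(gpu, device_capacity(gpu), groups_to_fill(gpu, 280), groups_to_fill(gpu, 560))
# HD4600 1120 4 2
# HD5x00 2240 8 4
```

So a 4 x 280 grid matches the HD4600's thread capacity, and on an HD5x00 the same coverage takes either 8 groups of 280 or 4 groups of 560.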

The Haswell IGP has 64KB of SLM per sub-slice. That's a gigantic 29 SLM dwords per work item for a hypothetical 560-item work group.
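The 29-dword figure is the sub-slice's SLM divided evenly across the work group. A minimal sketch, assuming 64KB of SLM per sub-slice (as stated above) and 4-byte dwords, rounding down to whole dwords; the helper name is illustrative.

```python
SLM_BYTES_PER_SUBSLICE = 64 * 1024  # 64KB of SLM per sub-slice, as stated above
DWORD_BYTES = 4


def slm_dwords_per_item(work_items, slm_bytes=SLM_BYTES_PER_SUBSLICE):
    """Whole 32-bit dwords of SLM each work item gets if the group splits SLM evenly."""
    return slm_bytes // DWORD_BYTES // work_items


print(slm_dwords_per_item(560))  # 29
print(slm_dwords_per_item(512))  # 32
```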

Your answer is not convincing, because shared memory and synchronization aren't the issue here. A limit of 512 makes no sense: it artificially cripples the IGP for many GPU algorithms and potentially leaves one EU unoccupied. It was not a limit on Ivy Bridge.

This is a bug or an oversight, unless there is a limit imposed by the hardware.

Please fix or clarify why 512 is the limit.

Thanks!

---

512 is the max power-of-two that fits on a sub-slice. Hence the choice.

Thanks,

Raghu
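Raghu's reasoning can be reproduced in a couple of lines: take the SIMD8 capacity of one sub-slice (10 EUs x 7 threads x 8 work items, as computed earlier in the thread) and round it down to a power of two. The helper name is illustrative.

```python
def largest_power_of_two_at_most(n):
    """Largest power of two that does not exceed n (requires n >= 1)."""
    return 1 << (n.bit_length() - 1)


capacity = 10 * 7 * 8  # SIMD8 work items on one sub-slice
print(largest_power_of_two_at_most(capacity))  # 512
```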

---

That's unfortunate.

If this isn't a hardware limitation, then the driver should be fixed so that a 70-hardware-thread SIMD8/16/32 work group can be launched on a sub-slice.

As noted above, if Intel wants its IGPs to demonstrate top performance then the driver should properly support mapping a work group onto the underlying hardware. This comes up again and again in GPU code (I have many kernels that would benefit).

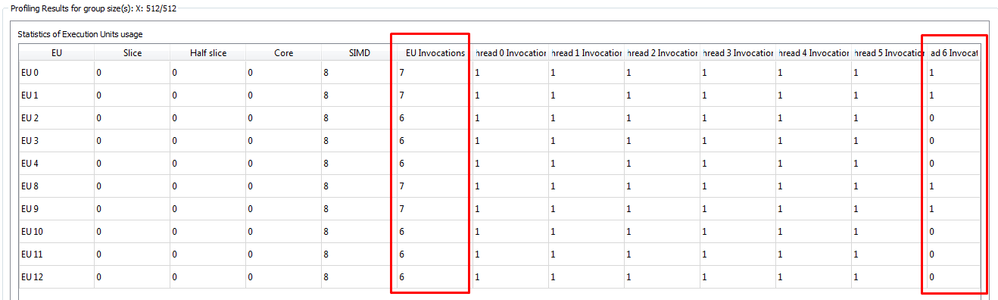

Until then, 512-item SIMD8 kernels unnecessarily leave 6 EU threads (and their vast general register files) idle on a sub-slice:

Only an extremely small (48-item) work group could run alongside this kernel, which means those EU threads are most likely going to be wasted.
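The 6 idle threads and the 48-item figure follow directly from the sub-slice's 70 hardware threads. This sketch assumes a co-resident work group would also be compiled SIMD8 and that hardware threads are the only constraint (it ignores SLM and any other per-sub-slice limits); the helper name is hypothetical.

```python
THREADS_PER_SUBSLICE = 10 * 7  # 10 EUs x 7 hardware threads (assumed, as above)
SIMD_WIDTH = 8


def leftover(group_items, simd=SIMD_WIDTH, threads=THREADS_PER_SUBSLICE):
    """Return (idle hardware threads, largest SIMD8 group that could fill them)."""
    used = -(-group_items // simd)  # hardware threads taken by the resident group
    idle = threads - used
    return idle, idle * simd


print(leftover(512))  # (6, 48)
```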

Also note that being forced to launch more work groups will usually activate a dormant sub-slice. On an HD4600 IGP I'm observing that launching 2 x 280-item work groups schedules the work across both of the IGP's sub-slices. This is expected and maximizes throughput, but it is not ideal if the developer would rather have launched a 560-item SIMD8 group that needs to communicate via SLM. Again, there are plenty of GPU kernels that perform best when launched as wide a group as possible in order to leverage SLM.

Hopefully this is just a driver issue and not a h/w limitation as there is a lot of GPU code out there that can now be ported to Intel IGPs. Furthermore, I hope this limit gets fixed before the sub-slices become even wider (more EUs) in the future.

Engineers/PMs from Intel: ping me offline if you need examples of why this is a necessary feature.

---

I'm not sure, but this looks encouraging: http://lists.freedesktop.org/archives/beignet/2014-June/003496.html

Can the SDK for OpenCL team remove any similar restrictions?

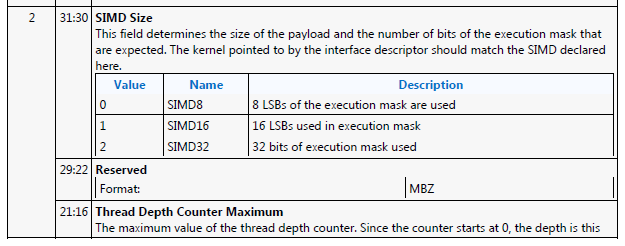

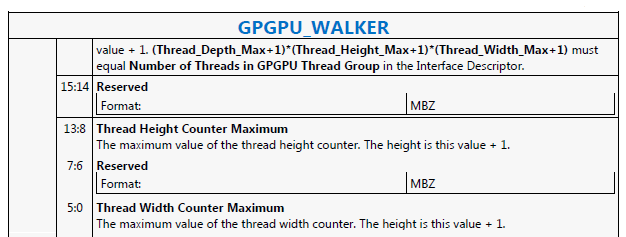

I got an answer on the Beignet list: the number of EU threads in a work group is capped at 64 in each dimension by a 6-bit field in the GPGPU_WALKER command.

So 512-item SIMD8 work groups are the limit on Haswell. The 6-bit field is plenty for pre-Haswell IGPs but, as I described earlier in this thread, is a minor limitation on Haswell.

Hopefully future IGPs use some of those extra reserved bits!
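The Beignet answer translates into numbers as follows. This sketch assumes only what is stated above: a 6-bit thread-count field allowing at most 64 threads, and SIMD8 work groups. It also computes how many bits a field would need to distinguish 70 thread counts (one per hardware thread on a sub-slice); that figure is derived here, not taken from hardware documentation.

```python
FIELD_BITS = 6             # width of the thread-count field cited from the Beignet list
SIMD_WIDTH = 8
THREADS_NEEDED = 10 * 7    # hardware threads on one sub-slice (assumed, as above)

max_threads = 1 << FIELD_BITS                    # 64 distinct values -> at most 64 threads
max_simd8_items = max_threads * SIMD_WIDTH       # largest SIMD8 work group
bits_needed = (THREADS_NEEDED - 1).bit_length()  # smallest b with 2**b >= 70

print(max_threads, max_simd8_items, bits_needed)  # 64 512 7
```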
