I asked a similar question last year and want to know: is there now any way to coax the compiler into mapping "vectorized" code onto the IGP?

More specifically, I'd like to launch a workgroup where each work item is a SIMD4 or SIMD4x2 vector and the number of vector registers per work item might approach 128.

I have a few interesting, near-embarrassingly parallel kernels that require a few rounds of inter-lane communication across SIMD lanes. The kernels map well to AVX2 and to GPU architectures with swizzle/permute/shuffle support. They also work without a swizzle, but then every exchange has to bounce through local memory. Avoiding local memory provides a decent speedup.

With the current scalar-per-work-item code generation (SIMD8/16/32?) and no access to cross-lane swizzles within a subgroup, all communication has to be bounced through shared (local) memory, whereas executing in SIMD4/SIMD4x2 mode would presumably let me use the hardware's SIMD swizzle support.
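For illustration, here is a plain-C model (not OpenCL C) of the local-memory fallback: every exchange round is a store to a shared array, a barrier, and a load of a partner lane's slot. The function names and the 32-lane cap are hypothetical, the barrier is modeled by finishing all stores before any load, and the xor butterfly is just one example of "a few rounds of inter-lane communication".

```c
#include <assert.h>
#include <stddef.h>

#define MAX_LANES 32  /* hypothetical upper bound on lanes per group */

/* One exchange round through "local memory": every lane writes its value,
   a barrier separates writes from reads, then each lane reads the slot of
   its partner lane (lane ^ mask). */
static void local_exchange_xor(const float *in, float *out, size_t lanes, size_t mask)
{
    float local_mem[MAX_LANES];  /* stands in for __local storage */
    assert(lanes <= MAX_LANES);

    for (size_t lane = 0; lane < lanes; ++lane)  /* store phase */
        local_mem[lane] = in[lane];

    /* barrier(CLK_LOCAL_MEM_FENCE) would sit here in a real kernel */

    for (size_t lane = 0; lane < lanes; ++lane)  /* load phase */
        out[lane] = local_mem[lane ^ mask];
}

/* Butterfly all-reduce: log2(lanes) rounds, each bounced through local
   memory. lanes must be a power of two; afterwards every lane holds the sum. */
static void butterfly_sum_via_local(float *v, size_t lanes)
{
    float partner[MAX_LANES];
    assert(lanes <= MAX_LANES && (lanes & (lanes - 1)) == 0);

    for (size_t mask = 1; mask < lanes; mask <<= 1) {
        local_exchange_xor(v, partner, lanes, mask);
        for (size_t lane = 0; lane < lanes; ++lane)
            v[lane] += partner[lane];
    }
}
```

Each round pays for a store, a barrier and a load; a cross-lane swizzle would let each lane read its partner's value directly instead.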

I assume the answer is still "no", but vectorizer "knobs" might be a useful feature to add to future versions of the IGP compiler.

Hi Allan,

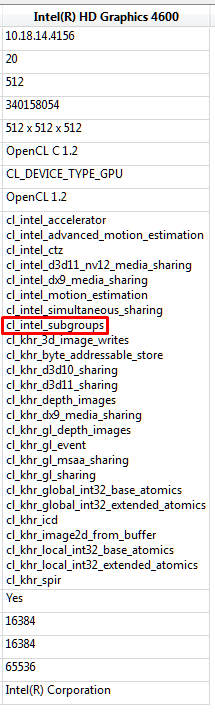

We support the Intel subgroups extension in our currently shipping driver:

https://www.khronos.org/registry/cl/extensions/intel/cl_intel_subgroups.txt

Take a look, especially at the four shuffle functions (intel_sub_group_shuffle, intel_sub_group_shuffle_down, intel_sub_group_shuffle_up and intel_sub_group_shuffle_xor), and let me know if this is something you could use. We cannot do SIMD4 or SIMD4x2 even with the internal driver controls, but the subgroup functions could help your cause.
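A plain-C model of what the four shuffles return may help when reading the spec. This is a sketch based on a reading of the extension text, not Intel's implementation: each array holds one value per work item of an n-lane subgroup, out[i] is what work item i gets back, the emu_* names are hypothetical, and the model uses a single delta or mask for the whole subgroup for simplicity.

```c
#include <assert.h>
#include <stddef.h>

/* intel_sub_group_shuffle(data, c): lane i receives data from lane c[i]. */
static void emu_shuffle(const int *data, const unsigned *c, int *out, size_t n)
{
    for (size_t i = 0; i < n; ++i) {
        assert(c[i] < n);  /* out-of-range indices are undefined */
        out[i] = data[c[i]];
    }
}

/* intel_sub_group_shuffle_down(current, next, delta): lane i receives
   current from lane i + delta, or next from lane i + delta - n past the end. */
static void emu_shuffle_down(const int *current, const int *next, int *out,
                             size_t n, size_t delta)
{
    assert(delta <= n);
    for (size_t i = 0; i < n; ++i) {
        size_t src = i + delta;
        out[i] = (src < n) ? current[src] : next[src - n];
    }
}

/* intel_sub_group_shuffle_up(previous, current, delta): lane i receives
   current from lane i - delta, or previous from lane i - delta + n. */
static void emu_shuffle_up(const int *previous, const int *current, int *out,
                           size_t n, size_t delta)
{
    assert(delta <= n);
    for (size_t i = 0; i < n; ++i)
        out[i] = (i >= delta) ? current[i - delta] : previous[i + n - delta];
}

/* intel_sub_group_shuffle_xor(data, value): lane i receives data from
   lane i ^ value. */
static void emu_shuffle_xor(const int *data, int *out, size_t n, size_t value)
{
    for (size_t i = 0; i < n; ++i) {
        assert((i ^ value) < n);
        out[i] = data[i ^ value];
    }
}
```

In this model, passing the same array as both `current` and `next` turns shuffle_down into a rotation across the subgroup.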

OK, wow!

The subgroup shuffle is exactly what I need for this problem (and many others).

Do you know the general max subgroup size that Broadwell developers should expect to see? Is it 8, 16 or 32?

Thanks for the pointer -- this is good stuff.

Here is the answer from the developer that actually used this feature to speed up his code:

As I understand it, you must compile the kernel first and then query it to find the max subgroup size, because we only choose the SIMD size once the kernel has been compiled.

A better way will be coming eventually…

My take: in the best case your kernel is compiled SIMD32, so the max subgroup size is 32, but you won't know that until you query the kernel.

Excellent. I can live with the max subgroup size query for now.

My finger is hovering over the button to buy a Broadwell NUC. I'm still waiting for the HD 6000 variant to come into stock, but sites show it arriving within the next couple of weeks.

I completely missed that the cl_intel_subgroups extension is also supported on OpenCL 1.2 Haswell IGPs -- at least it is on the HD4600.

I just ported a bunch of kernels from CUDA to Haswell/Broadwell, and having shuffle operations made the job pretty easy.

You need to promote these new features! :)

FYI, if you're using the cl_intel_subgroups extension on an OpenCL 1.2 device and want to see which subgroup size the compiler selected, you'll need to acquire a handle to Intel's implementation of clGetKernelSubGroupInfoKHR(), the subgroup info function that is otherwise OpenCL 2.0-only.

The function prototype can be found in <CL/cl_ext.h>.

Getting the function pointer is accomplished with clGetExtensionFunctionAddressForPlatform():

```c
clGetKernelSubGroupInfoKHR_fn pfn_clGetKernelSubGroupInfoKHR = (clGetKernelSubGroupInfoKHR_fn)
    clGetExtensionFunctionAddressForPlatform(platform_id, "clGetKernelSubGroupInfoKHR");
```

Curiously, on a Haswell HD 4600 I've only seen subgroups of size 8, but that might just be a property of my codebase.

[ Edit: teeny tiny kernels report subgroups of size 16 ]

I'm currently using it like crazy in my sorting algorithm and it appears to work very well.
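The post doesn't say how the sort uses shuffles. As a hypothetical illustration only (not the author's algorithm), here is a plain-C model of one common pattern: a bitonic sorting network across the lanes of a subgroup, where each compare-exchange step fetches the partner's key with what would be `intel_sub_group_shuffle_xor(key, j)` in a kernel. The function name and the 32-lane cap are assumptions.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_LANES 32  /* hypothetical upper bound on subgroup size */

/* Sorts one key per lane, ascending by lane id. n must be a power of two.
   Each (k, j) step models one xor shuffle: every lane reads its partner's
   key (lane ^ j) and keeps either the min or the max of the pair. */
static void subgroup_bitonic_sort(int *key, size_t n)
{
    int other[MAX_LANES];
    assert(n <= MAX_LANES && (n & (n - 1)) == 0);

    for (size_t k = 2; k <= n; k <<= 1) {          /* size of bitonic runs */
        for (size_t j = k >> 1; j > 0; j >>= 1) {  /* compare distance */
            for (size_t i = 0; i < n; ++i)         /* the "shuffle_xor" */
                other[i] = key[i ^ j];
            for (size_t i = 0; i < n; ++i) {
                int lower = (i & j) == 0;          /* lower lane of the pair */
                int ascending = (i & k) == 0;      /* direction of this run */
                int lo = key[i] < other[i] ? key[i] : other[i];
                int hi = key[i] < other[i] ? other[i] : key[i];
                key[i] = (lower == ascending) ? lo : hi;
            }
        }
    }
}
```

Every partner value comes from another lane rather than from local memory, which is where a shuffle-based version would save the store/barrier/load round trips.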

Here's an enhancement request:

It would be great to get some sort of compile-time indication or guarantee that a certain subgroup size was selected, in order to close off certain code paths.

If only the compiler can determine the subgroup size, your codebase depends on a particular subgroup size, and changes to the code change which subgroup size is selected, then ... it becomes a circular mess.

There is always the option of probing the subgroup size at runtime with get_sub_group_size() but I would caution against that approach. It may be portable but it's probably not performant.

But if get_sub_group_size() were a compile-time constant, resolved early enough to eliminate dead code, it would provide both portability and performance.
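A plain-C analogy of that trade-off, assuming a hypothetical `SUB_GROUP_SIZE` macro supplied at build time (for example through a `-D` option in the program build options). The runtime version must handle whatever size it is given; the compile-time version hands the optimizer a constant. The assert reflects the circular problem: nothing guarantees the compiler actually picked the size the code was built for.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical build-time constant, e.g. "-D SUB_GROUP_SIZE=8". */
#ifndef SUB_GROUP_SIZE
#define SUB_GROUP_SIZE 8
#endif

/* Number of xor-shuffle rounds for a butterfly reduction over n lanes
   (n a power of two). */
static unsigned reduction_rounds(size_t n)
{
    unsigned rounds = 0;
    for (size_t mask = 1; mask < n; mask <<= 1)
        ++rounds;
    return rounds;
}

/* Runtime flavour: the size is only known when the call happens (compare
   get_sub_group_size()), so the loop and any size-specific branches stay
   in the generated code. */
static unsigned rounds_runtime(size_t queried_size)
{
    return reduction_rounds(queried_size);
}

/* Compile-time flavour: the argument is a constant, so an optimizing
   compiler can fold the loop and drop paths for other sizes. The assert
   catches the case where the selected size differs from the assumed one. */
static unsigned rounds_static(size_t queried_size)
{
    assert(queried_size == SUB_GROUP_SIZE);
    return reduction_rounds(SUB_GROUP_SIZE);
}
```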

It would be great to hear how Intel actually implements this super useful extension.
