Hello

I am testing the kernel launch latencies of the CPU and GPU by timing EnqueueNDRangeKernel on a blank kernel (but with arguments). I found that the CPU consistently takes about 150 us, while the GPU takes much longer. Further tests revealed that the GPU's latency scales with the size of the buffer provided as a kernel argument. For my 3072x3072 buffer, the GPU's launch latency is ~1600 us. For a smaller 1024x1024 buffer, it is ~450 us. Note that the global work size is kept constant throughout.

Can someone shed some light on:

1) Why is the GPU's launch overhead higher than the CPU's, and is there any way to mitigate this?

2) Why does the GPU's launch overhead scale with the buffer size? Since no PCIe is involved, shouldn't all data transfer happen during execution and not during kernel launch?

I am using the latest OpenCL runtimes for Windows running on Intel 4200u / HD 4400.
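To put the two GPU measurements above in perspective, here is a rough two-point linear fit. It is only a sketch: it assumes the latency is a fixed cost plus a cost proportional to the number of buffer elements, which two data points cannot confirm, and the variable names are made up for illustration.

```python
# Two-point linear fit: latency_us = fixed_us + per_elem_us * n_elements.
# The two data points are the GPU measurements quoted in the post.
small_n, small_us = 1024 * 1024, 450.0
large_n, large_us = 3072 * 3072, 1600.0

# Slope and intercept of the line through the two points.
per_elem_us = (large_us - small_us) / (large_n - small_n)
fixed_us = small_us - per_elem_us * small_n

print(f"size ratio:    {large_n / small_n:.1f}x")
print(f"latency ratio: {large_us / small_us:.2f}x")
print(f"fixed part:    {fixed_us:.2f} us")
print(f"variable part: {per_elem_us * 1e6:.1f} us per million elements")
```

Under that assumption, the buffer grows 9x while the latency grows by less than 4x, and the fit leaves a size-independent part of roughly 306 us. More buffer sizes would be needed to tell whether the relationship is really linear.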

Thanks

Hi Supradeep,

1) Kernel launch overhead on the GPU is expected to be higher, because the kernel has to be set up on the GPU. GPU execution pays off when (i) processing and accelerating large data sets, and the best acceleration is achieved with (ii) pipelined designs.

Warming up: executing a kernel on the GPU a couple of times before using or measuring it can give completely different, and better, results.
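The warm-up idea can be sketched as a small timing harness. This is an illustration only: `time_launch` and its `launch` argument are hypothetical names, and `launch` stands in for whatever enqueues the kernel and waits for it to complete. With a real OpenCL queue, event profiling via `clGetEventProfilingInfo` would separate queueing, submission, and execution time more precisely than host wall-clock timing.

```python
import statistics
import time


def time_launch(launch, warmup=5, repeats=50):
    """Return the median wall-clock time of launch() in microseconds.

    launch is a hypothetical zero-argument callable standing in for
    "enqueue the kernel and wait for it"; it is not an OpenCL API.
    """
    for _ in range(warmup):
        launch()  # untimed runs absorb one-time setup cost

    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        launch()
        samples.append((time.perf_counter() - start) * 1e6)
    return statistics.median(samples)


if __name__ == "__main__":
    # Stand-in workload so the sketch runs without an OpenCL runtime.
    print(f"{time_launch(lambda: sum(range(1000))):.1f} us")
```

The median is used rather than the mean so that an occasional slow run does not dominate the reported figure.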

2) The driver handles the kernel setup, and enqueuing buffers involves data transfers.

- In your experiment, were you using the CPU as an OpenCL device?

- Even though the GPU is embedded, it still uses its own video memory, which is not shared with the CPU.

- If your dataset fits into the CPU cache all at once, the CPU alone will most probably achieve the fastest results.

Best regards,

Tamer

Thanks for the helpful answer. Can you clarify one part of it?

- Even though the GPU is embedded, it still uses its own video memory, which is not shared with the CPU.

I'm creating my buffers to be zero-copy using CL_MEM_USE_HOST_PTR in the CPU-GPU shared context as in https://software.intel.com/en-us/articles/getting-the-most-from-opencl-12-how-to-increase-performance-by-minimizing-buffer-copies-on-intel-processor-graphics.

I know that the GPU has some dedicated memory reserved in the DRAM. So are you saying that whenever a kernel is launched, all buffers associated with it are first copied to this video memory? But doesn't this violate the 'zero' in 'zero-copy'?
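One thing worth checking here is whether the host allocation actually qualifies for zero-copy. As far as I recall, the linked article asks for a `CL_MEM_USE_HOST_PTR` pointer aligned to a 4096-byte boundary and a total size that is a multiple of 64 bytes; treat both numbers as assumptions to verify against the article. The helper names and the 4-byte element size below are hypothetical.

```python
PAGE_ALIGN = 4096   # assumed base-address alignment, in bytes
SIZE_MULTIPLE = 64  # assumed size granularity, in bytes


def zero_copy_eligible(address, size_bytes):
    """True if a host allocation meets the assumed zero-copy conditions."""
    return address % PAGE_ALIGN == 0 and size_bytes % SIZE_MULTIPLE == 0


def padded_size(size_bytes):
    """Round size_bytes up to the next multiple of SIZE_MULTIPLE."""
    return -(-size_bytes // SIZE_MULTIPLE) * SIZE_MULTIPLE


# 3072 x 3072 elements of 4 bytes each (the element size is an assumption).
size = 3072 * 3072 * 4
print(zero_copy_eligible(0x10000, size))  # page-aligned example address
print(zero_copy_eligible(0x10010, size))  # address 16 bytes off a page boundary
print(padded_size(1000))
```

If a buffer fails such a check, the runtime may fall back to copying, which would be one possible reason for a cost that grows with buffer size.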

Thanks

Hi Surdeep,

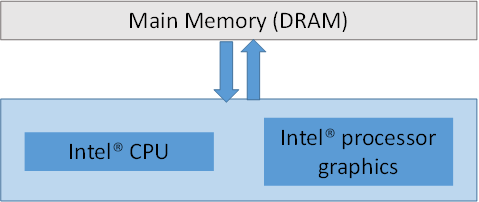

It is true that buffers can be created in shared memory. The referenced article demonstrates the shared memory approach, which uses the system's shared memory, not the GPU's:

-----------------------

Figure 1. Relationship of the CPU, Intel® processor graphics, and main memory. Notice a single pool of memory is shared by the CPU and GPU, unlike discrete GPUs that have their own dedicated memory that must be managed by the driver.

-----------------------

You might want to measure the performance of your specific solution using both approaches, taking the CL memory types and optimizations into account.

Best regards,

Tamer
