I have an Arria 10 design with a PCIe core (hard-IP, 8 lanes, gen 3, Avalon memory-mapped, w/ DMA). The only timing errors I am getting are related to the reset generated by the PCIe core. The reset is synchronized to the 250 MHz clock domain, and is used for both core-internal logic (DMA controller, etc.) and attached external (user-generated) logic. Both setup and recovery violations occur, with worst-case negative slack of more than 1 ns. Here is an example:

-1.119

from node: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_rst_sync.syncrstn_avmm_sriov.app_rstn_altpcie_reset_delay_sync_altpcie_a10_hip_hwtcl|sync_rst[0]

to node: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_avmm_256_dma.avmm_256_dma.altpcieav_256_app|write_data_mover_2|dma_wr_wdalign|desc_lines_release_reg[8]

launch clock: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|wys~CORE_CLK_OUT

latch clock: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|wys~CORE_CLK_OUT

clock relationship: 4.000

-0.178 4.976 1

Initially I was seeing errors for external logic as well, but placing a register at the boundary of the core and retiming the reset before feeding it to the external logic eliminated those errors, so it now appears errors are limited to the PCIe core internals.
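For readers who want to see what such a boundary register might look like, here is a minimal sketch. The module name `reset_retime`, its port names, and the active-low polarity are assumptions for illustration; they are not taken from the PCIe IP or from the design discussed here.

```verilog
// Hypothetical boundary register for the reset leaving the PCIe core.
// All names here are illustrative; polarity (active low) is an assumption.
module reset_retime (
    input  wire clk,       // 250 MHz core clock the reset is already synchronous to
    input  wire rst_n_in,  // reset as it leaves the PCIe core
    output reg  rst_n_out  // retimed copy that feeds only the user logic
);
    // Power up with the reset asserted until the first clock edge.
    initial rst_n_out = 1'b0;

    // One extra register stage: adds a cycle of latency to the reset but
    // gives the fitter a local source it can place near the user logic.
    always @(posedge clk)
        rst_n_out <= rst_n_in;
endmodule
```

A register like this only shortens the path to the external logic. The reset fan-out inside the PCIe/DMA core is unchanged, which is consistent with the remaining violations being confined to the core.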

I put the sync_rst[] signals on globals, but this did not seem to help (maybe they were already on globals?).

Ideas?

Thanks.

Hi Sir,

Did you try this in the generated example design and get the same timing violation?

I have not done that, yet. I did a new compile today with pin location changes, and I get some improvement (worst-case negative slack is now ~800ps). But I also can now see some other timing violations, still in the PCIe/DMA core, but this time in a datapath:

-0.231

from node: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_avmm_256_dma.avmm_256_dma.altpcieav_256_app|hip_inf|rx_input_fifo|fifo_reg[0][24]

to node: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_avmm_256_dma.avmm_256_dma.altpcieav_256_app|hip_inf|rx_input_fifo|fifo_reg[7][210]

launch clock: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|wys~CORE_CLK_OUT

latch clock: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|wys~CORE_CLK_OUT

clock relationship: 4.000

This is more troubling, as it is a datapath. It is also a bit more puzzling, as it is probably a relatively low fanout path, so why shouldn't it make timing? And again, this is entirely within the PCIe macro, so I can't modify the code in any way.

I compiled the Arria 10 PCIe design and I don't see the timing issue. If the timing violation came from the core, I should see the same in my design as well. Did you add any user logic to the reset of the IP core?

The timing errors are coming from the DMA portion of the core; the DMA is not hard IP, so place and route, and the resulting timing, are design-dependent. Just because it works for you does not mean it will work for me. Also, you may be using a different version of Quartus and a different operating system than I am using; unlike the old SR system, this ('The Community') lame excuse for customer service does not provide a mechanism to convey that information.

To answer your other question, I did register the reset output from the PCIe core, which eliminated the errors in my user-generated code. But that does not solve the problems within the PCIe/DMA core.

I see the negative slack is more than 1 ns (-1.119), so it is unlikely to be a place-and-route issue (place-and-route variation usually gives only a small timing difference). It looks more like some extra constraint or routing is causing the issue.

So, may I know which Quartus version you are using? Maybe I should try that Quartus version and see if it gives worse timing.

I am running QPP version 18.1.0 Build 222.

A few days ago I tried to partition and floorplan the design, but I was getting errors, including:

Error (14566): The Fitter cannot place 1 periphery component(s) due to conflicts with existing constraints (1 clock core fanout(s)). Fix the errors described in the submessages, and then rerun the Fitter.

It's not clear exactly where that error is occurring, but I did declare that reset signal from the PCIe core as a global. If I remove all of the Logic Lock regions, the error goes away. I will have to experiment a bit to find out which partition causes the problem when it is locked down.

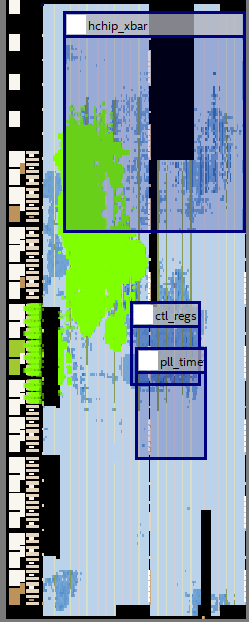

I just did a build with some of the design floor-planned (but not the PCIe core), and the worst-case negative slack is now under 700ps. Here is a screenshot of chip planner; the bright green is the PCIe/DMA core.

That thing takes up a lot of space.

Hi Sir,

I compiled the PCIe example design for Arria 10 using 18.1. The worst recovery slack that I get is 0.792. I don't think place and route alone could move the slack from 792ps to -700ps.

What is the device speed grade (or OPN) that you are using for your compilation?

A suggestion: can you duplicate your design in another directory, remove as much of the other logic as possible so that only the PCIe IP remains, then compile and see if you still get the same timing results?

OK, so I saved my design as an archive and created a new project from that. I stripped out everything except the PCIe code (w/ DMA) and a PLL, and added a module to sink/source all the Avalon interfaces from the core. This design makes timing with no trouble.

I built another project from that same archive. This time I left all of the IP and RTL in the project, and wiped the main .sdc file clean. I then put just the following into the .sdc file:

#**************************************************************

# Create Clock

#**************************************************************

create_clock -name {~ALTERA_CLKUSR~} -period 8.000 -waveform { 0.000 4.000 } [get_pins -compatibility_mode {~ALTERA_CLKUSR~~ibuf|o}]

create_clock -name {top_inst|emif|top_emif_0_ref_clock} -period 5.000 -waveform { 0.000 2.500 } [get_ports {emif_ref_clk}]

create_clock -name {pcie_refclk} -period 10.000 -waveform { 0.000 5.000 } [get_ports {pcie_refclk}]

create_clock -name {xcvr_refclk} -period 3.103 -waveform { 0.000 1.551 } [get_ports {xcvr_refclk}]

create_clock -name {oth_refclk} -period 8.000 -waveform { 0.000 4.000 } [get_ports {oth_refclk}]

create_clock -name {clk100} -period 10.000 -waveform { 0.000 5.000 } [get_ports {clk100}]

create_clock -name {clk100_1pps} -period 10.000 -waveform { 0.000 5.000 } [get_ports {clk100_1pps}]

create_clock -name {virt_clk_166} -period 6.000 -waveform { 0.000 3.000 }

create_clock -name {virt_clk_16} -period 59.608 -waveform { 0.000 29.803 }

#**************************************************************

# Derive PLL Clocks

#**************************************************************

derive_pll_clocks

#**************************************************************

# Derive Clock Uncertainty

#**************************************************************

derive_clock_uncertainty

I did this to rule out anything I had put in the .sdc that might be causing a problem. I got the same result as before (~ -700ps slack).

That means one of the SDC files is causing the negative slack. Sorry, my suggestion is to remove the SDC files one by one and see which constraint causes the negative slack.

OK, so I started doing this, and got about half way through the list (there are 27 .sdc files total) without seeing any improvement. So I took the extreme approach and removed all of the .sdc files except:

- the project .sdc file

- the three (temporary? not sure how this works) .sdc files which appear for a while during build under the qdb folder

When I do this, the timing errors go away, but there are no timing checks at all for the clock domain which was causing the problem; in fact, approximately half of the clocks no longer appear in the clock report (with the .sdc files in, there are ~135 clocks; without the .sdc files, there are only about 62 clocks).

Hi Sir,

Sorry, I just learned from a colleague who is familiar with timing that removing the SDC files only masks the issue; it does not remove it.

He mentioned that, for IP, a removal/recovery violation on a reset signal that comes from outside can usually be safely ignored, because the IP already synchronizes the reset.

I understand that removing .sdc files will remove constraints; but I went ahead with this approach in order to eliminate any possible conflicting constraints, based on your response of 3/4/20.

Regarding ignoring the timing errors, I cannot do that, because the errors are not confined to the reset signal; there are also datapath errors, such as:

-0.571

from node: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_avmm_256_dma.avmm_256_dma.altpcieav_256_app|read_data_mover|avmmwr_burst_cntr[0]

to node: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_avmm_256_dma.avmm_256_dma.altpcieav_256_app|read_data_mover|rd_status_fifo|fifo_reg[6][8]

launch clock: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|wys~CORE_CLK_OUT

latch clock: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|wys~CORE_CLK_OUT

clock relationship: 4.000

-0.199 4.350 1

And I don't understand what you are saying here:

removal/recovery violation for reset signal that come from external can be safely ignore. This is because the IP already synchronizing the reset

All of the timing violations I am seeing are within the IP core, and they are clock-clock violations which only occur (by definition) with synchronous signals.

Hi Sir,

Referring back to your original description, the failure is on reset recovery, as below.

-1.119

from node: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_rst_sync.syncrstn_avmm_sriov.app_rstn_altpcie_reset_delay_sync_altpcie_a10_hip_hwtcl|sync_rst[0]

to node: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_avmm_256_dma.avmm_256_dma.altpcieav_256_app|write_data_mover_2|dma_wr_wdalign|desc_lines_release_reg[8]

launch clock: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|wys~CORE_CLK_OUT

latch clock: qsys_design.synth_qys_inst.hchip_blob_inst|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|wys~CORE_CLK_OUT

clock relationship: 4.000

-0.178 4.976 1

But from what you are reporting now, the violation is on another path and not the recovery path?

Hi @Seadog ,

Referring to my earlier post, can you clarify which violation you are actually facing in the design? I need accurate information so that I can discuss it with colleagues who work on timing.

OK, I have new information.

The module which carries the PCIe core is instantiated with a Verilog generate structure, like this:

parameter enable_blob = 1; // 1= yes, 0 = no

generate

begin: qsys_design

if (enable_blob == 1'b1)

begin: synth_qys_inst

blob blob_inst (

. . .

);

end

end

endgenerate

The PCIe core is instantiated within a wrapper which is instantiated in 'blob'. This version of the design will not make timing.

So I built a simplified version of the design, which has the PCIe wrapper instantiated in the top level module; the only other things in that module are a PLL to generate system clocking, a reset control module, and a dummy 'terminator' to prevent the compiler from optimizing out the PCIe core. The simple design makes timing, and I am able to partition the critical portions of the DMA controller (which are:

. . .|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_avmm_256_dma.avmm_256_dma.altpcieav_256_app

. . .|pcie_wrapper_inst|u0|pcie_a10_hip_0|pcie_a10_hip_0|g_dmacontrol.dmacontrol.dma_control_0

)

and set the preservation level for those partitions to 'Final'. I was then able to hack in various other parts of the design (DDR4 controller, 10GE Ethernet MAC/PHY, bus bridges and various logic functions) and connect them in a more or less meaningful way, while maintaining the simplified hierarchy, which, and this seems to be important, does not include the Verilog generate structure. This design makes timing, with ~ 280ps of positive slack.

So I started rebuilding my original design. I started with just the PLL, the reset control module, and the PCIe wrapper/core, but this time instantiated with the generate structure and the extra layer of hierarchy above the PCIe core. This does not make timing. But if I remove the generate structure, and otherwise leave the hierarchy unchanged, it does make timing.

So to sum it up:

top>generate:blob>pcie_wrapper>pcie_core - does not make timing

top>blob>pcie_wrapper>pcie_core - does make timing

top>pcie_wrapper>pcie_core - does make timing

The difference in performance between good and bad results is about 1.2ns of slack for the 250MHz clock domain, and the errors are confined to the DMA portion of the PCIe core.

I think I can replace the generate structure (which is there to allow simulation of the top-level design without the PCIe or DDR cores, which slow down sim and are not always needed) with simple conditional compile commands. So I think I have a solution, but I am still curious why the generate structure seems to be causing so much trouble.
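A minimal sketch of the conditional-compile alternative mentioned above, assuming a hypothetical macro name `SIM_SKIP_BLOB` and a stub `blob` module with made-up ports (the real port list is elided in this thread):

```verilog
// Stub standing in for the real 'blob' module; its ports are hypothetical.
module blob (
    input wire clk,
    input wire rst_n
);
endmodule

module top (
    input wire clk,
    input wire rst_n
);
// SIM_SKIP_BLOB is a hypothetical macro: define it only for simulations
// that should leave out the PCIe/DDR cores. Synthesis leaves it undefined,
// so blob_inst sits directly under 'top' with no generate scope above it.
`ifndef SIM_SKIP_BLOB
    blob blob_inst (
        .clk   (clk),
        .rst_n (rst_n)
    );
`endif
endmodule
```

Written this way, the synthesized hierarchy is top>blob>pcie_wrapper>pcie_core, the variant reported above as making timing, and the default build needs no macro definition at all.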

Hi @Seadog

Thanks for your update; glad to know that you were able to find a solution. I have no answer right now for why the generate structure causes the trouble, but I will feed this observation back to the validation team and see if we can improve this in the future.

Thanks

Thank you very much.

You're welcome, and thanks for sharing as well.

Hope everyone stays safe and healthy.💪
