Hi all,

I was looking to parallelize my code for speedup.

As the Xeon Phi is a NUMA device, I used first-touch placement of the data.

While the Xeon Phi no doubt performs better than the Xeon, the problem is that the total time (first-touch time + loop time) is greater.

How do I resolve this issue?

This code, when integrated into the main code (which I cannot post here), will call the state function many times from various places. So, even if I don't do the first touch as I have in the attached code, is it possible that this overhead is just a one-time cost?

In the attached code, state_test_offload is for the MIC and state_test is for the Xeon host.

In the actual program, state is called from different places, with TEMPK and SALTK holding different values at each call.

As the Xeon Phi is a NUMA device, I used first-touch placement of the data.

Actually, KNC does not have much NUMA sensitivity since it is a single chip, so the time for any core to reach the memory controllers is similar. (Unlike a multi-socket Xeon where the time to reach a memory controller on another chip is large because the request has to cross the external QPI coherence fabric).

However, for portability, using first touch still makes sense.

The overhead is almost certainly the cost of the kernel actually instantiating the page (mapping the virtual address to real, physical memory, which means allocating a physical page and zeroing it) when it is first written to. You will pay that cost whichever thread first writes to the memory, but you'll only pay it once, since after the page is written it will have memory allocated to it.

So:

- Using a first touch policy is sensible for performance portability

- Overall it shouldn't cost any more than touching all the data in one place (you have to pay the cost of instantiating the page somewhere!)

You should be able to time the different loops and observe that the first loop, which writes to all the pages, is slow, but subsequent loops are faster. (You can also observe this by looking at the number of page faults, since the copy-on-write which instantiates the page is entered as a result of a page fault.)

You can, of course, reduce the number of page-faults by using big pages (I haven't measured whether that reduces the overall time significantly or not; if the time is mostly spent in zeroing the memory the number of page faults won't make much difference...)
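A minimal sketch of that measurement, assuming an OpenMP-capable Fortran compiler and an operating system that maps pages lazily (as Linux typically does for large allocations). The program name, array size, and variable names are illustrative and are not taken from the attached code. A small parallel region runs first so that starting the thread pool is not counted in the first pass.

```fortran
program first_touch_timing
  use omp_lib
  implicit none
  integer, parameter :: dp = kind(1.0d0)
  integer, parameter :: n = 50000000        ! hypothetical size, about 400 MB
  real(dp), allocatable :: a(:)
  real(dp) :: t0, t1, t2
  integer :: i, nthreads

  ! Start the OpenMP thread pool before any timing begins.
  nthreads = 1
  !$omp parallel
  !$omp single
  nthreads = omp_get_num_threads()
  !$omp end single
  !$omp end parallel

  allocate(a(n))                            ! typically no physical pages yet

  t0 = omp_get_wtime()
  !$omp parallel do schedule(static)
  do i = 1, n
     a(i) = 0.0_dp                          ! first write: pages get instantiated
  end do
  !$omp end parallel do
  t1 = omp_get_wtime()

  !$omp parallel do schedule(static)
  do i = 1, n
     a(i) = 1.0_dp                          ! same pages, already mapped
  end do
  !$omp end parallel do
  t2 = omp_get_wtime()

  print *, "threads used:                       ", nthreads
  print *, "first pass (page instantiation), s: ", t1 - t0
  print *, "second pass (pages mapped), s:      ", t2 - t1
  print *, "checksum:", sum(a)              ! uses the data so the stores are kept
  deallocate(a)
end program first_touch_timing
```

If the first pass is much slower than the second, the difference is a rough estimate of the one-time page instantiation cost for that array.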

HTH

Hi, I repeated the experiment many times.

The numbers are still in favour of the Xeon:

3.9 ms for Xeon

4.0 ms for Xeon Phi.

Any idea how to improve on it?

Sorry, it's actually 2.5 ms for Xeon.

By "repeated many times" I mean that I added a loop around the call to the state function.

I don't think this has anything to do with first touch.

Have you looked at the time to transfer data? https://software.intel.com/en-us/node/524675 should help.

p.s. Forcibly setting the number of threads to 240 is a bad idea (better than forcing 244, but still bad). By default the runtime will do the right thing, and, even better, it will also do the right thing in the future when you run on a machine (like Knights Landing) which has a different optimal number of threads.

In this context, it's also worth experimenting with KMP_PLACE_THREADS ( https://software.intel.com/en-us/node/512745 ) to see whether using only one or two threads per core improves your performance (KMP_PLACE_THREADS=1t or KMP_PLACE_THREADS=2t, plus whatever offload prefix you need to have the environment variable propagated, of course).

Right now I am not concerned with transfer time. I think I can run a few routines asynchronously.

By default, how do I know how many threads to set? It would just use what's in OMP_NUM_THREADS or PHI_OMP_NUM_THREADS, right?

I will try the last approach.

By default, how do I know how many threads to set? It would just use what's in OMP_NUM_THREADS or PHI_OMP_NUM_THREADS, right?

You don't need to set anything. Just let the combination of the offload code and the OpenMP runtime do the right thing. As soon as you force values into your code:

- It becomes non-portable. (Either to someone else's machine where they may have a different number of cores in their KNC, or to the machine you buy in a few years, after you've forgotten that there's this magic constant in the code).

- It becomes much harder to do a scaling study (you'd have to recompile the code to try each different number of cores).

You can change the number of threads that will be used by setting OMP_NUM_THREADS for the host side and the same environment variable with the appropriate prefix for the coprocessor (I don't know if that is "PHI_" or "MIC_", or what; you can choose it somehow :-)). Similarly, use KMP_PLACE_THREADS (with the appropriate prefix).

By all means call omp_get_max_threads() in the serial part of the offload code to see what you're going to use, and print it, but actively forcing 240 as a constant is not recommended.
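As a rough sketch of that suggestion: the program below only queries and prints the thread count and never calls omp_set_num_threads. The program and variable names are hypothetical. The offload directive is written in the same form used elsewhere in this thread and assumes the Intel compiler with offload support; other compilers should treat the `!dir$` lines as comments, in which case the query simply runs on the host.

```fortran
program report_threads
  use omp_lib
  implicit none
  integer :: nthreads

  ! No omp_set_num_threads call: the count comes from the runtime default
  ! or from OMP_NUM_THREADS (with the offload prefix for the coprocessor).
  !dir$ offload begin target(mic:0)
  nthreads = omp_get_max_threads()          ! queried in serial offload code
  print *, "threads the next parallel region will use:", nthreads
  !dir$ end offload
end program report_threads
```

Changing the relevant environment variable between runs should then change the printed value without recompiling, which is what makes a scaling study practical.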

I ran the code with the thread-count setting removed, and it's performing badly.

It's now 60 ms.

Should I set PHI_OMP_NUM_THREADS or unset it?

Assuming you have set MIC_ENV_PREFIX=PHI, you should try PHI_KMP_PLACE_THREADS settings as James suggested. This implies a consistent setting of num_threads. In my experience, where MIC native OpenMP reached peak performance with KMP_PLACE_THREADS=59c,2t and OMP_PROC_BIND=close, offload didn't benefit beyond PHI_KMP_PLACE_THREADS=59c,1t. It's particularly important, as James said, not to force worker threads onto the core which runs MPSS and data transfers. Running more than 1 thread per MIC core typically doesn't benefit, even in native mode, without thread placement so as to share the cache effectively. Page initialization overhead, as James mentioned, also contributes to offload not being able to use as many threads effectively.

If you set OMP_NUM_THREADS for host, MIC used to inherit that setting when you didn't set an appropriate value, so you have no chance if you don't set appropriate values for MIC.

The micsmc gui is an important tool to visualize whether you have distributed threads efficiently on MIC.

Hi,

This is what I did:

1) unset PHI_OMP_NUM_THREADS

2) tried PHI_KMP_PLACE_THREADS=30c,2t, which seems to work decently: 17 ms

3) 59c,2t is bad; even with OMP_PROC_BIND, performance isn't as good as Xeon: 23 ms

Any idea if I could find hotspots/inefficiency using VTune?

Which metric should I be looking at?

Any idea if I could find hotspots/inefficiency using VTune?

You can. Even the VTune product landing page is showing that and my fourth Google hit for the query intel vtune amplifier openmp tuning is https://software.intel.com/en-us/articles/how-to-analyze-openmp-applications-using-intel-vtune-amplifie-xe-2015 which seems to have a step by step example for you.

Sorry for being so imprecise in my query.

I apologise.

I apologise

No problem, everyone's Google-fu runs out sometimes :-)

I think this may shed some light on where the time is spent:

```fortran
!dir$ offload begin target(mic:0)
begin_time = omp_get_wtime()
call omp_set_num_threads(240)
!$omp parallel
dum_time = omp_get_wtime() ! dummy do something for first parallel region
!$omp end parallel
first_time = omp_get_wtime()
...
!$omp end parallel
end_time = omp_get_wtime()
!dir$ end offload
print *,"OpenMP thread pool init time is",first_time - begin_time
print *,"loop time is", end_time - start_time
print *,"total compute time is",end_time - first_time
print *,"total time is", end_time - begin_time
```

You will find that starting the initial thread pool on the KNC has significant overhead. Therefore, when programming in the offload model on KNC, it is generally beneficial at program start (host side) to call a subroutine (or to place inline, as the first few statements) code that ostensibly does no work other than initializing the KNC OpenMP thread team.

RE: First Touch on KNC

As James stated, on KNC any code you insert for "first touch" NUMA locality is not beneficial, but it doesn't hurt, and when multi-socket KNL arrives, it will be beneficial. What does matter with respect to latency is that, after process start, while the process may have many GB of virtual memory, only the memory that has been "first touched" (at page granularity) since process start is mapped to physical RAM (and/or page file). Therefore the first use of an allocation from the heap may encounter none/one/several/many page faults as each page in the allocation is "first touched". This overhead is quite significant.

On KNC you still have "first touch" latency, as James stated, the first time a page in the process VM is touched after allocation from the heap. This is a one-time latency overhead. In writing applications you might want to take this into consideration when timing.

In a real-world performance-critical application you typically are not interested in:

The time from double-clicking the program icon to program completion.

What is typically of concern is the time that an internal process loop takes _after_ everything has been initialized. As an example you have a simulation that will iterate millions of times. Your interest is in total time, not time for first iteration.
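One way to separate the two numbers is sketched below, assuming an OpenMP-capable Fortran compiler. The `kernel` subroutine is a hypothetical stand-in for a routine such as state, and the sizes and names are illustrative only. The first call is timed on its own, because it absorbs thread pool start-up and the first touch of the output array; the remaining calls are averaged.

```fortran
program steady_state_timing
  use omp_lib
  implicit none
  integer, parameter :: dp = kind(1.0d0)
  integer, parameter :: n = 1000000, ncalls = 20   ! hypothetical sizes
  real(dp), allocatable :: x(:), y(:)
  real(dp) :: t0, dt, first_call, later_calls
  integer :: k

  allocate(x(n), y(n))
  x = 1.0_dp
  first_call = 0.0_dp
  later_calls = 0.0_dp

  do k = 1, ncalls
     t0 = omp_get_wtime()
     call kernel(x, y, real(k, dp))
     dt = omp_get_wtime() - t0
     if (k == 1) then
        first_call = dt                     ! includes one-time start-up costs
     else
        later_calls = later_calls + dt      ! steady-state cost only
     end if
  end do

  print *, "first call, s:           ", first_call
  print *, "average of later calls, s:", later_calls / real(ncalls - 1, dp)
  print *, "checksum:", sum(y)              ! uses the result so it is kept

contains

  ! Hypothetical stand-in for the real routine being timed.
  subroutine kernel(xin, yout, factor)
    real(dp), intent(in)  :: xin(:)
    real(dp), intent(out) :: yout(:)
    real(dp), intent(in)  :: factor
    integer :: i
    !$omp parallel do schedule(static)
    do i = 1, size(xin)
       yout(i) = factor * xin(i)
    end do
    !$omp end parallel do
  end subroutine kernel

end program steady_state_timing
```

When comparing host and coprocessor, the average of the later calls is usually the more meaningful figure for a routine that the main code calls many times.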

If you go to http://www.lotsofcores.com/ and scroll down a little way you will see a graphical video comparison of a simulation run using two different programming strategies. If you pause the simulation and drag the horizontal scroll bar to time 0:00:07 you will find:

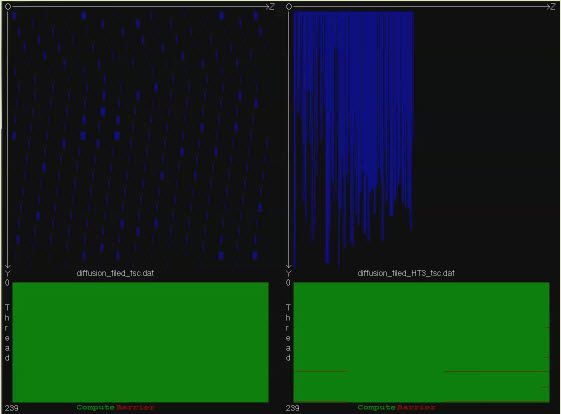

On the left pane is a typical tiled implementation using rectangular tiles. The different sizes of the tiles painted blue indicate the different completion states of each tile as run by different threads (240). The relatively large amount of skew in tile size is attributable to OpenMP thread pool initialization, as well as thread skew on getting threads started in parallel region. Right right pane shows an alternate algorithm using columnar tiles. At the 7 second time step, both sides, some threads have yet to produce output.

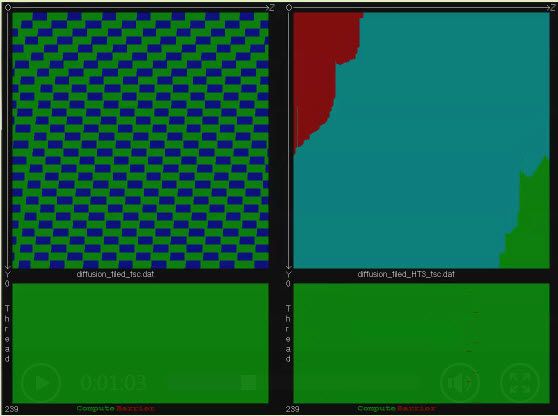

At time 0:01:03, after "first touch" and several iterations:

The left pane shows less skew in thread completion of individual tiles (relatively same size). The right pane also illustrates the thread skew, but in this case, the different algorithm thread skew time becomes immaterial. You will notice in the right pane, left side of red zone, one of the threads is lagging behind (black stripe). This may possibly be due to preemption of the thread to run other processes inside the KNC.

The first screenshot clearly illustrates the initialization overhead that you must be aware of when timing your code.

Jim Dempsey
