Hi everyone,

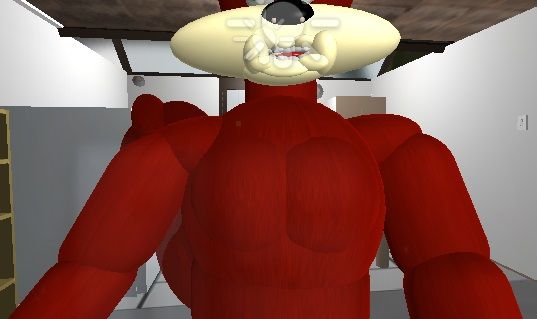

In my RealSense-powered full-body avatar, which has full shoulder-to-fingertip arm movement, one persistent problem was that the shoulder (the primary driver of the arm's movement) tended to over-extend when the player crossed one arm over the other in front of the avatar's chest.

When this happened, the shoulder joint would reverse direction, and the arms could not be used again until the joints were reset.

Essentially, each upper arm consisted of a shoulder rotation joint at the center point of the shoulder flesh object, with the upper arm attached to this shoulder piece and no rotation mechanism of its own. So when the shoulder joint rotated, it carried the upper arm with it.

We had once had rotation joints in the upper arm too, but had removed them long ago in a quest for simplification. We now wondered whether we had made the arm *too* simple, and whether restoring independent rotation in the upper arm might solve our shoulder over-extension problem. The logic was that if the upper arm could rotate on its own, the shoulder would not have to travel as far for the hand to reach its destination, and so the shoulder joint would be far less likely to overreach.

We spent some time studying real-life (RL) human arm anatomy, and this seemed to confirm our thinking. RL arm movement involves a shoulder blade that swings like a hinge, and an upper arm bone that fits into a socket in the shoulder blade and rotates independently in that socket. Holding one shoulder down firmly with the other hand whilst moving the upper arm confirmed that the upper arm is not totally reliant on the shoulder for its motion. Rather, the shoulder and upper arm work together in unison, which just makes it *look* as though the shoulder is doing all the work.

Our approach to replicating this bone setup in our avatar was to reassign the central rotation joints in the shoulder pieces from being shoulder joints to being upper arm joints, and to create a new pair of shoulder joints at the inner end of the shoulders, approximately where the hinged shoulder blades would be in the real body.
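The reasoning behind the new joint setup is simple arithmetic: if the total rotation needed to reach a target is shared between two joints, the shoulder only has to carry part of it. The sketch below illustrates that idea under an assumed fixed split; `shoulder_share` is a hypothetical weighting for illustration, not a RealSense or Unity setting, and in the actual avatar the split emerges from each joint's own TrackingAction rather than from a formula like this.

```python
def split_rotation(total_deg: float, shoulder_share: float) -> tuple[float, float]:
    """Split a desired arm rotation between the shoulder and upper-arm joints.

    shoulder_share is a hypothetical 0..1 weighting used only for illustration.
    """
    if not 0.0 <= shoulder_share <= 1.0:
        raise ValueError("shoulder_share must be between 0 and 1")
    shoulder = total_deg * shoulder_share
    upper_arm = total_deg - shoulder
    return shoulder, upper_arm


# Old setup: the shoulder joint carries the whole 120-degree reach.
print(split_rotation(120.0, 1.0))   # (120.0, 0.0)
# New setup: the shoulder carries only a quarter of it.
print(split_rotation(120.0, 0.25))  # (30.0, 90.0)
```

The smaller the shoulder's share, the further the shoulder joint stays from the angle at which it previously flipped direction.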

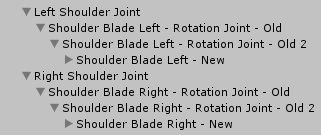

Our object hierarchy for the left and right arms now looked like this: shoulder joint, upper arm joints (the former shoulder joints), and then the shoulder flesh piece.

We did not rename the former shoulder joints to 'upper arm joint' in our hierarchy, as there are scripts in our project that depend on finding objects named 'shoulder blade', and we did not want to break those links.

In our arm we use two joints for the (now) upper arm, shown as Old 1 and Old 2 in the hierarchy above. This gives the arm maximum left-right and up-down movement without sacrificing control stability. Old 1 is constrained to the X axis only, and Old 2, which is attached to Old 1 as a child object, is constrained to the X and Y axes. You can think of the first, X-only joint as setting the primary direction of the arm, and the second, X-Y joint as adding finer control on top of the general movement provided by the first.

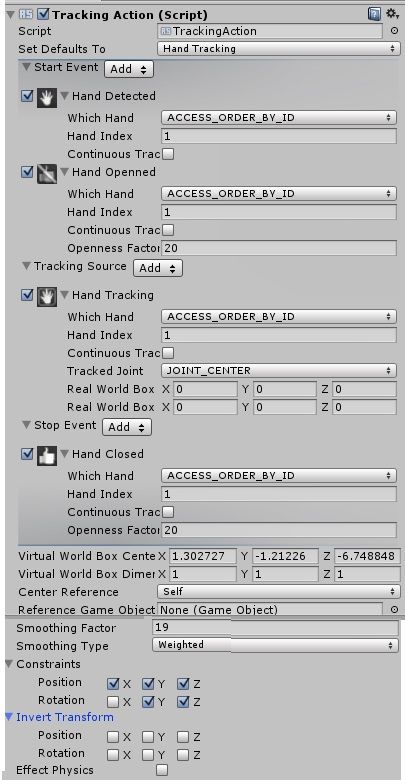

The image above shows the TrackingAction script settings for each of these joints.
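To make the two-joint idea concrete, here is a minimal sketch of how per-axis constraints filter the same tracked rotation at each joint in the chain. `Joint`, `gain` and `chain_rotation` are hypothetical names (not Unity or RealSense types), the 0.25 gain on Old 2 is an assumed value standing in for "fine control", and adding parent and child angles together is a small-angle simplification of real rotation composition.

```python
from dataclasses import dataclass


@dataclass
class Joint:
    """Hypothetical stand-in for a rotation joint with axis constraints."""
    name: str
    free_axes: frozenset  # subset of {"x", "y", "z"} the joint may rotate on
    gain: float = 1.0     # assumed scale; < 1.0 for a fine-control joint

    def local_rotation(self, tracked: dict) -> dict:
        # Locked axes contribute nothing; free axes get the scaled tracked value.
        return {
            axis: (tracked[axis] * self.gain if axis in self.free_axes else 0.0)
            for axis in ("x", "y", "z")
        }


def chain_rotation(joints: list, tracked: dict) -> dict:
    # Small-angle simplification: a child's rotation adds onto its parent's.
    total = {"x": 0.0, "y": 0.0, "z": 0.0}
    for joint in joints:
        local = joint.local_rotation(tracked)
        for axis in total:
            total[axis] += local[axis]
    return total


old1 = Joint("Old 1", frozenset({"x"}))
old2 = Joint("Old 2", frozenset({"x", "y"}), gain=0.25)
print(chain_rotation([old1, old2], {"x": 40.0, "y": 20.0, "z": 10.0}))
# {'x': 50.0, 'y': 5.0, 'z': 0.0}
```

Old 1 supplies the bulk of the X movement, while Old 2 adds a smaller X-Y correction, and the locked Z axis never moves at all.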

We now needed to set up a TrackingAction for the new shoulder joints that we had just created at the ends of the shoulder flesh so that the shoulder could move the arm a little whilst the upper arm joints provided the majority of the motion. Here are the TrackingAction settings that we used.

The above settings are essentially identical to those used in the 'Old 1' X-constrained upper arm joint, except that we set the 'Smoothing Factor' (which determines how fast the joint moves) to '19', which in most cases is the highest, and therefore slowest, value you can set before movement freezes entirely. Slowing the shoulders down like this limits how far they can move from their start position, which helps to stop them dislocating the shoulder rotation joint.
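Why does a high smoothing factor keep the shoulder close to its start position? A common way to implement smoothing is to move each frame by only a fraction of the remaining distance to the target. The sketch below assumes that fraction is `1 / (factor + 1)`; this is a hypothetical model for illustration, and the RealSense Unity toolkit's actual smoothing formula may differ.

```python
def smooth_step(current: float, target: float, factor: int) -> float:
    # Assumed model: each frame covers 1 / (factor + 1) of the remaining gap.
    alpha = 1.0 / (factor + 1)
    return current + alpha * (target - current)


def frames_to_reach(fraction: float, factor: int) -> int:
    """Count frames needed to cover `fraction` of a move from 0.0 to 1.0."""
    value, frames = 0.0, 0
    while value < fraction:
        value = smooth_step(value, 1.0, factor)
        frames += 1
    return frames


print(frames_to_reach(0.9, 0))   # 1: no smoothing, the joint jumps straight there
print(frames_to_reach(0.9, 19))  # 45: heavy smoothing, the joint creeps there
```

Under this model, a quick hand movement that lasts only a few frames moves a heavily smoothed shoulder a small fraction of the way, leaving the upper arm joints to do most of the work.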

When we ran the project for the first time after the changes, the avatar could still reach the same advanced arm positions as before, but now without the joints breaking, and those movements were easier to control!

If you are interested in creating your own full arm for your project, here's a link to a guide I wrote last year about making an older version of our avatar arm.

http://sambiglyon.org/sites/default/files/Arm-Construction-Guide.pdf

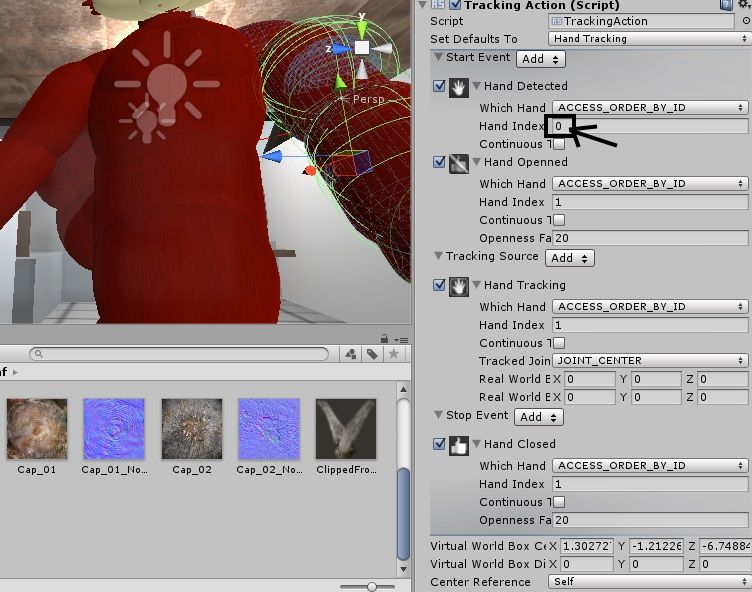

I found a new tip today for improving arm control. It allows objects (such as the avatar arms above) to be controlled independently and simultaneously, without the tracking of one RL hand stalling while the camera prioritizes detecting the other hand.

Ever since I started using the RealSense camera with Unity last year, the camera had only recognized the hand set to the '0' index as able to activate the Hand Detected rule. If the '1' hand was pushed towards the screen on its own, with the '0' hand kept out of the camera's view, it would activate tracking on the object associated with the '0' index instead.

In other words, if my avatar's right arm was set to '0' and the left arm to '1', pushing my RL left hand towards the camera would only ever make the avatar's right arm move instead of the left one. The left arm could only be activated if both RL hands were held up to the camera, with the right '0' hand held up first to initiate tracking. Only then could the '1' hand be used to wake up movement in the left arm.

So my logic was: if the camera will only ever pay attention to the '0' hand when detecting a hand, I may as well set the 'Hand Detected' rule on the '1' hand to look for the '0' hand, whilst having all my other rules (Hand Closed, Hand Opened, etc.) set to '1'. If my logic was right, the object controlled by the left hand should never stall its tracking as long as the '0' hand was in the camera's view.

I tried it out, setting the TrackingAction of the '1' hand so that Hand Detected used '0' and everything else used '1'. That way, once the '0' hand woke up the left arm, the '1' rules could all take over and function as normal.

When I ran the project, both arms could now be moved simultaneously in different directions without either of their motions stalling. Success!
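The trick boils down to which hand index each rule watches. The sketch below models only that rule logic; the dictionaries and function names are hypothetical illustrations, not the RealSense Unity toolkit's API, and it assumes (as described above) that a single hand in view is always labelled index 0.

```python
# Hypothetical rule-to-hand-index tables for the left arm's TrackingAction.
ORIGINAL_LEFT_ARM = {"Hand Detected": 1, "Hand Closed": 1, "Hand Opened": 1}
NEW_LEFT_ARM = {"Hand Detected": 0, "Hand Closed": 1, "Hand Opened": 1}


def tracking_starts(visible: set[int], rules: dict[str, int]) -> bool:
    """Tracking wakes up only if the index named by 'Hand Detected' is visible."""
    return rules["Hand Detected"] in visible


def driving_index(rules: dict[str, int]) -> int:
    """Index whose open/close state controls the object once it is awake."""
    return rules["Hand Closed"]


# One hand in view: the camera labels it 0, so only the new table wakes up.
print(tracking_starts({0}, ORIGINAL_LEFT_ARM))  # False
print(tracking_starts({0}, NEW_LEFT_ARM))       # True
# Both tables still hand control to index 1 once tracking is running.
print(driving_index(NEW_LEFT_ARM))              # 1
```

Separating the wake-up index from the control index is the whole point: the rule that starts tracking watches the hand the camera reliably sees, while the rules that move the arm still follow the intended hand.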

Edit: further testing indicated that psychologically (at least in my own case), the hand chosen to be the primary Hand '0' tends to be the hand that your brain is naturally wired for. So if you are right-handed then '0' might be your right hand, and if you are a southpaw like me then the left hand is used as '0'. Instinctively, your natural "main hand" seems to be drawn to the front of the camera ahead of the other hand, because that is the hand your brain is most comfortable using.

It's like being a left-hander and trying to write with your right hand - going against your wiring and using that non-natural hand as the primary hand makes you very self-conscious of the awkwardness and so breaks the immersion in the experience. When your natural hand is the primary hand, you barely even think about it.

Hey Marty, have you tried mapping the controls to other parts of the avatar's body? For example, controlling the tail using a combination of both of the user's hands? I've read somewhere that the brain can easily adjust to these kinds of mappings in a matter of minutes.

Hi! Yes, other parts of the body are mapped to the camera. For example, the tail moves up towards the back and down towards the floor when the avatar leans forward or back, driven by a bending point at the waist that tracks the nose face-point. Originally, it could sway left and right as well, but I found that this wasn't practical, as it kept slapping against objects in the game environment, and getting through a door was a nightmare. So I confined it to up-down. :)

If a solution for full motion control without destroying the contents of his house presents itself, I'll certainly take it!

As for other parts ... the ears move up and down naturally as the RL head is turned, again driven by the nose-point. I did animate the ear-tips that way too, but that much movement was visually distracting so I dropped it. In animation, less is sometimes more!

The head turns left and right when the player's head turns left and right.

The yellow face fur follows the chin point and so bobs up and down a little when the mouth moves, just like human cheeks move when talking.

The eyebrows have lifters that respond to the center points of the eyebrow tracking points. The eyebrows can also change shape (e.g. from neutral to angry). Real eyebrows do not move enough to drive this flexing from the eyebrow point, so the flex tracks the left side of the mouth instead: when the player smiles the eyebrows flex upwards, and when the mouth makes a downward shape the eyebrows flex downwards into an angry downward-diagonal shape.

The eye pupils have image array scripts that also respond to the left-side mouth point. So when the mouth turns down, the pupil texture is replaced with a small pupil to represent shock, and when the mouth returns to a neutral pose the normal pupil is reloaded.

The eyelids also change shape via a texture change in response to emotional expressions made by the mouth (e.g. from a neutral horizontal eyelid texture to a downward diagonal one). I would rather have rotated the eyelids in real time instead of using instant texture changes, but I found that getting each eyelid to rotate into exactly the right position, and getting both eyes to display the same eyelid shape at the same time, was an unworkable nightmare that produced some terrifying faces.

Since eyelid expressions in cartoons often change instantly, I decided that using an image array to change the eyelid shape was an acceptable solution.
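One reason texture swapping is easier to keep consistent than real-time rotation is that a single decision can drive both eyes. The sketch below classifies the left-side mouth point into a few states and looks up a texture for every feature from that one state. The offset convention (negative means the mouth corner moved down), the 0.15 threshold and the texture names are all hypothetical placeholders, not values from the project or the SDK.

```python
def classify_mouth(offset: float, threshold: float = 0.15) -> str:
    """Map the left mouth corner's vertical offset to an expression state.

    Assumed convention: negative offset = corner moved down.
    """
    if offset <= -threshold:
        return "down"
    if offset >= threshold:
        return "up"
    return "neutral"


# Hypothetical texture names for each expression state.
EYELID = {"down": "eyelid_diagonal", "neutral": "eyelid_flat", "up": "eyelid_flat"}
PUPIL = {"down": "pupil_small", "neutral": "pupil_normal", "up": "pupil_normal"}


def face_textures(offset: float) -> dict[str, str]:
    state = classify_mouth(offset)  # one decision shared by both eyes
    return {
        "left_eyelid": EYELID[state],
        "right_eyelid": EYELID[state],
        "left_pupil": PUPIL[state],
        "right_pupil": PUPIL[state],
    }


print(face_textures(-0.3)["left_pupil"])  # pupil_small
print(face_textures(0.0)["left_pupil"])   # pupil_normal
```

Because both eyes read from the same `state`, they can never disagree, which is exactly the problem that per-eye rotation made hard to avoid.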

The mouth moves up and down using a position-based TrackingAction that follows the chin point, with Virtual World Box values making sure that the mouth cannot travel so far up or down that it detaches from the face or merges into the cheek fur above it.
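Conceptually, a position limit like the Virtual World Box amounts to a clamp applied after the tracked value is mapped into the scene. The sketch below assumes a simple linear mapping from chin height to mouth offset; `scale`, `box_min` and `box_max` are hypothetical parameters, not the toolkit's own field names or values.

```python
def mouth_position(chin_y: float, scale: float, box_min: float, box_max: float) -> float:
    """Map a tracked chin height to a mouth offset, clamped to a Y range.

    Assumed: a linear mapping, then a clamp standing in for the box limits.
    """
    target = chin_y * scale
    return max(box_min, min(box_max, target))


print(mouth_position(0.5, 0.1, -0.02, 0.03))   # 0.03: clamped, stays on the face
print(mouth_position(-1.0, 0.1, -0.02, 0.03))  # -0.02: clamped, stays below the fur
```

A clamp like this is one line of logic, which is why positional limits are comparatively easy to set up.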

The lip pieces rotate up and down to change mouth expression by following the left-side mouth point. I'm considering replacing this with something else though, as the pieces tend to rotate too far and make the lips look broken. Setting limits for rotation isn't as easy as setting them for positional movement.
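One reason rotation limits are trickier than positional ones is angle wrap-around: Unity's `Transform.eulerAngles` reports angles in the 0 to 360 range, so a lip tilted 10 degrees down reads as 350, and a naive clamp to -30..30 would snap it to 30. The sketch below normalizes the angle first; the -30..30 limits are hypothetical examples, not values from the project.

```python
def normalize_angle(deg: float) -> float:
    """Map any angle into [-180, 180), so 350 degrees is treated as -10."""
    return (deg + 180.0) % 360.0 - 180.0


def clamp_angle(deg: float, lo: float, hi: float) -> float:
    """Clamp a rotation after normalizing, avoiding the 0/360 wrap problem."""
    return max(lo, min(hi, normalize_angle(deg)))


print(max(-30.0, min(30.0, 350.0)))      # 30.0: naive clamp flips the lip
print(clamp_angle(350.0, -30.0, 30.0))   # -10.0: correct
print(clamp_angle(300.0, -30.0, 30.0))   # -30.0: limited as intended
```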

The legs can crouch down and rise up again: closing the hands freezes arm control, and moving the fists up and down then makes the avatar crouch and rise. I would rather this was done by lowering and raising the head, but I haven't got that approach to work so far. Originally I set the legs to recognize the knees (as RealSense treats the knees and toes as palms and fingers) so that the avatar's legs could move when the player's RL legs moved, but the short tracking distance of the F200 camera (and the need for male players to take their jeans off so the camera could see their knees!) meant that this idea was shelved.

The fingers of the hands open and close by following the Joint 1 (JT 1) point of each hand, since that is the joint most consistently visible to the camera, and the thumbs are fully controllable because their X, Y and Z constraints are all unlocked. Here's an image I made yesterday of the avatar doing a thumbs up with real-time movement.

http://sambiglyon.org/sites/default/files/fists.jpg

Finally, leaning forwards in your chair and leaning back again makes the avatar move forwards and stop, with the legs doing an automatic walk animation and the arms automatically swinging backwards and forwards a little to give the illusion that the avatar is actually walking. Turning the head left and right while walking allows the avatar to change direction without stopping, just like a real person.
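The lean-to-walk control can be thought of as a small state machine. The sketch below is a hypothetical design, not the project's actual script: it assumes a `lean` value measuring how far the nose point has moved forward from a calibrated rest position (units and thresholds are made up), and it uses two thresholds (hysteresis), a choice of ours, so the avatar does not flicker between walking and standing when the player hovers near a single cut-off.

```python
class LeanWalkController:
    """Hypothetical lean-to-walk state machine (not from the RealSense SDK)."""

    def __init__(self, start_lean: float = 0.08, stop_lean: float = 0.04):
        # Start walking past start_lean; stop only once back under stop_lean.
        self.start_lean = start_lean
        self.stop_lean = stop_lean
        self.walking = False

    def update(self, lean: float, head_yaw_deg: float) -> tuple[bool, float]:
        """Return (walking, turn) for one frame of tracking data."""
        if not self.walking and lean >= self.start_lean:
            self.walking = True
        elif self.walking and lean <= self.stop_lean:
            self.walking = False
        # Steer only while walking; at rest, turning the head just turns the head.
        turn = head_yaw_deg if self.walking else 0.0
        return self.walking, turn


c = LeanWalkController()
print(c.update(0.10, 0.0))   # (True, 0.0): leaned forward, starts walking
print(c.update(0.06, 15.0))  # (True, 15.0): still walking, head turn steers
print(c.update(0.02, 15.0))  # (False, 0.0): leaned back, stops
```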

Interesting piece of trivia: since my project began a year ago, my target benchmark for my avatar animation has always been the canteen song and dance scene from the movie 'My Little Pony: Equestria Girls'. I worked towards that goal constantly, improving my RealSense avatar tech bit by bit, and to date, I've achieved about 75% of the facial and upper body movement poses demonstrated in that scene. Legs will take a bit longer!

The latest news from my Unity 5 RealSense research is that my full-body avatar gained even smoother movement and more reliable joint rotation when I stopped using Start and Stop rules in the TrackingAction (e.g. 'hand detected' and 'hand opened' for Start and 'hand closed' for Stop), and instead simply used 'Always' as the Start rule.

My guess is that 'Always' is smoother because the SDK no longer has to keep stopping and restarting tracking as it continuously checks which rule should currently be active.

As a result, the avatar was even more capable than before of producing smooth, quick limb movements like those in the 'Equestria Girls' canteen song scene mentioned in the comment above this one.
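To illustrate the guess above, the sketch below counts how many times tracking would have to restart over a sequence of frames. Each frame's value stands for whether the Start rule currently passes; the flickering sequence is made-up example data, and treating every restart as a possible hitch is an assumption, not documented SDK behaviour.

```python
def count_restarts(frames: list[bool], always: bool) -> int:
    """Count inactive-to-active transitions of tracking over a frame sequence."""
    active, restarts = False, 0
    for start_ok in frames:
        now = True if always else start_ok
        if now and not active:
            restarts += 1
        active = now
    return restarts


flicker = [True, False, True, True, False, True]  # hypothetical noisy rule results
print(count_restarts(flicker, always=False))  # 3
print(count_restarts(flicker, always=True))   # 1
```

With 'Always', tracking starts once and simply keeps running, however noisy the hand-state detection is.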