I recently had the privilege of speaking on behalf of Intel at Google Cloud Next 2024 about Confidential AI, the intersection of artificial intelligence and confidential computing. In the “Confidential computing and confidential accelerators for AI workloads” session, I joined experts from Google and NVIDIA to discuss how these technologies will be supported on general-purpose C3 VMs and Confidential Space.

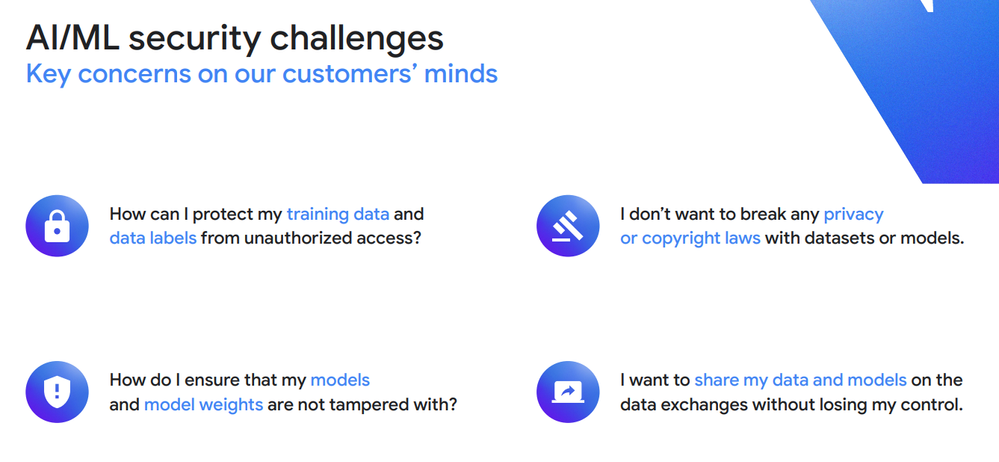

Joanna Young, Product Manager at Google Cloud, laid out four major concerns customers must consider when implementing AI/ML in the cloud. Data privacy is paramount, especially when that data is sensitive or regulated. Models and model weights also need to be protected from tampering to ensure accurate results. There are also new concerns around regulatory compliance, both for AI models and for data governance in different parts of the world.

In addition to privacy and security, customers need to control operational costs. Moving workloads to the cloud is often done to optimize OpEx, but AI models can be computationally expensive. Picking the wrong platform could expose customers to both higher instance costs and privacy issues.

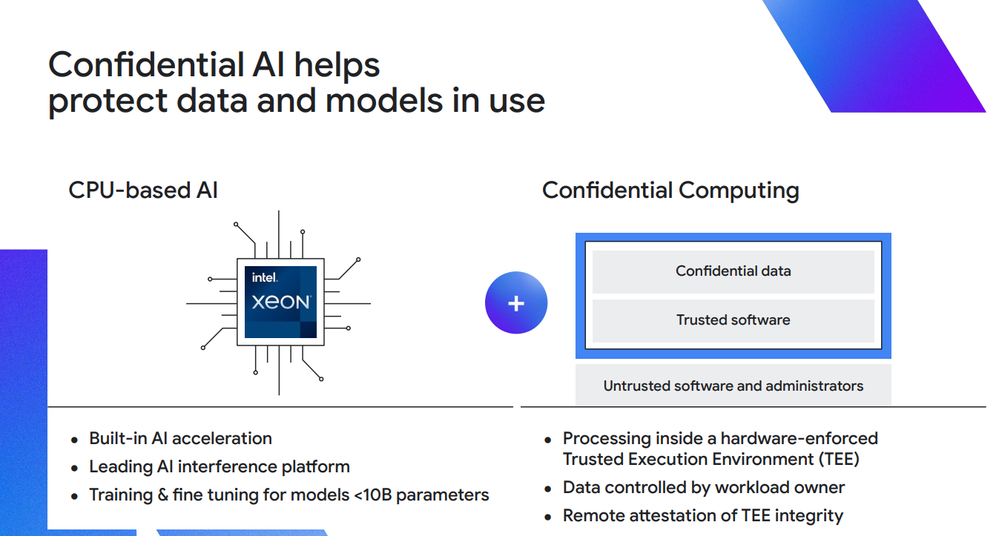

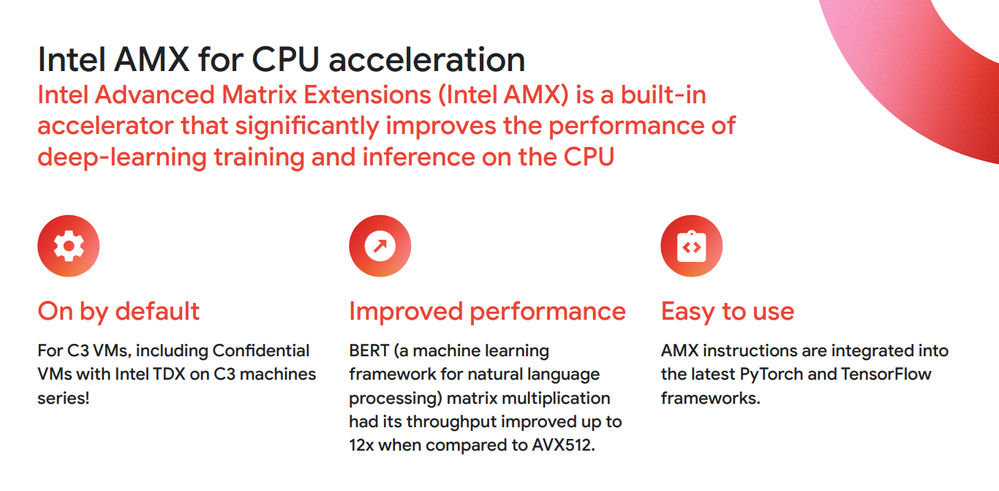

Google recently announced new Confidential VMs on the C3 machine series using 4th Gen Intel® Xeon® Scalable processors. These instances, now in public preview, enable Confidential VMs using both Intel® Trust Domain Extensions (Intel® TDX) and Intel® Advanced Matrix Extensions (Intel® AMX). Intel TDX implements confidential computing at the VM level, which makes it well suited to migrating existing workloads into a trust domain, while Intel AMX helps improve the performance of deep-learning training and inference on the CPU. This allows workloads like natural language processing, recommendation systems, and image recognition to maintain high performance while running within a smaller trust boundary.

Because Intel AMX is already integrated into common frameworks like PyTorch and TensorFlow, existing AI/ML workloads can “lift-and-shift” into a C3 Confidential VM using Intel TDX. Joanna discussed use cases like recommender systems, natural language processing (NLP), and retail e-commerce that would benefit from the combination of Intel TDX and Intel AMX on Google Cloud.
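As a rough illustration of what a pre-migration check for such a lift-and-shift might look like, the sketch below parses `/proc/cpuinfo`-style text inside a Linux guest and reports whether the Intel AMX and Intel TDX guest feature flags are present. It was not part of the session. The flag names (`amx_tile`, `amx_bf16`, `amx_int8`, `tdx_guest`) are the ones recent Linux kernels are assumed to expose, and the helper names `parse_cpu_flags` and `confidential_ai_readiness` are hypothetical.

```python
from pathlib import Path

# Assumption: flag names as reported by recent Linux kernels in /proc/cpuinfo.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}
TDX_GUEST_FLAG = "tdx_guest"


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the feature flags from the first 'flags' line of cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def confidential_ai_readiness(cpuinfo_text: str) -> dict[str, bool]:
    """Report whether all AMX flags and the TDX guest flag are present."""
    flags = parse_cpu_flags(cpuinfo_text)
    return {
        "amx": AMX_FLAGS <= flags,              # every AMX flag must be listed
        "tdx_guest": TDX_GUEST_FLAG in flags,   # set when running as a TDX guest
    }


if __name__ == "__main__":
    # Hypothetical usage: run inside the VM; falls back to empty text elsewhere.
    cpuinfo = Path("/proc/cpuinfo")
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    print(confidential_ai_readiness(text))
```

A check like this only confirms that the guest sees the hardware features. Whether a given model actually uses Intel AMX still depends on the framework build and the data types in use.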

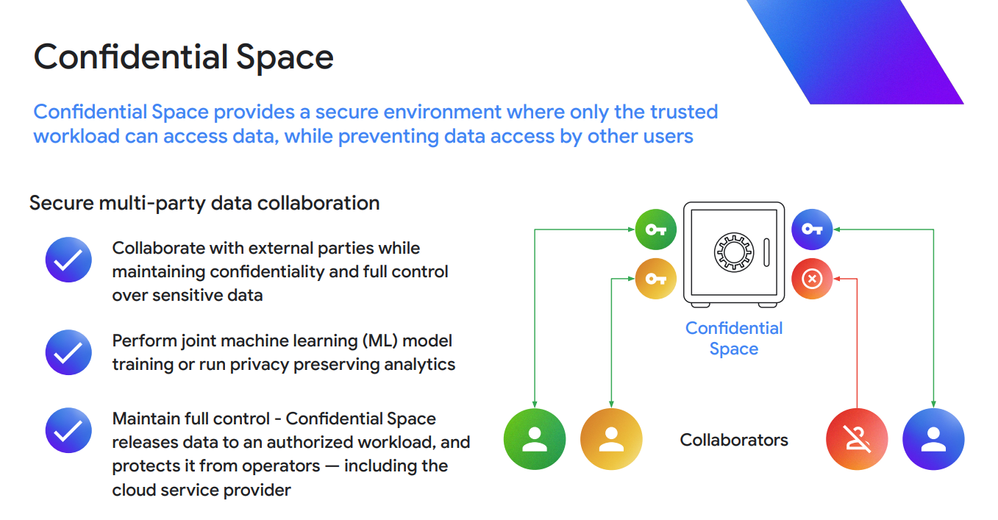

Sam Lugani, Product Lead for Confidential Computing at Google Cloud, talked about secure multi-party collaboration using Confidential Space. Sam demonstrated the interaction between a large language model (LLM) and a vector database for retrieval-augmented generation (RAG), showing how Intel TDX can help secure those interactions in Confidential Space.

I recommend you check out the full session for a look at the demo and a better understanding of the problems we’re addressing with Confidential AI. Both the session video and slides are available in the Google Cloud Next 2024 Session Library.

As the Director of Confidential Compute Software Engineering at Intel Corporation, I lead a team of engineers who develop and deliver security solutions for cloud computing, blockchain, and IoT applications. With over 12 years of experience in software engineering and product management, I have a strong track record of creating innovative and scalable products that address the needs of customers and partners in various industries and markets.

Prior to my current role, I was an Investment Director for New Business Initiatives at Intel, where I managed a portfolio of ventures in emerging domains such as blockchain, payment technology, cloud computing, and IoT. I was responsible for identifying, evaluating, and funding promising startups and technologies that aligned with Intel's strategic vision and goals. I also supported the incubation and growth of these ventures by providing mentorship, guidance, and resources. Through my leadership and collaboration, I helped launch and scale several new business ventures that generated over $100M in revenue and achieved market-segment leadership.
