Ram Krishnamurthy is a senior principal engineer at Intel Labs, where he leads high-performance and low-voltage circuits research.

Highlights:

- Intel Labs is actively pursuing multiple avenues for In-Memory Computing. Part 2 of this blog series discusses the digital approach and Intel Labs’ work in the area.

- Intel Labs details an eight-core 64-b processor that extends RISC-V to perform multiply–accumulate (MAC) within the shared last level cache (LLC).

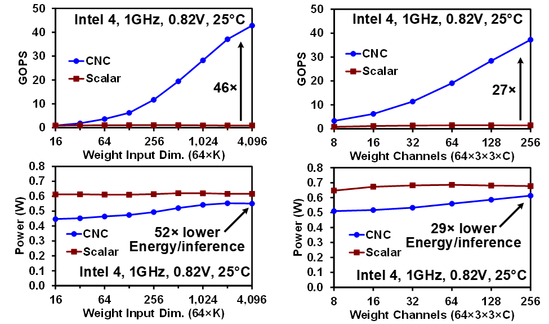

- The 1.15-GHz chip reduces energy consumption by 52× for fully connected and 29× for convolutional deep neural network (DNN) layers compared with scalar operation.

- Intel’s digital approach to In-Memory Computing performs well on two benchmarks: MLPerf Tiny Anomaly Detection v0.5 latency is reduced by 4.25 times to 40 µs compared to previous work, and inference latency on memory-augmented neural networks is improved by 4.1 times compared to scalar operation.

As discussed in part one of this series, The Analog Approach, the fundamental building block of computer memory is the memory cell, an electronic circuit that stores binary information. In the conventional approach, data must be moved out of memory to the CPU before it can be processed, which is slow and relatively energy-inefficient, so researchers began to seek an alternative. With in-memory computing, data is processed where it resides in the memory hierarchy. This architectural approach dramatically reduces latency by eliminating the time spent shuttling data through unnecessary levels of caching and buffering to the CPU.

Currently, in-memory computing is a hot topic in AI hardware implementation. By reducing the distance data must travel, in-memory computing is expected to achieve unparalleled energy efficiency. Yet realizing this goal is not an easy task. Although researchers have revisited analog methods, digital approaches have also become increasingly popular. Naturally, both digital and analog computing have benefits and drawbacks, and as such, Intel Labs is actively pursuing both avenues, with hopes of one day combining the best elements of each into a far superior solution.

Part one focused on the analog approach and detailed Intel Labs’ recent innovations in analog architectures, applications, and materials. Here in part two, we will evaluate the digital approach while discussing Intel Labs’ new eight-core 64-b processor, which extends RISC-V to perform multiply–accumulate (MAC) within the shared last level cache (LLC).

Background

In traditional multi-processors, cores and shared LLCs are physically far apart, with LLC distributed across the chip. They are connected using a network-on-chip (NoC) with limited routing resources, which creates bandwidth bottlenecks for loading and storing data in the caches. Because NoCs span the entire chip, they use long links for communication resulting in higher energy costs. Unfortunately, as chip sizes continue to grow in the pursuit of higher performance, the bottleneck issues will only worsen.

Instead of moving data from memory to the cores, compute near last level cache (CNC) augments LLC with parallel compute units. CNC accesses wide memory bandwidth by reading the LLC’s internal SRAM sub-arrays. Rather than traversing the NoC bandwidth bottlenecks, it delivers data to the co-located near-cache compute units using short local routes. Then, only the final results of these computations are delivered to the core, thus reducing global traffic.
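The contrast between moving operands to the core and reducing them next to the cache can be illustrated with a toy matrix-vector model. This is a minimal sketch under stated assumptions, not the chip's actual data placement: rows are assumed to be spread round-robin over eight LLC slices (the slice count comes from the chip described below), the input vector is assumed to be sent once to every slice, and "traffic" simply counts elements crossing the NoC.

```python
from typing import List, Tuple

N_SLICES = 8  # the LLC described in [1] is distributed over eight slices


def scalar_matvec(weights: List[List[int]], x: List[int]) -> Tuple[List[int], int]:
    """Baseline: every weight element is loaded from the LLC into the core."""
    moved = 0  # elements that cross the NoC
    y = []
    for row in weights:
        moved += len(row)  # the whole row travels to the core
        y.append(sum(w * v for w, v in zip(row, x)))
    return y, moved


def near_cache_matvec(weights: List[List[int]], x: List[int],
                      n_slices: int = N_SLICES) -> Tuple[List[int], int]:
    """CNC-style: each slice reduces the rows it stores; only results cross the NoC."""
    y = [0] * len(weights)
    moved = len(x) * n_slices  # assumption: input vector sent once to every slice
    for s in range(n_slices):
        # hypothetical round-robin placement: slice s holds rows s, s + n_slices, ...
        for i in range(s, len(weights), n_slices):
            y[i] = sum(w * v for w, v in zip(weights[i], x))  # computed next to the SRAM
            moved += 1  # one finished result is delivered to the core
    return y, moved


W = [[(r * 16 + c) % 7 - 3 for c in range(16)] for r in range(32)]
x = [c % 5 - 2 for c in range(16)]
y_ref, moved_scalar = scalar_matvec(W, x)
y_cnc, moved_cnc = near_cache_matvec(W, x)
assert y_ref == y_cnc
print(moved_scalar, moved_cnc)  # 512 160
```

Both paths produce identical results; only the amount of data that has to leave the cache differs, and the gap grows with the number of rows each slice can reduce locally.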

The CNC compute units are digital single-instruction multiple-data (SIMD) MAC units. MAC operations make up a large portion of deep learning workloads, so supporting this one operation covers much of the computation while keeping the added area small. The digital MAC is implemented as a circuit added to the LLC. This speeds up design, since the circuit is synthesizable and the memory arrays remain unmodified. Compared to analog computation, this digital technique enables bit-accurate computation for higher DNN accuracy and easier verification.
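The bit-accuracy argument can be made concrete with a small model of a digital SIMD MAC step. This is an illustrative sketch, not the chip's circuit: the lane count of eight is a hypothetical choice, and Python integers are unbounded, whereas real hardware accumulators have a fixed width that is not modeled here.

```python
from typing import List

LANES = 8  # hypothetical SIMD width for this sketch


def check_int8(v: int) -> int:
    """Reject values that do not fit in a signed 8-bit lane."""
    if not -128 <= v <= 127:
        raise ValueError(f"{v} does not fit in int8")
    return v


def simd_mac(acc: List[int], a: List[int], b: List[int]) -> List[int]:
    """One SIMD MAC step: acc[i] + a[i] * b[i] in every lane.

    Integer arithmetic is exact, so the same inputs always give the same bits.
    """
    if not len(acc) == len(a) == len(b) == LANES:
        raise ValueError("all operands must have LANES elements")
    return [s + check_int8(p) * check_int8(q) for s, p, q in zip(acc, a, b)]


a_rows = [[(i * j) % 255 - 127 for j in range(LANES)] for i in range(4)]
b_rows = [[(i + j) % 256 - 128 for j in range(LANES)] for i in range(4)]

acc = [0] * LANES
for a, b in zip(a_rows, b_rows):
    acc = simd_mac(acc, a, b)

# Bit-exact agreement with a plain reference dot product, lane by lane.
reference = [sum(a_rows[k][i] * b_rows[k][i] for k in range(4)) for i in range(LANES)]
assert acc == reference
```

Because every step is ordinary integer arithmetic, the result can be checked exactly against a software reference, which is the verification advantage the digital approach offers over analog computation.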

The near-memory in this work is the shared LLC of an eight-core RISC-V processor. LLC has the highest capacity of any on-chip memory, enabling near-memory computation on a larger portion of an application's working set. Performing near-memory computation directly on the LLC obviates scratchpad memory, reducing area overhead and maximizing unified cache capacity. Moreover, tight integration allows fine-grained interspersing of SIMD near-memory CNC with standard RISC-V instructions for general-purpose scalar computation.

While CNC can significantly reduce data movement on the NoC between the LLC and cores, some data movement is still required. For a single MAC CNC instruction, the core sends one 64-b address and one 64-b vector to the LLC, 128 b in total. The equivalent scalar operation would load the 512 b of matrix data into the core. Because the L1 data cache line size is 128 b, this requires sending four 64-b addresses (256 b) to the LLC and receiving 512 b of data back, 768 b in total. Thus, CNC reduces NoC traffic by six times. However, this improvement is contingent upon the data for scalar operation not being privately cached initially.
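The six-times figure follows from counting payload bits in each direction. The sketch below only restates the numbers given in the paragraph above; it assumes packet headers and other protocol overhead are ignored and that, for the scalar case, the data is not already in the core's private L1.

```python
ADDR_BITS = 64       # one virtual address
VEC_BITS = 64        # vector operand sent by the core with each CNC instruction
LINE_BITS = 512      # matrix data touched by one CNC MAC instruction
L1_LINE_BITS = 128   # L1 data cache line size


def cnc_bits() -> int:
    # core -> LLC: one address plus one 64-b vector; MAC results stay in the LLC
    return ADDR_BITS + VEC_BITS


def scalar_bits() -> int:
    n_requests = LINE_BITS // L1_LINE_BITS   # four L1 line fills
    request_bits = n_requests * ADDR_BITS    # core -> LLC: four addresses
    return request_bits + LINE_BITS          # plus 512 b of data LLC -> core


print(scalar_bits(), cnc_bits(), scalar_bits() / cnc_bits())  # 768 128 6.0
```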

Intel Labs’ Digital Solution

Overview

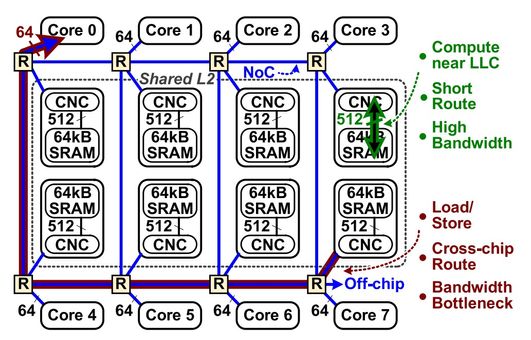

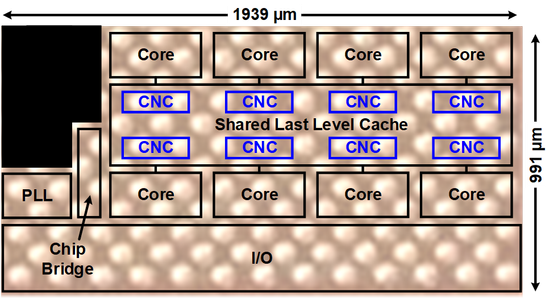

We present an eight-core 64-b RISC-V processor with CNC, as depicted in Figure 1. The chip is fabricated in Intel 4 CMOS with an area of 1.92 mm² [1]. CNC is an extension of the RV64GC instruction set architecture (ISA). The RISC-V cores execute instructions out of order, enforce memory consistency in the load-store queue, and perform virtual address translation.

Figure 1. Diagram of eight-core RISC-V processor with CNC.

CNC is executed with 128 8-b MACs in the 512-kB shared, distributed LLC. The principles of near-memory computation apply on this chip: in traditional processing, data loaded from the LLC traverses bandwidth bottlenecks, passing over the 64-b NoC and through the 8-kB L1 data cache. In addition, this may be a cross-chip route, since LLC data are distributed over eight slices for more uniform access patterns. In contrast, each CNC instruction accesses a full 512-b cache line, giving higher bandwidth than scalar operation. These data are sent on short, local routes to the co-located MAC circuits, reducing global traffic (Figure 2).

Figure 2. Chip micrograph of eight-core RISC-V processor with CNC.
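To picture what one CNC instruction works on, the following functional model combines one 512-b cache line with one 64-b operand from the core. The data layout is a hypothetical assumption made only for illustration: the line is treated as an 8×8 block of signed 8-b weights and the operand as eight signed 8-b activations, giving 64 MACs per call. The actual instruction semantics and accumulator widths are defined in [1], and this sketch does not model how the chip's 128 MAC units are distributed across slices.

```python
import struct
from typing import List

LINE_BYTES = 64  # one 512-b LLC cache line
VEC_BYTES = 8    # one 64-b operand sent by the core


def unpack_int8(buf: bytes) -> List[int]:
    """Interpret raw bytes as signed 8-bit integers."""
    return list(struct.unpack(f"{len(buf)}b", buf))


def cnc_mac_line(line: bytes, vec: bytes) -> List[int]:
    """Hypothetical semantics: treat the line as an 8x8 int8 block (row-major)
    and return its product with the 8-element int8 vector."""
    if len(line) != LINE_BYTES or len(vec) != VEC_BYTES:
        raise ValueError("expected a 64-byte line and an 8-byte vector")
    w = unpack_int8(line)
    x = unpack_int8(vec)
    return [sum(w[r * 8 + c] * x[c] for c in range(8)) for r in range(8)]


line = bytes((i * 3) % 256 for i in range(LINE_BYTES))
vec = bytes([1, 0, 0, 0, 0, 0, 0, 0])  # selects column 0 of each row
print(cnc_mac_line(line, vec))  # [0, 24, 48, 72, 96, 120, -112, -88]
```

Only the eight results, not the 64 weights, would need to leave the slice in this model, which is the data-movement saving described above.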

The chip supports CNC operation under the Linux operating system. CNC is implemented with virtual memory, so programmers need not manage the physical memory hierarchy and code can be ported more easily across hardware platforms. CNC cache accesses obey cache coherence and memory consistency rules, allowing predictable data modification and sharing among cores. The design supports error correction for reliable SRAM operation in the presence of process variation and particle strikes. With these features, the processor runs C++ programs containing inline CNC assembly.

Performance

The RV64GC CNC instruction set architecture (ISA) extension performs digital MAC near unmodified SRAM arrays and has a low area overhead of 1.4%. CNC increases memory access width to 512 b per instruction by avoiding bottlenecks in the on-chip networks. It also reduces data movement by keeping MAC results and most input operands local to the LLC slices. CNC supports computation on cached data from main memory, coherent data sharing between cores, and virtual addressing. C++ programs containing CNC instructions run either under Linux or on bare metal.

The 1.15-GHz chip reduces energy consumption by 52× for fully connected and 29× for convolutional deep neural network (DNN) layers compared with scalar operation, as shown in Figure 3. Our approach performs well on two benchmarks: MLPerf Tiny Anomaly Detection v0.5 latency is reduced by 4.25× to 40 µs versus previous work, and inference latency on memory-augmented neural networks is improved by 4.1× versus scalar operation.

Figure 3. Fully-connected and convolutional layers chip measurements.
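The headline ratios can be restated as simple arithmetic. This worked example uses only the figures quoted above; the previous-work latency is derived from them rather than separately reported here:

$$
t_{\text{prev}} = 4.25 \times t_{\text{CNC}} = 4.25 \times 40\ \mu\text{s} = 170\ \mu\text{s}
$$

$$
\frac{E_{\text{CNC}}}{E_{\text{scalar}}} = \frac{1}{52} \approx 1.9\%\ \text{(fully connected)}, \qquad \frac{E_{\text{CNC}}}{E_{\text{scalar}}} = \frac{1}{29} \approx 3.4\%\ \text{(convolutional)}
$$

In other words, a fully connected layer run with CNC uses roughly 2% of the energy of the same layer computed with scalar instructions.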

Conclusion

In this work, we aimed to achieve both the performance gains of near-memory computation and the programmability of a general-purpose microprocessor. CNC accesses the high memory bandwidth that exists in LLC SRAM arrays and bypasses bandwidth bottlenecks on the NoC. It also processes data where it is stored, mitigating the high costs of data movement. CNC frees the programmer from some of the burdens of explicit data movement by allowing operation on data in main memory, enabling coherent data sharing between threads, and supporting virtual addressing. We believe that the continuing proliferation of data-intensive applications and a never-ending desire for higher performance make this the right time for near-memory solutions. Yet overcoming the near-memory challenges, which have persisted for decades, will require cross-disciplinary innovations in devices, circuits, architecture, and software.

Looking forward, we recognize that a digital approach guarantees accuracy through deterministic computation and offers much better flexibility, but cannot achieve the same computational density and efficiency as analog. Therefore, we believe that a combined analog-digital system can potentially benefit from both sides, achieve higher efficiency without sacrificing accuracy, and cover more diverse applications.

Project Contributors:

Ram Krishnamurthy, Gregory Chen, Phil Knag, Carlos Tokunaga

References:

[1] “An Eight-Core RISC-V Processor With Compute Near Last Level Cache in Intel 4 CMOS,” IEEE Journal of Solid-State Circuits, April 2023. https://ieeexplore.ieee.org/document/9994784

Ram Krishnamurthy is a senior principal engineer at Intel Labs, where he leads high-performance and low-voltage circuits research. He received a Ph.D. degree in electrical and computer engineering from Carnegie Mellon University in 1997. He has been at Intel Labs since 1997. His current research interests include high-performance, energy-efficient and ultra-low-voltage circuits, AI accelerators, and in-memory/near-memory computing. He holds 215 issued patents, has published over 200 papers, and is an IEEE Fellow.