Sasikanth Avancha is a Principal Engineer (AI Research & Dev) in Intel Labs leading research efforts on GNN training & inference performance optimizations.

Highlights:

- The 4th gen Intel® Xeon® Scalable Processor, formerly codenamed Sapphire Rapids is a balanced platform for Graph Neural Networks (GNN) training, accelerating both sparse and dense compute. In this article, Intel CPU refers to 4th gen Intel® Xeon® Scalable Processor with 56 cores per socket.

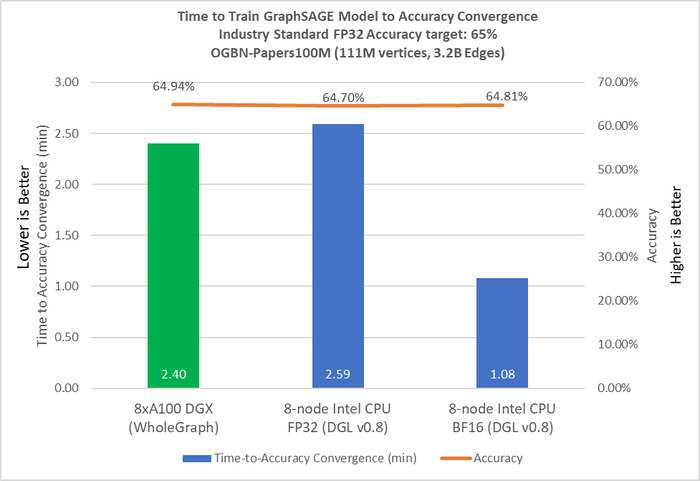

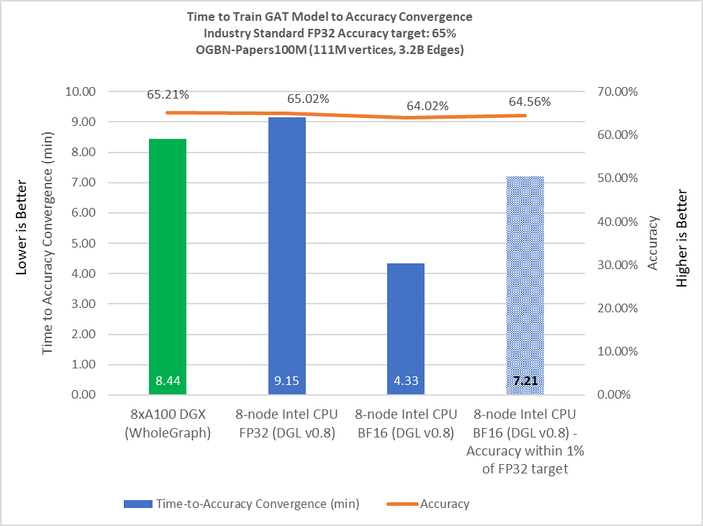

- Using half-precision (BF16) datatype, Intel CPUs outperform current industry best published A100 GPU results [1, 2] on training GraphSAGE and Graph Attention Network (GAT) benchmarks to the target accuracy, as detailed below.

- Intel’s version of the above will be made available through DGL for the community to reproduce these results.

As discussed in our previous blog, Optimizing Graph Neural Network Training Performance on Intel® Xeon®, graphs are an example of a data structure that captures relationships between data elements in non-Euclidean domains. Well-known examples of graphs in the real world are the Internet, social networks, web-scale networks, biomedical networks, and economic networks. Therefore, they do not fit into well-known deep learning 2-D or 3-D structures.

Thus, as discussed in our previous blog, traditional approaches to discovering the structure of data in such networks involve algorithms such as bread-first search (BFS), depth-first search (DFS), triangle counting, connected components, etc., which in combination refer to the computer science domain of graph analytics. Graph Neural Networks (GNN) is a modern, data-driven approach to analyzing large graphs and discovering their structure. A GNN consists of three key components: (i) information associated with each node (and optionally each edge) in the graph, represented as a single-dimensional vector known as an embedding, (ii) a recursive message-passing aggregation algorithm that aggregates vertex/edge embeddings in novel ways and (iii) a neural network model (typically an MLP), that learns the graph structure iteratively via standard gradient-descent training algorithms used in DL. For more background in “GNN Training: Flavors, Primitives and Process” and performance of full batch training, please refer to the previous blog.

In this article, we focus on distributed minibatch sampling and other techniques to train GraphSAGE and GAT to the target accuracy for node classification on Intel CPUs.

GNN Distributed Mini-batch Training: Optimizations for Intel Xeon

The well-known Deep Graph Library (DGL) implements graph data-structures (e.g., Adjacency Matrix) in standard Coordinate list (COO), Compressed Sparse Row (CSR), and Compressed Sparse Column (CSC) formats and provides a rich set of abstractions along with built-in functions to implement variants of the Aggregation (AGG) primitive. It supports PyTorch among other neural network frameworks and uses the autograd functionality to implement automatic differentiation. It implements the core graph manipulation and Aggregation primitive functionality in C++. DGL represents initial vertex features as a PyTorch Tensor object, enabling both AGG operations and various neural network operations on them. The above discussion implies that representing the graph as an adjacency matrix and vertex features as a dense PyTorch Tensor object will result in the following sequence of operations for the AGG primitive:

- Irregular memory accesses to read or gather feature-vectors of each vertex and its neighbors from memory as indicated by source vertex indices.

- Apply

on each

,

, or

tuple to perform unary or binary operations, respectively and

- Apply

to reduce each source vertex or edge feature-vector, and

- Irregular memory accesses to write or scatter the resulting feature-vector in memory as indicated by destination vertex indices in the adjacency matrix.

Operations 2 and 3 above are compute-bound and can be accelerated using multi-core support and SIMD units per core in Intel CPUs; we employ dynamic thread-scheduling using the OpenMP library and Tensor Processing Primitives (TPP) [3] in the LIBXSMM library [4] to generate optimal SIMD code for both types of operations. However, operations 1 and 4 are memory-bound, therefore, their performance may be limited by both memory latency and the achievable memory bandwidth utilization to access vertex or edge feature vectors. To reduce memory latency and improve memory bandwidth utilization, we apply cache blocking on the source vertex feature-vector tensor, potentially accessing either the complete source or destination vertex feature-vector tensor repeatedly. Details of this technique and associated algorithm are described in [8].

Optimizing the MLP primitive consists of blocking and transforming the layout of the model parameters to take advantage of caches and register files to maximally reuse weights, and fusing element-wise operators such as ReLU and Dropout along with the matrix-matrix-multiply operation of the Linear layer. Again, we use LIBXSMM TPP-based JITed code for all these operators. Further, we use OpenMP to take advantage of multiple cores in each Intel Xeon socket to parallelize these MLP computations across the minibatch dimension.

Next, we discuss our optimizations and results of GNN minibatch training on a cluster of Intel CPU nodes. (We use “machines” and “Intel CPU nodes” interchangeably in this article.)

Given a graph dataset and a set of training vertices, distributed GNN training using minibatch sampling for the purposes of node classification requires the following steps:

- Partition the graph dataset and training vertices across machines. Partitioning training vertices in a balanced manner is critical to minimize load-imbalance during training. Partitioning is typically an offline task, employing well-known techniques such as hash-based partitioning, random partitioning, minimum-edge-cut algorithms (Metis [5]) or minimum-vertex-cut algorithms (Libra [6]).

- On each machine, create a minibatch of training vertices and recursively sample a specified number of neighbors (i.e., fan-out) to a specified number of hops (i.e., number of layers), both of which are hyperparameters.

- Gather vertex features (

) and/or edge-features (

) using sampled vertex and/or edge IDs as indices and execute either AGG or Update primitive per model definition. Repeat this forward pass to specified hop-count.

- Compute predictions and backpropagate error, computing gradients along the way; update model parameters.

Steps (a) – (d) constitute one minibatch iteration on each machine. An epoch completes when all machines go through all their training vertices once. Training completes either at the end of a specific number of epochs or when the desired convergence target is achieved, whichever is earlier.

In our work, we use Metis to partition the citation graph (OGBN-Papers100M, with 111M vertices and 3.2B edges, 128-wide features and 172 vertex classes) [7] and training vertices as part of step (a).

Unlike single Intel CPU node minibatch training, steps (b)-(c) in distributed minibatch training involve feature communication; this is because neighbors in the un-partitioned graph involved in AGG computation could be on different machines after graph partitioning. This results in two types of AGG operations – local and remote AGG. One approach to perform remote AGG is for machines to exchange “missing” neighbor features in the minibatch using all-to-all communication and then proceed to perform only compute operations in the rest of the iteration.

Another approach, which we employ, is for machines to communicate “missing” neighbor features during AGG primitive execution. This approach affords the opportunity to overlap compute and communication tasks, resulting in better training performance. However, its disadvantage is that “missing” neighbor features may not be available for remote AGG computation in the current iteration, potentially resulting in lower accuracy due to incomplete AGG. To solve this problem, machines use historical embeddings, i.e., features they would have received in previous iterations, to complete the AGG operation in the current iteration. This method results in acceptable performance-accuracy trade-off. To effectively use historical embeddings, each machine maintains a Historical Embedding Cache (HEC) – a data structure implemented in software to cache embeddings received in previous iterations. Further, with knowledge of connectivity in the current minibatch, each machine eagerly communicates neighbor features to other machines to ensure remote HECs contain fresh embeddings. We describe this approach in detail in [9].

Step (d) involves communicating model parameter gradients using all-reduce algorithm. We set aside 1 thread per Intel CPU for communication in steps (c) and (d).

Using these techniques on a cluster of 8 Intel CPU nodes with 2 sockets per node and BF16 as datatype, we train GraphSAGE and GAT models to the target accuracy on the OGBN-Papers100M dataset as shown in Figure 1 and Figure 2.

Figure 1. Time to train GraphSAGE model to convergence. [1]

Figure 2. Time-to-train GAT to convergence. [2]

Conclusions and Ongoing Work

Graph Neural Networks are an important new class of AI workloads. This article demonstrates the ability of modern Intel Xeon processors to deliver impressive performance for this class of workloads when the underlying software primitives are well-optimized to take advantage of available architectural features. We continue to focus on this important workload – our papers on distributed GNN training [8] and [9] described approaches to scale GNN performance from a single node to multiple nodes on the 3rd gen Intel Xeon processors. This article discusses techniques and optimizations to train industry standard GNN benchmarks to within 1% of target accuracy on a cluster of 4th gen Intel Xeon Processors using distributed minibatch sampling combined with historical embeddings. We will continue to push the boundaries of scaling this workload to a larger number of CPU nodes.

References

- WholeGraph: A Fast Graph Neural Network Training Framework with Multi-GPU Distributed Shared Memory Architecture, D. Yang et al., Supercomputing 2022.

- Wholegraph: https://github.com/rapidsai/wholegraph/tree/branch-23.08

- Tensor processing primitives: a programming abstraction for efficiency and portability in deep learning workloads, E. Georganas et al., In Proc. Supercomputing 2021 (SC’21)

- https://github.com/libxsmm/libxsmm

- METIS: https://github.com/KarypisLab/METIS/tree/master

- A Vertex Cut based Framework for Load Balancing and Parallelism Optimization in Multi-core Systems, G. Ma et al., https://arxiv.org/pdf/2010.04414.pdf

- OGBN-Papers: https://ogb.stanford.edu/docs/nodeprop/#ogbn-papers100

- DistGNN: scalable distributed training for large-scale graph neural networks, V. Md et al., In Proc. Supercomputing 2021 (SC ’21)

- DistGNN-MB: Distributed Large-Scale Graph Neural Network Training on x86 via Minibatch Sampling (https://arxiv.org/abs/2211.06385)

GraphSAGE: Test by Intel as of 07/11/23. 8 nodes, 2x Intel® Xeon® 8480+ per node, 56 cores, HT On, Turbo On, Total Memory 1024 GB (16 slots/ 64 GB/ 4800 MHz [run @ 4800 MHz]), SE5C741.86B.01.01.0003.2302100643, 0x2b000190, Red Hat Enterprise Linux 8.7 (Ootpa), 4.18.0-425.3.1.el8.x86_64, gcc (GCC) 8.5.0 20210514 (Red Hat 8.5.0-15), DGL 0.8.x, PyTorch 1.13

GAT: Test by Intel as of 07/11/23. 8 nodes, 2x Intel® Xeon® 8480+ per node, 56 cores, HT On, Turbo On, Total Memory 1024 GB (16 slots/ 64 GB/ 4800 MHz [run @ 4800 MHz]), SE5C741.86B.01.01.0003.2302100643, 0x2b000190, Red Hat Enterprise Linux 8.7 (Ootpa), 4.18.0-425.3.1.el8.x86_64, gcc (GCC) 8.5.0 20210514 (Red Hat 8.5.0-15), DGL 0.8.x, PyTorch 1.13

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more on the Performance Index site.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel does not control or audit third party data. You should consult other sources to evaluate accuracy.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

[1] Measured on 7/11/2023. Results may vary.

[2] Measured on 7/11/2023. Results may vary.

Sasikanth Avancha received a B.E. degree in computer science and engineering from the University Visvesvaraya College of Engineering (UVCE), Bengaluru, India, in 1994, and an M.S. and Ph.D. degrees in computer science from the University of Maryland at Baltimore County (UMBC), Baltimore, MD, USA, in 2002 and 2005, respectively. He is currently a Senior Research Scientist with the Parallel Computing Lab, Intel Labs, Intel India, Bengaluru. He has over 20 years of industry and research experience. He has three patents and over 25 articles spanning security, wireless networks, systems software, and computer architecture. His current research focuses on high-performance algorithm development and analysis for large-scale, distributed deep learning and machine learning training, and inference on different data-parallel architectures, including x86 and other accelerators, across application domains.

Sasikanth Avancha received a B.E. degree in computer science and engineering from the University Visvesvaraya College of Engineering (UVCE), Bengaluru, India, in 1994, and an M.S. and Ph.D. degrees in computer science from the University of Maryland at Baltimore County (UMBC), Baltimore, MD, USA, in 2002 and 2005, respectively. He is currently a Senior Research Scientist with the Parallel Computing Lab, Intel Labs, Intel India, Bengaluru. He has over 20 years of industry and research experience. He has three patents and over 25 articles spanning security, wireless networks, systems software, and computer architecture. His current research focuses on high-performance algorithm development and analysis for large-scale, distributed deep learning and machine learning training, and inference on different data-parallel architectures, including x86 and other accelerators, across application domains.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.