A Fast On-Ramp to Chatbots with No Coding Required

This article was originally published on medium.com.

Posted on behalf of Bob Chesebrough, Solutions Architect, Intel Corporation

I aim to demonstrate a fast on-ramp to playing with a chatbot based on the 7-billion-parameter version of the Mistral foundation model. This model, neural-chat-7b, was fine-tuned on Intel® Gaudi® 2 AI accelerators using several Intel AI software libraries. I am going to show you how to play with it using prebaked code on the Intel® Developer Cloud.

If you follow these instructions, you can register for a standard free account on Intel Developer Cloud and start playing with a Jupyter Notebook preconfigured for various large language models (LLMs) such as:

- Writer/camel-5b-hf

- openlm-research/open_llama_3b_v2

- Intel/neural-chat-7b-v3-1

- HuggingFaceH4/zephyr-7b-beta

- tiiuae/falcon-7b

You can do all this without writing a single line of code. Simply type out a prompt and start playing. Powered by Intel® Data Center GPU Max 1100s, this notebook provides a hands-on experience that doesn’t require deep technical knowledge.

Whether you’re a student, writer, educator, or simply curious about AI, this guide is designed for you. LLMs have a wide range of applications, but experimenting with them can also be fun. Here, we’ll use some simple pre-trained models to explore text generation interactively. Ready to try it out? Let’s set up our environment and start exploring the world of text generation with LLMs!

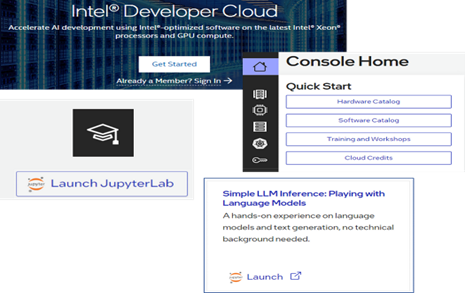

The on-ramp steps are summarized below and illustrated in the screenshots:

- Go to the Intel Developer Cloud.

- Click the “Get Started” button.

- Subscribe to the “Standard — Free” service tier and complete your cloud registration.

- To start up a free Jupyter Notebook session that uses the latest Intel CPUs and GPUs, click the “Training and Workshops” icon.

- Click “Simple LLM Inference: Playing with Language Models.” This will launch a prepopulated Jupyter Notebook (simple_llm_inference.ipynb).

- Run all cells.

- Select the model and options to try.

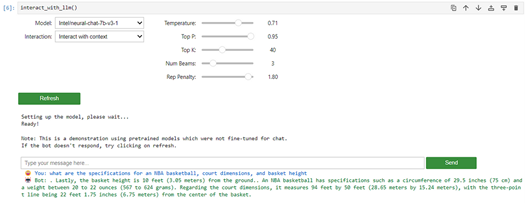

- Select the options and text prompt you want to try out. For options, I suggest choosing the Intel model (neural-chat) with the “with_context” option and a prompt similar to “What are the specifications for an NBA basketball, court dimensions, and basket height.”
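The article does not describe what the “with_context” option does inside the notebook. A common pattern for such a toggle is to prepend a passage of reference text to the question before it is sent to the model. The sketch below is purely hypothetical: `build_prompt`, the prompt wording, and the placeholder context are illustrative assumptions, not the notebook's actual code.

```python
def build_prompt(question: str, with_context: bool, context: str = "") -> str:
    """Hypothetical helper: optionally prepend reference text to the user's question."""
    if with_context and context:
        # Supplying reference text can give the model facts to draw on,
        # rather than relying only on what it learned during training.
        return (
            "Use the context below to answer the question.\n\n"
            f"Context: {context}\n\n"
            f"Question: {question}\nAnswer:"
        )
    # Without context (or with an empty context), the model sees only the bare question.
    return f"Question: {question}\nAnswer:"


question = "What are the specifications for an NBA basketball, court dimensions, and basket height."
# Placeholder: the notebook's real context text is not reproduced in this article.
context = "<reference text about official NBA equipment and court rules>"

print(build_prompt(question, with_context=False))
print("---")
print(build_prompt(question, with_context=True, context=context))
```

In this sketch, the model and generation settings would stay the same. Only the text the model is conditioned on changes between the two options.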

Interactive Prompt

The interactive nature of the notebook makes it easy to enter a prompt and generate a response.

Generative AI Accuracy Reminder

Chatbots such as these are known to produce erroneous results at times and should not be relied upon for accurate or trustworthy answers. For example, when I ran the above notebook with the “Without Context” option and the prompt “What are the specifications for an NBA basketball, court dimensions, and basket height,” the models returned nonsensical answers, such as this one:

“Bot: An NBA basketball has a diameter of 12 feet (3.7 meters), a height of 3 feet (0.91 meters) above the ground, and a width of 9 feet (2.7 meters).”

The bot generated the following response when I used the “With Context” option:

“Bot: . The specifications for an official NBA basketball include a 29.5 inches (75 cm) circumference and a weight ranging from 20 to 22 ounces (567 to 624 grams). The basketball hoop’s rim height is 10 feet (3.05 meters) from the ground. Regarding the court dimensions, it measures 94 feet (28.65 meters) in length and 50 feet (15.24 meters) in width.”

The second response is not absurdly wrong, but to me, a layman, it should still be double-checked against the official NBA specifications to ensure accuracy!
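One quick check you can do yourself is to confirm that each metric figure in an answer matches its imperial figure. The sketch below uses the exact conversion factors (1 in = 2.54 cm, 1 ft = 0.3048 m, 1 oz = 28.349523125 g). It treats a quoted metric value as consistent if the converted value rounds to it at the same number of decimal places. `conversion_consistent` is a hypothetical helper written for this example.

```python
import math

# Exact conversion factors (international inch/foot and avoirdupois ounce definitions).
FACTORS = {
    ("in", "cm"): 2.54,
    ("ft", "m"): 0.3048,
    ("oz", "g"): 28.349523125,
}


def conversion_consistent(value: float, unit: str, claimed: str, claimed_unit: str) -> bool:
    """True if `claimed` equals `value` converted and rounded to the claim's own precision."""
    converted = value * FACTORS[(unit, claimed_unit)]
    # Number of decimal places the chatbot used when quoting the metric value.
    places = len(claimed.split(".")[1]) if "." in claimed else 0
    return math.isclose(round(converted, places), float(claimed), abs_tol=1e-9)


# (imperial value, unit, metric value as quoted, metric unit) taken from the two answers above.
WITH_CONTEXT = [
    (29.5, "in", "75", "cm"),
    (20, "oz", "567", "g"),
    (22, "oz", "624", "g"),
    (10, "ft", "3.05", "m"),
    (94, "ft", "28.65", "m"),
    (50, "ft", "15.24", "m"),
]
WITHOUT_CONTEXT = [
    (12, "ft", "3.7", "m"),
    (3, "ft", "0.91", "m"),
    (9, "ft", "2.7", "m"),
]

for label, claims in (("With Context", WITH_CONTEXT), ("Without Context", WITHOUT_CONTEXT)):
    ok = all(conversion_consistent(*c) for c in claims)
    print(f"{label}: metric conversions consistent = {ok}")
```

Both answers pass, including the 12-foot basketball. A check like this only catches conversion slips. It cannot tell whether the underlying values are right, so the official NBA specifications remain the reference.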

Call to Action

Try out this no-code approach to experimenting with chatbot models using the standard free Intel Developer Cloud account and the ready-made Jupyter Notebook. Try your hand with different 7B-parameter models and prompts of your own.

We also encourage you to check out Intel’s other AI/ML framework optimizations and end-to-end portfolio of tools and incorporate them into your AI workflow. You can also learn about the unified, open, standards-based oneAPI programming model that forms the foundation of Intel’s AI Software Portfolio and helps you prepare, build, deploy, and scale your AI solutions.

Product Marketing Engineer bringing cutting-edge AI/ML solutions and tools from Intel to developers.

